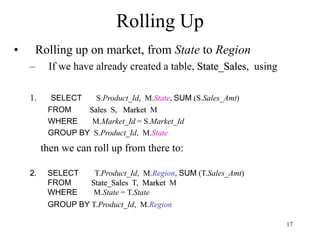

This document discusses OLAP (Online Analytical Processing) and data mining. It begins by comparing OLTP (Online Transaction Processing) with OLAP: OLTP maintains operational databases, while OLAP uses stored data to guide strategic decisions. It then introduces common OLAP techniques such as slicing, dicing, drilling down, and rolling up. Data mining aims to extract knowledge from databases by finding patterns and associations. The document also outlines common data mining algorithms and discusses data warehouses, which hold the data that OLAP and data mining work on.
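
The four OLAP operations named above can be illustrated with a minimal sketch in plain Python. Everything here is an assumption made for illustration: the tiny `FACTS` table, its dimension names, and the helper functions `aggregate`, `slice_`, and `dice` are hypothetical and do not come from the document or from any OLAP product. The sketch treats a roll-up as aggregating to a coarser level (year), a drill-down as moving to a finer level (year and quarter), a slice as fixing one dimension to a single value, and a dice as restricting several dimensions at once.

```python
from collections import defaultdict

# Hypothetical fact table: (year, quarter, region, product, sales).
FACTS = [
    (2023, "Q1", "North", "Laptop", 100),
    (2023, "Q1", "South", "Laptop", 80),
    (2023, "Q2", "North", "Phone", 50),
    (2023, "Q2", "South", "Phone", 70),
    (2024, "Q1", "North", "Laptop", 120),
    (2024, "Q1", "South", "Phone", 60),
]
# Dimension names, in the same order as the columns above (sales is the measure).
DIMS = ("year", "quarter", "region", "product")


def aggregate(facts, by):
    """Sum the sales measure, grouped by the named dimensions."""
    idx = [DIMS.index(d) for d in by]
    totals = defaultdict(int)
    for row in facts:
        key = tuple(row[i] for i in idx)
        totals[key] += row[-1]
    return dict(totals)


def slice_(facts, dim, value):
    """Slice: keep only the rows where one dimension equals a single value."""
    i = DIMS.index(dim)
    return [row for row in facts if row[i] == value]


def dice(facts, **allowed):
    """Dice: keep rows whose values fall in the allowed set for each named dimension."""
    return [
        row for row in facts
        if all(row[DIMS.index(d)] in values for d, values in allowed.items())
    ]


# Roll up: aggregate to the coarser "year" level.
rolled_up = aggregate(FACTS, by=("year",))
# Drill down: break the same totals out by the finer "quarter" level.
drilled_down = aggregate(FACTS, by=("year", "quarter"))
# Slice: fix region to "North".
north_only = slice_(FACTS, "region", "North")
# Dice: restrict year and region together to get a smaller sub-cube.
sub_cube = dice(FACTS, year={2023}, region={"South"})

print(rolled_up)
print(drilled_down)
print(len(north_only), len(sub_cube))
```

A real OLAP system would run these operations over a data warehouse rather than an in-memory list; the sketch is only meant to show how the operations differ from one another.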