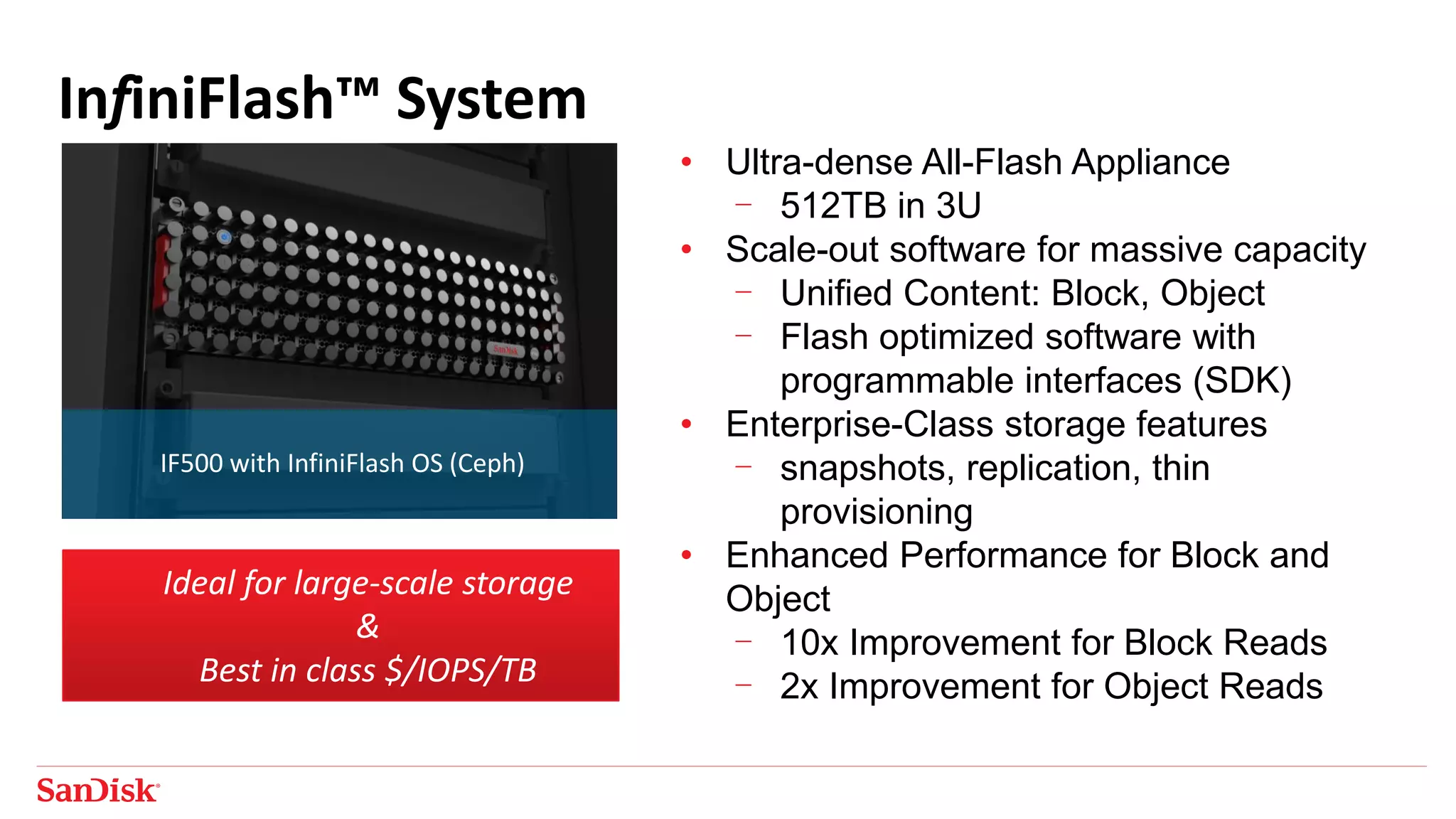

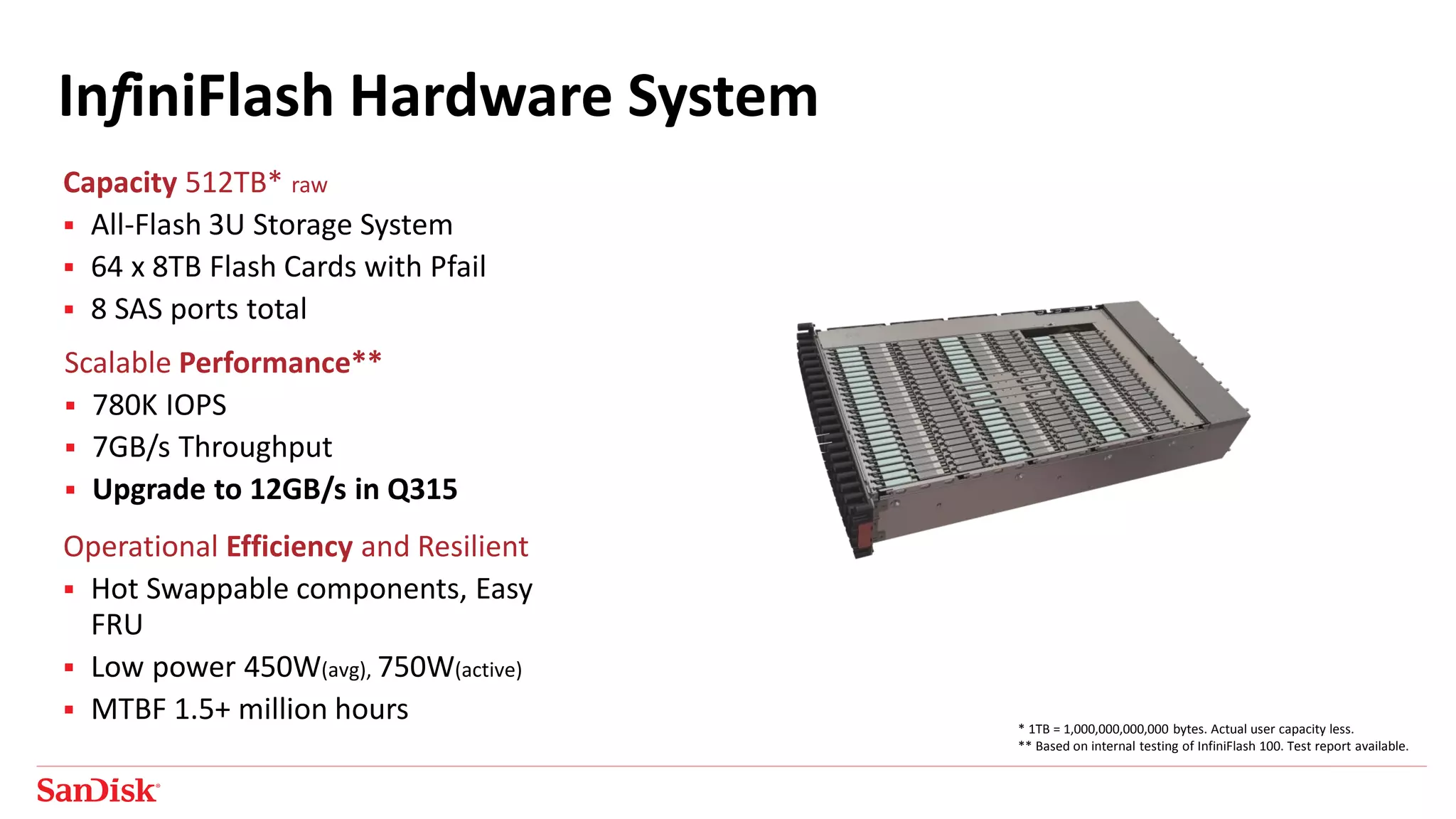

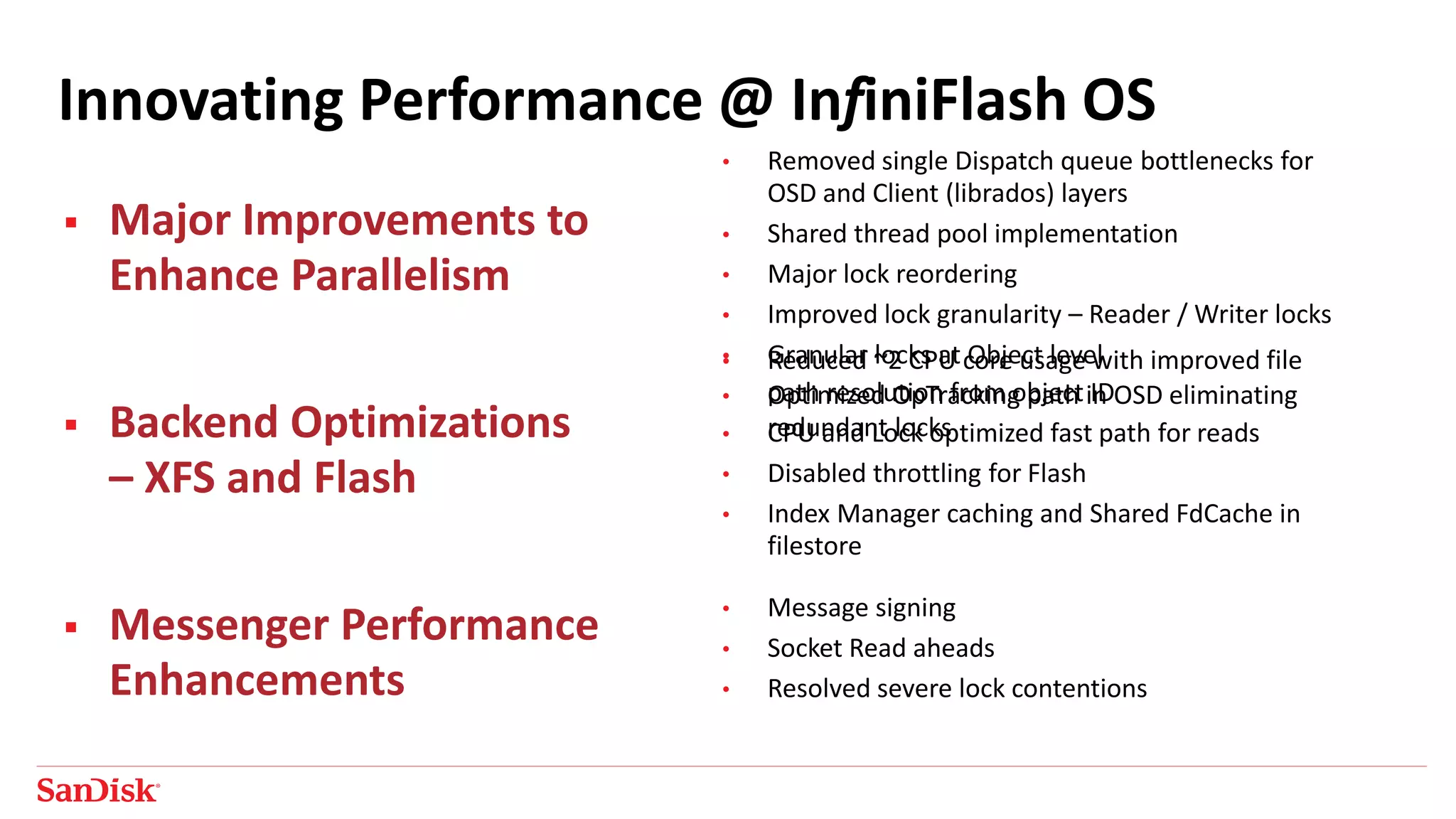

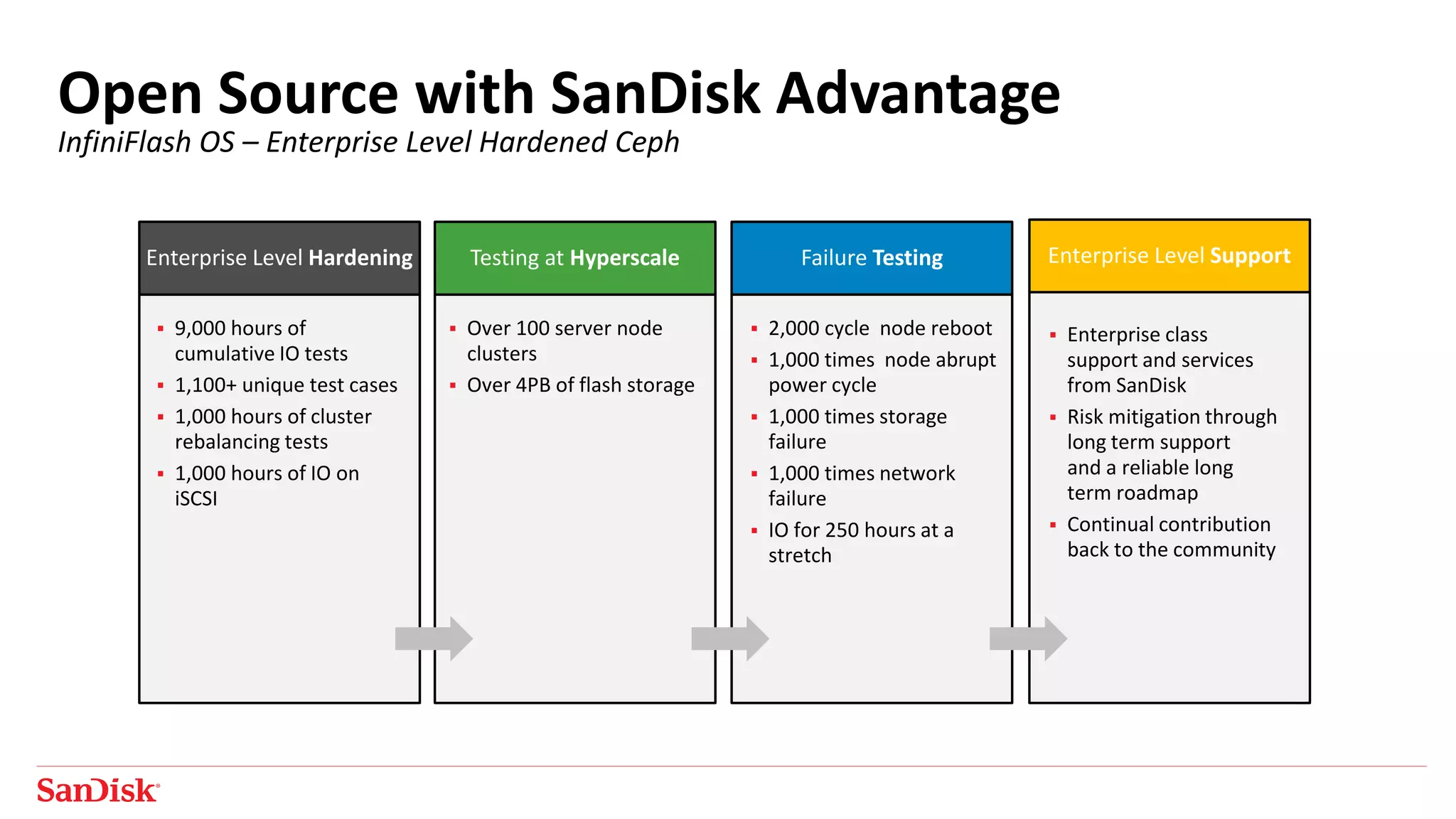

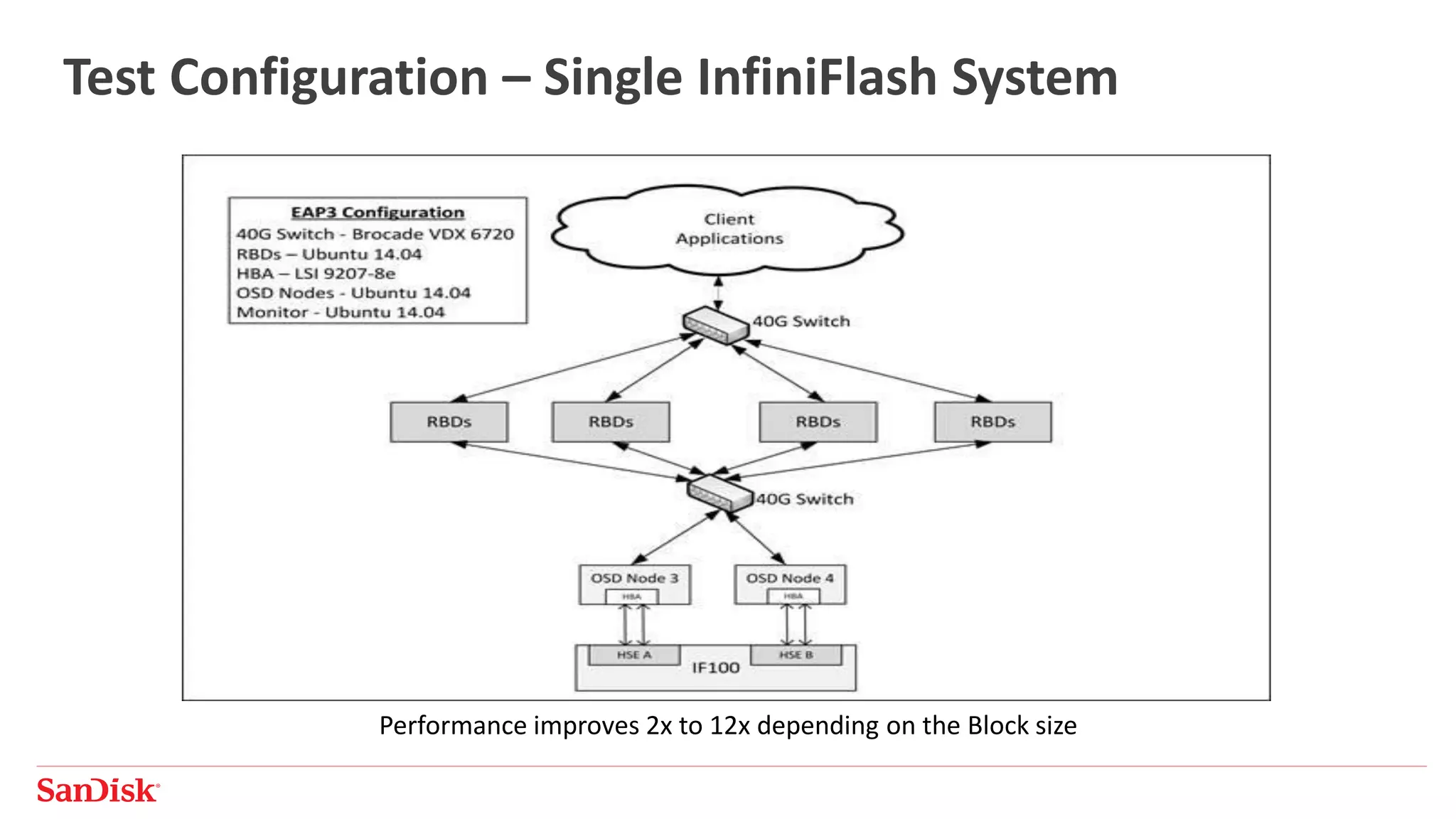

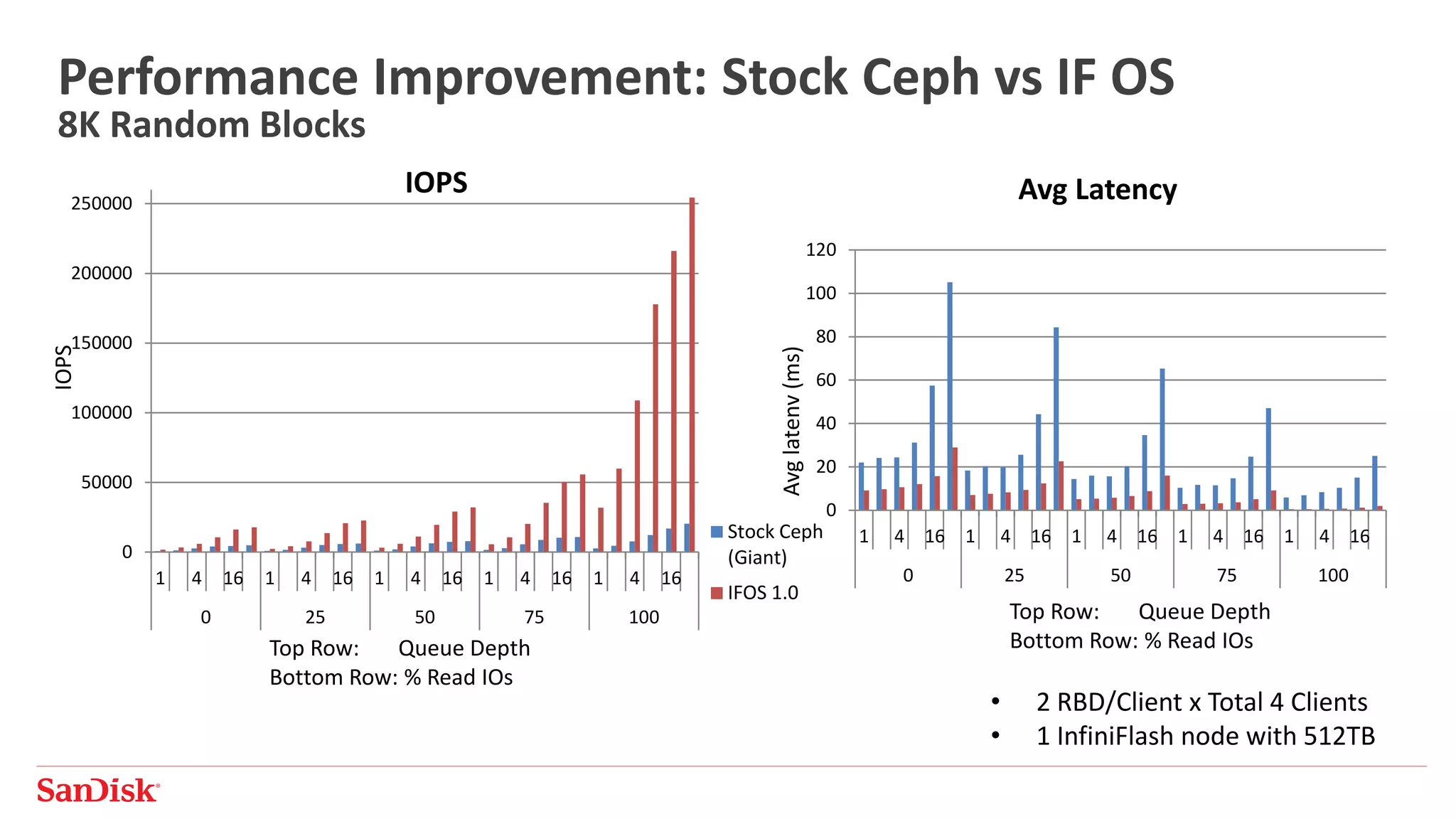

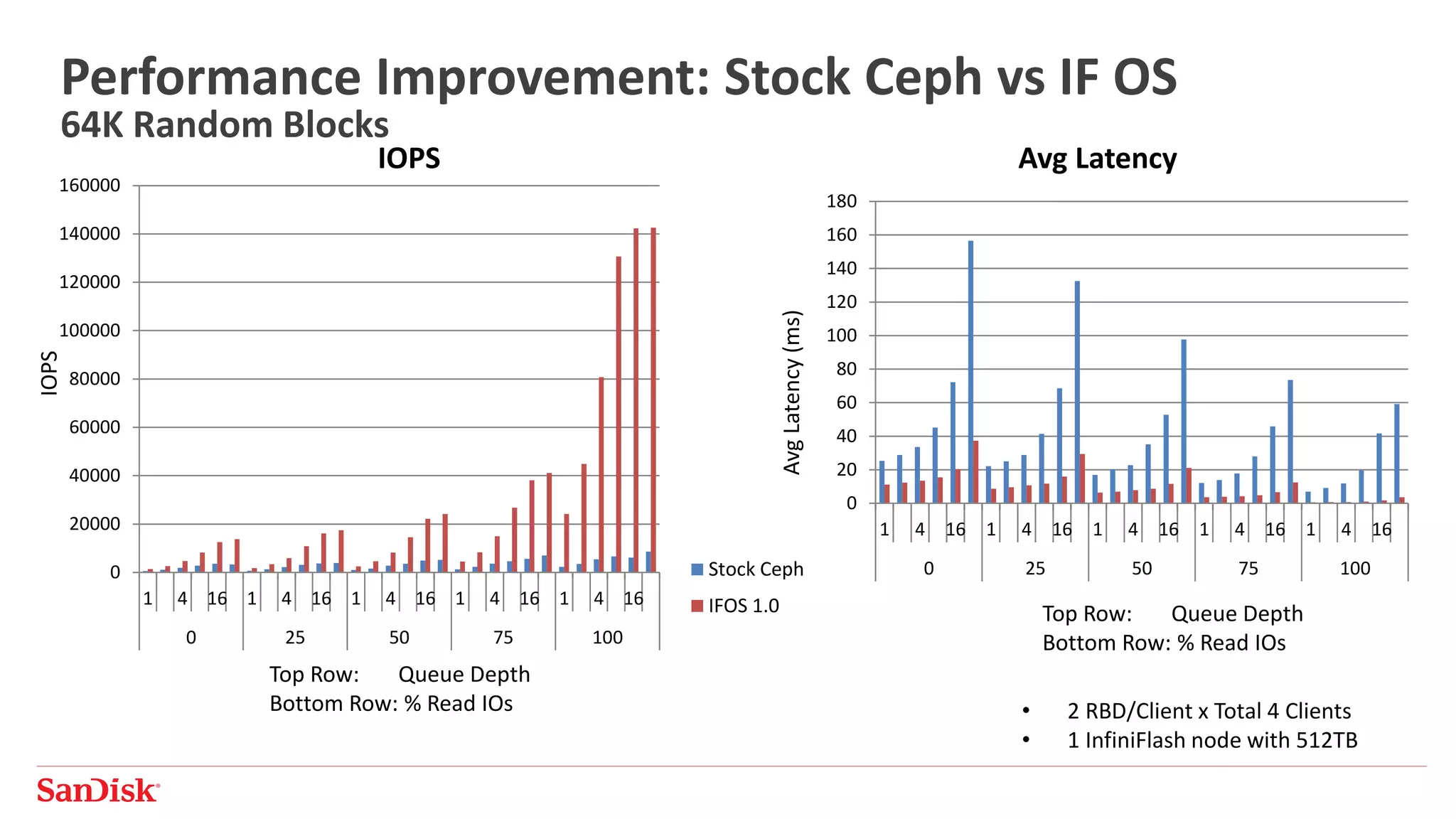

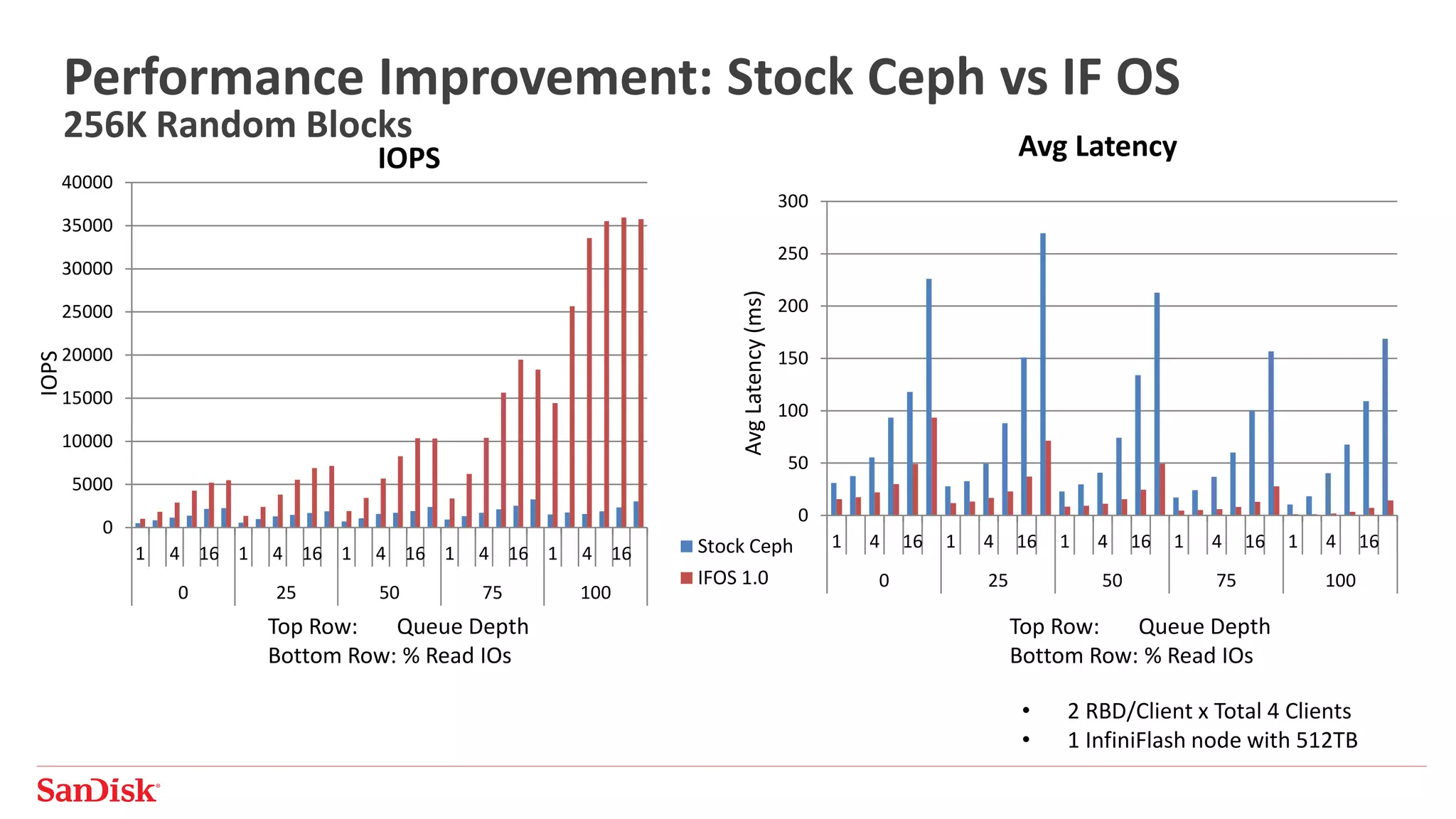

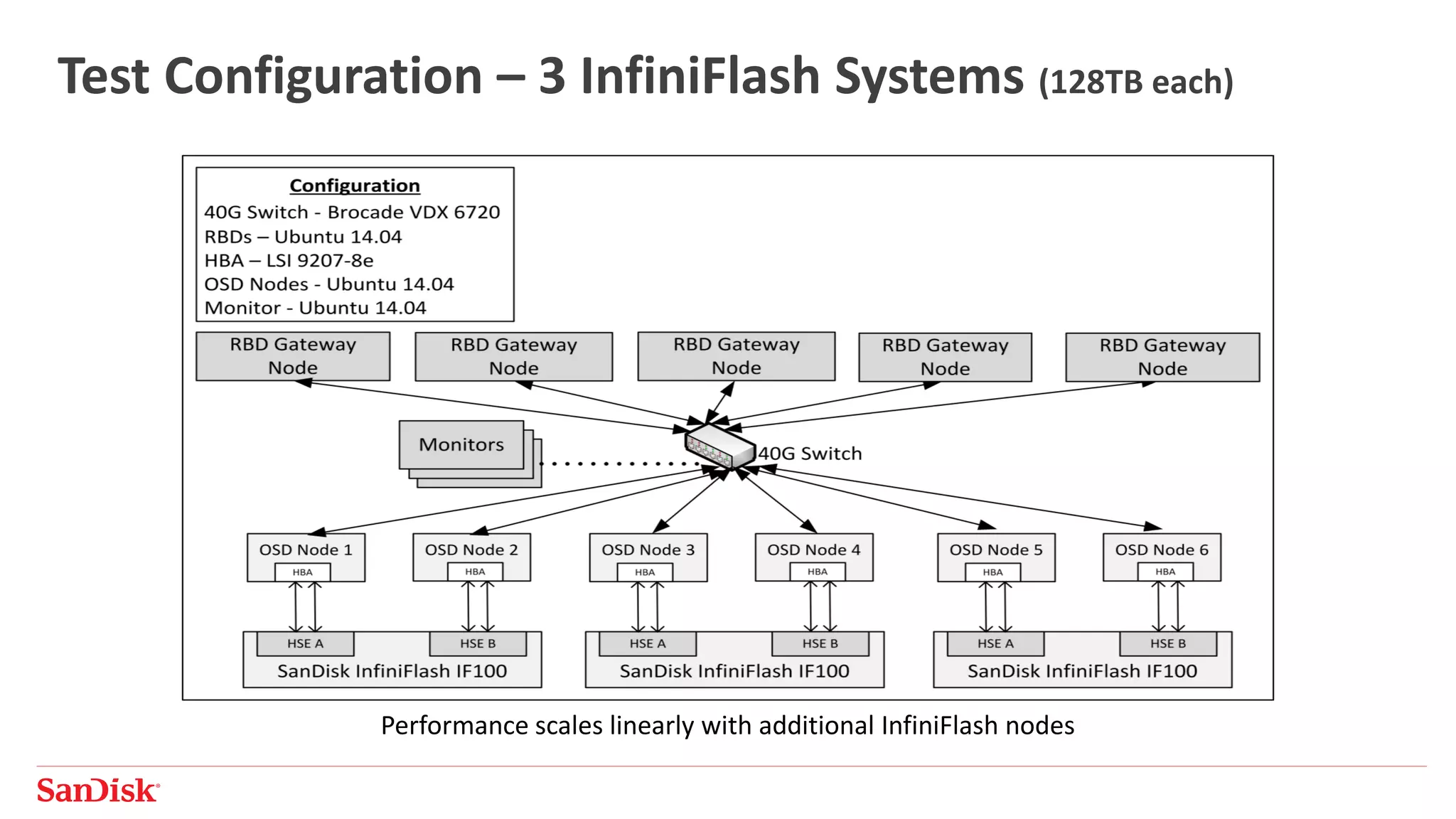

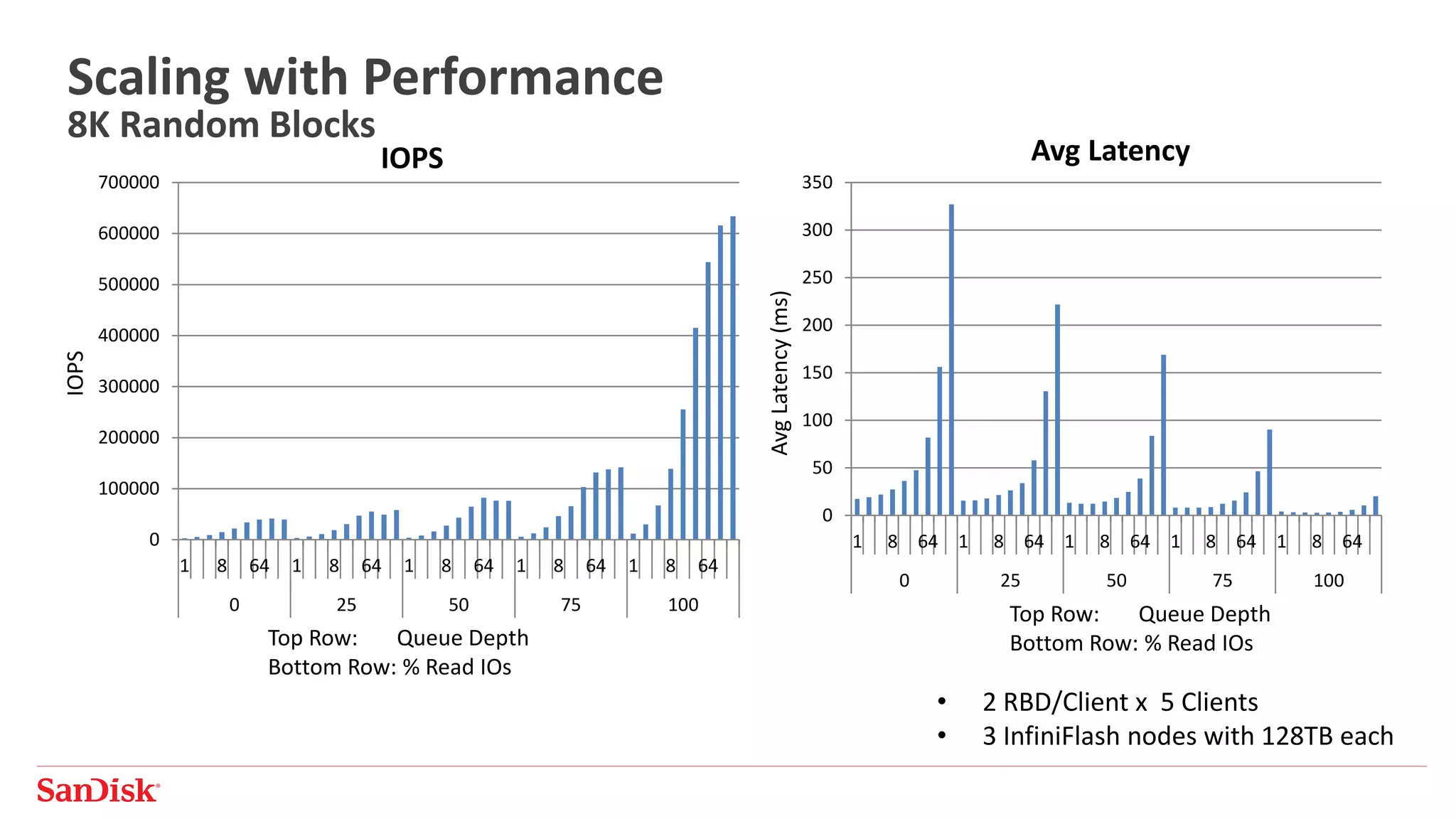

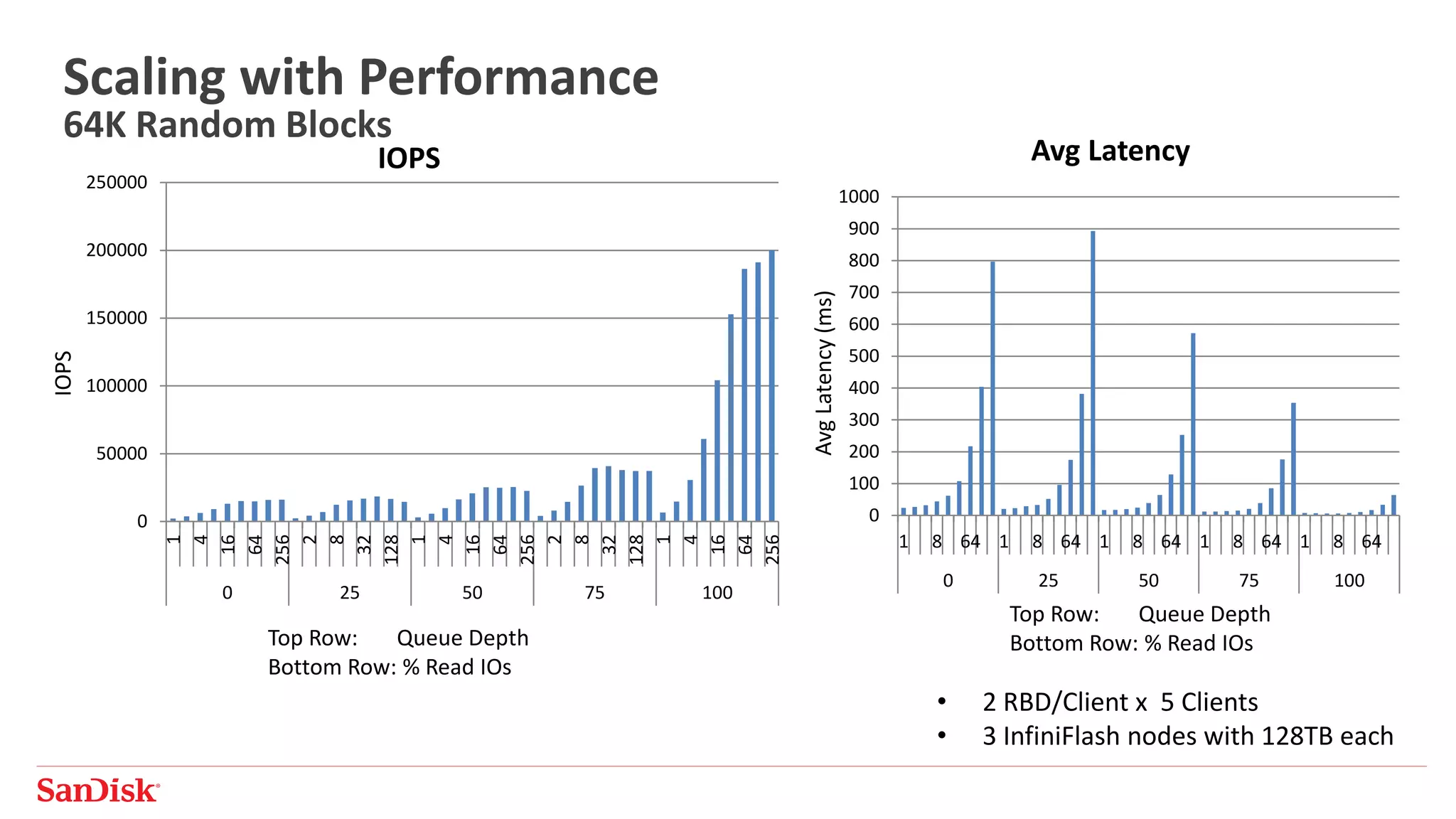

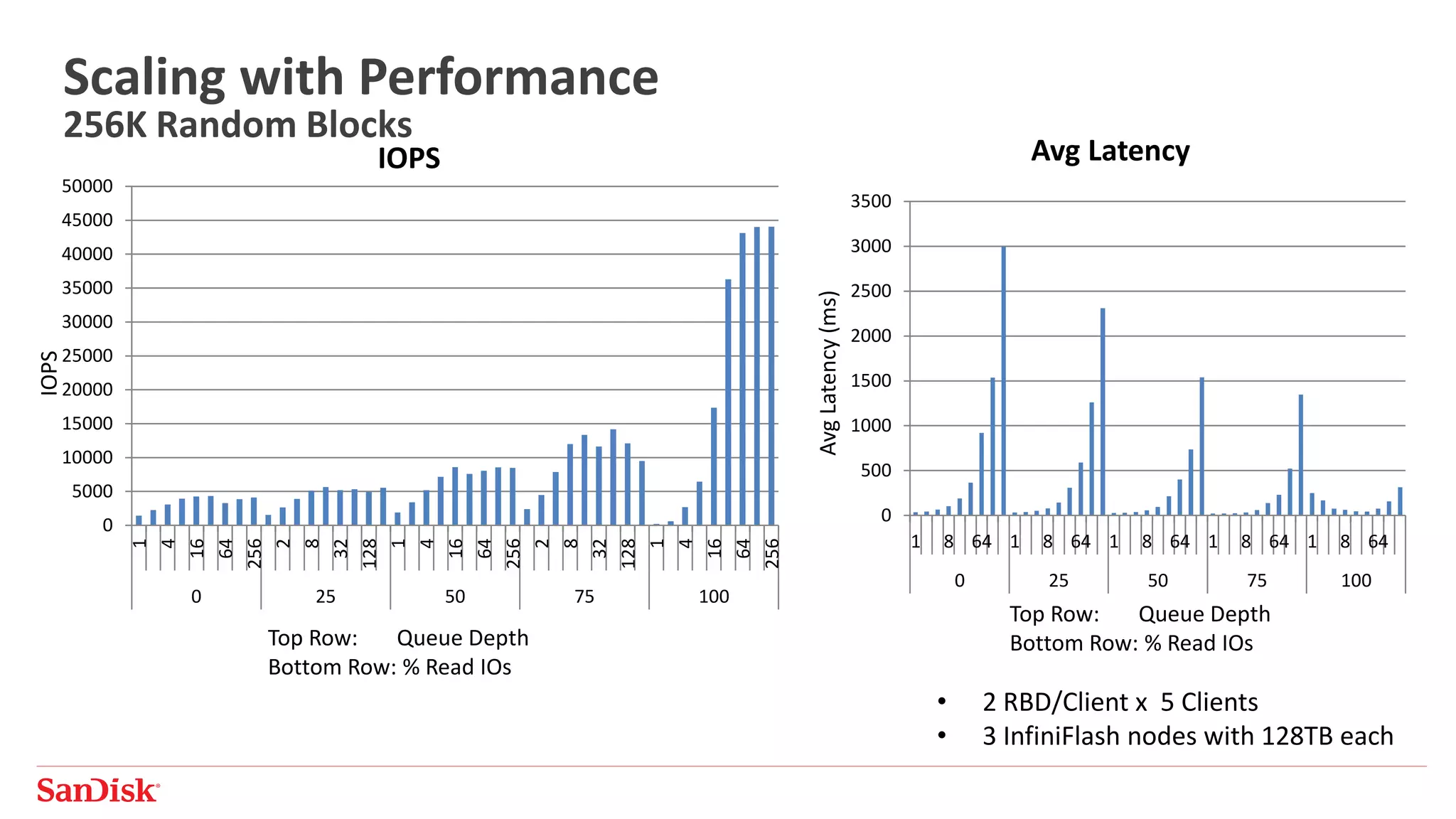

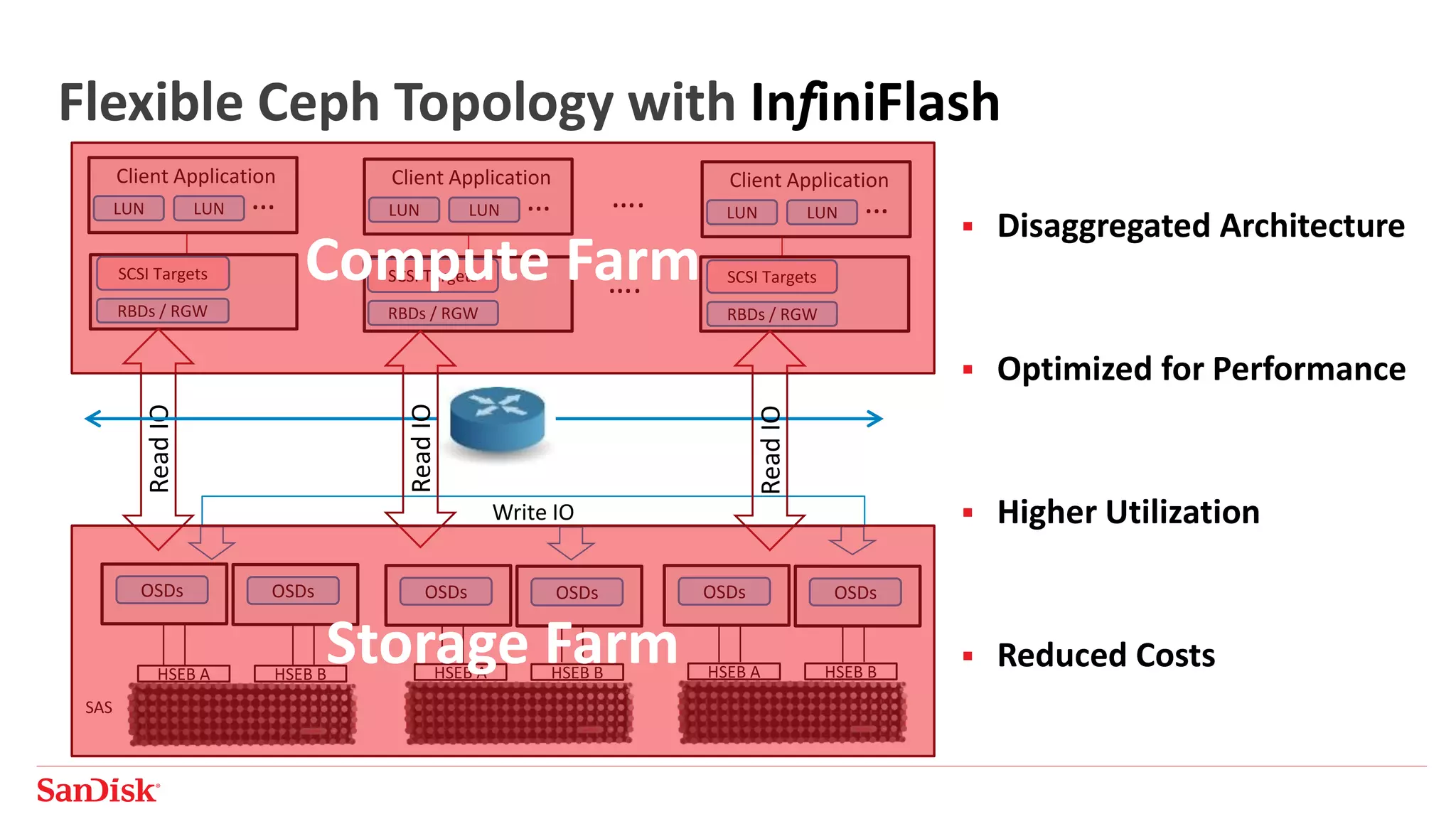

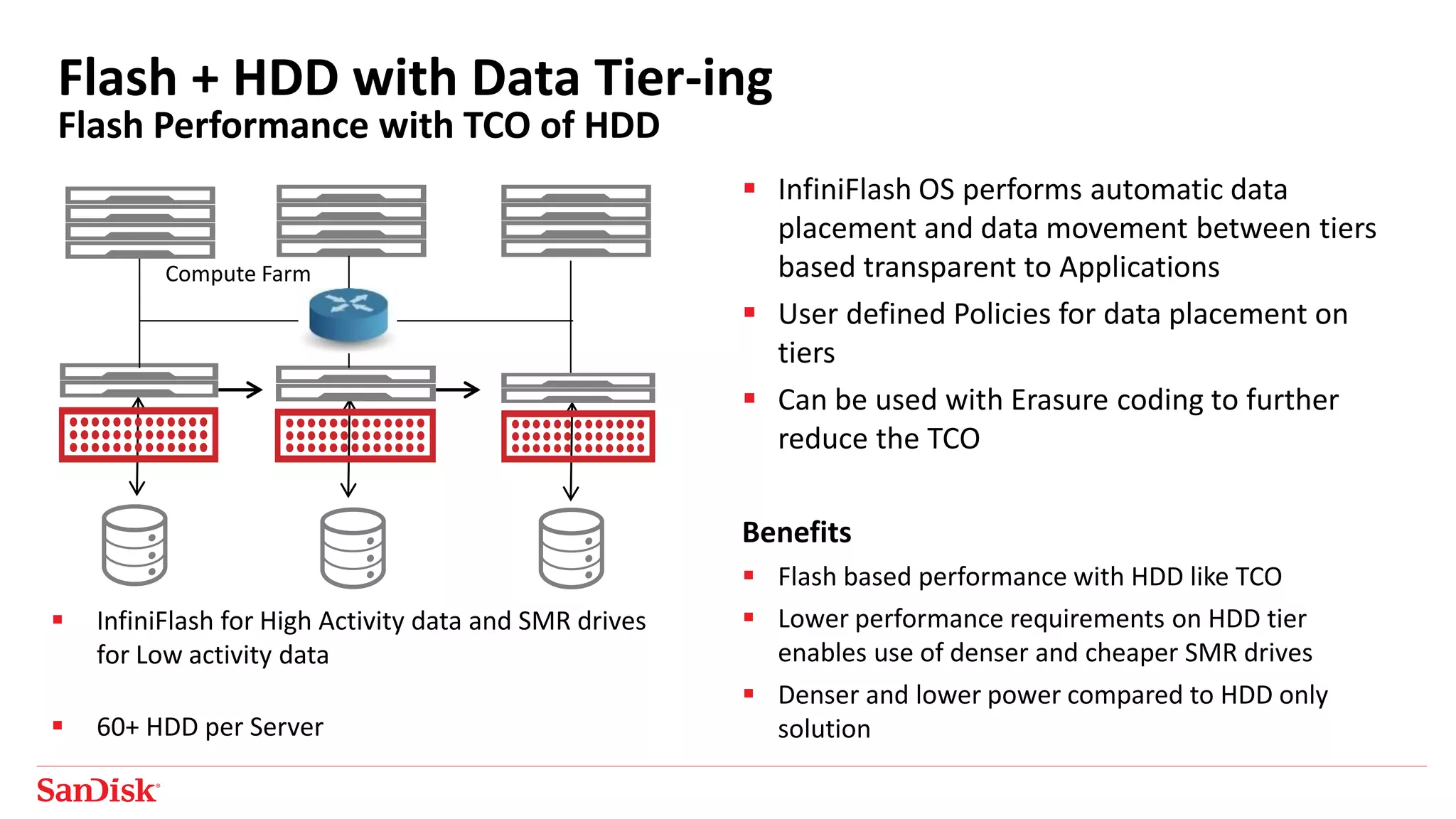

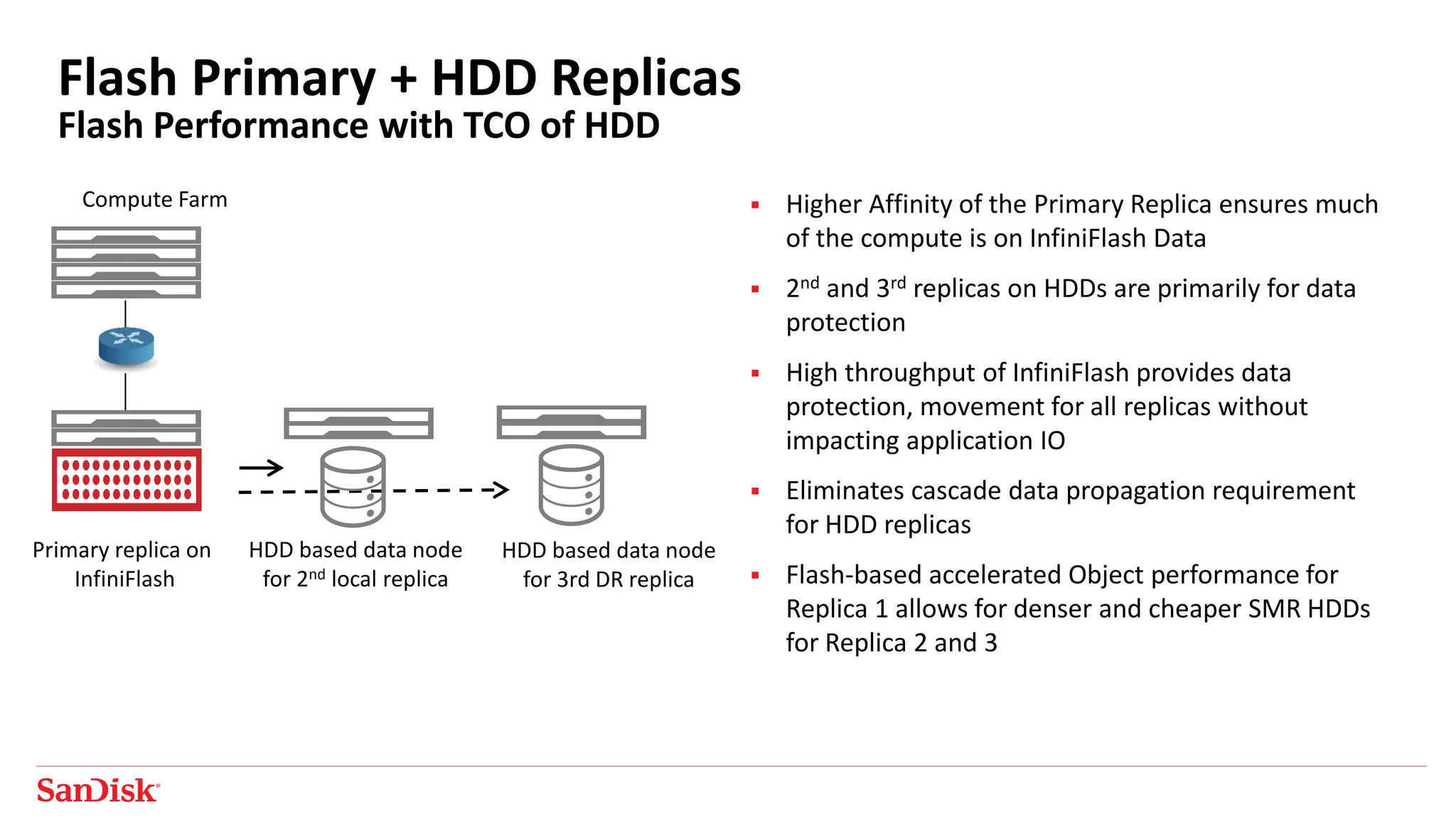

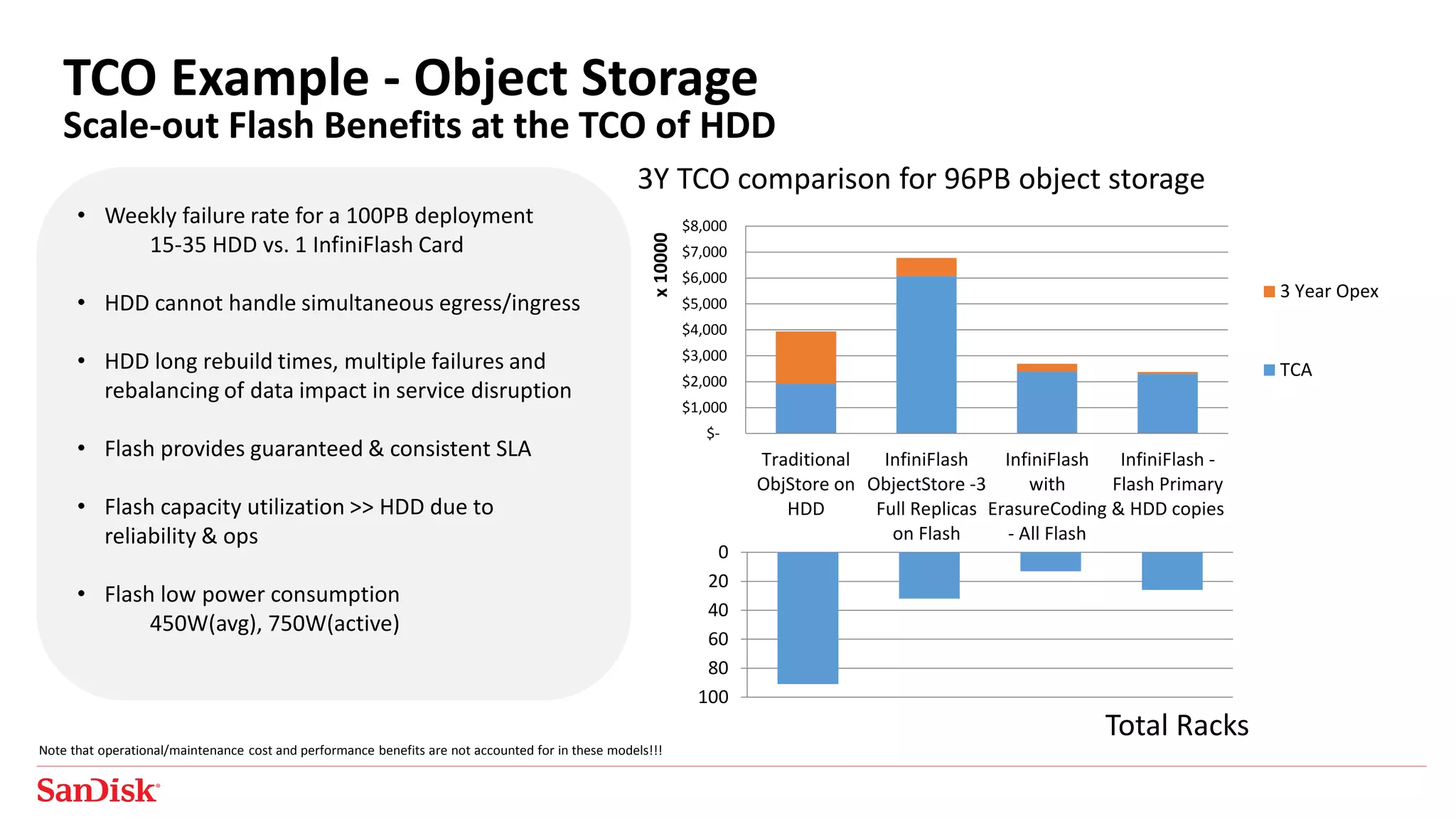

The document discusses running Ceph on all-flash storage systems to break through performance barriers. It summarizes the performance gains observed when running Ceph on InfiniFlash all-flash storage systems compared with traditional HDD-based Ceph deployments. Random read/write performance increases by 2-12x, depending on block size. The document also shows performance scaling linearly as more InfiniFlash nodes are added. It argues that InfiniFlash delivers flash-level performance at an HDD-level total cost of ownership (TCO), using techniques such as flash-optimized data placement policies and hybrid flash/HDD configurations.