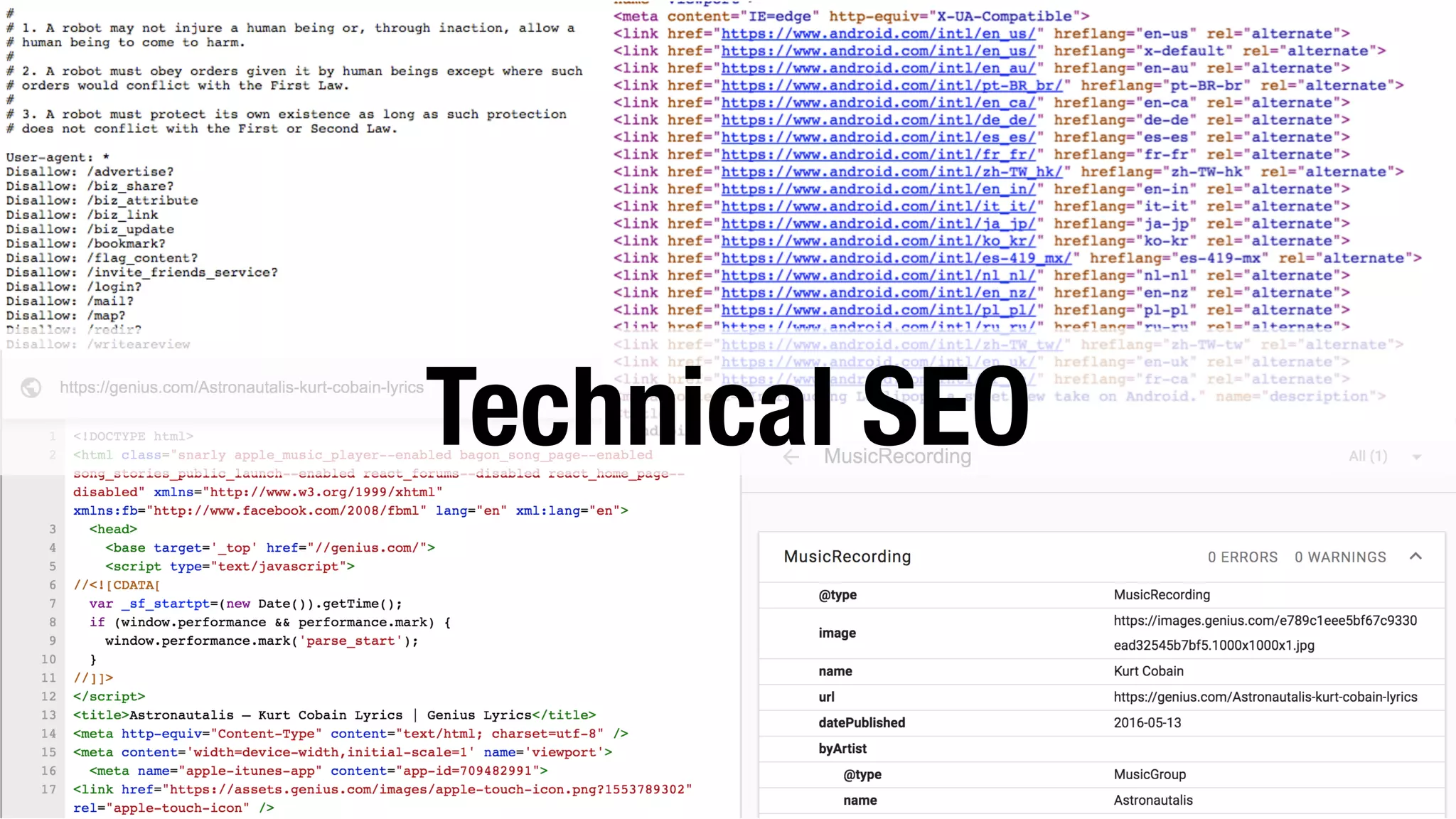

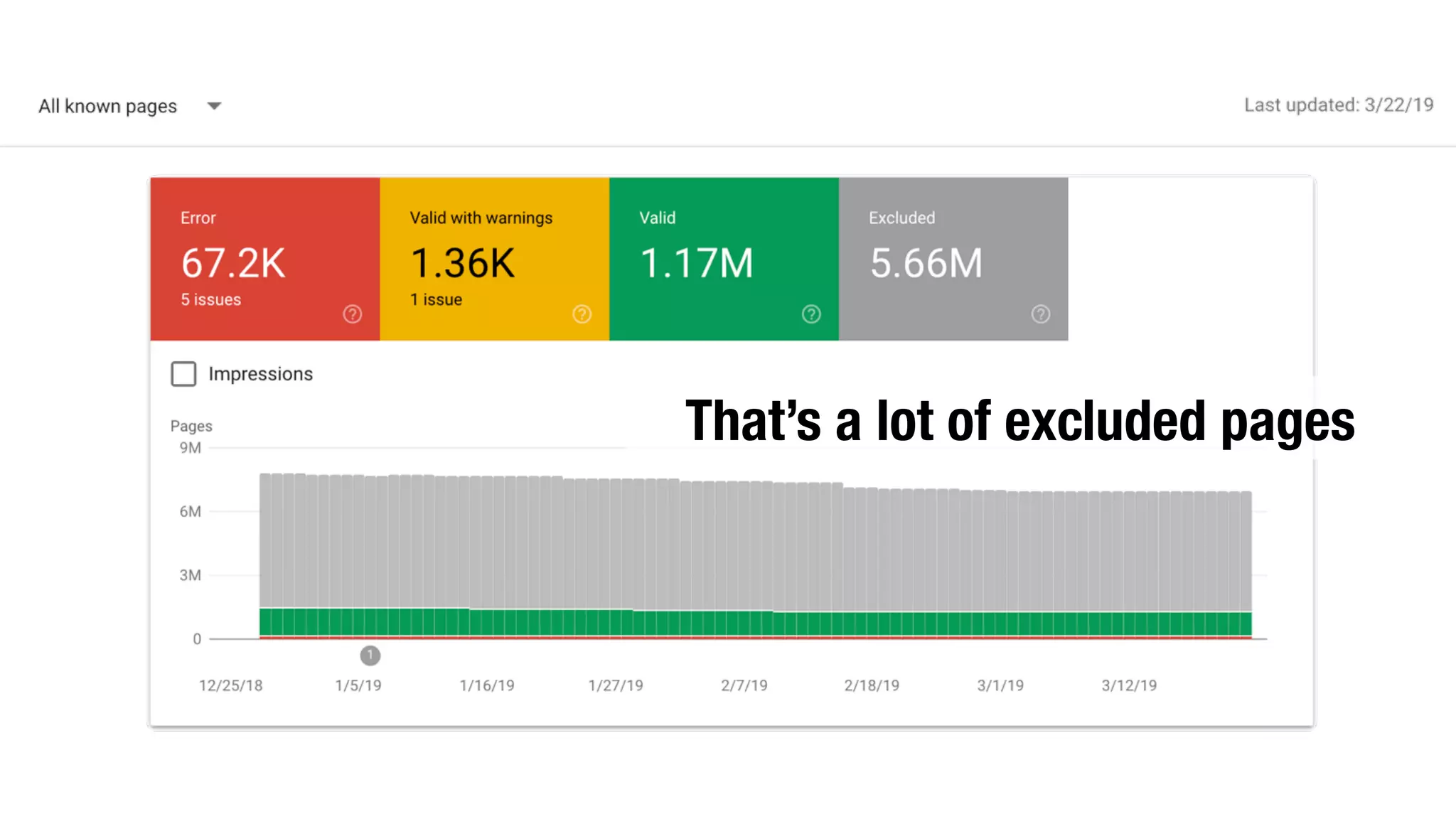

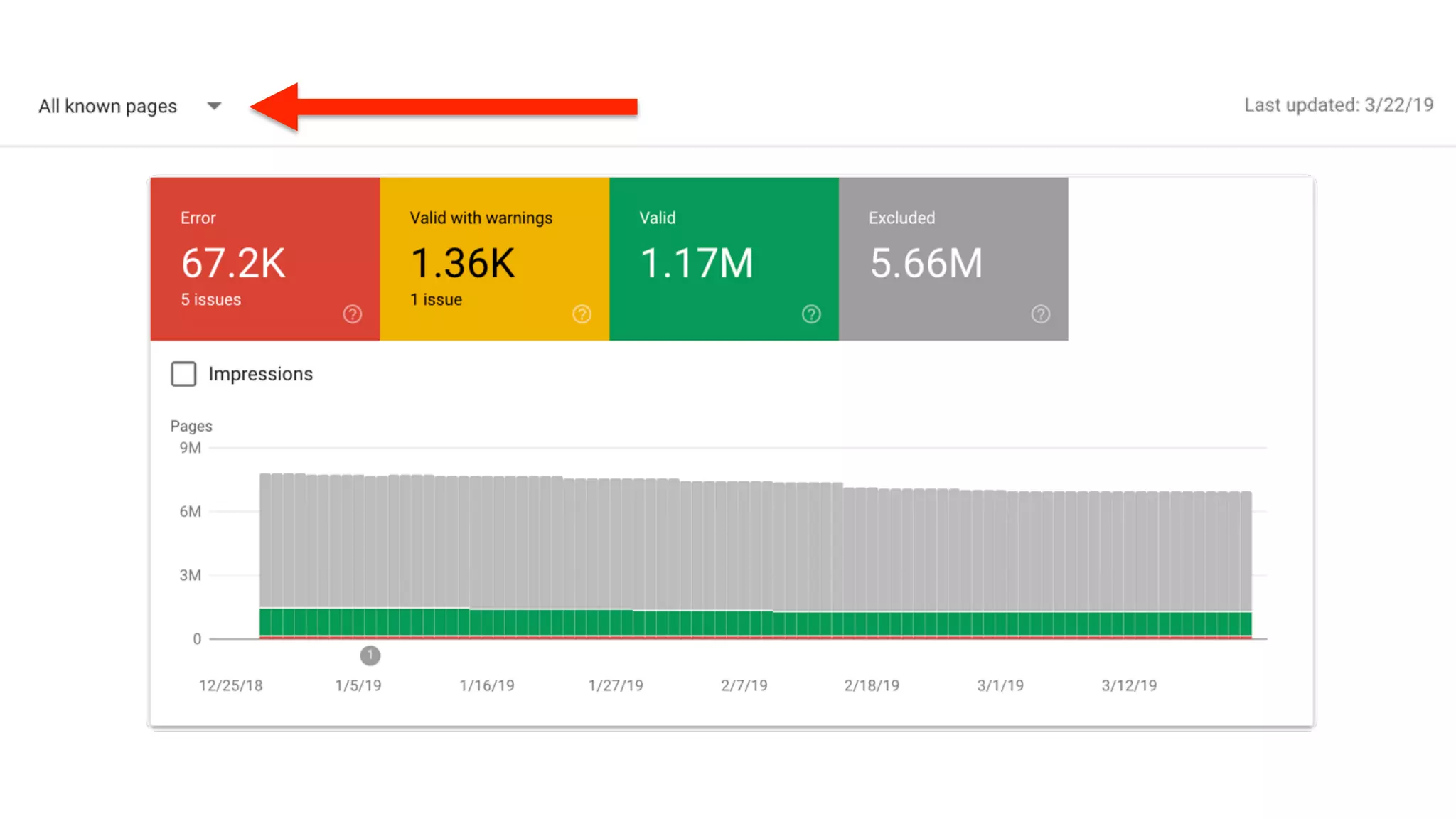

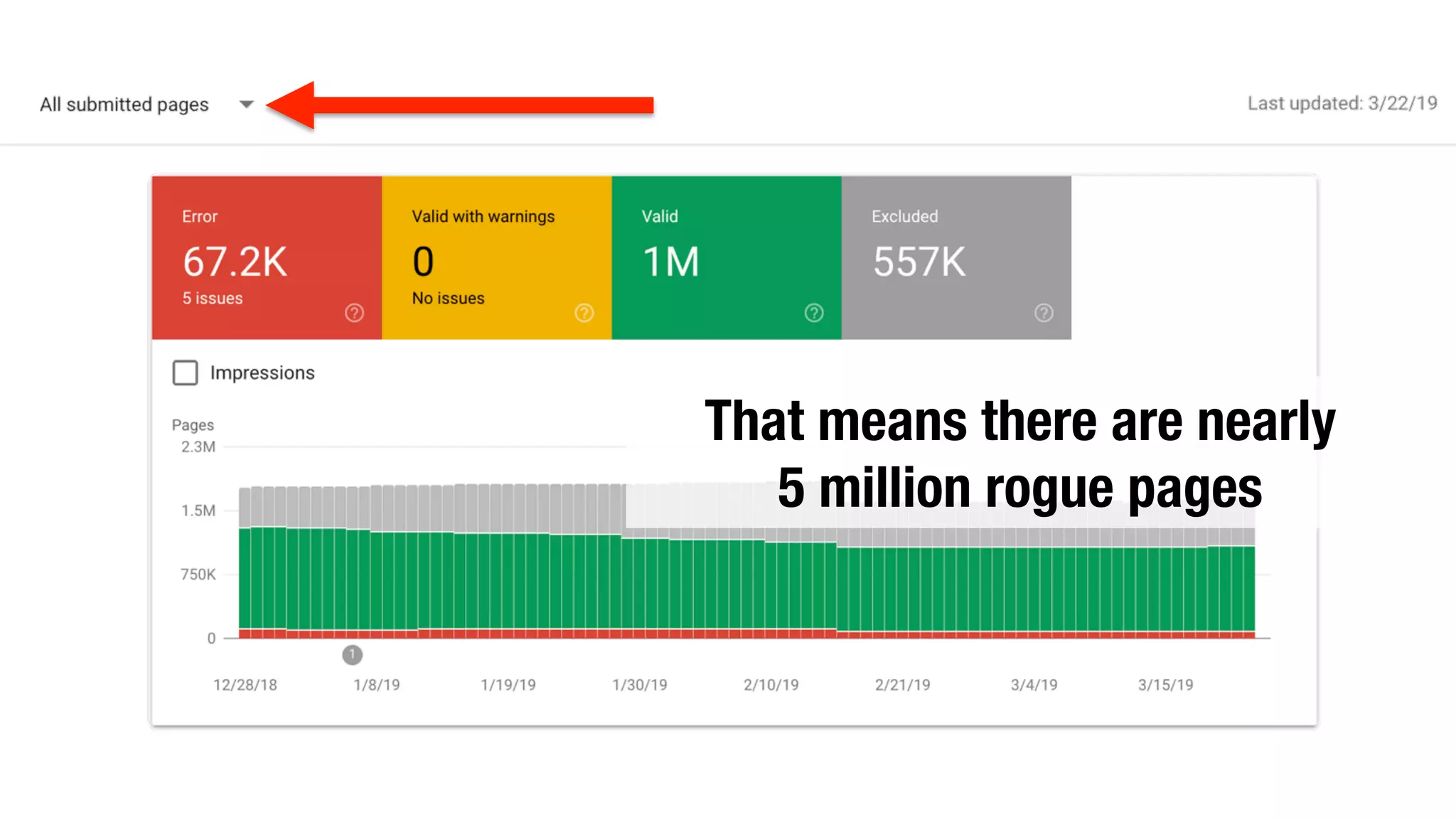

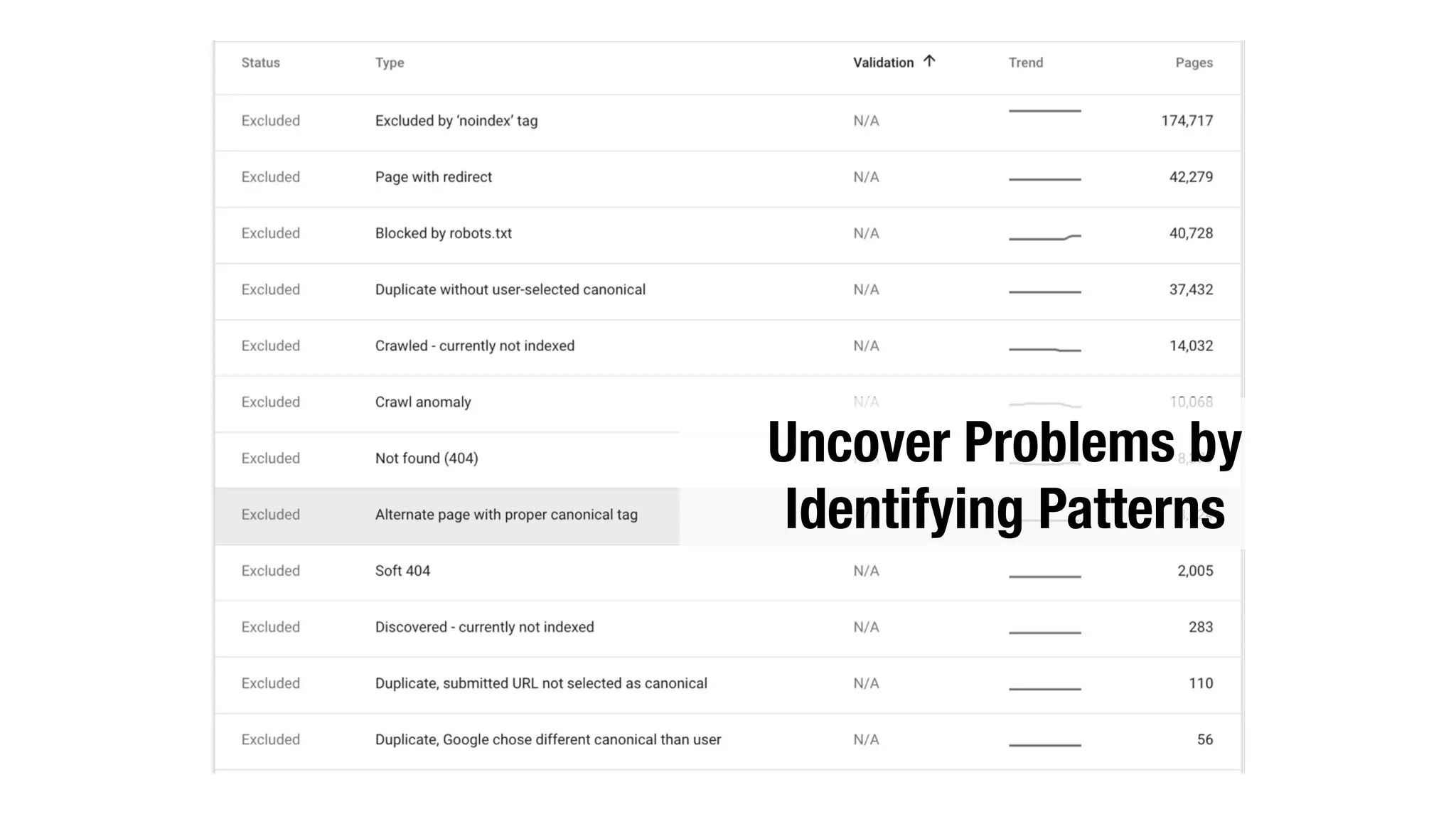

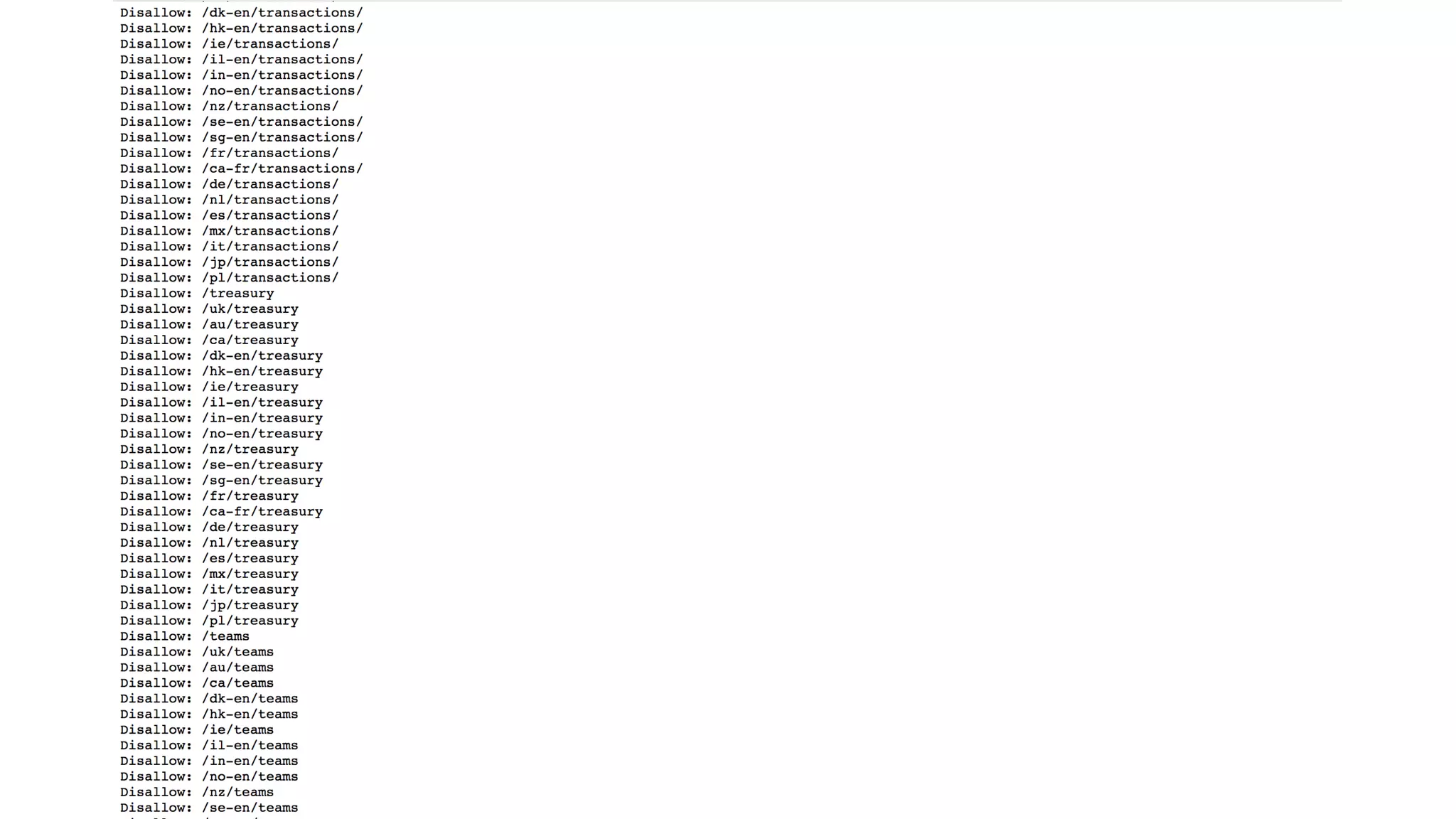

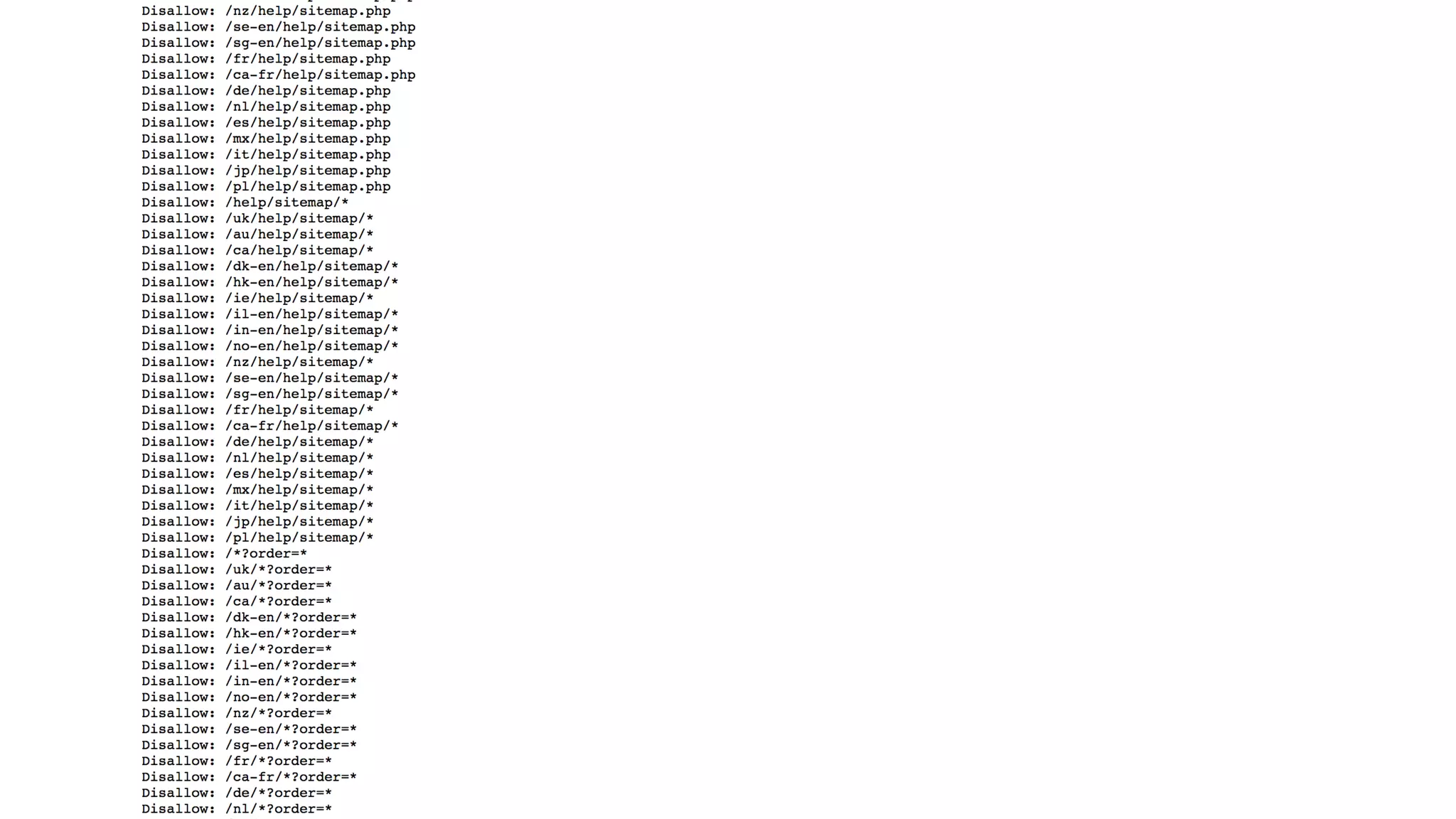

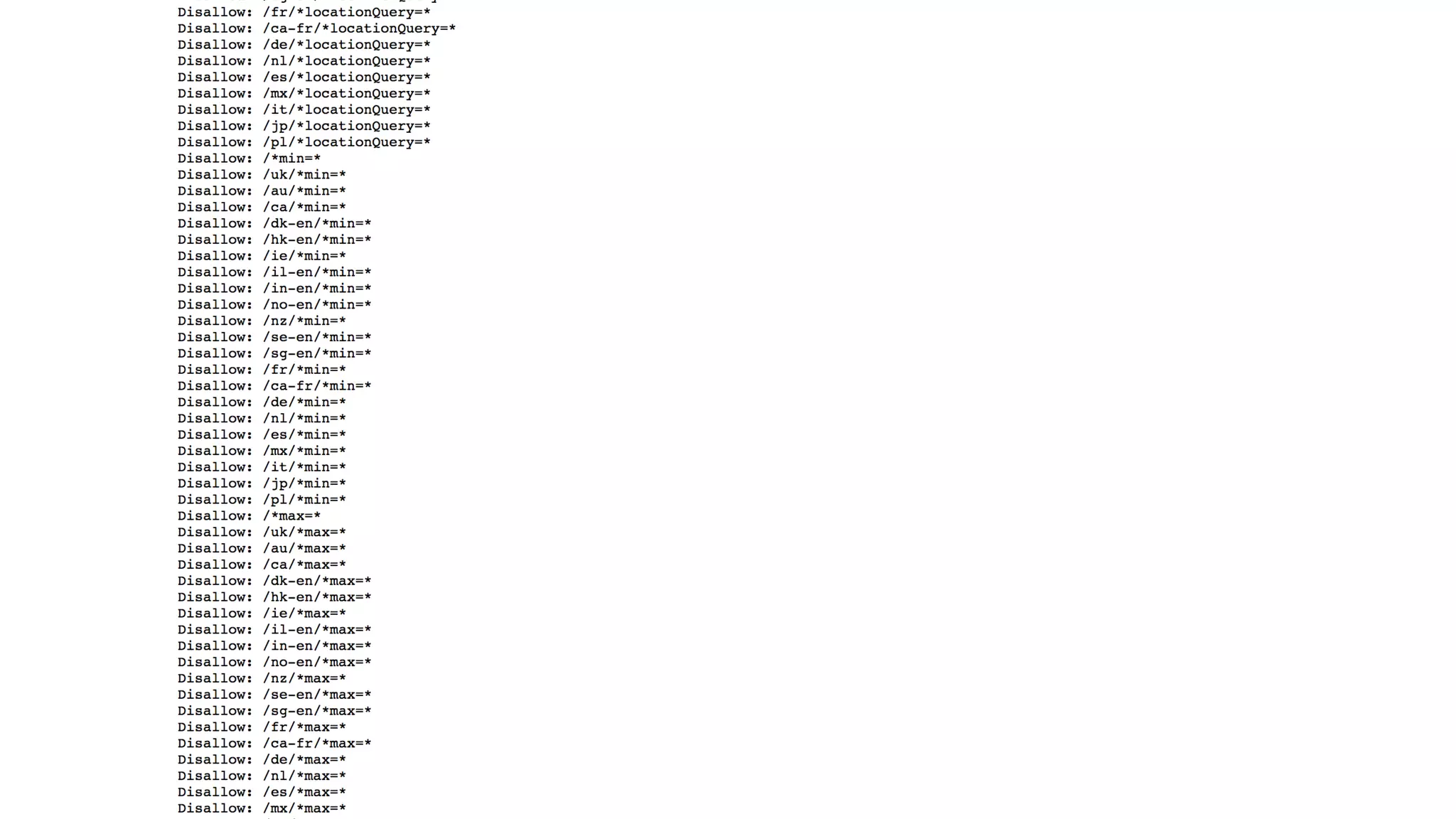

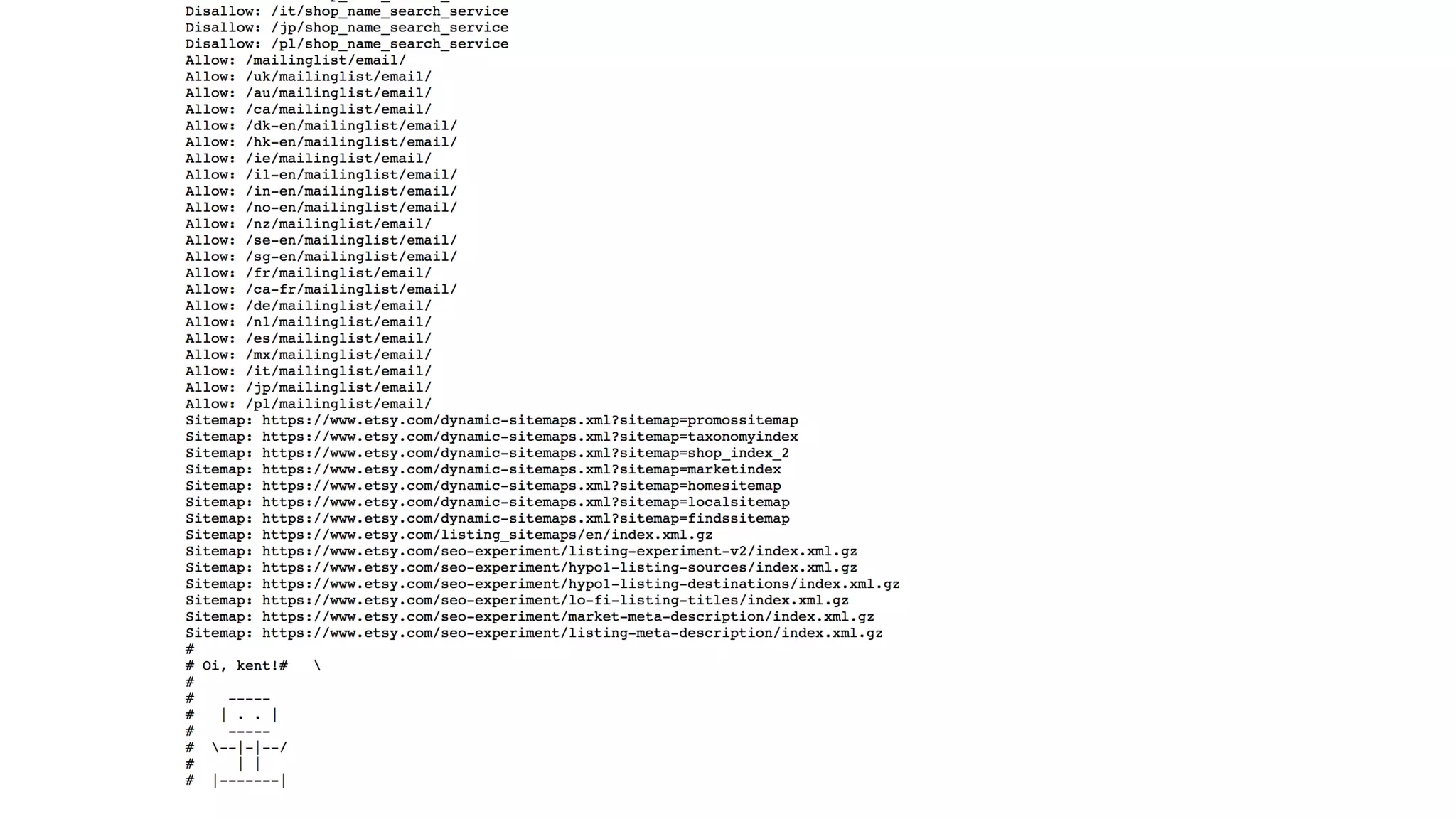

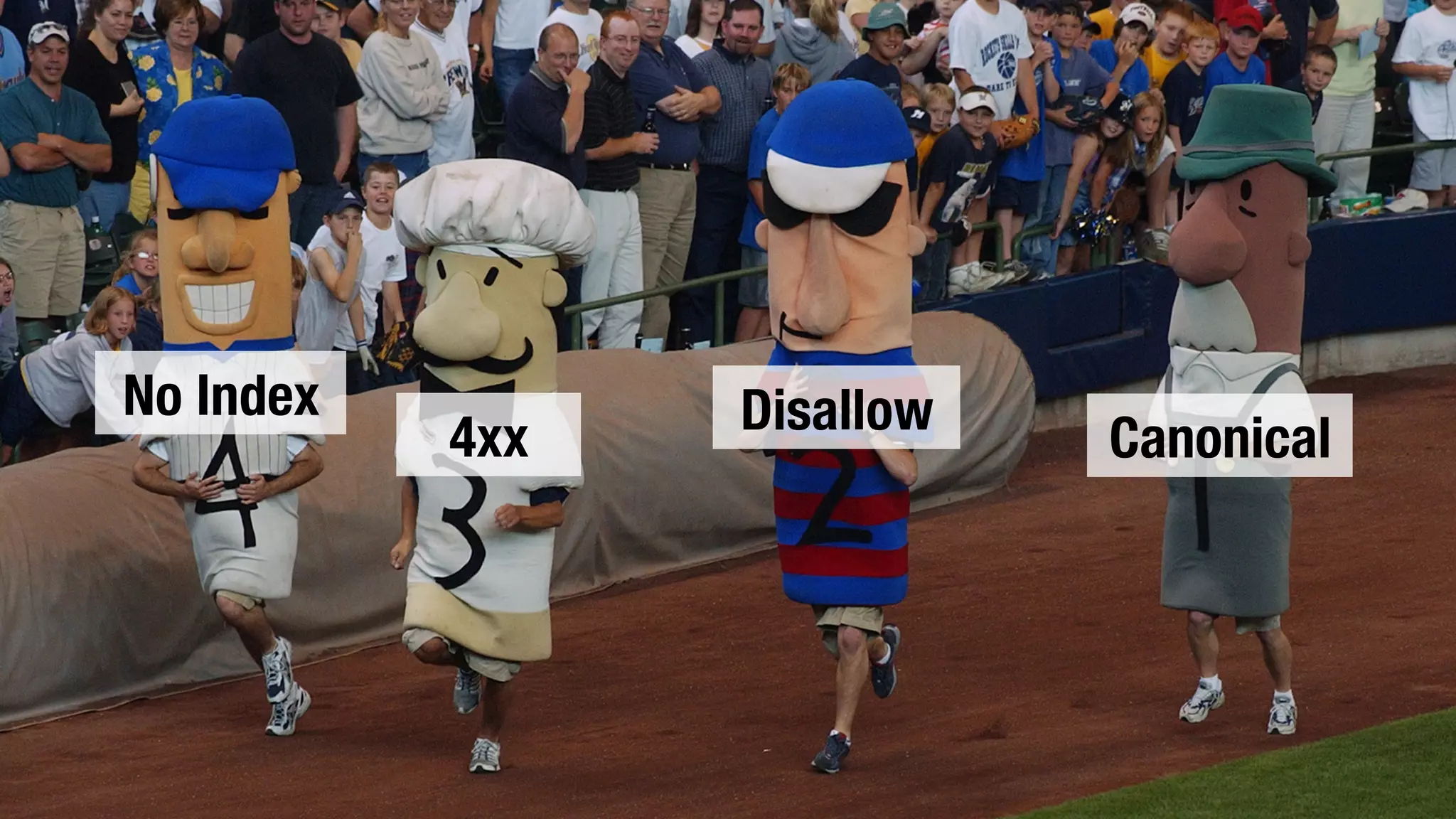

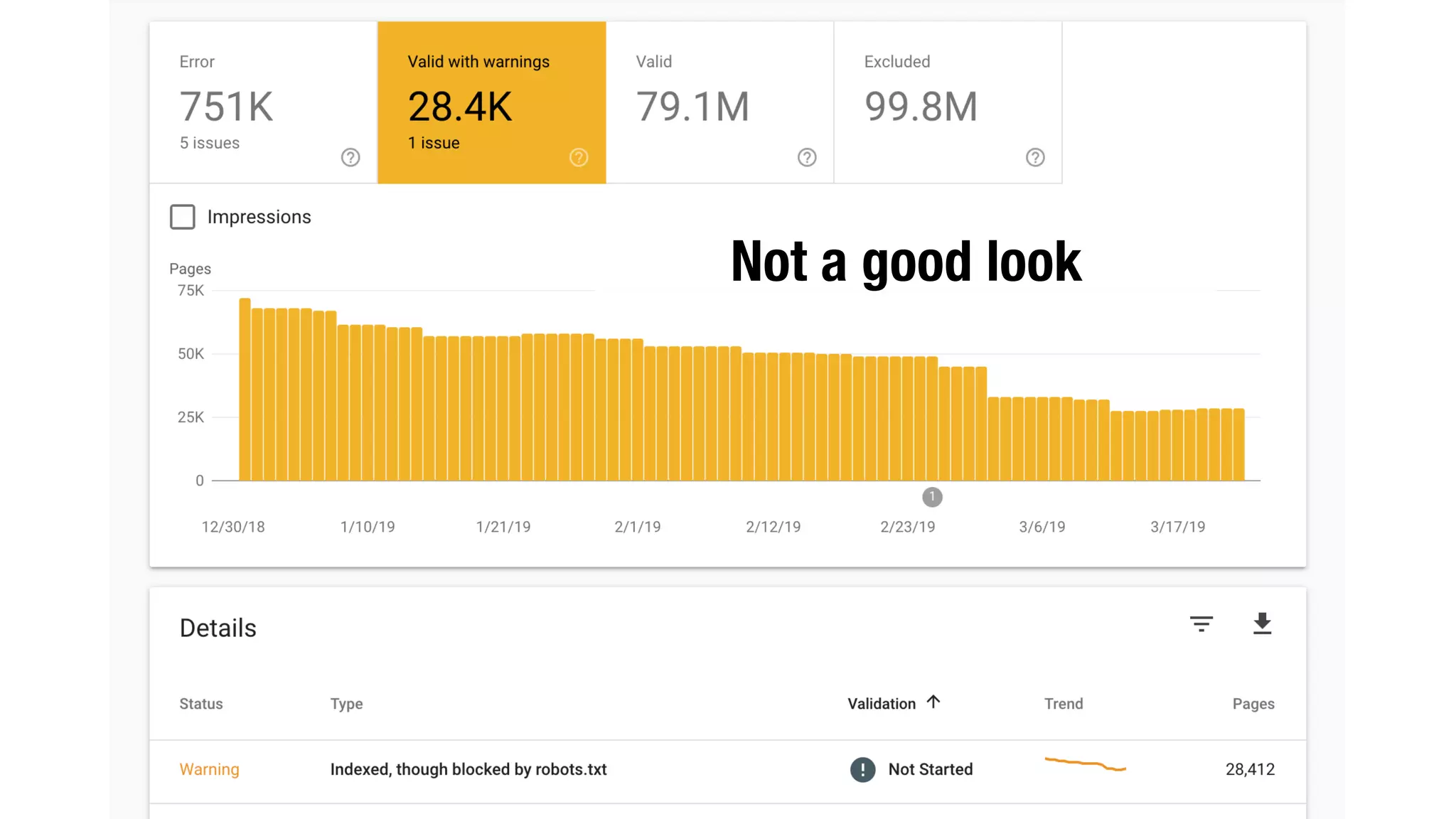

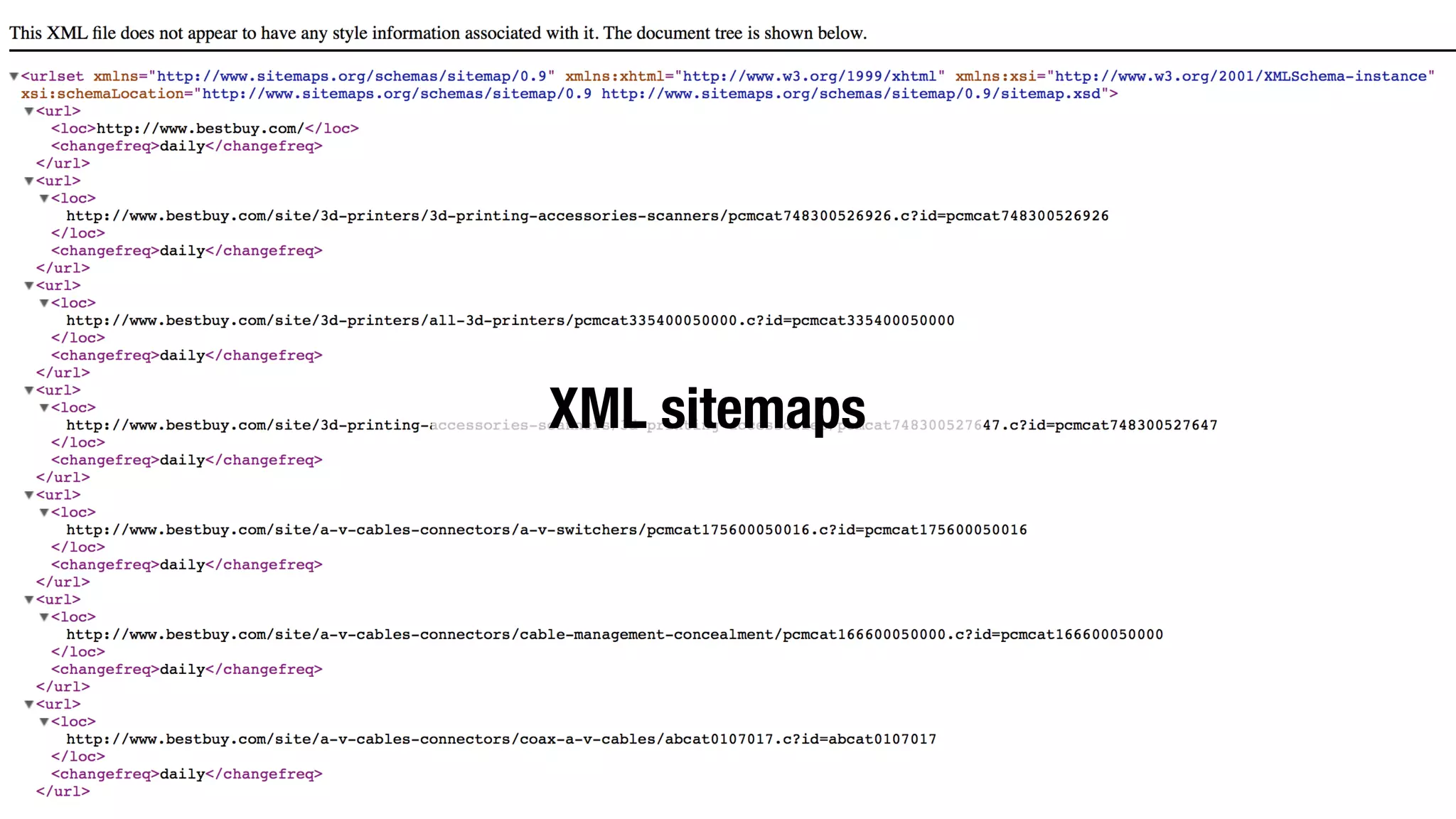

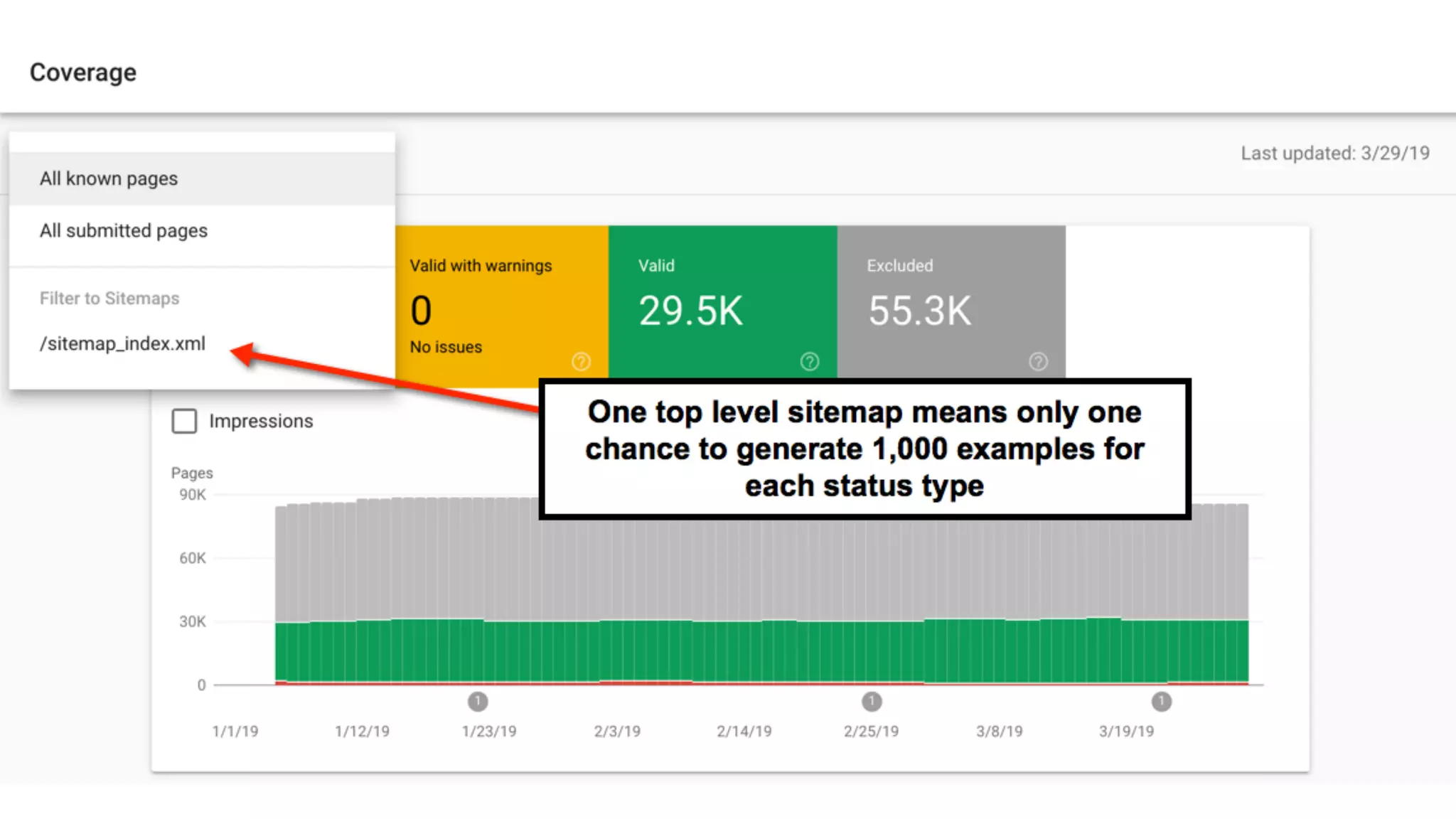

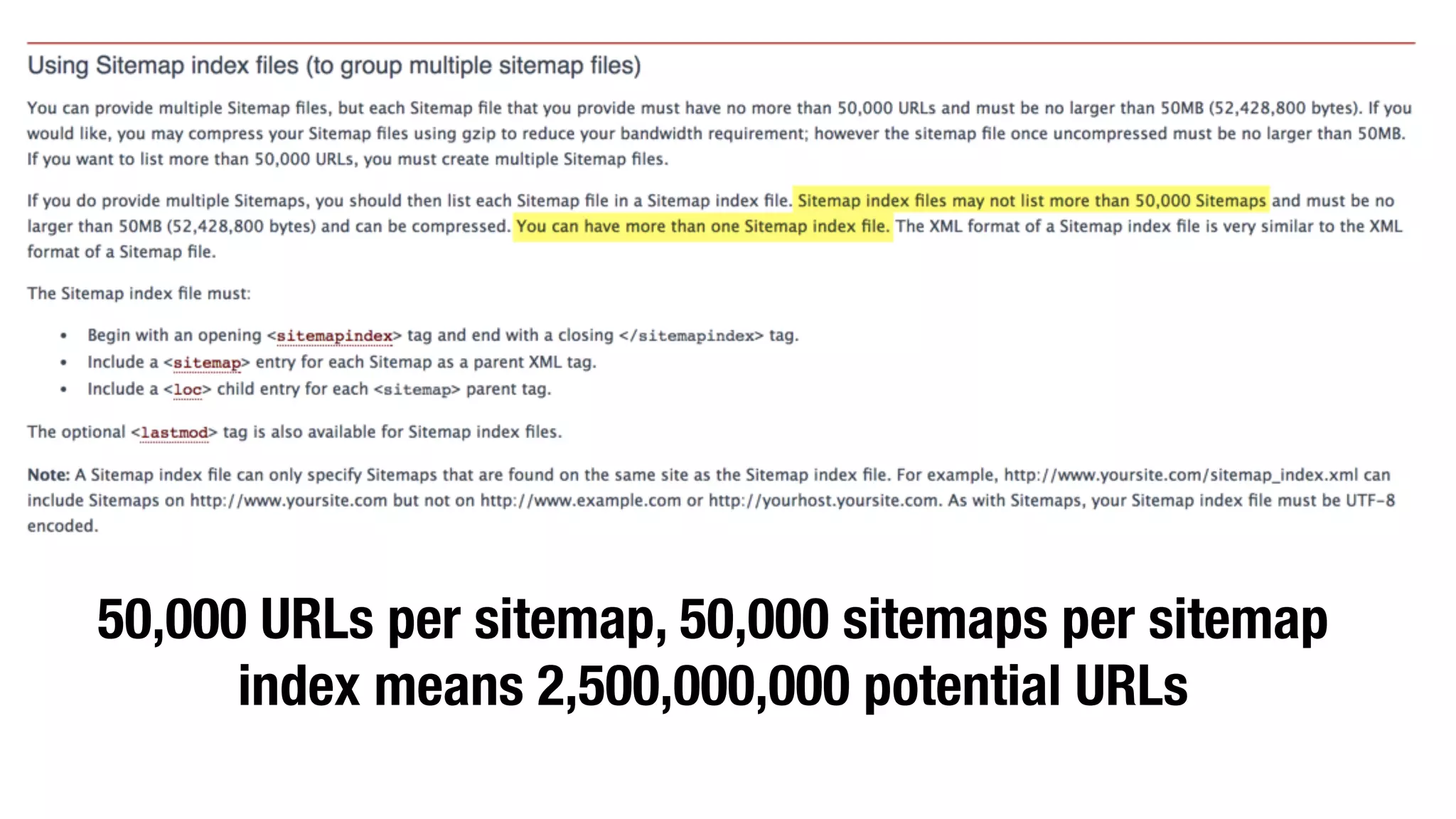

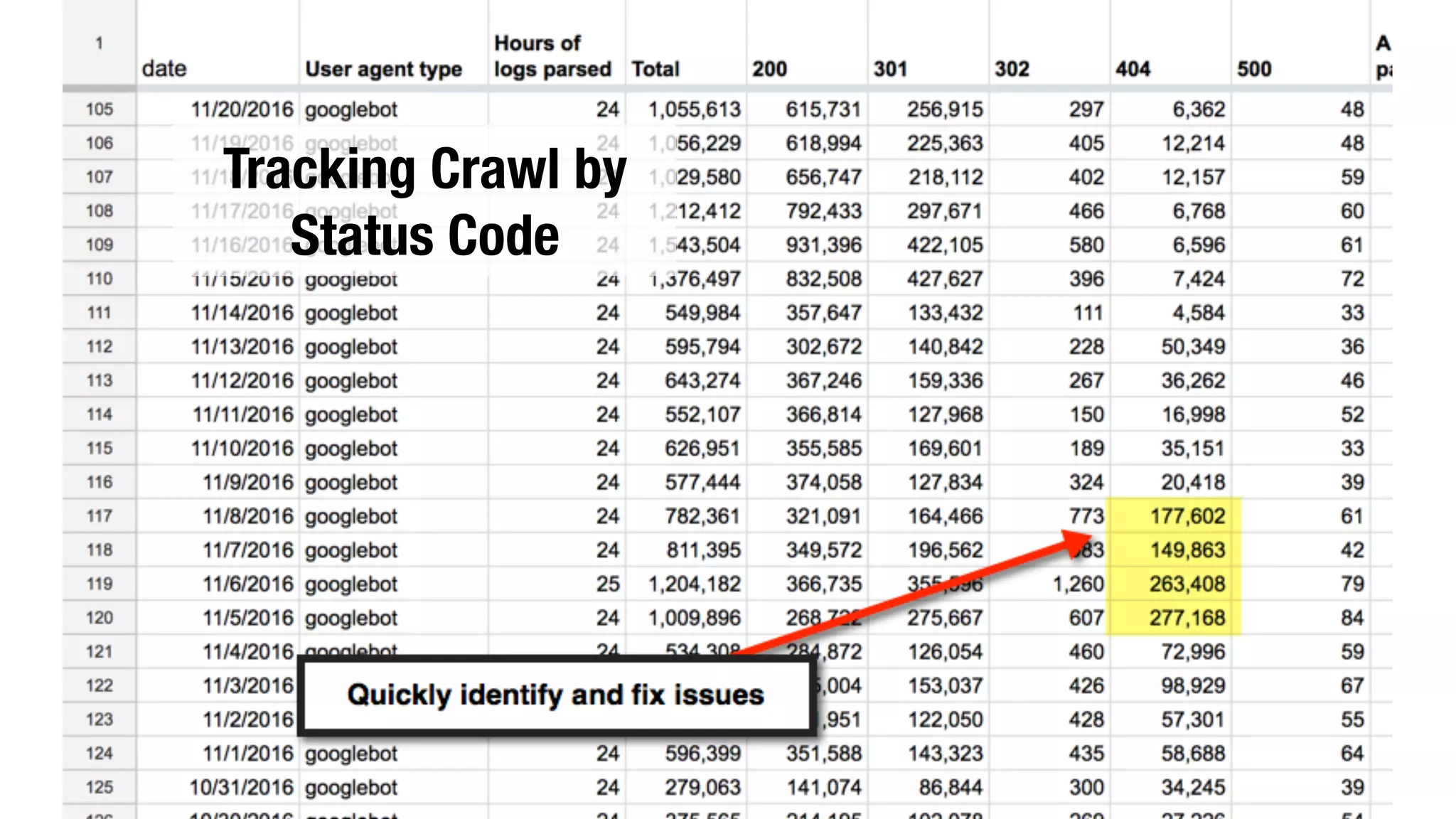

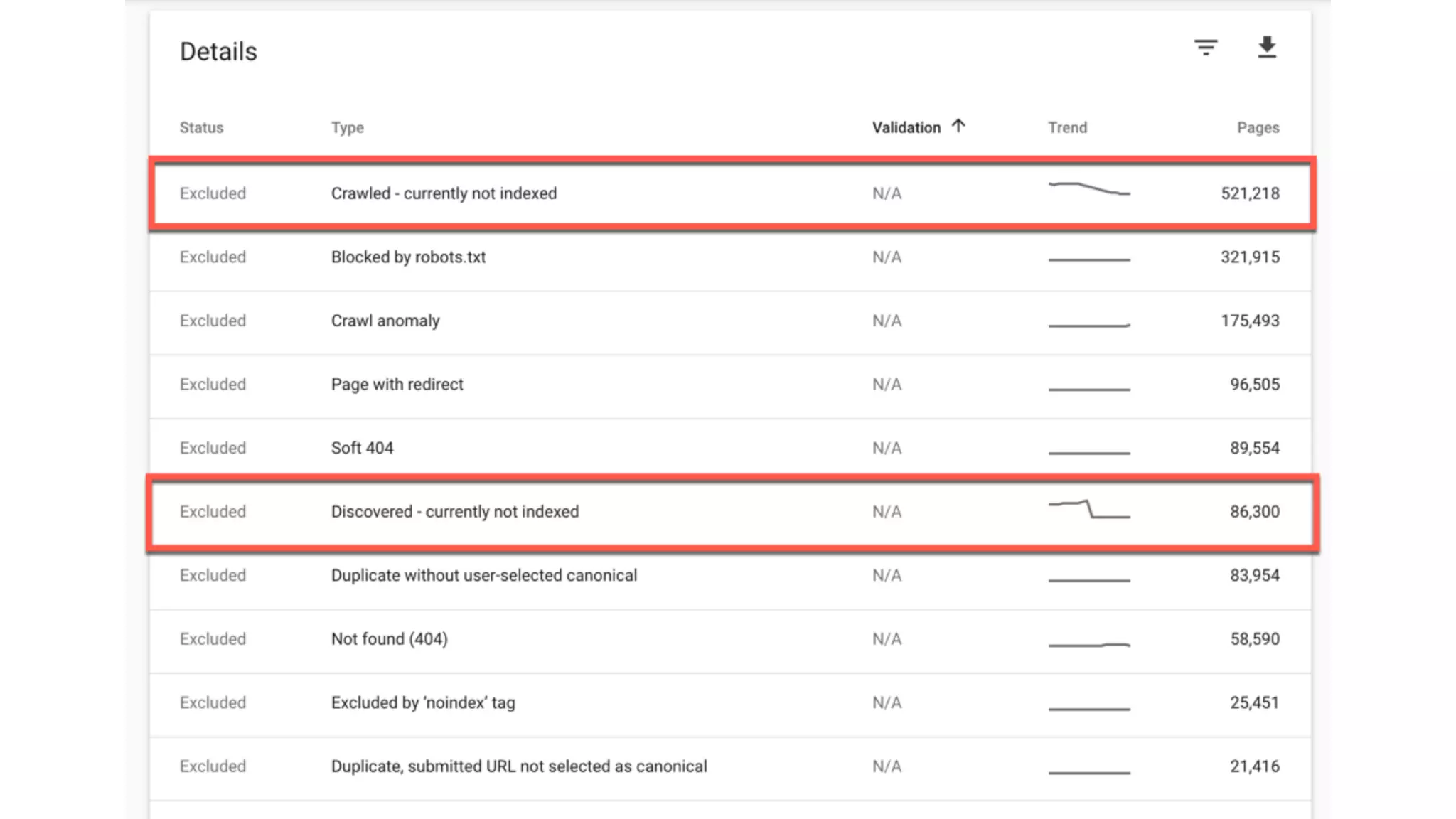

The document discusses the importance of SEO, and of technical SEO in particular, emphasizing comprehensive URL management and well-optimized XML sitemaps. It highlights techniques for tracking how a website is crawled and for diagnosing crawl issues with Google Search Console and the site's robots.txt file. It also stresses measuring website performance so that search engines can index and rank pages properly.
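
As a rough illustration of the robots.txt diagnosis mentioned above, the sketch below uses Python's standard `urllib.robotparser` to check which URLs a crawler may fetch and which sitemaps a robots.txt file declares. The robots.txt content, the `example.com` URLs, and the `crawl_report` helper are all hypothetical and do not come from the slides; it is a minimal sketch, not the deck's own method.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://example.com/sitemap.xml
"""


def crawl_report(robots_txt, urls, agent="Googlebot"):
    """Return (url -> allowed?, declared sitemaps) for the given robots.txt text."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    allowed = {url: parser.can_fetch(agent, url) for url in urls}
    # site_maps() returns the Sitemap entries, or None if there are none.
    sitemaps = parser.site_maps() or []
    return allowed, sitemaps


allowed, sitemaps = crawl_report(
    ROBOTS_TXT,
    ["https://example.com/", "https://example.com/admin/login"],
)
for url, ok in allowed.items():
    print(f"{'ALLOW' if ok else 'BLOCK'}  {url}")
print("Sitemaps:", sitemaps)
```

A local check like this only reflects how Python's parser reads the rules; Google Search Console remains the authoritative view of how Googlebot actually crawled and indexed the site.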