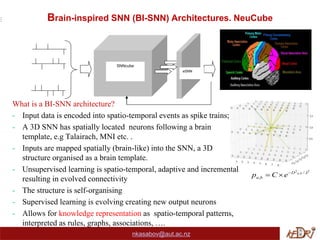

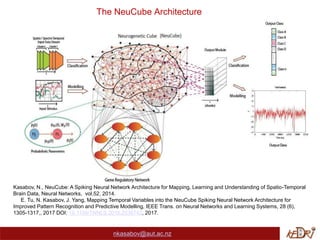

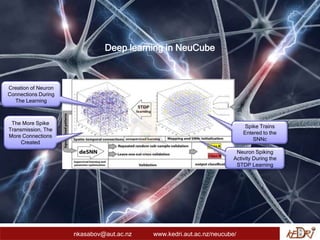

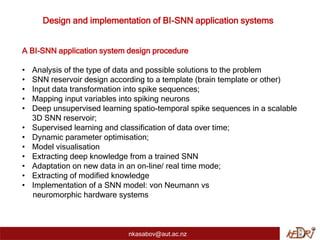

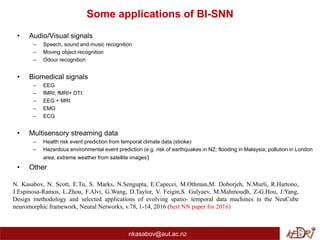

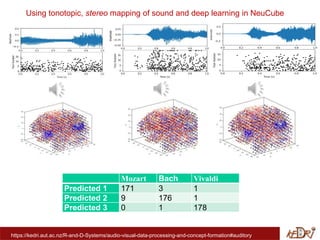

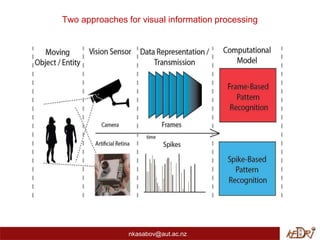

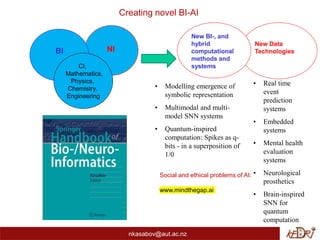

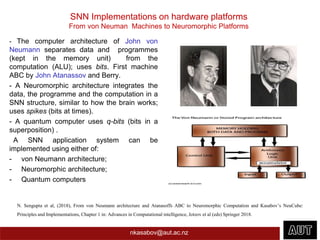

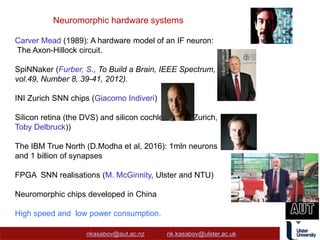

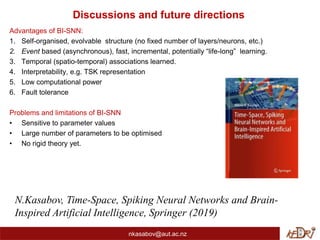

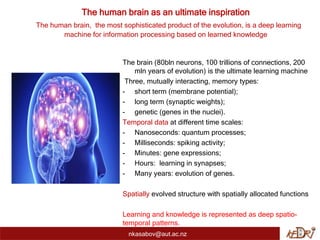

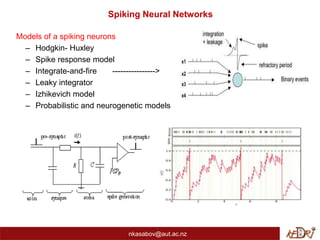

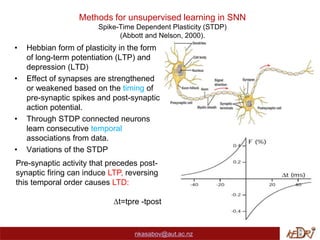

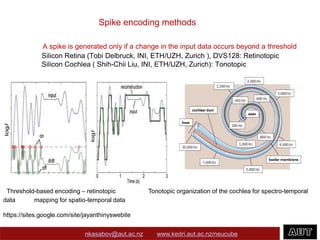

The document discusses brain-inspired computation based on spiking neural networks (SNNs), emphasizing the human brain's learning and memory mechanisms. It details architectures and models for SNNs, including unsupervised and supervised learning methods, with applications ranging from biomedical signals to environmental prediction. It also covers the advantages and limitations of SNNs and outlines current advances in neuromorphic hardware systems.

![Methods for supervised learning in SNN](https://image.slidesharecdn.com/brain-inspiredcomputaionbasedonsnn-220602110526-7ce00b18/85/Brain-Inspired-Computation-based-on-Spiking-Neural-Networks-7-320.jpg)

Rank order (RO) learning rule (Thorpe et al., 1998)

$$\Delta w_{ji} = m^{\mathrm{order}(j)}$$

$$u_i(t) = \begin{cases} 0 & \text{if fired} \\ \sum_{j \mid f(j) < t} w_{ji}\, m_i^{\mathrm{order}(j)} & \text{else} \end{cases}$$

$$\mathrm{PSP}_{\max}(T) = \sum m^{\mathrm{order}(j(t))}\, w_{ji}(t), \quad j = 1, 2, \ldots, k; \; t = 1, 2, \ldots, T$$

$$\mathrm{PSP}_{Th} = C \cdot \mathrm{PSP}_{\max}(T)$$

- Earlier spikes are more important (they carry more information).
- Early spiking can be achieved, depending on the parameter C.

nkasabov@aut.ac.nz
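The rank-order rule on this slide can be illustrated with a minimal Python sketch. It assumes a single output neuron, one training pattern, weights starting at zero, and ties in spike time broken by input index. The function names, the example spike times, and the value of `m` are hypothetical and do not come from the slides. The slide's sum over time steps is collapsed here to a single pass over one pattern.

```python
def rank_order(spike_times):
    """Return order[j]: rank of input j by first-spike time (0 = earliest)."""
    # Ties are broken by input index; this tie-break is an assumption.
    ranked = sorted(range(len(spike_times)), key=lambda j: (spike_times[j], j))
    order = [0] * len(spike_times)
    for rank, j in enumerate(ranked):
        order[j] = rank
    return order


def train_weights(spike_times, m):
    """One-pass RO learning from zero weights: w_j = m ** order(j)."""
    return [m ** r for r in rank_order(spike_times)]


def psp_max(weights, spike_times, m):
    """PSP reached once the whole pattern has arrived: sum of m**order(j) * w_j."""
    order = rank_order(spike_times)
    return sum(m ** order[j] * weights[j] for j in range(len(weights)))


def spikes_until_fire(weights, spike_times, m, threshold):
    """Count input spikes needed before the accumulated PSP reaches the threshold."""
    order = rank_order(spike_times)
    arrival = sorted(range(len(order)), key=order.__getitem__)  # earliest first
    u = 0.0
    for n, j in enumerate(arrival, start=1):
        u += weights[j] * m ** order[j]
        if u >= threshold:
            return n
    return None  # threshold never reached for this pattern


pattern = [3.0, 1.0, 2.0, 4.0]  # hypothetical first-spike times of 4 inputs
m = 0.5                         # hypothetical modulation factor
w = train_weights(pattern, m)
peak = psp_max(w, pattern, m)
for c in (0.7, 0.9, 1.0):
    print(c, spikes_until_fire(w, pattern, m, c * peak))
```

With these assumed values, the neuron fires after the first input spike for C = 0.7, after two spikes for C = 0.9, and only after all four for C = 1.0, which is the sense in which a smaller C yields earlier spiking.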