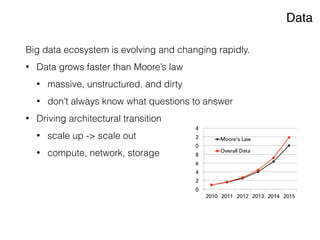

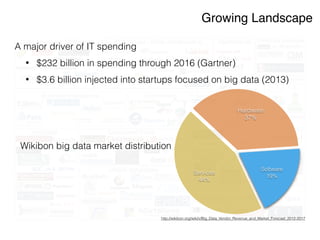

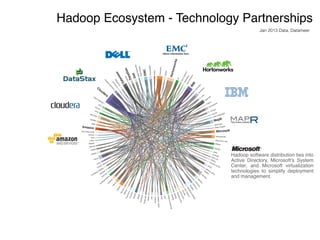

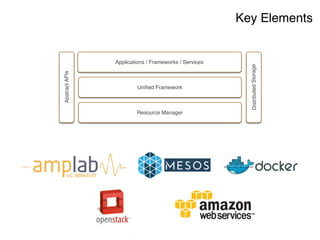

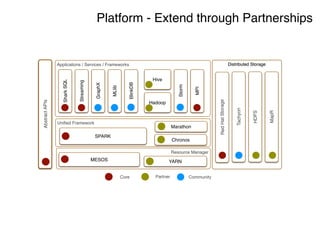

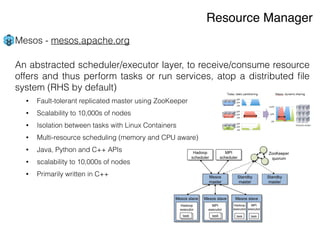

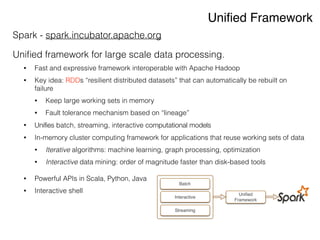

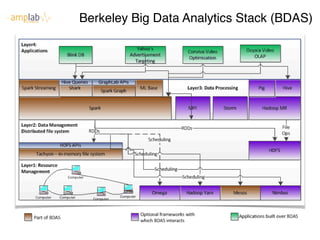

The document discusses the rapidly evolving big data ecosystem. It highlights the challenges of data processing and the architectural shift from scaling up (adding resources to a single machine) to scaling out (distributing work across a cluster of machines). It emphasizes the significant IT spending on big data technologies and the complexity of extracting business value from diverse data sources. It presents key technologies and frameworks, including Hadoop and Spark, as vital for managing and processing vast datasets efficiently.