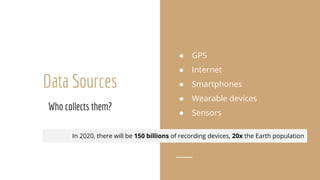

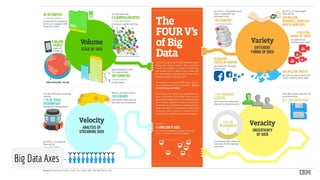

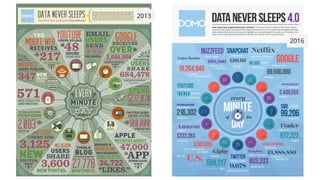

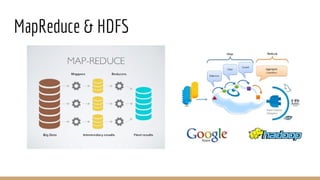

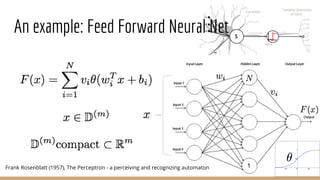

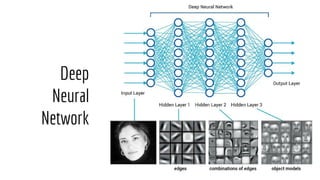

This document discusses big data and machine learning. It defines big data as large amounts of data that are analyzed by machines, and describes how that data increasingly comes from sources such as smartphones, sensors, and the Internet. It also discusses how machine learning allows computers to learn from large amounts of data without being explicitly programmed, and how this enables automation and new applications of artificial intelligence.