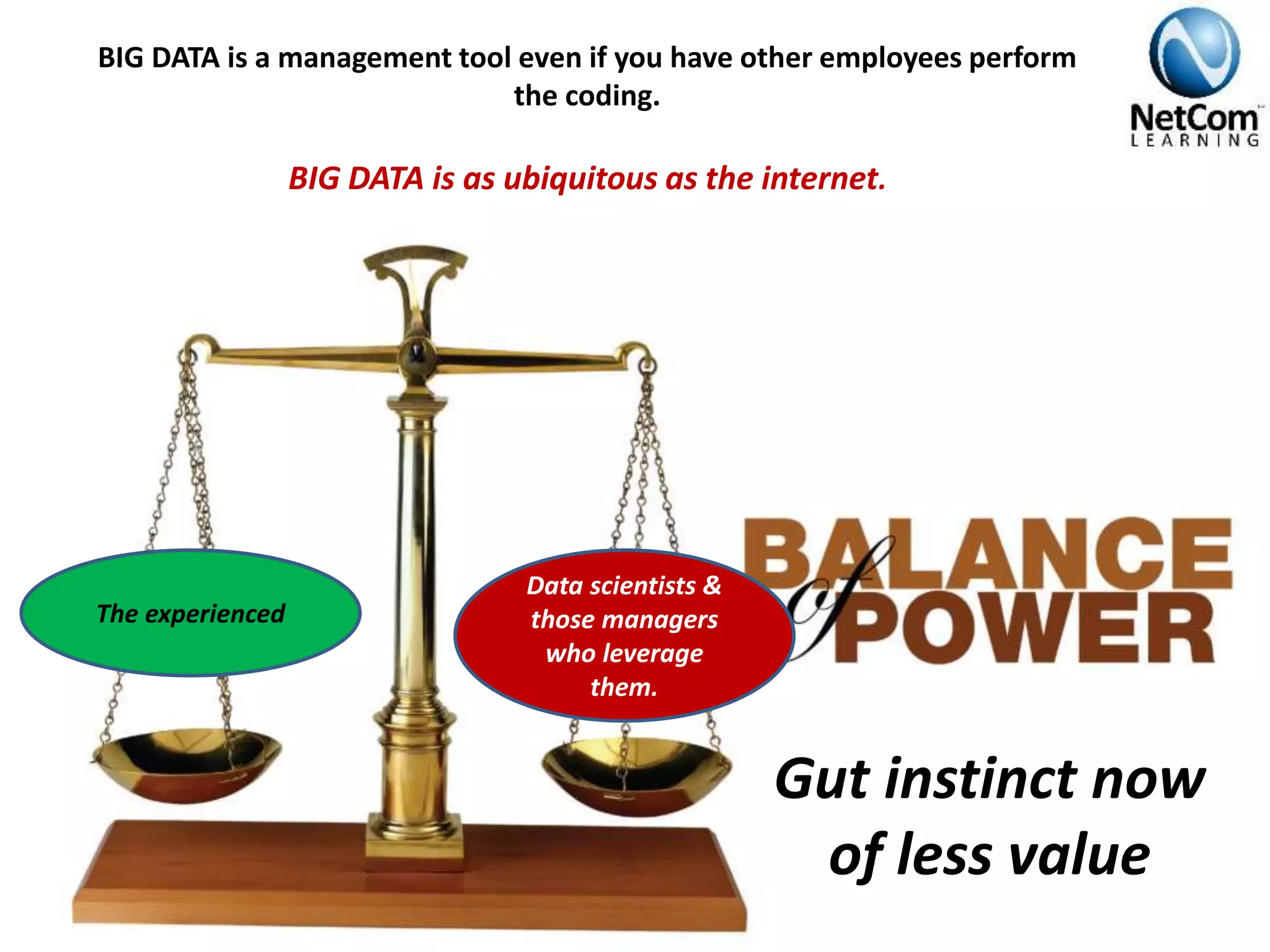

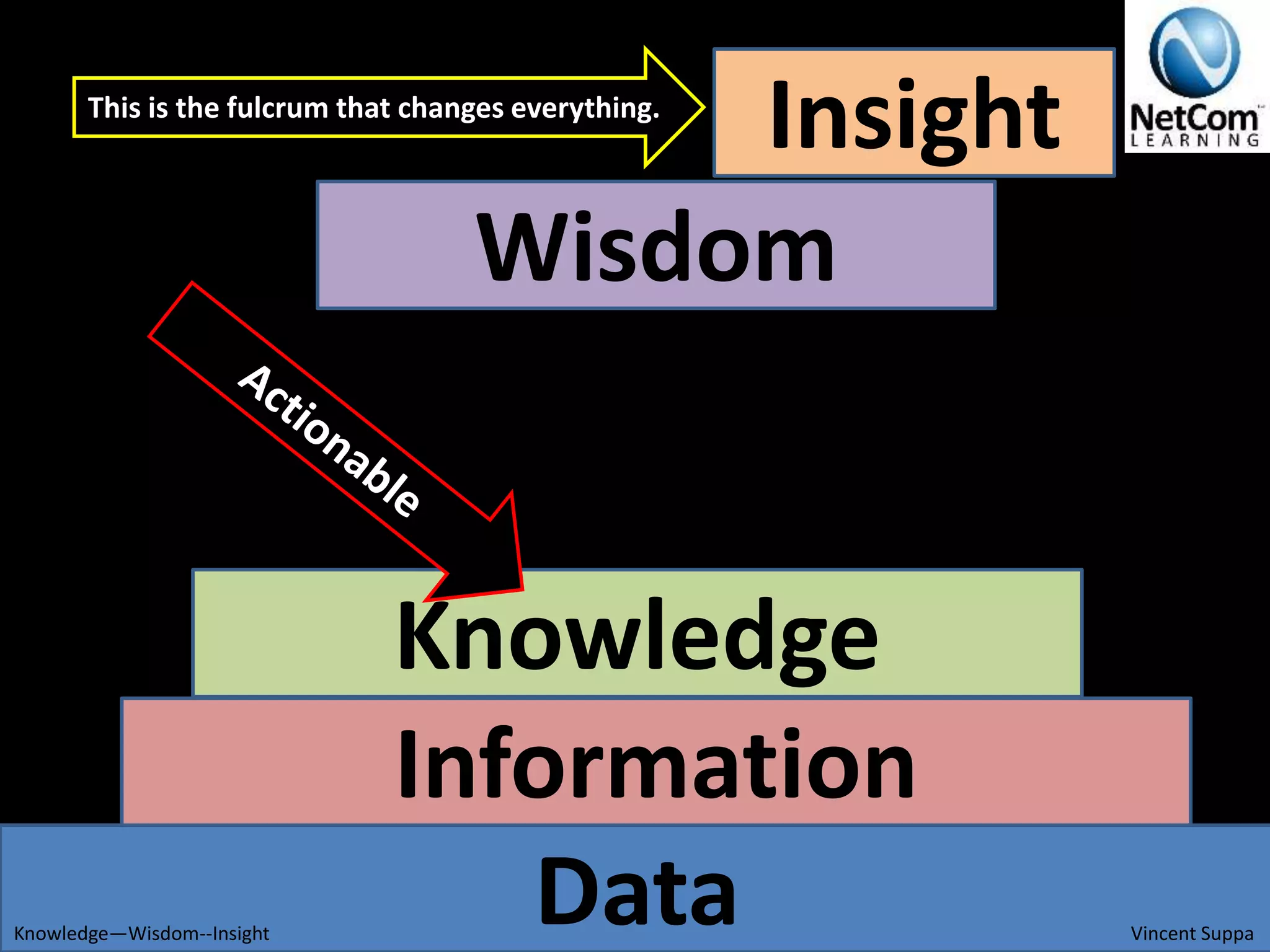

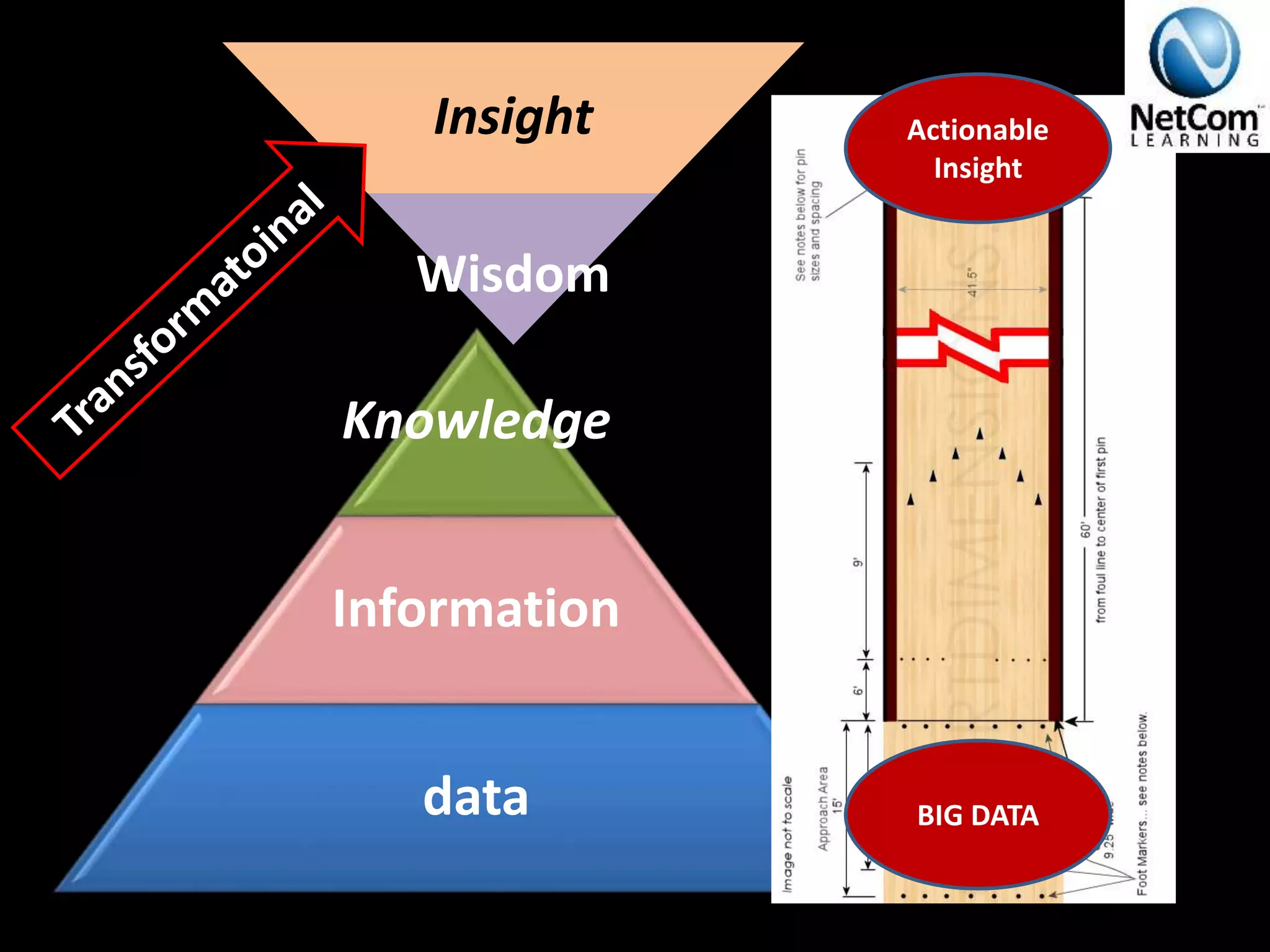

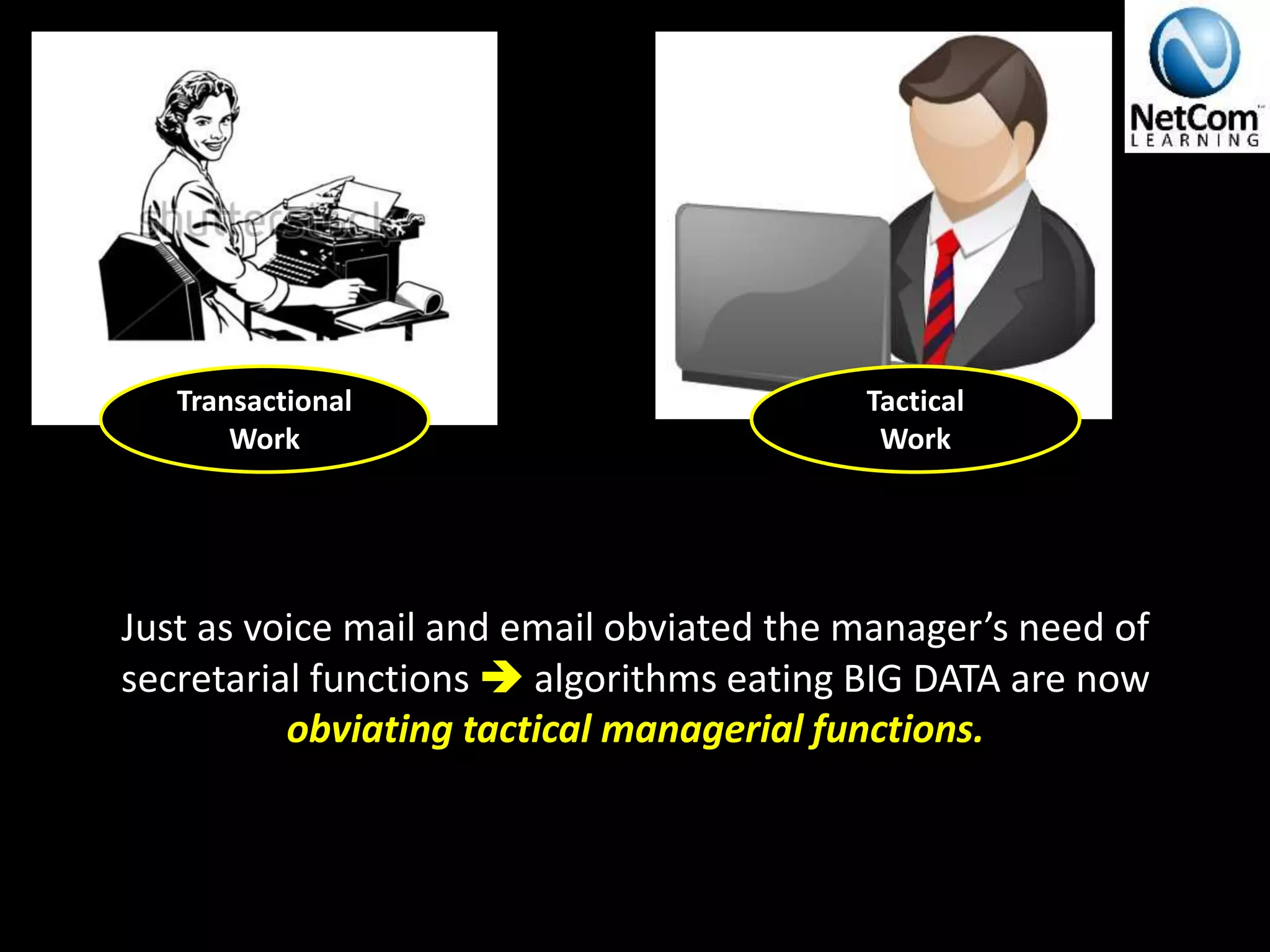

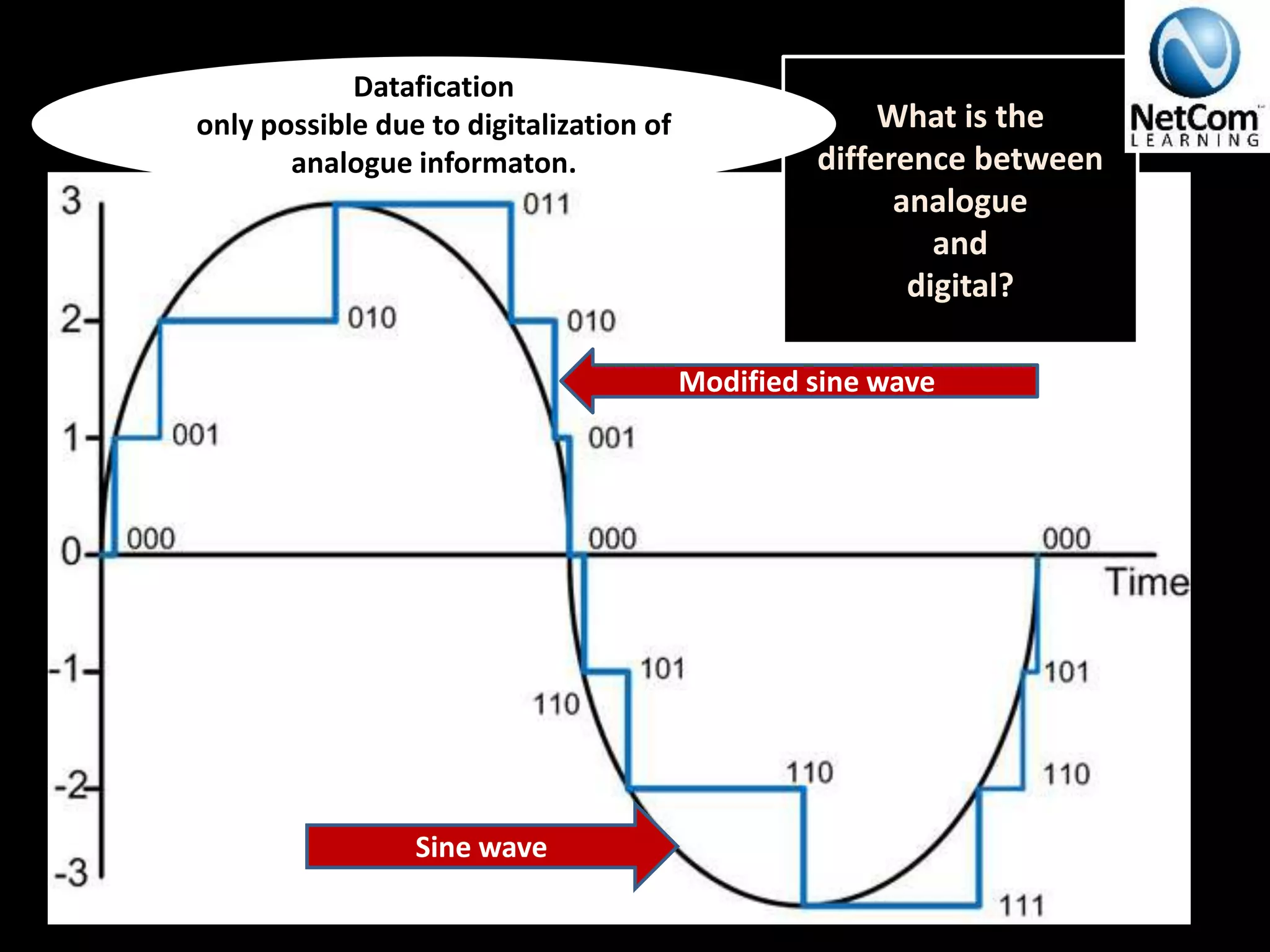

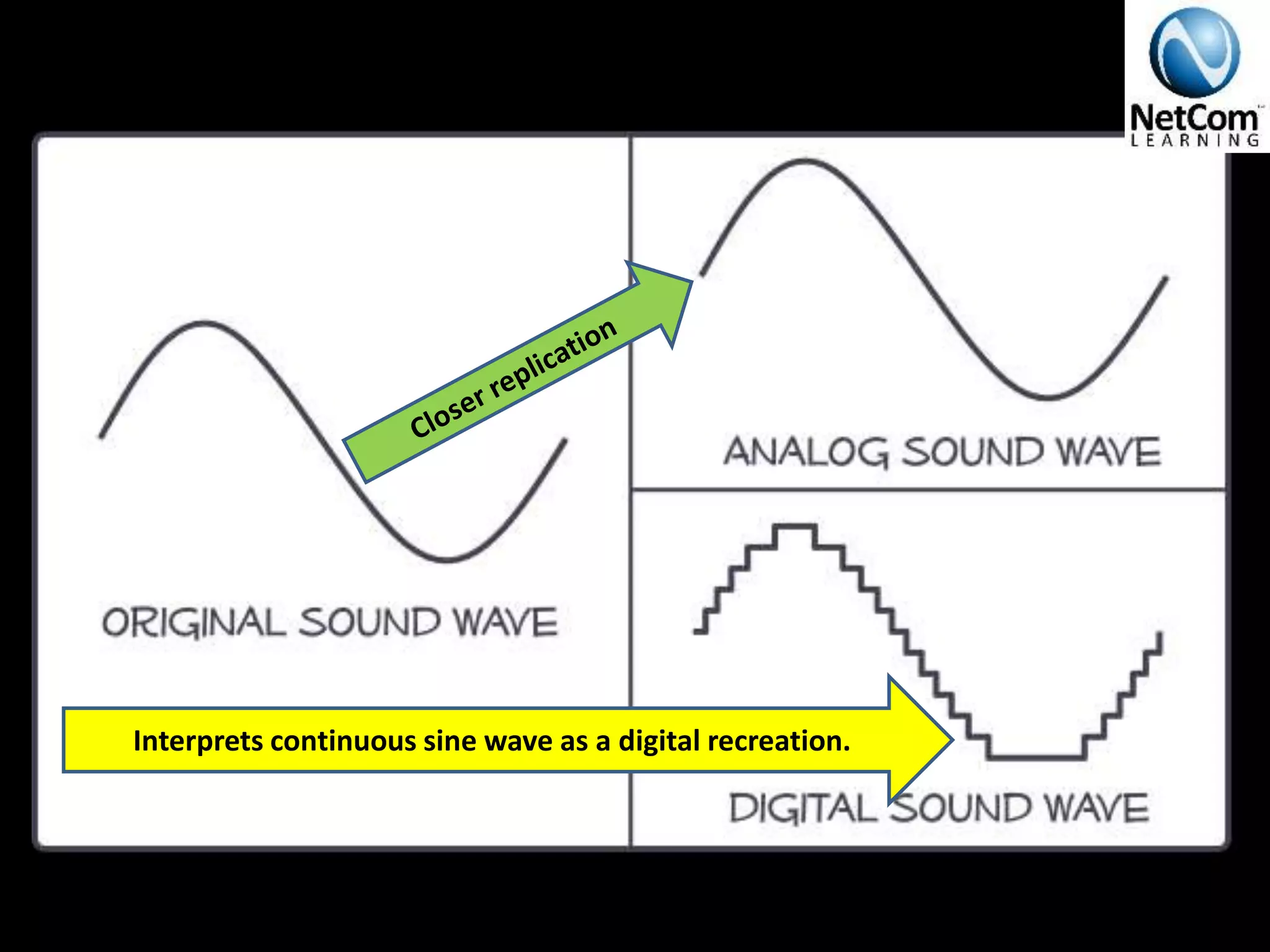

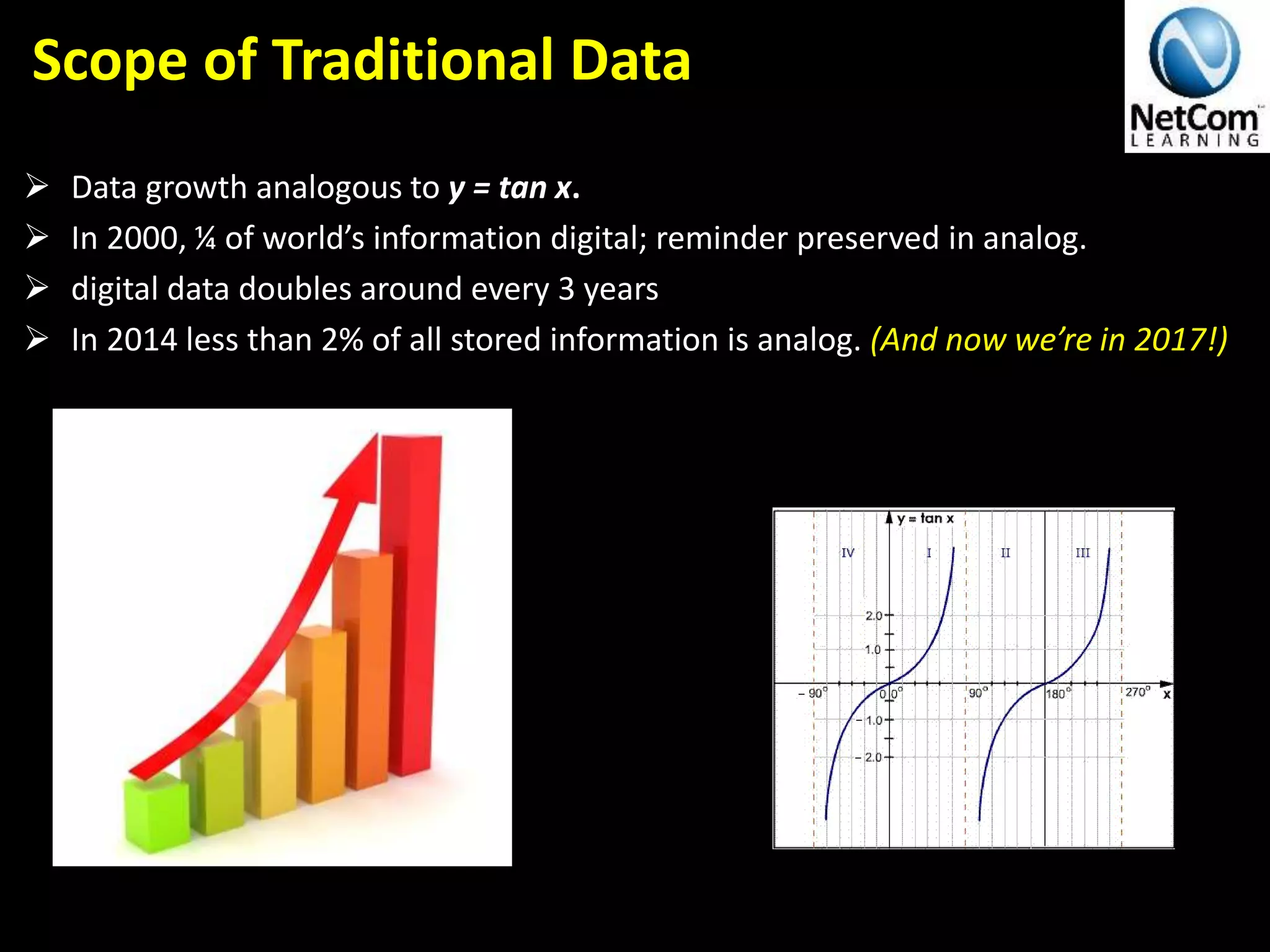

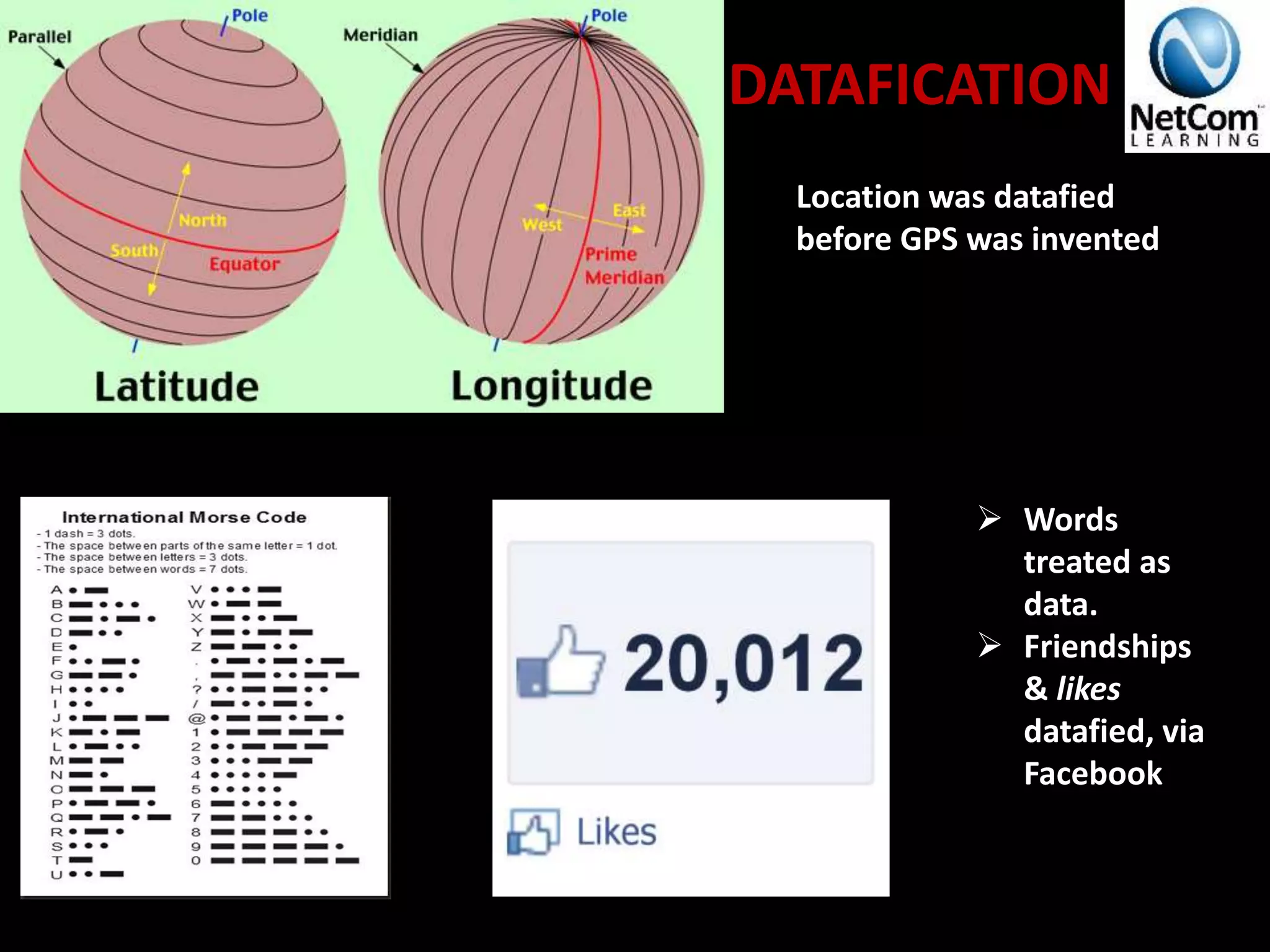

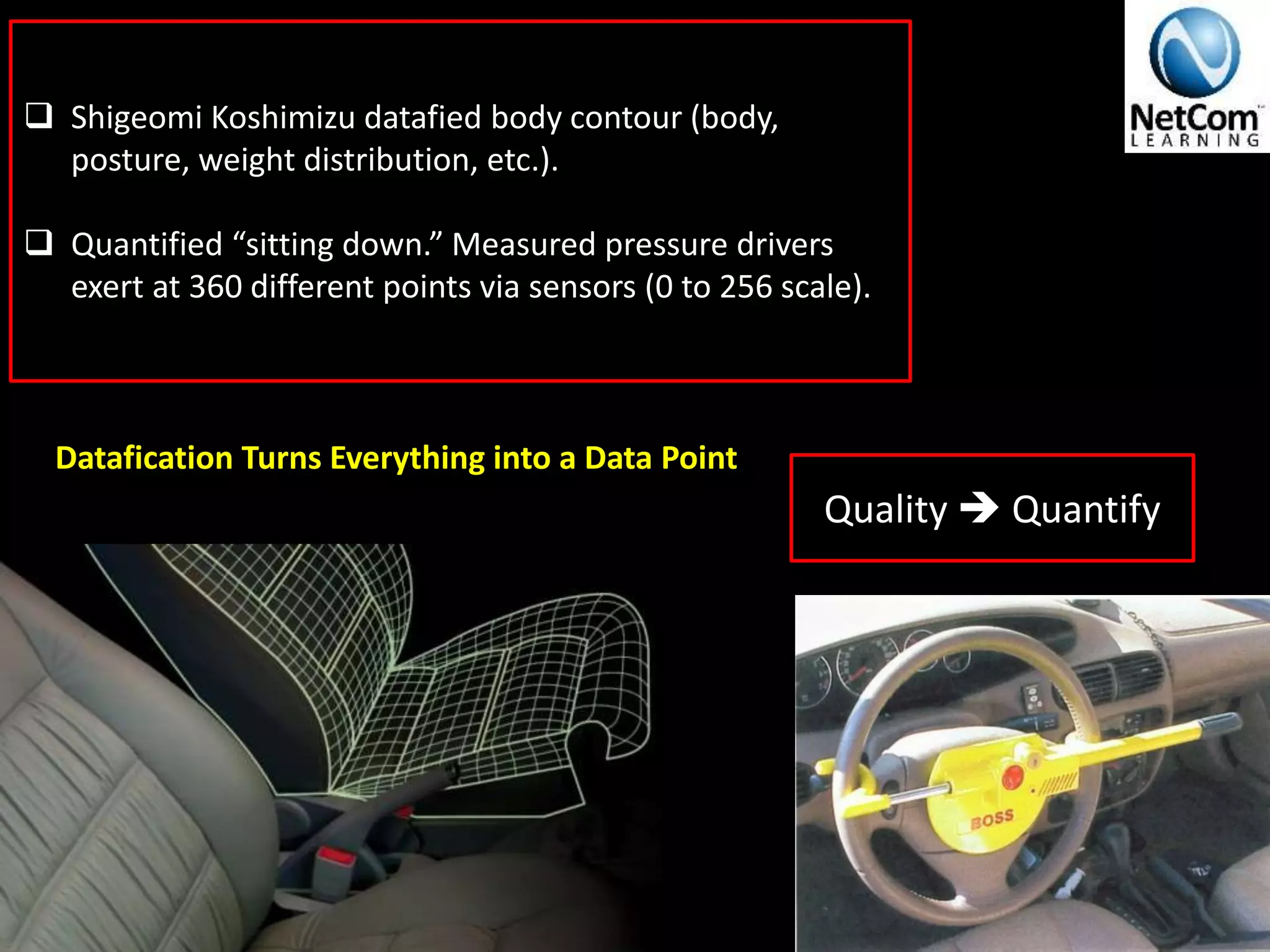

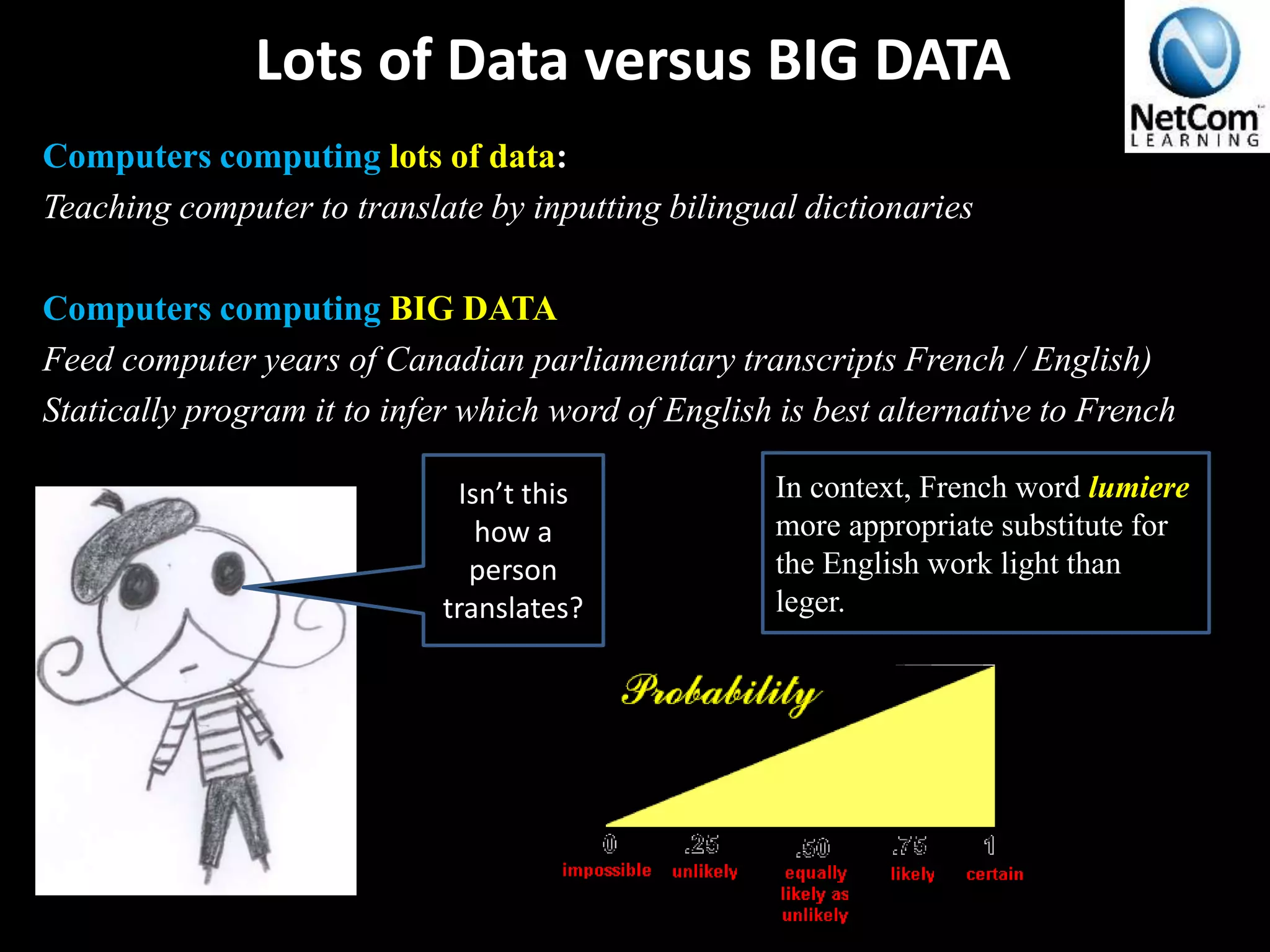

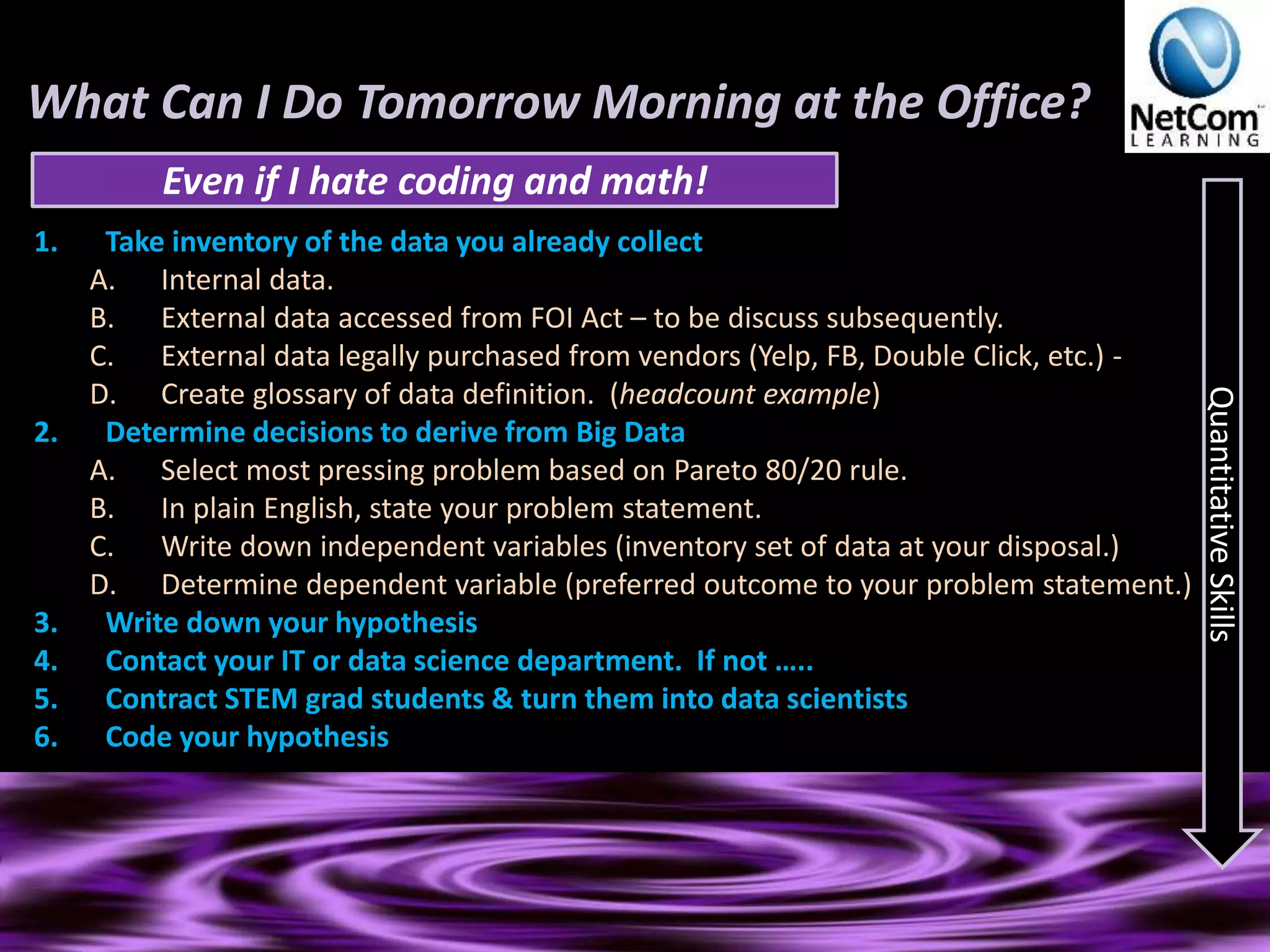

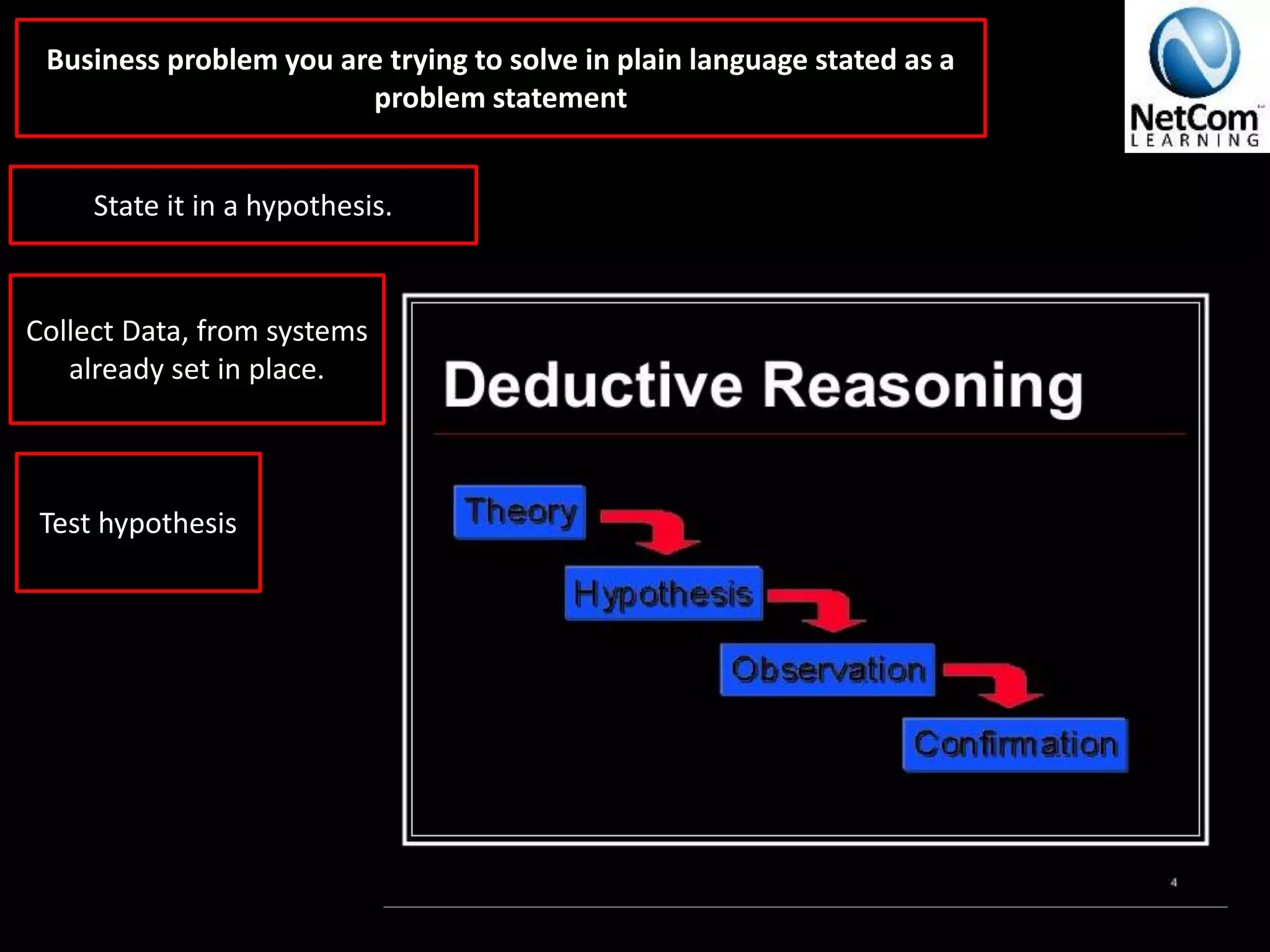

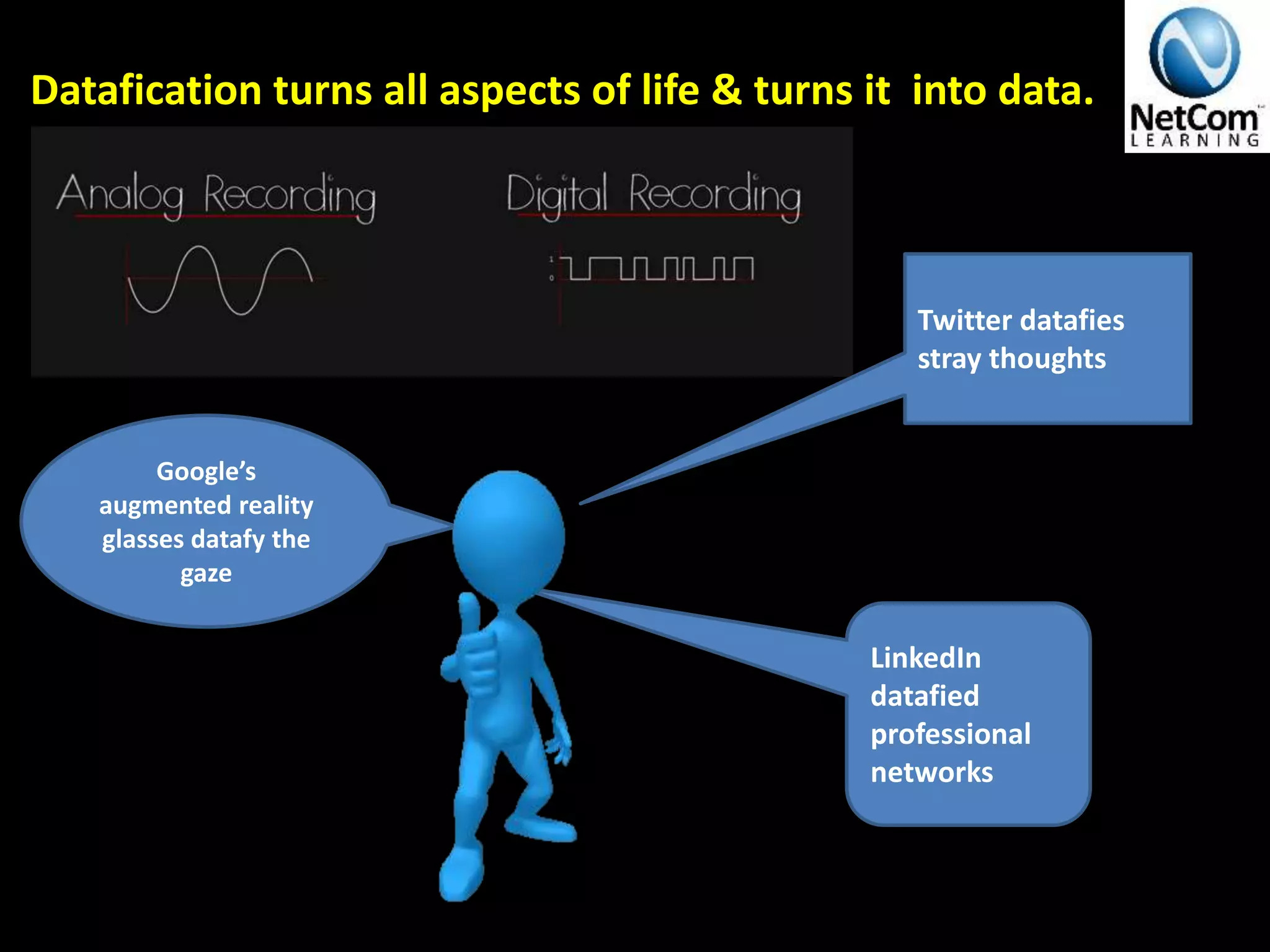

The document discusses big data and its role in transforming business decision-making and career paths. It introduces 'datafication', the process of converting aspects of life into data points that add value, and outlines the skills needed to leverage big data in the workplace. It also highlights the importance of distinguishing correlation from causation and offers practical steps for integrating big data into everyday business operations.