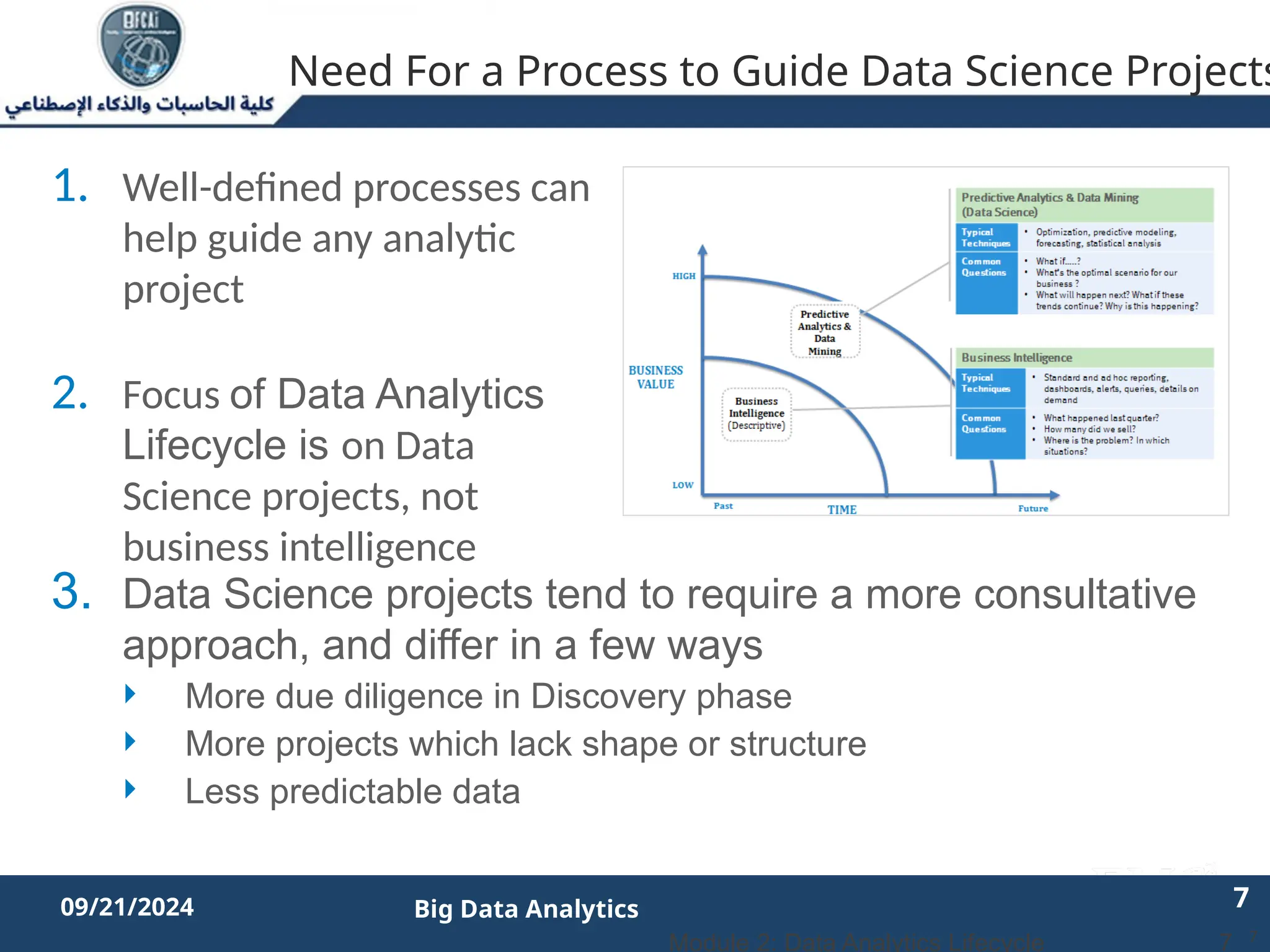

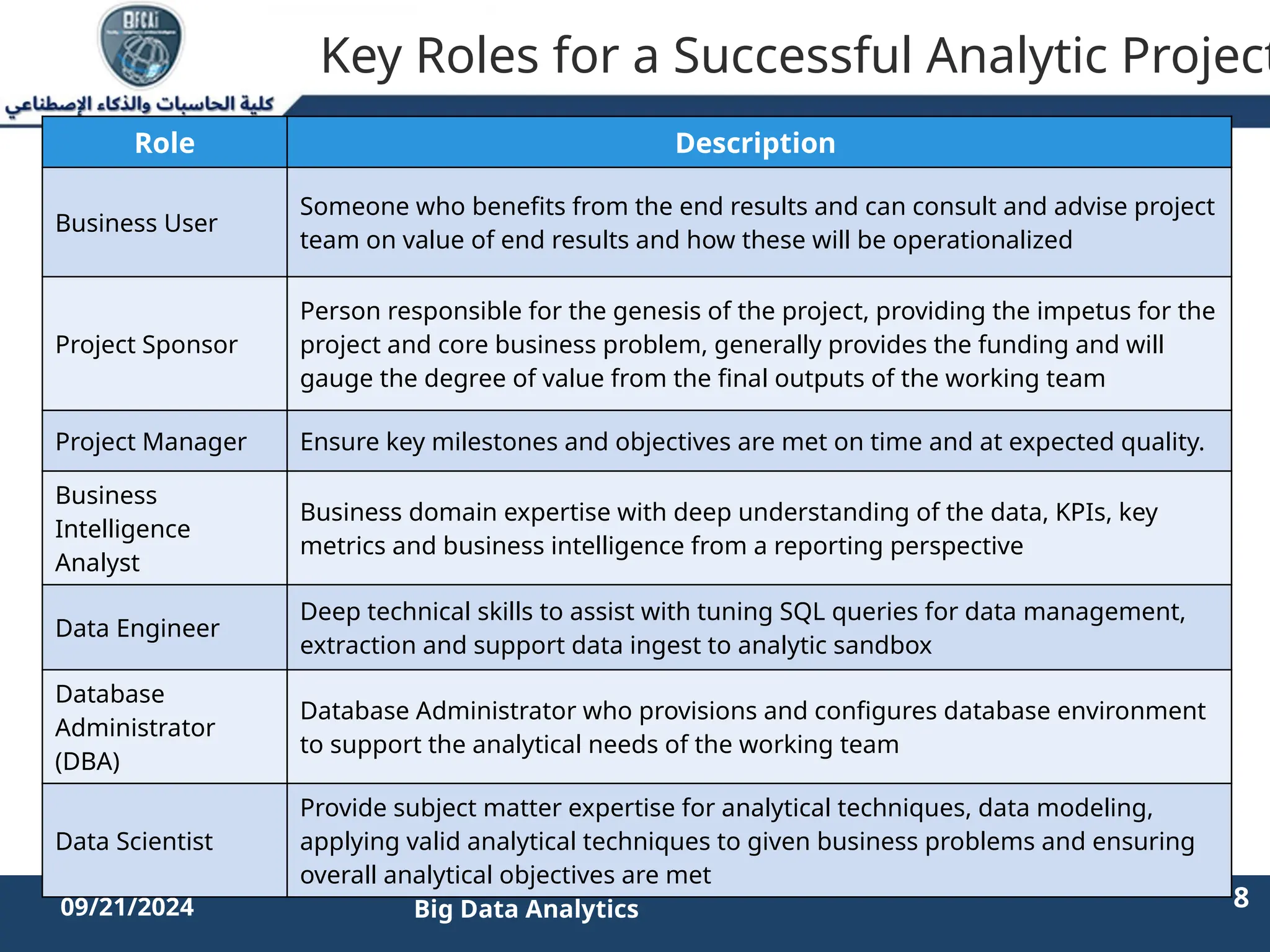

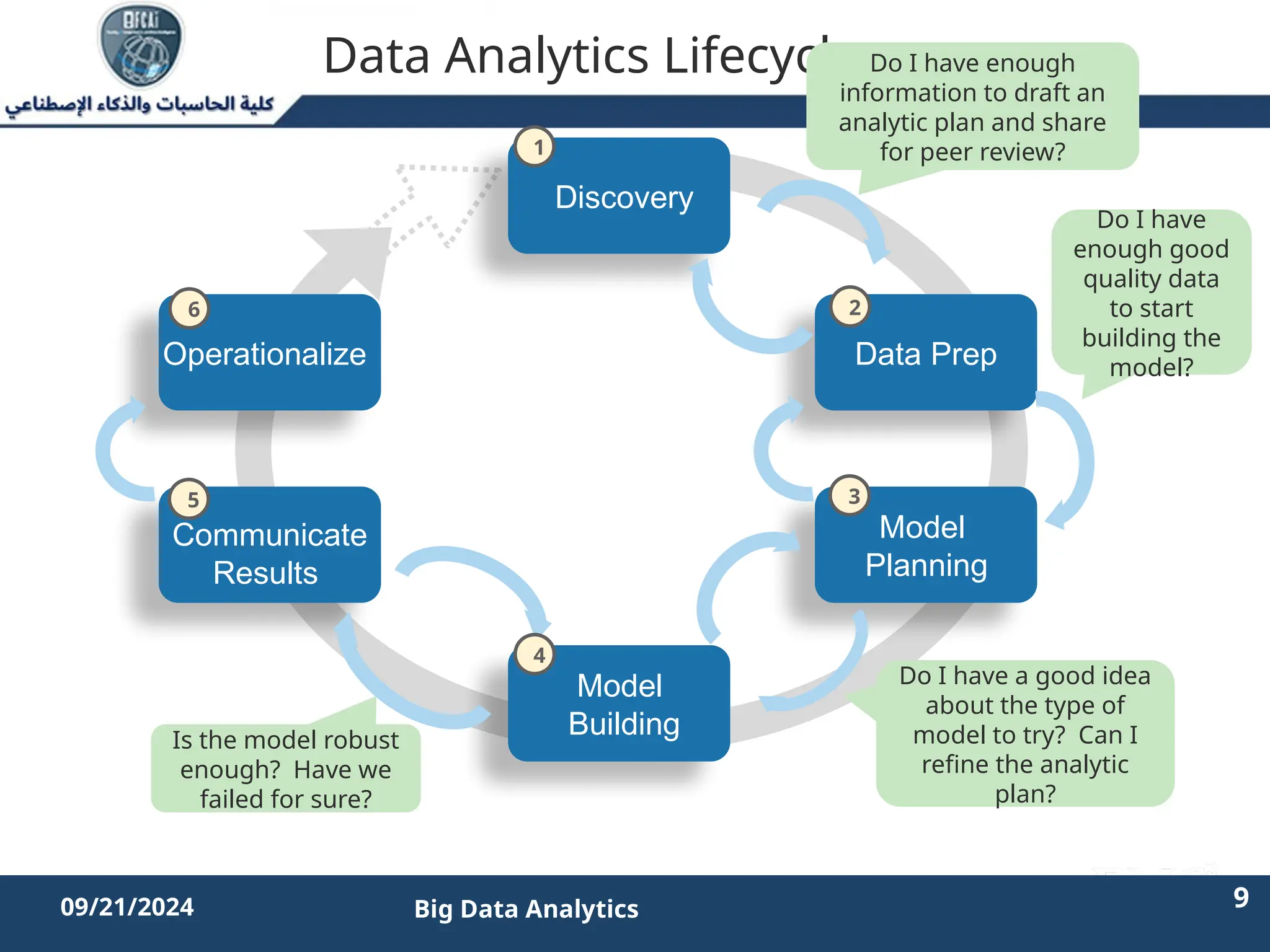

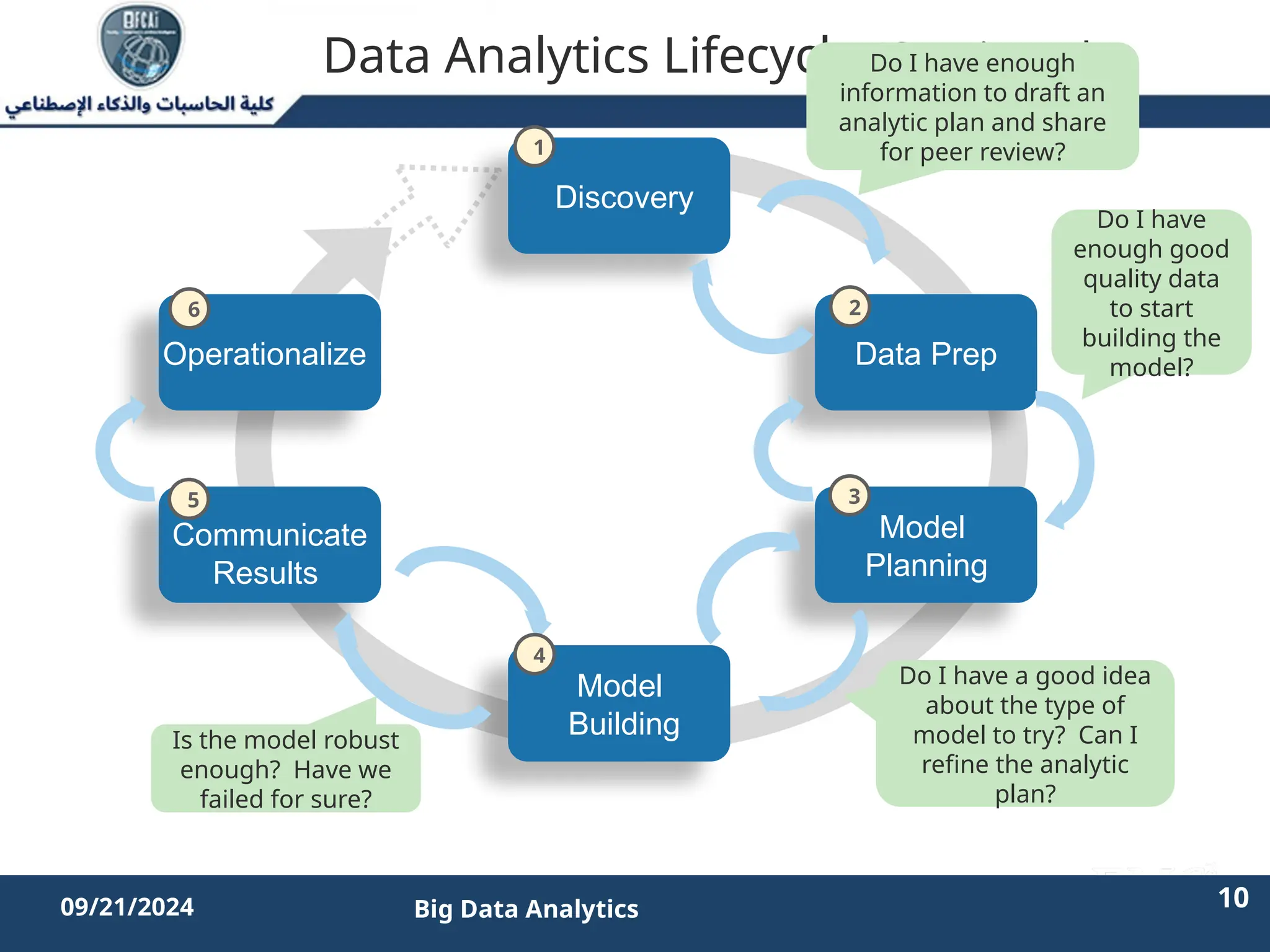

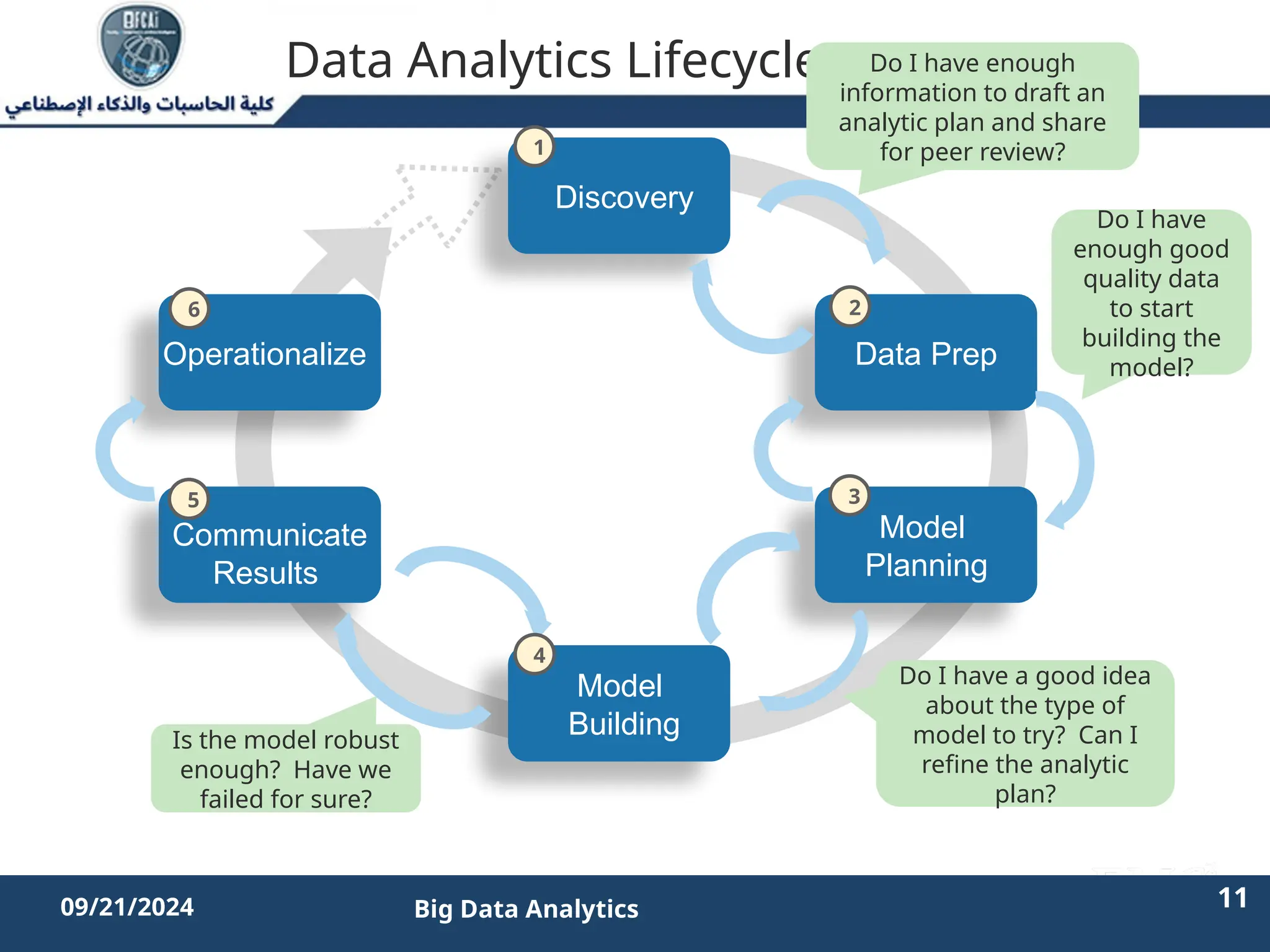

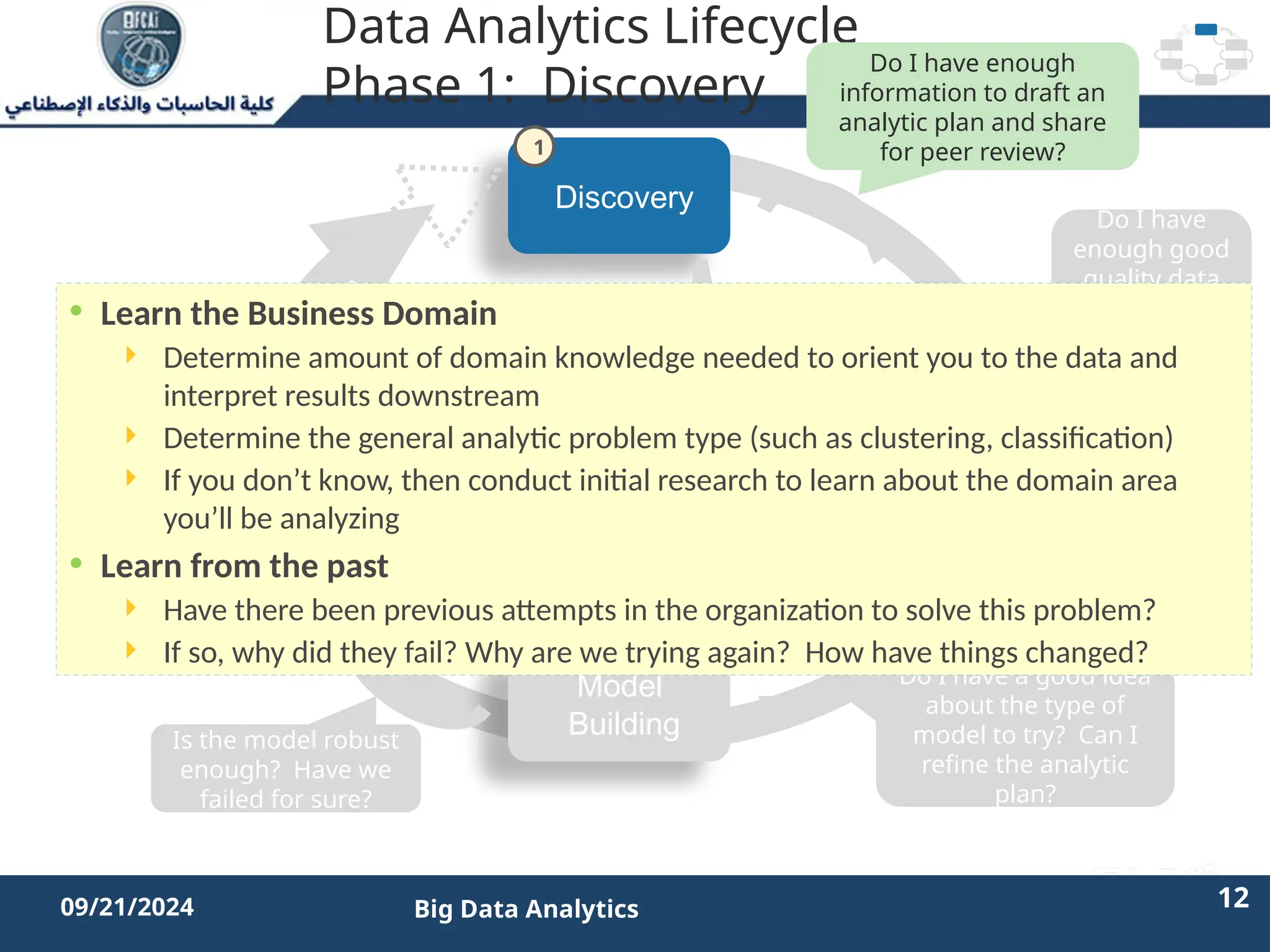

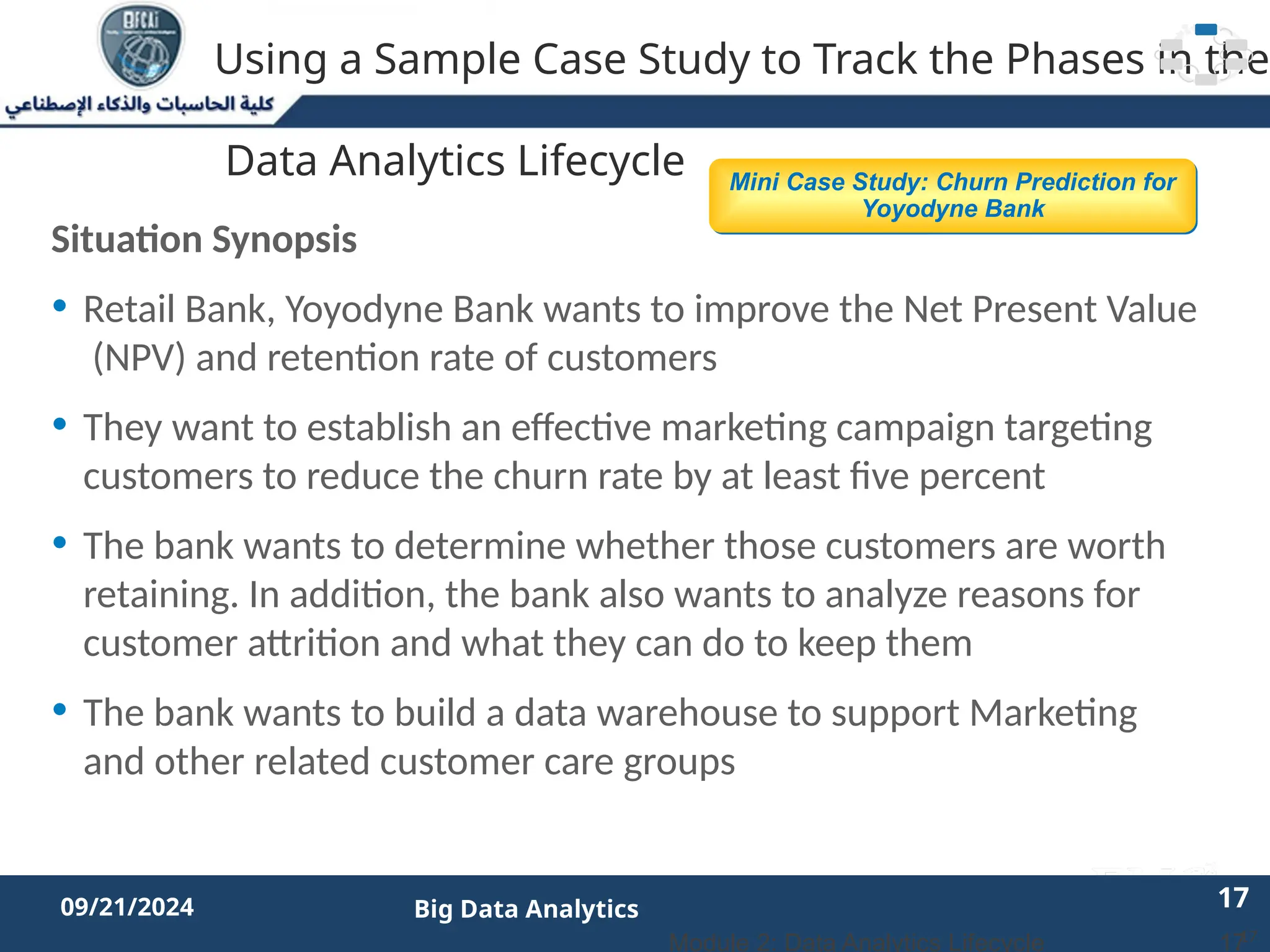

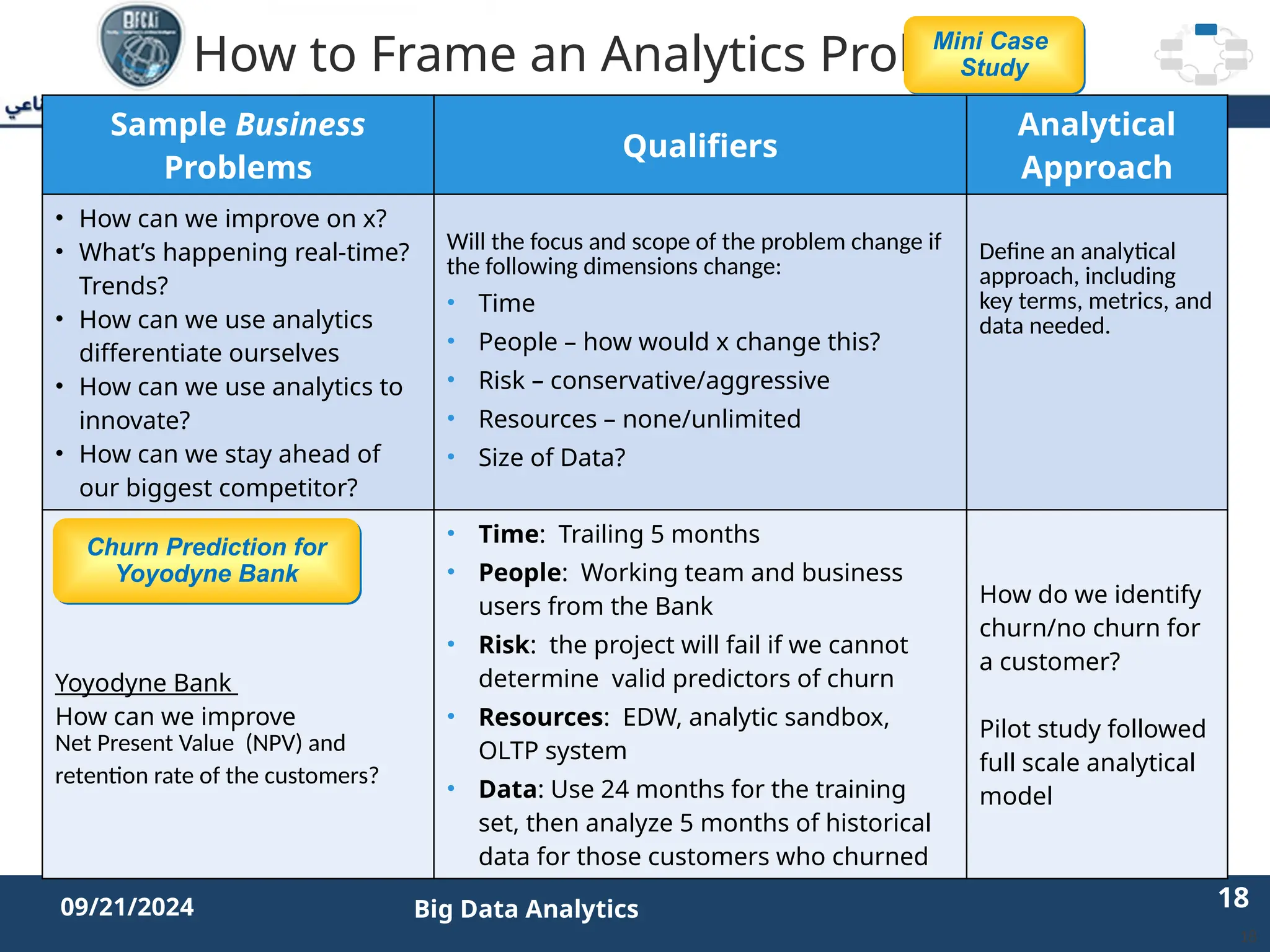

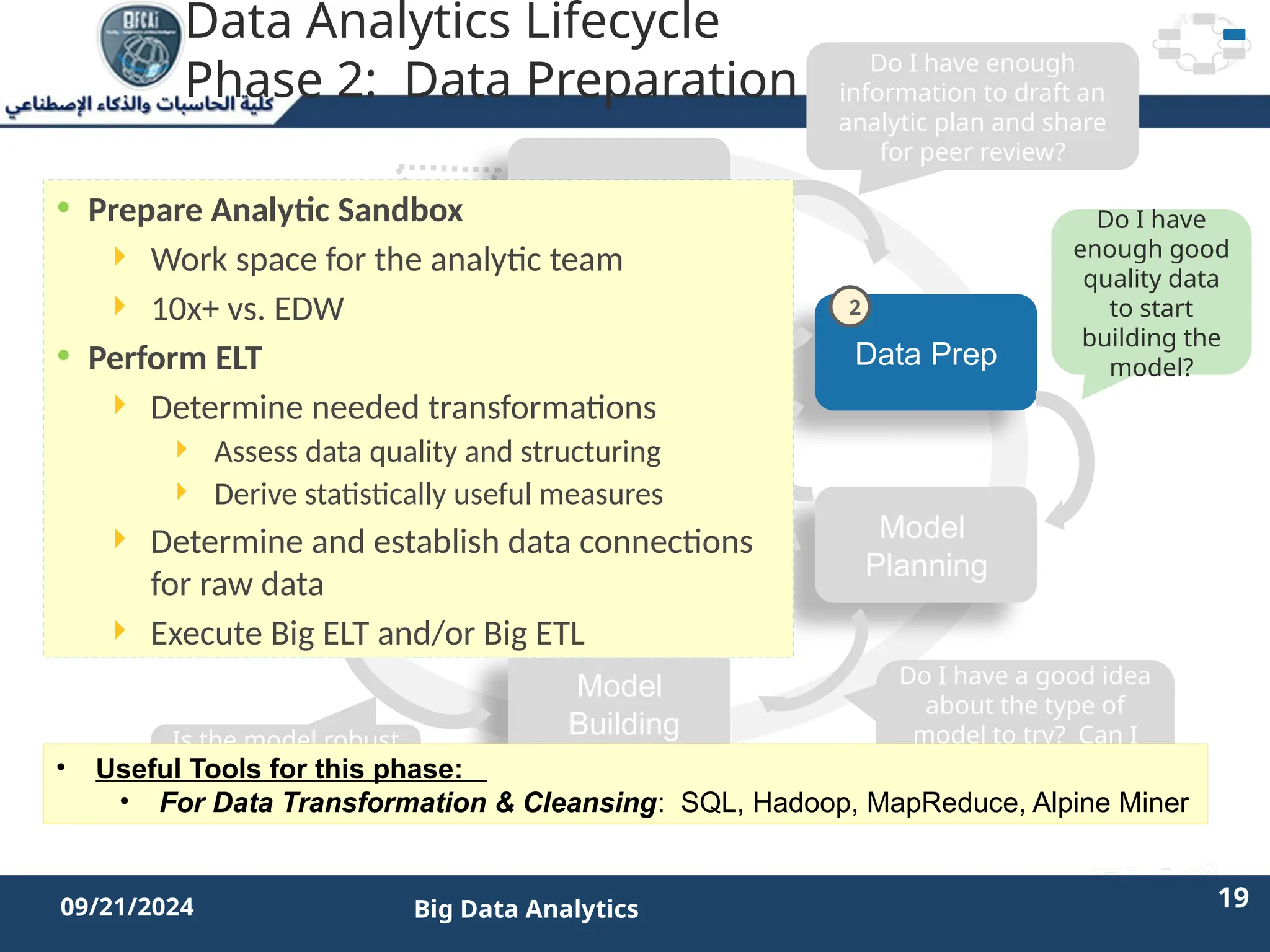

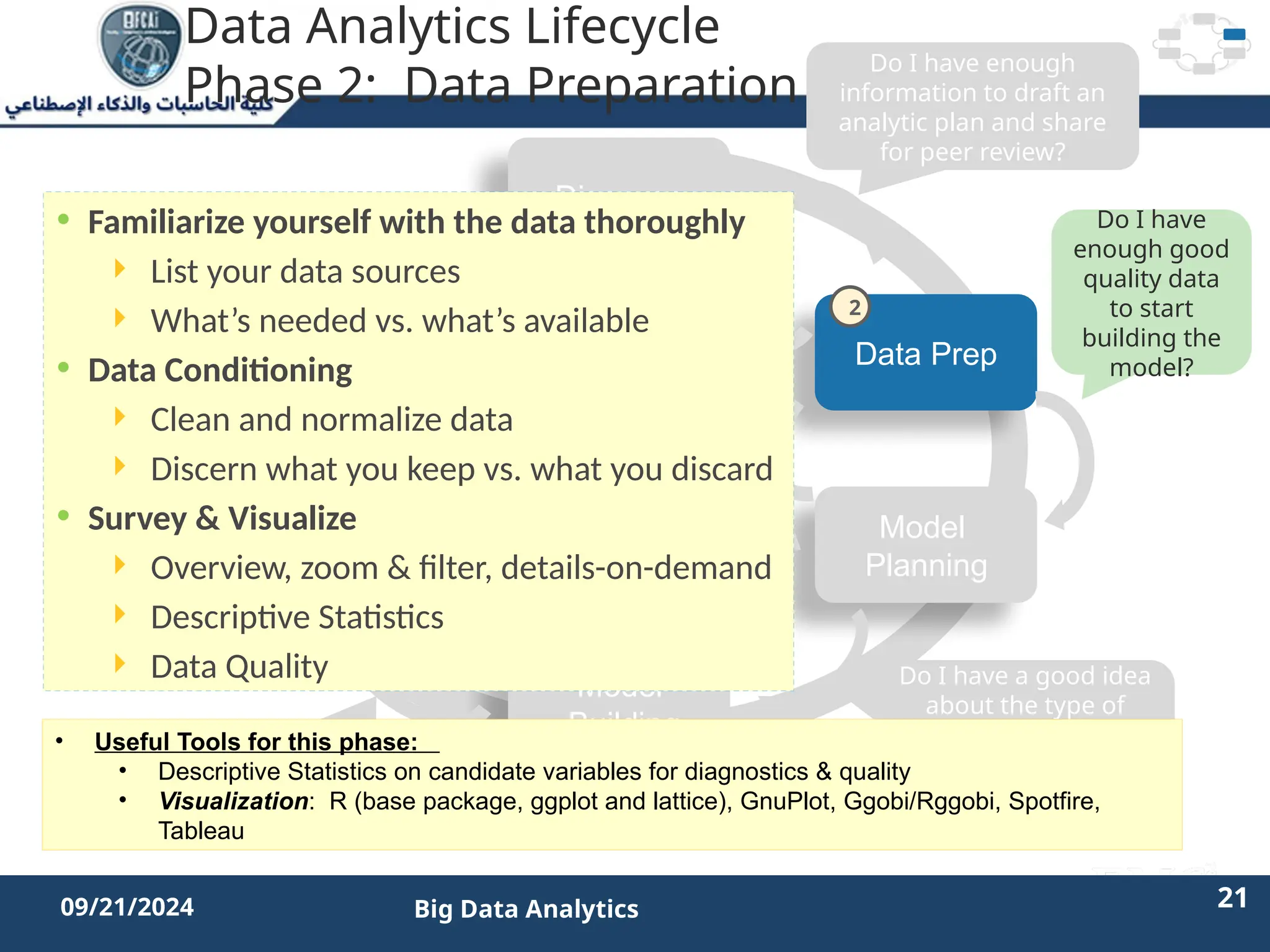

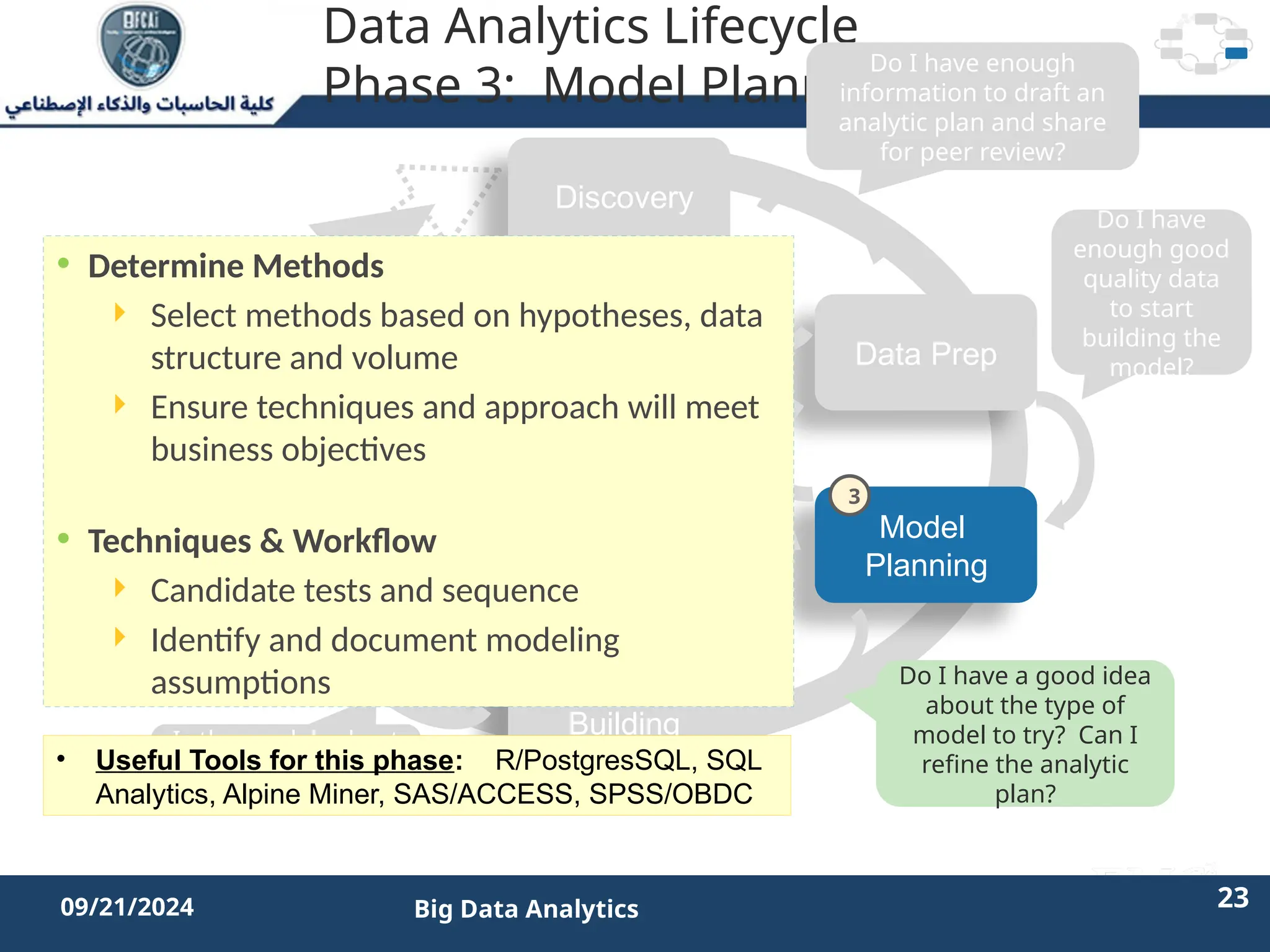

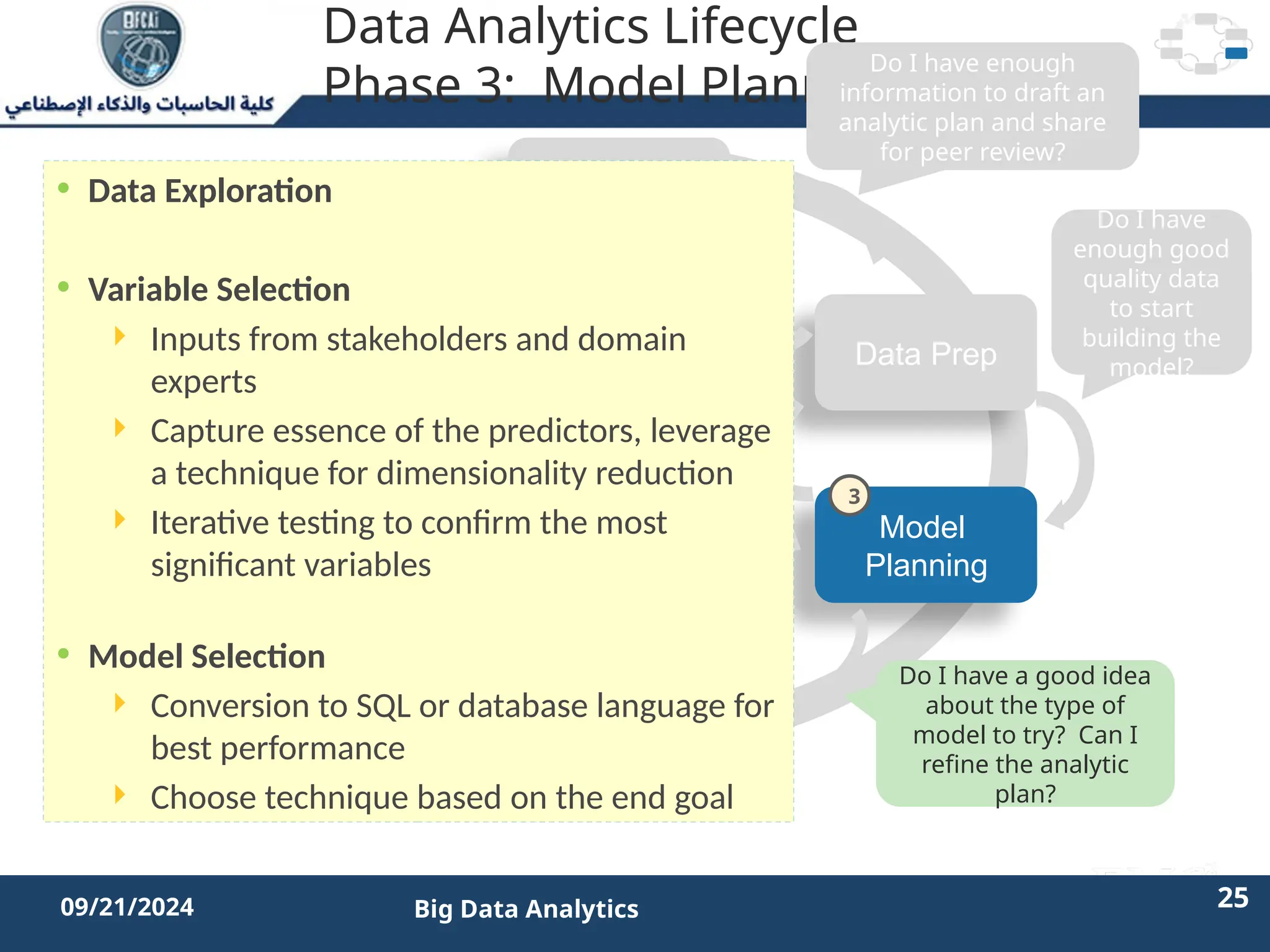

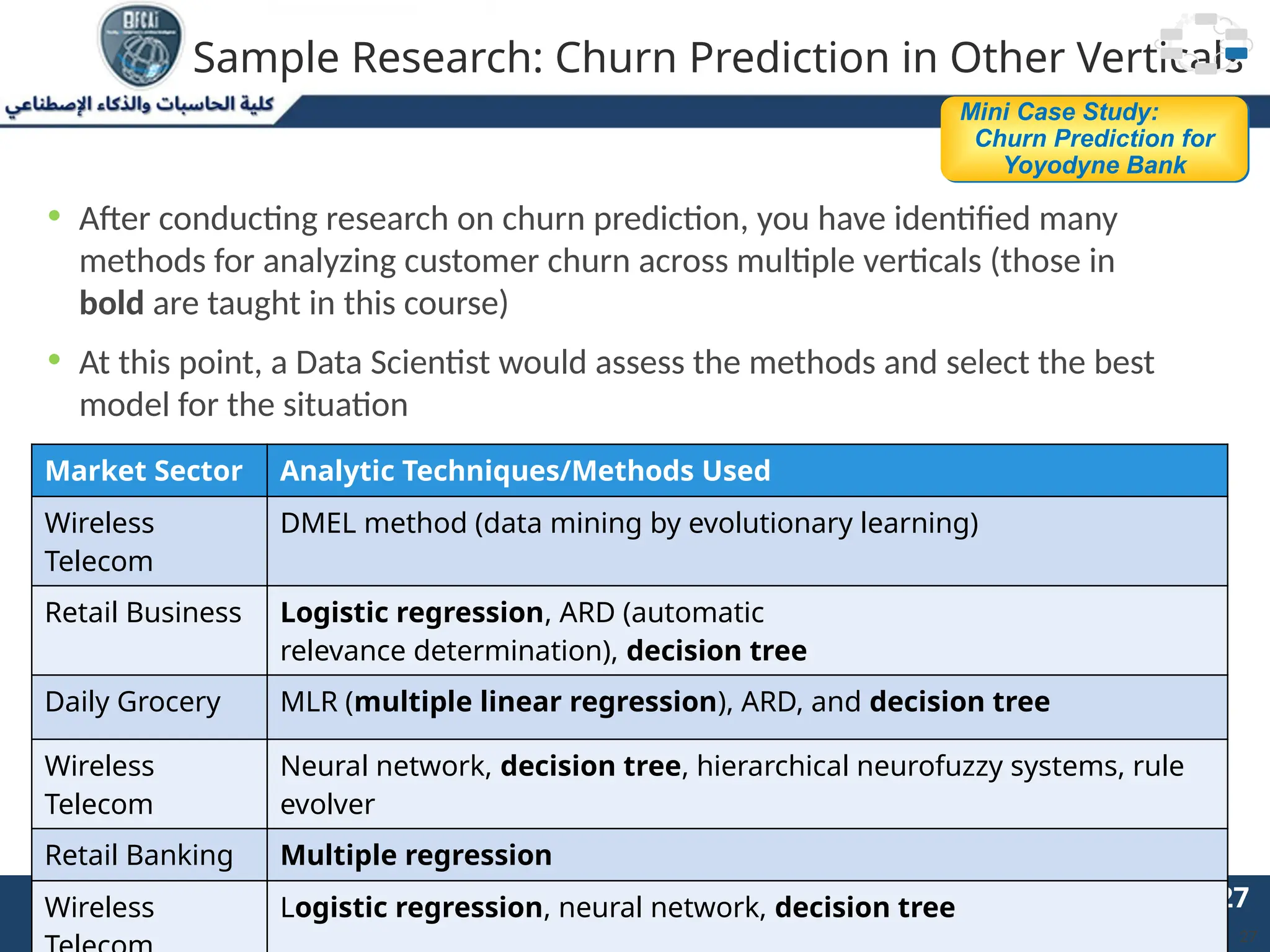

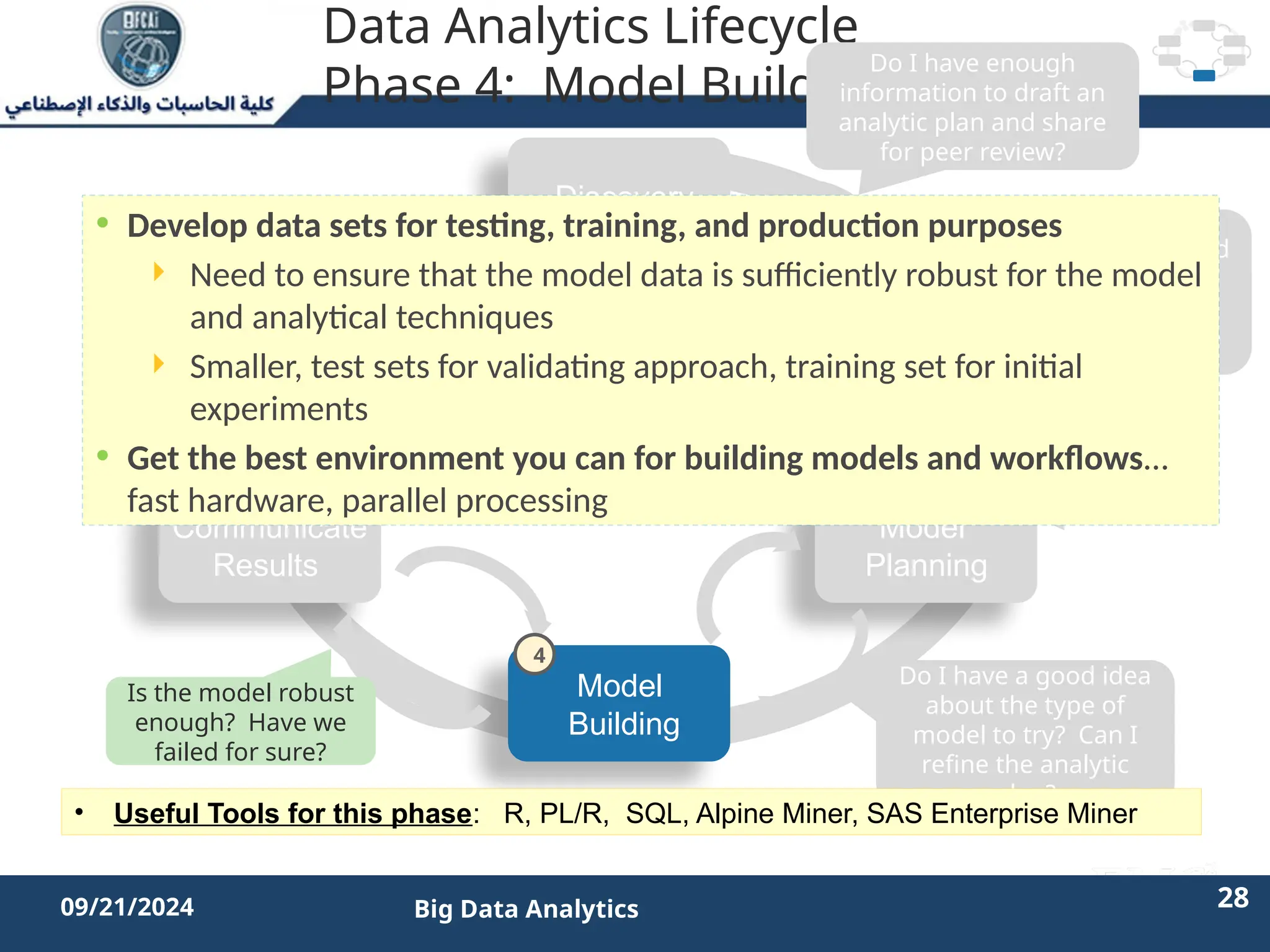

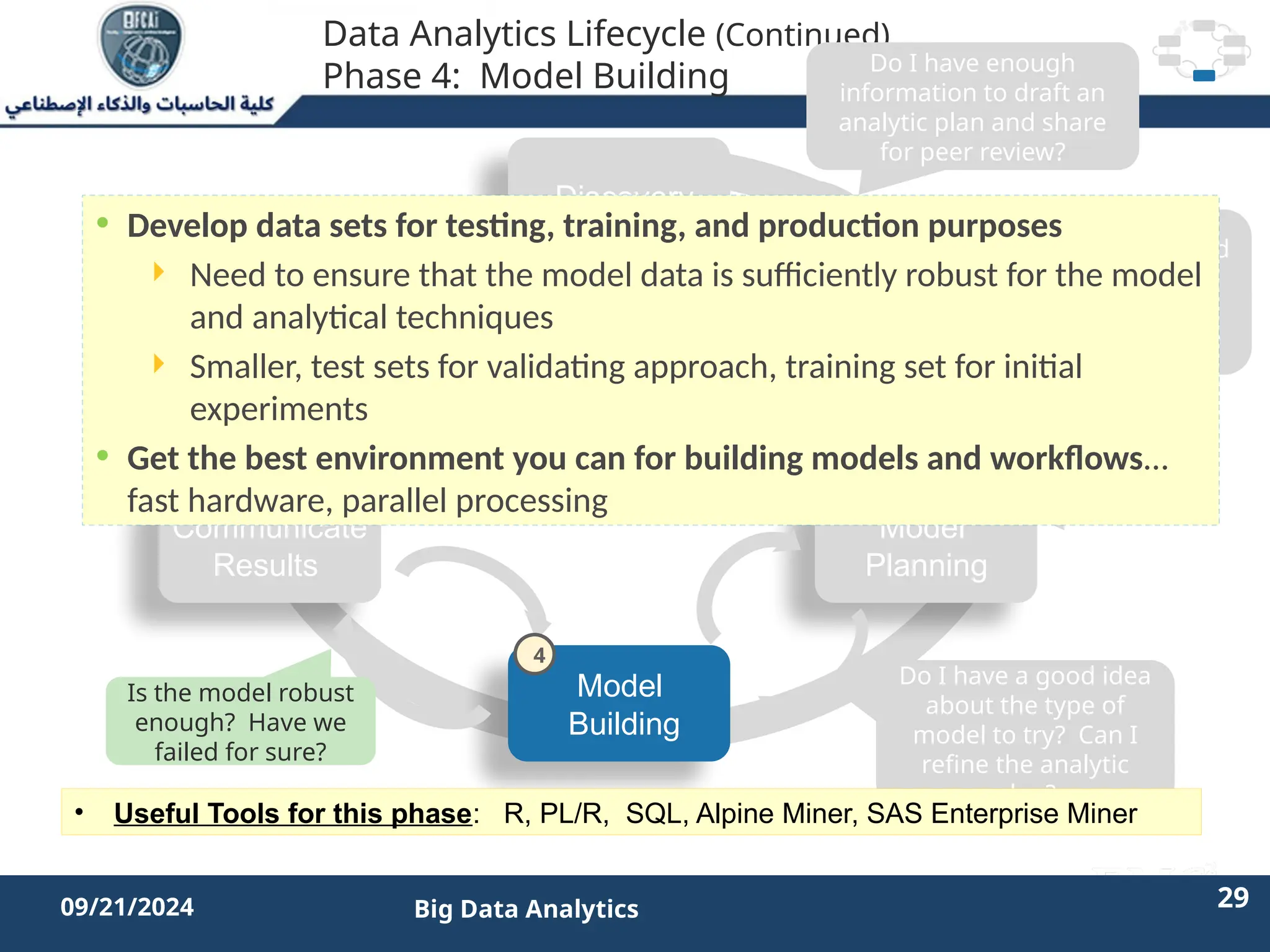

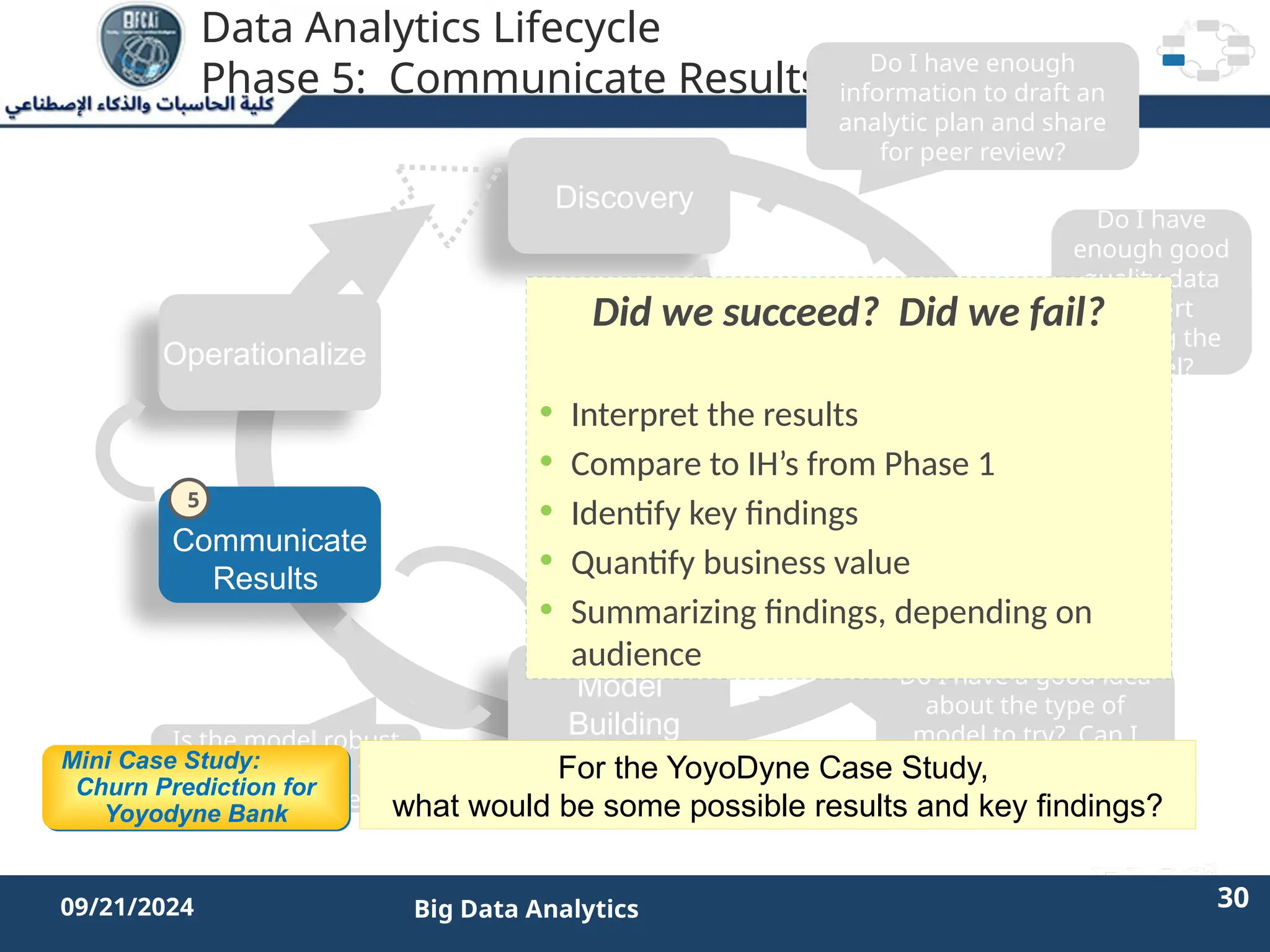

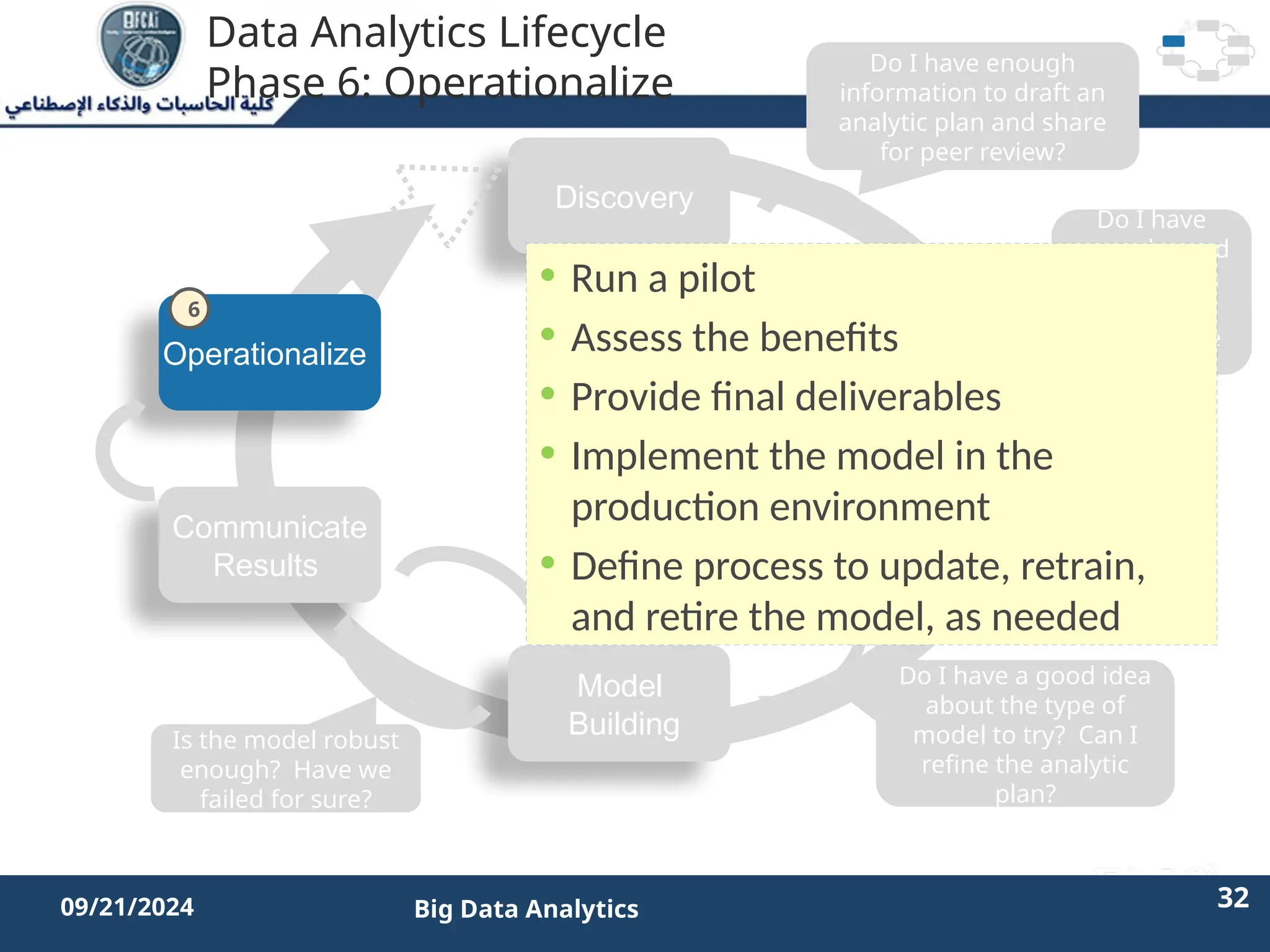

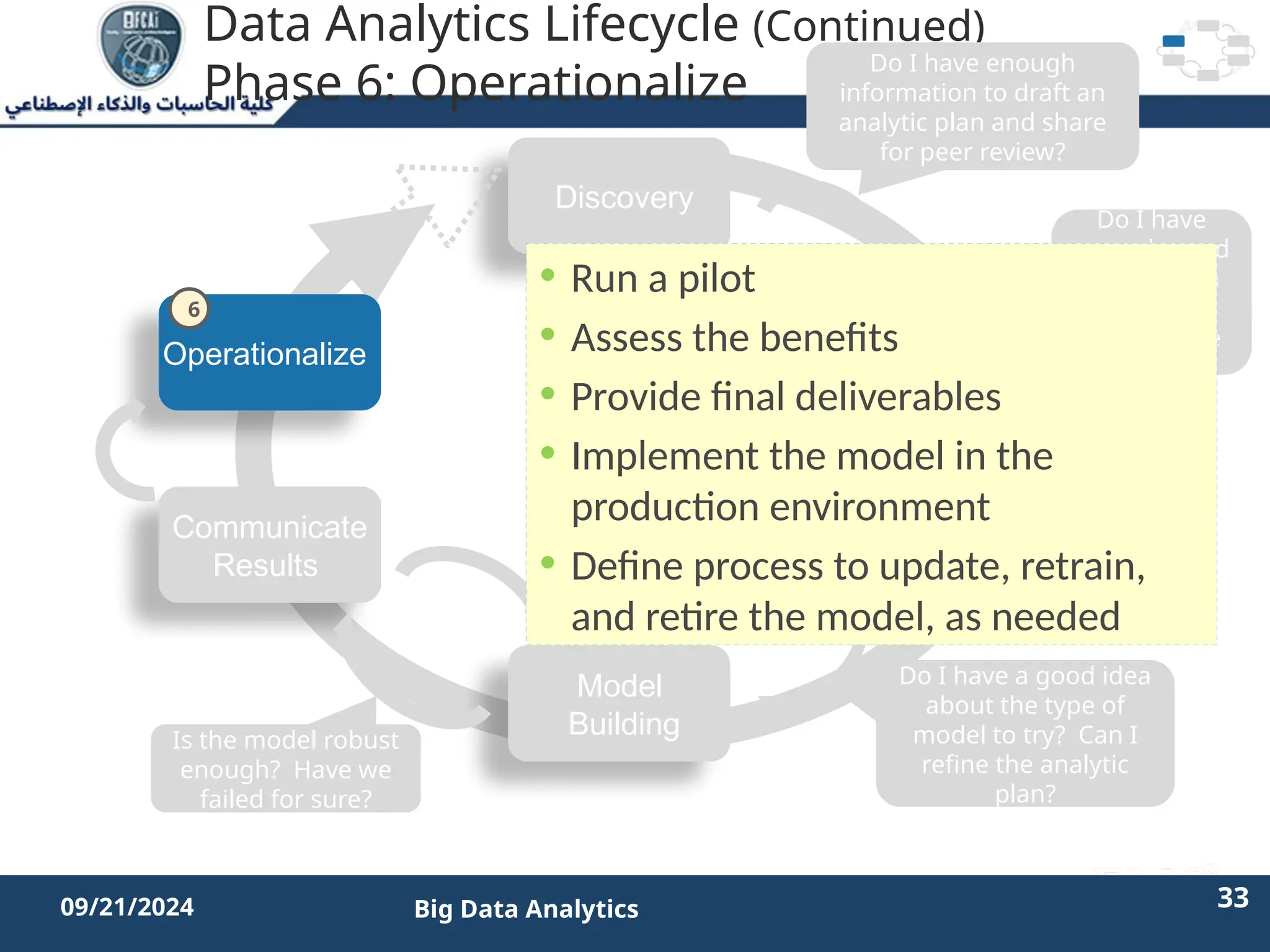

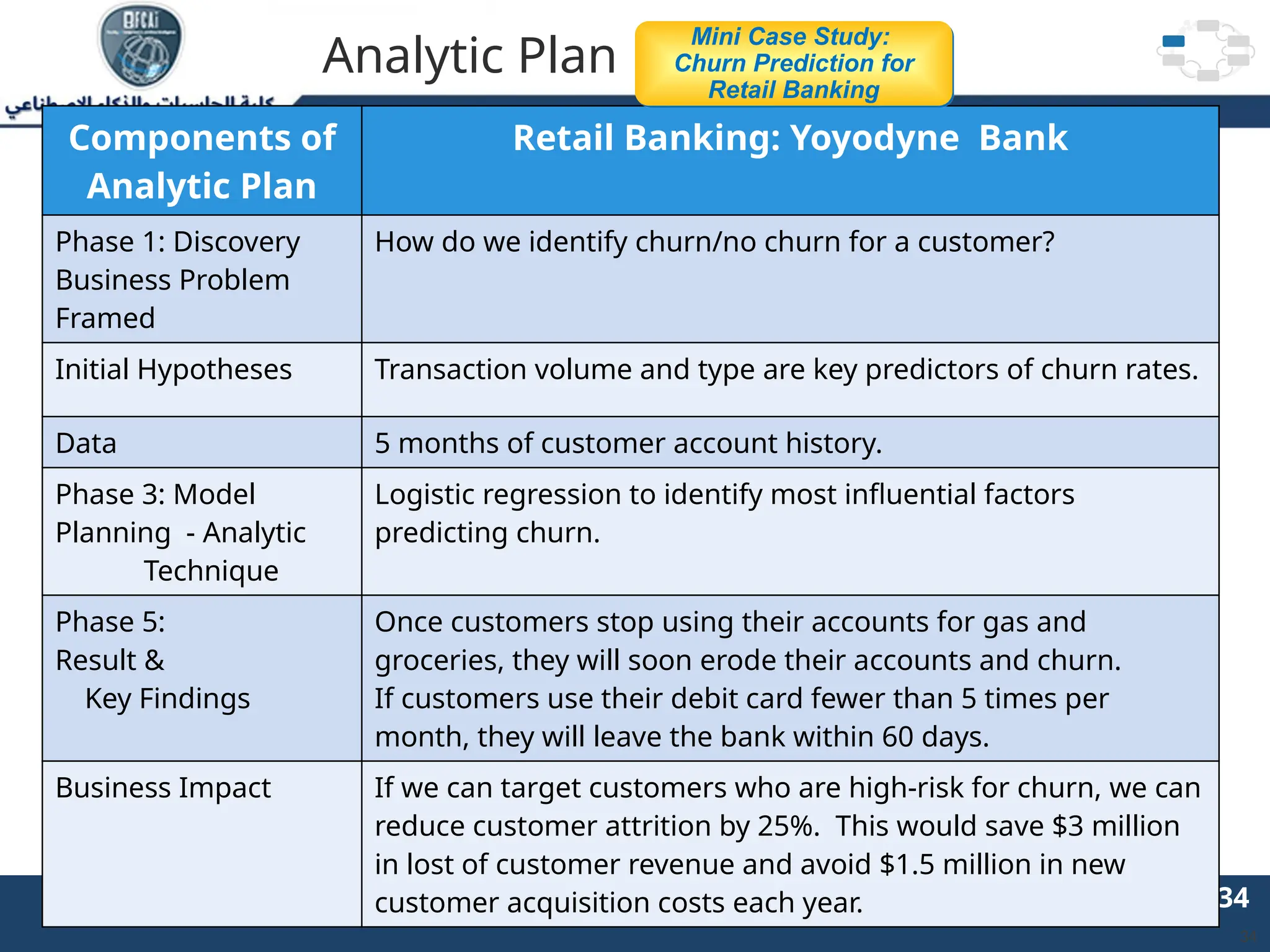

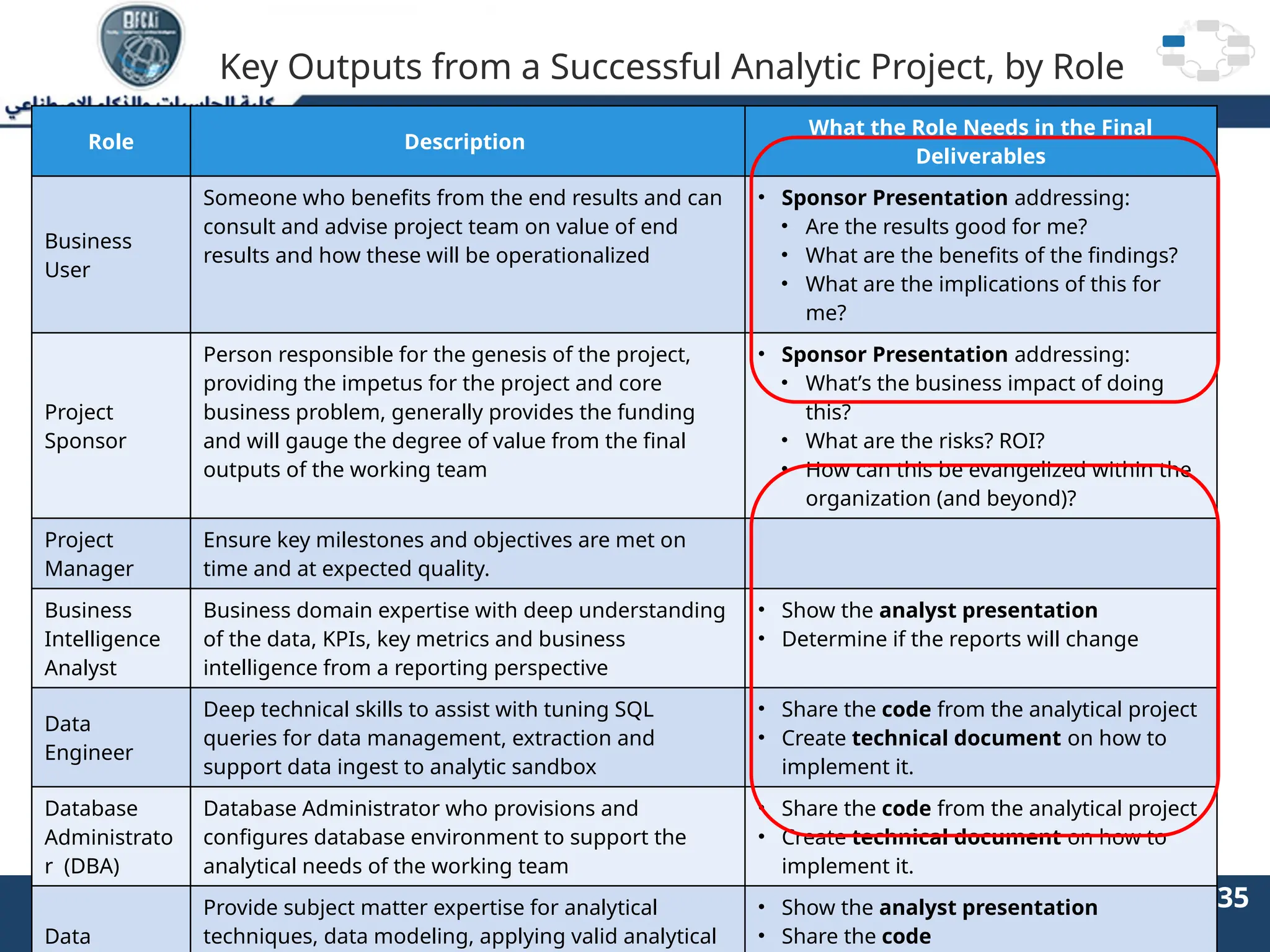

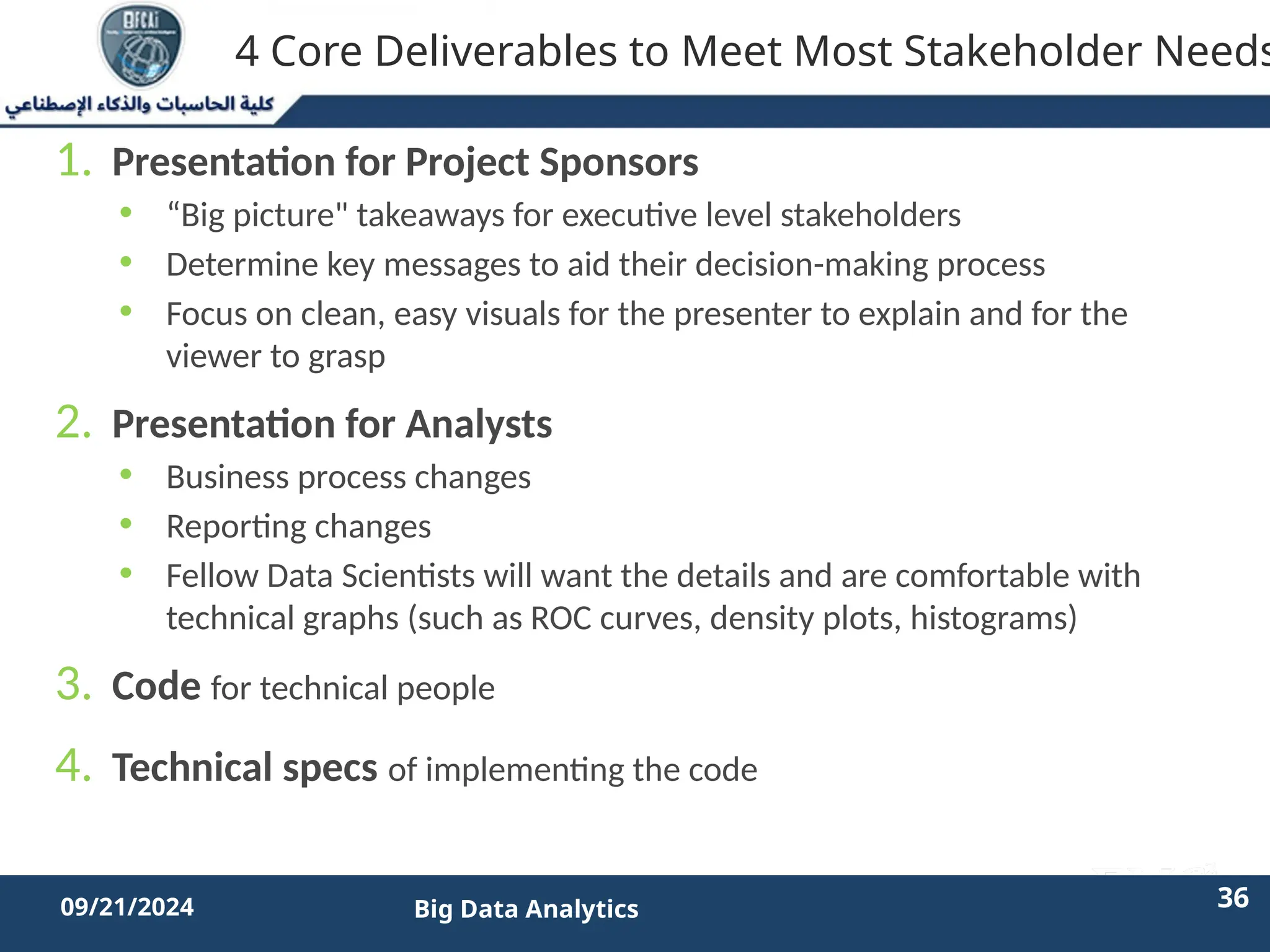

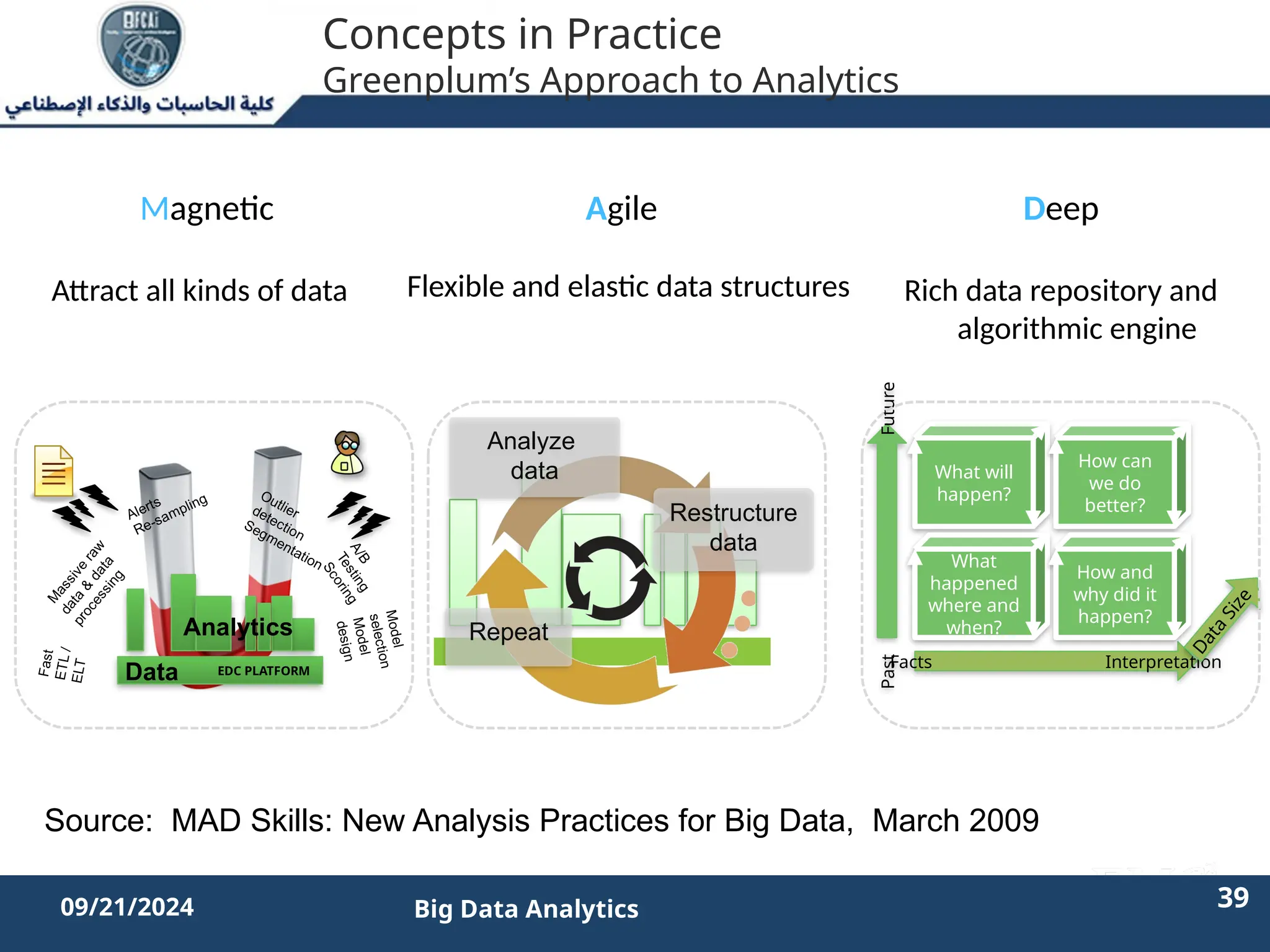

The document outlines the data analytics lifecycle, aimed to teach students the strategic application of analytics in business contexts, particularly in identifying and framing business problems and delivering analytics solutions. It introduces key roles within analytics projects, emphasizes the importance of a structured approach, and discusses phases like discovery, data preparation, and model planning, utilizing case studies for practical insights. Students will learn to apply these concepts to develop actionable analytics strategies, ultimately aiming to improve business metrics such as customer retention.