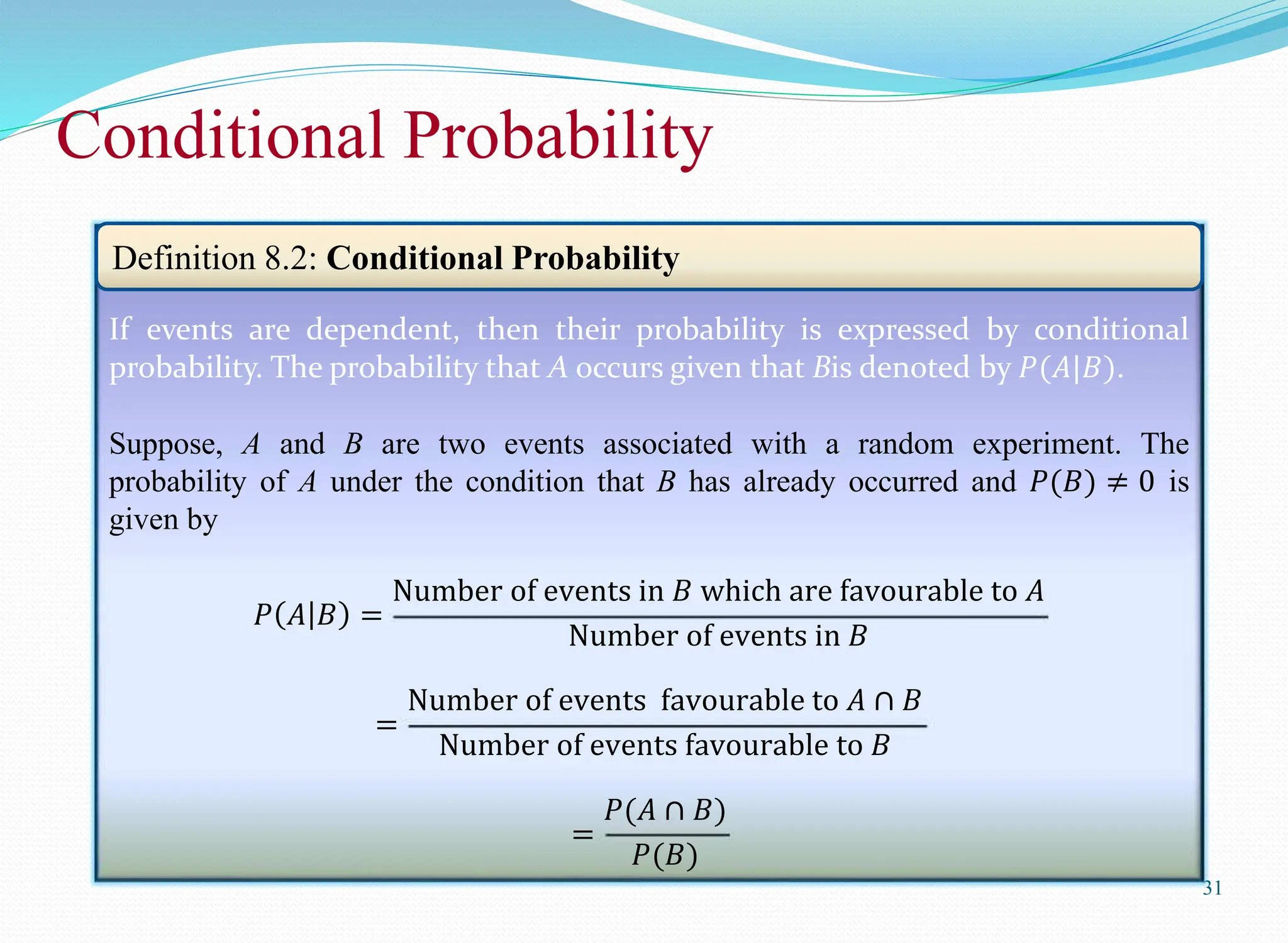

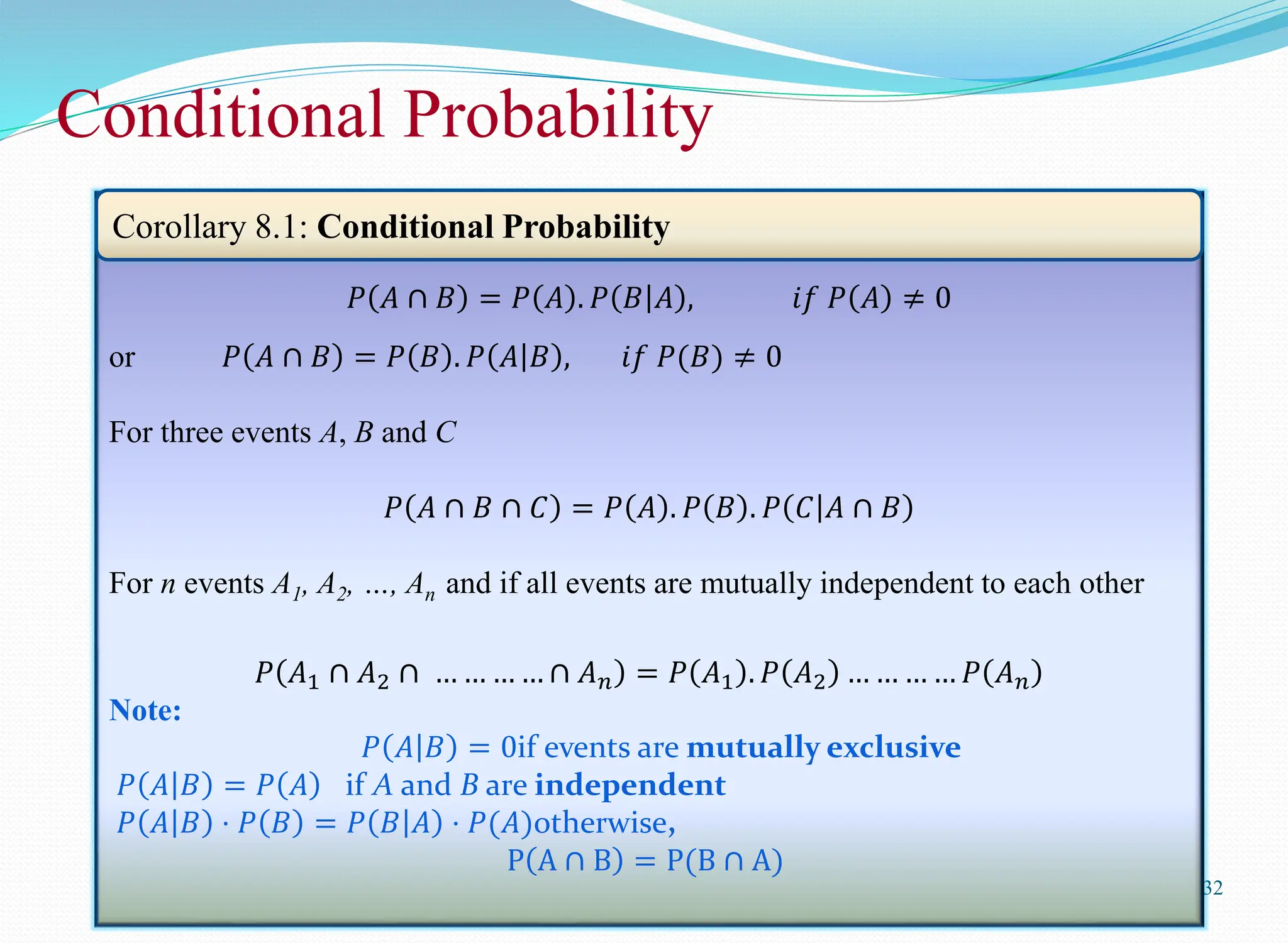

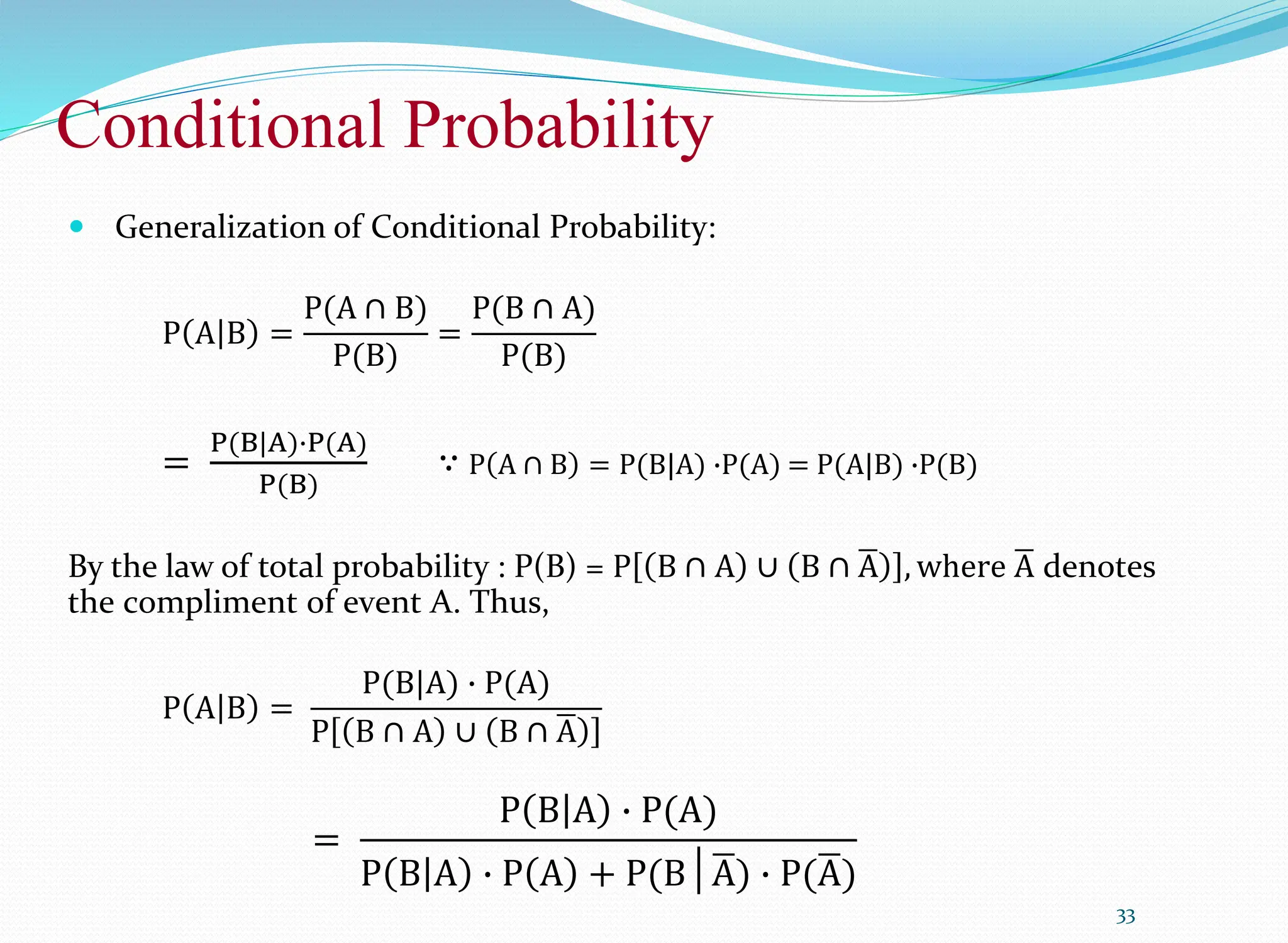

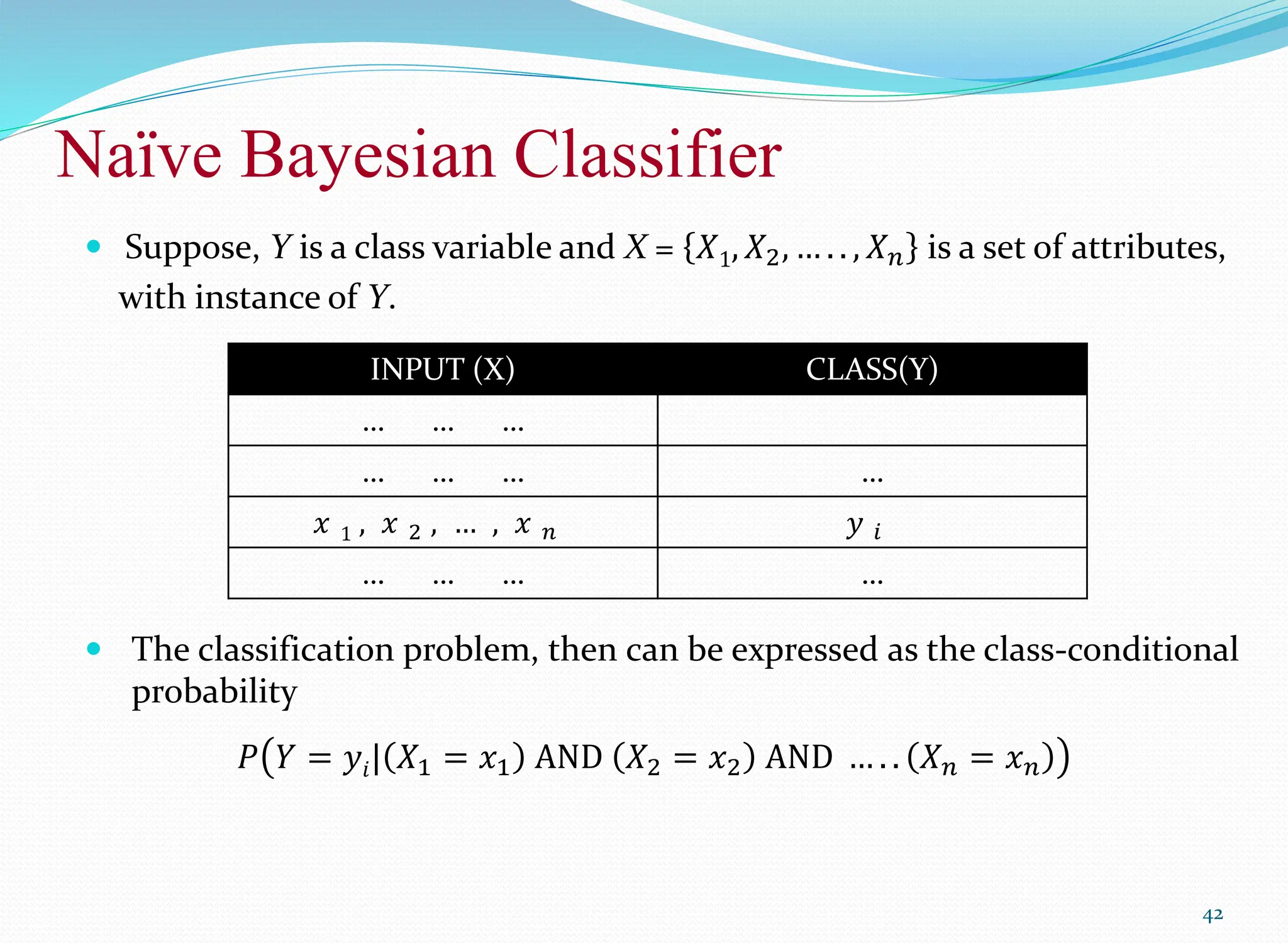

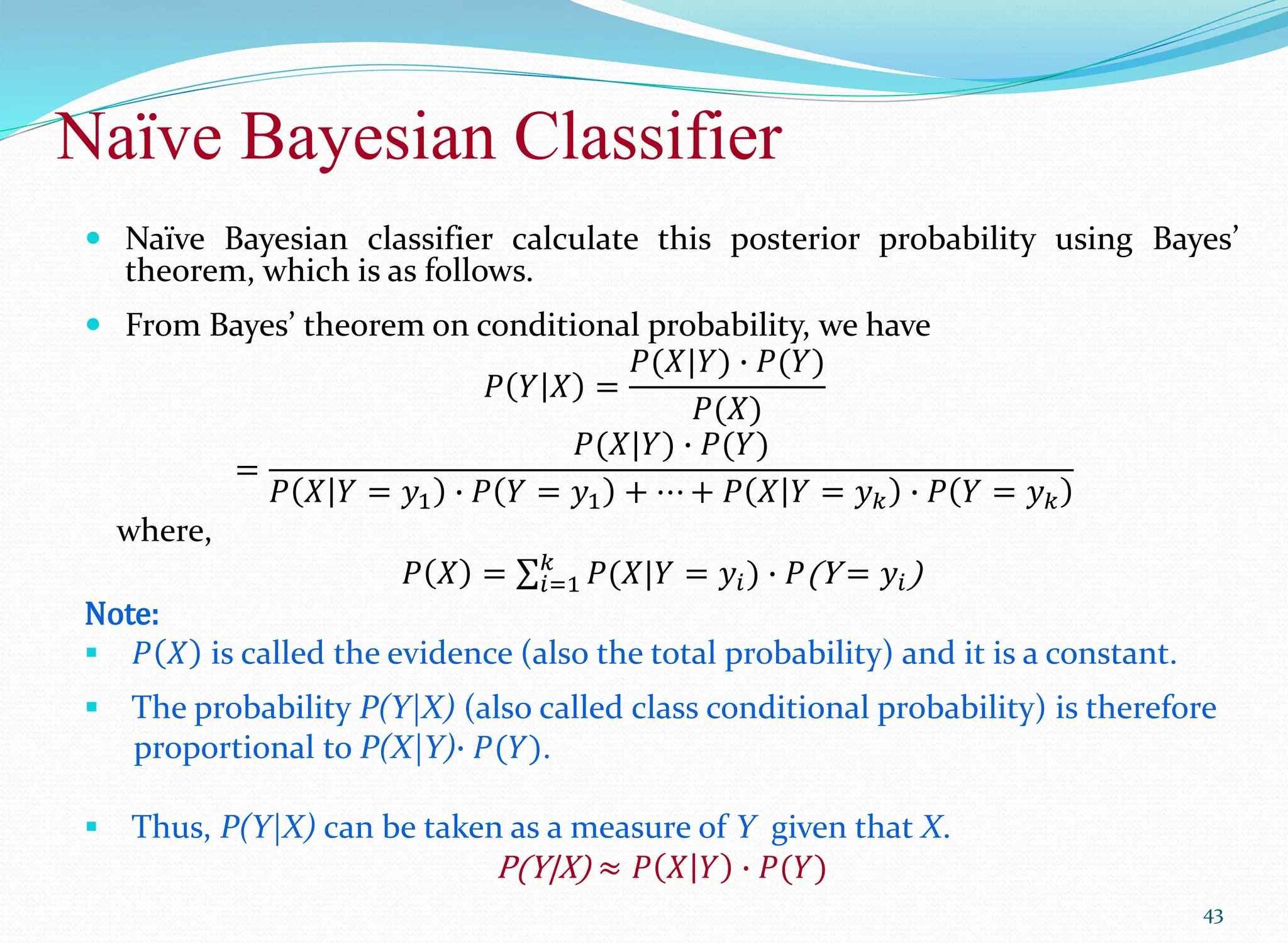

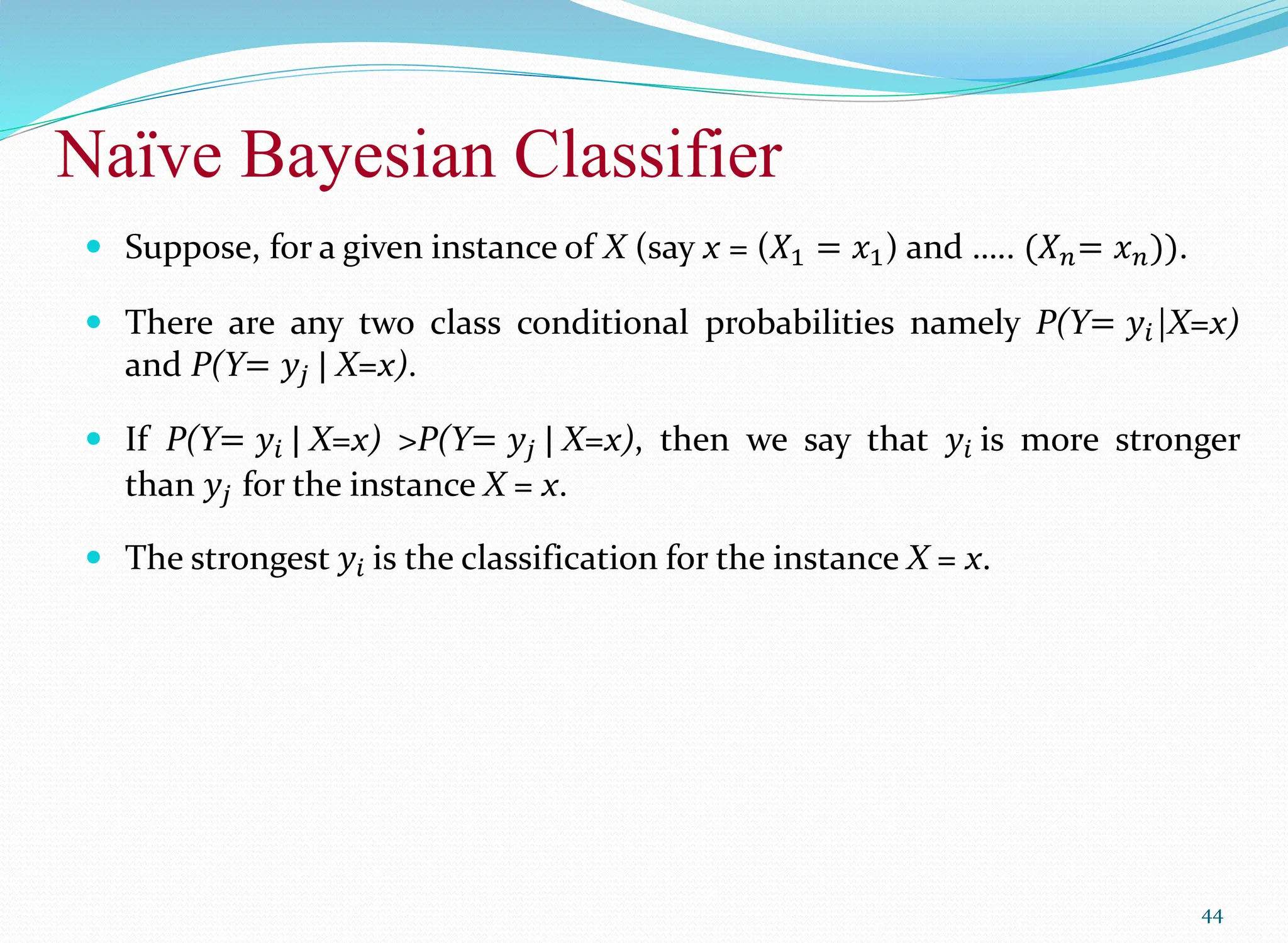

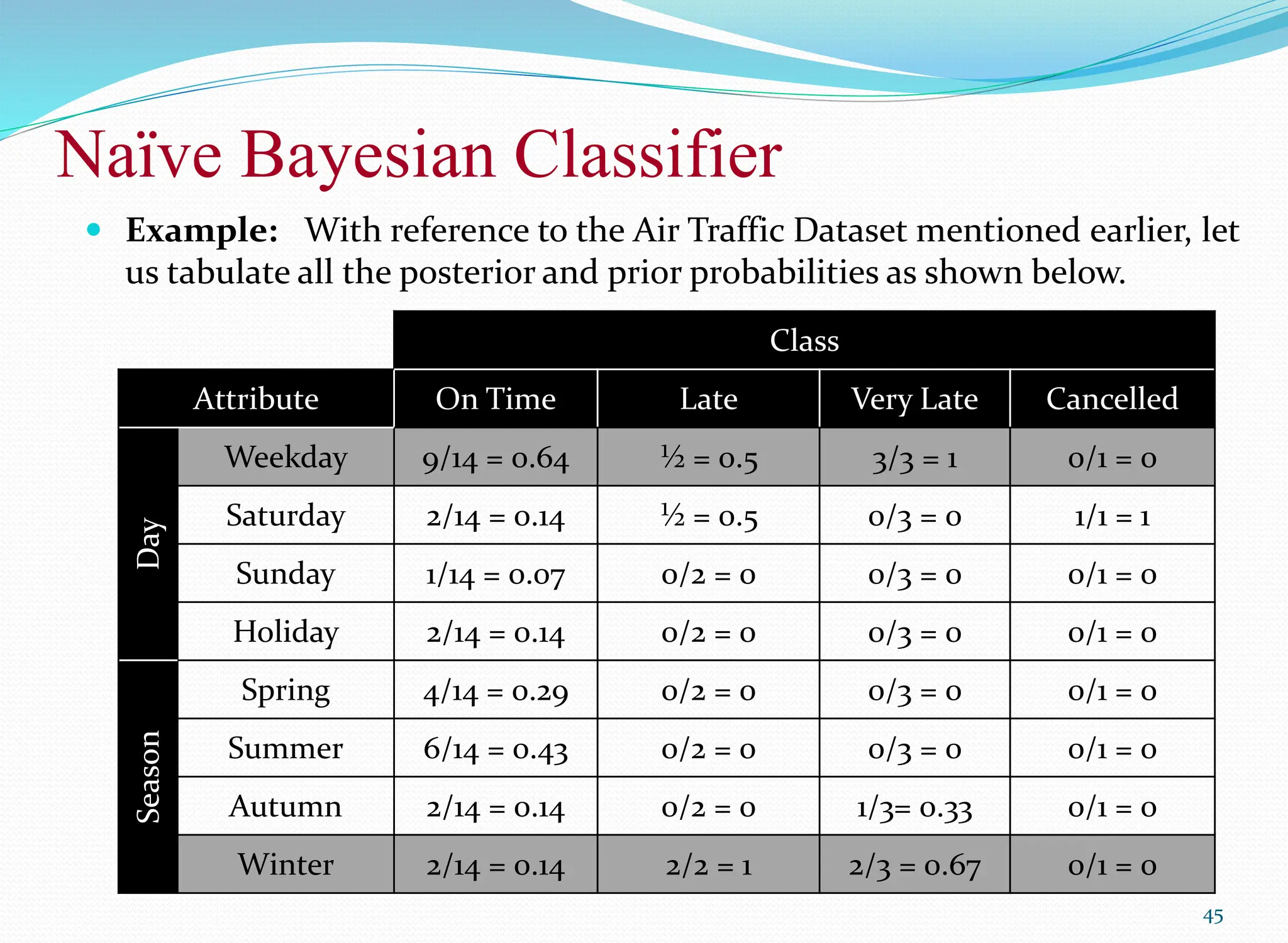

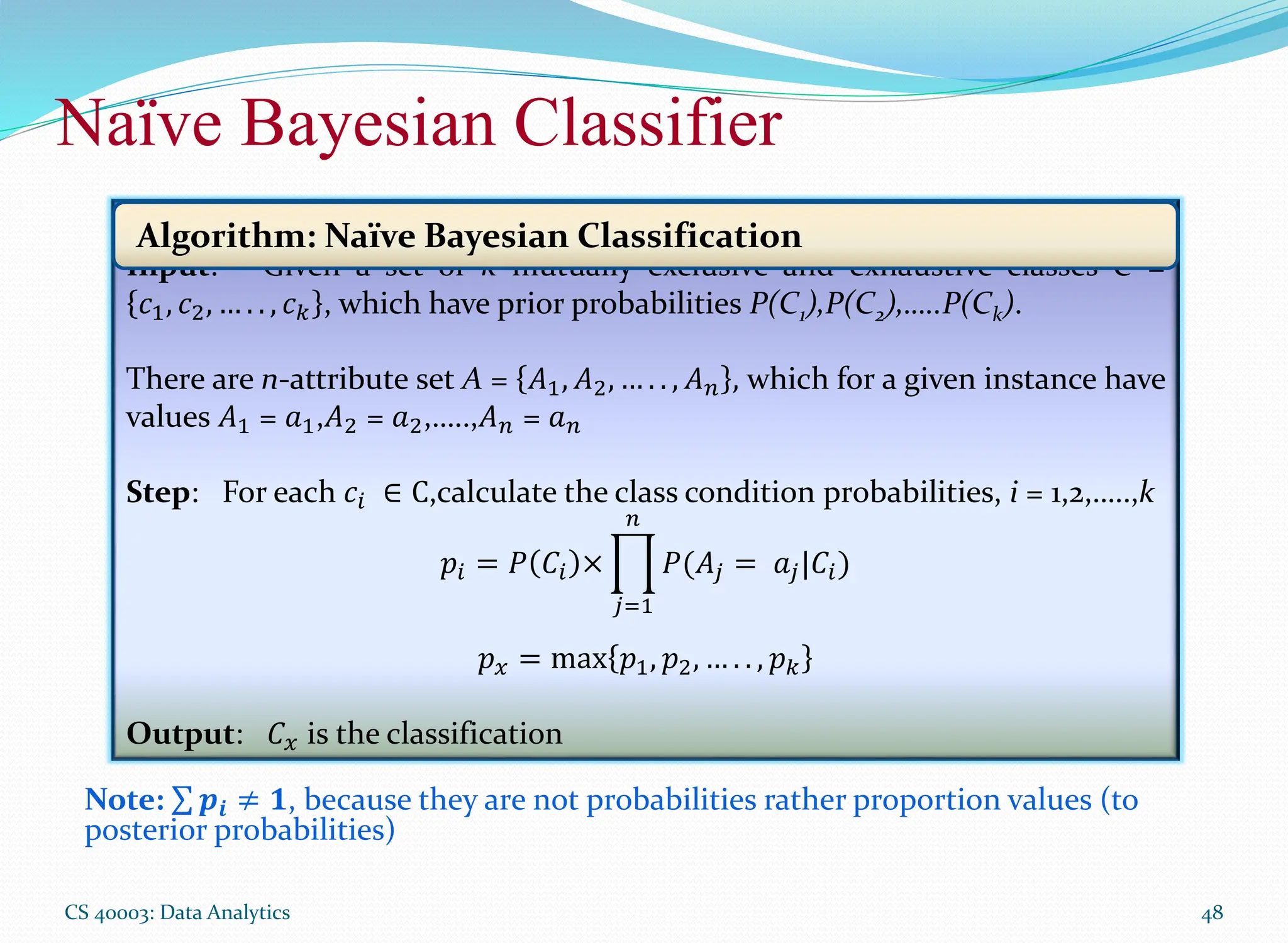

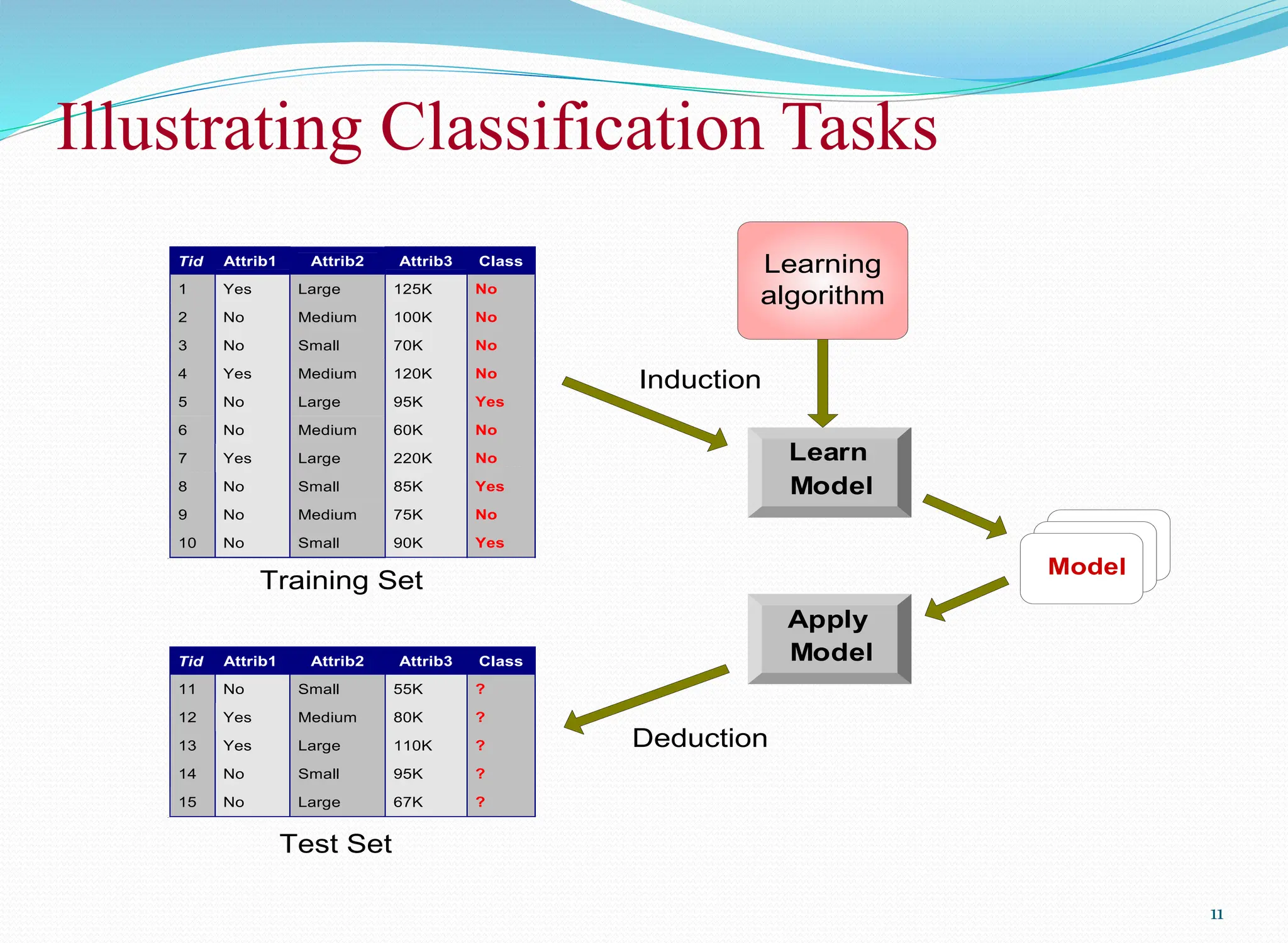

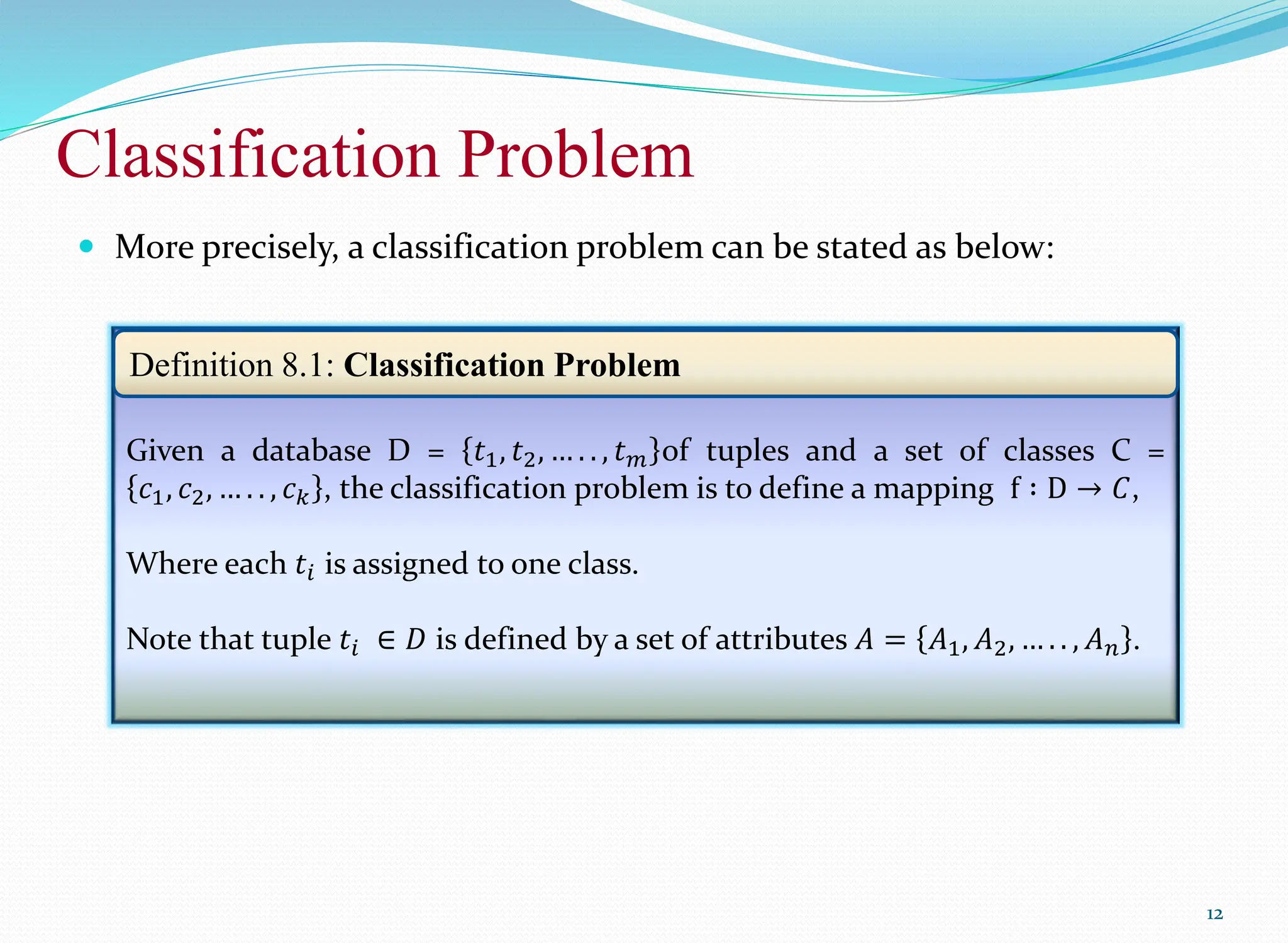

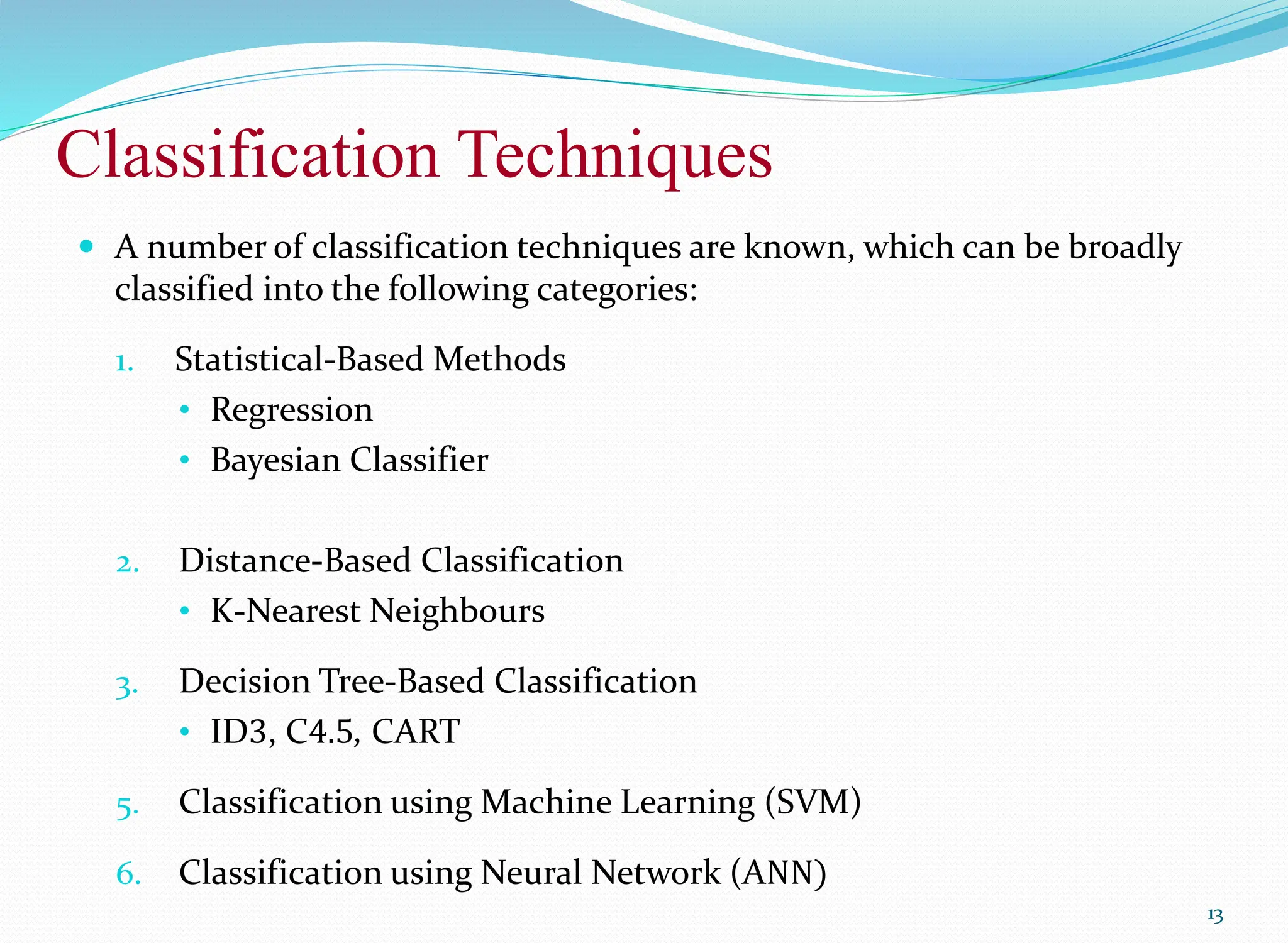

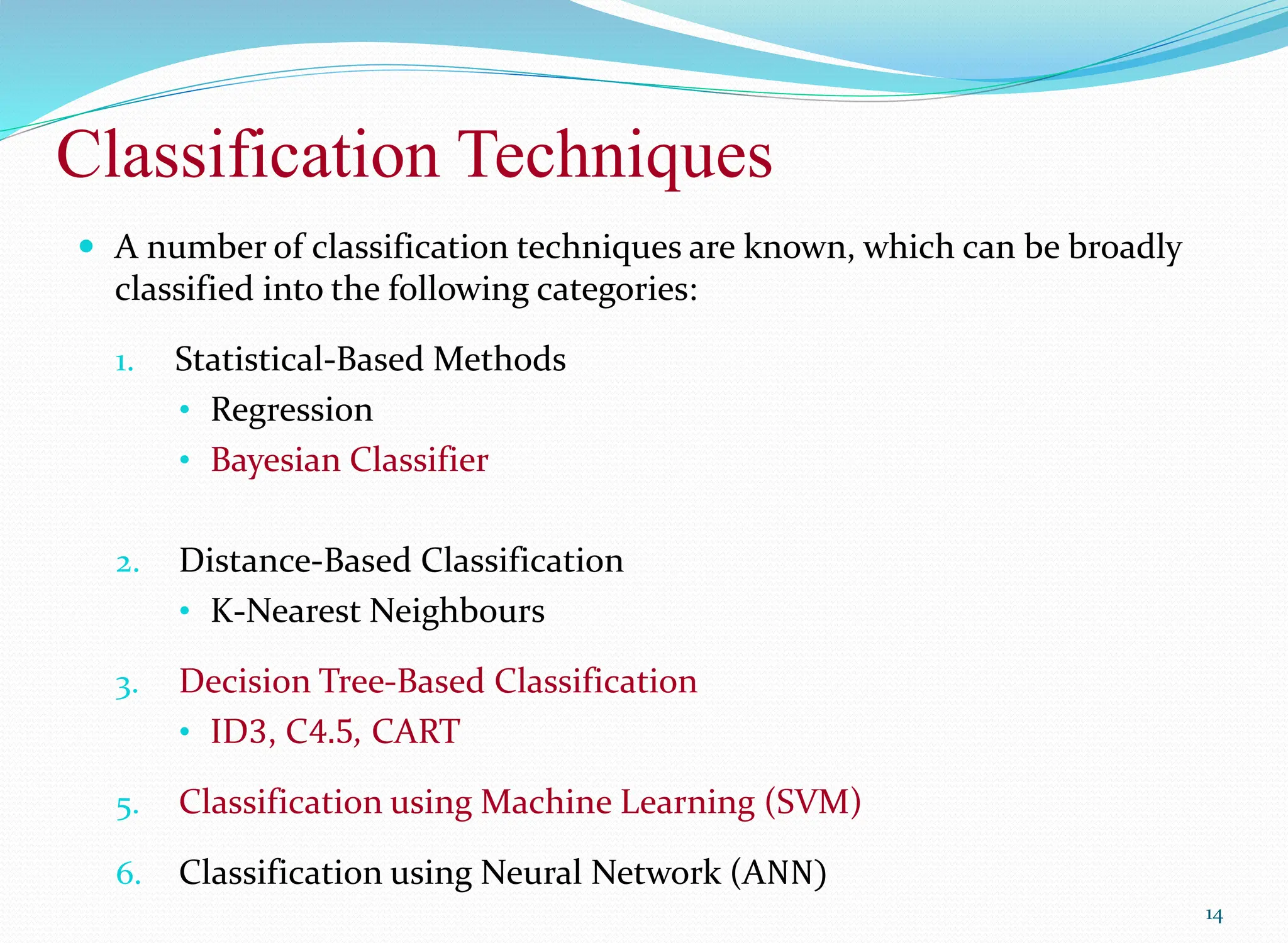

The document discusses classification techniques, focusing on the Naïve Bayes classifier, its principles, and its applications in fields such as life sciences and security. It explains the difference between supervised and unsupervised classification, gives a formal definition of the classification problem, and uses Bayes' theorem for probabilistic classification. It also surveys other classification techniques, including statistical methods, distance-based classification, decision trees, and machine learning approaches.
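For reference, the probabilistic rule behind this kind of classifier can be written compactly. The symbols below ($X = (x_1, \dots, x_n)$ for an instance's attribute values, $c_j$ for a class) are notation assumed here for illustration, not taken from the slides.

$$
P(c_j \mid X) = \frac{P(X \mid c_j)\, P(c_j)}{P(X)},
\qquad
P(X \mid c_j) \approx \prod_{i=1}^{n} P(x_i \mid c_j)
$$

The first equation is Bayes' theorem. The second is the "naïve" assumption that attributes are conditionally independent given the class. Since $P(X)$ is the same for every class, the predicted class is the $c_j$ that maximizes $P(c_j) \prod_{i} P(x_i \mid c_j)$.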

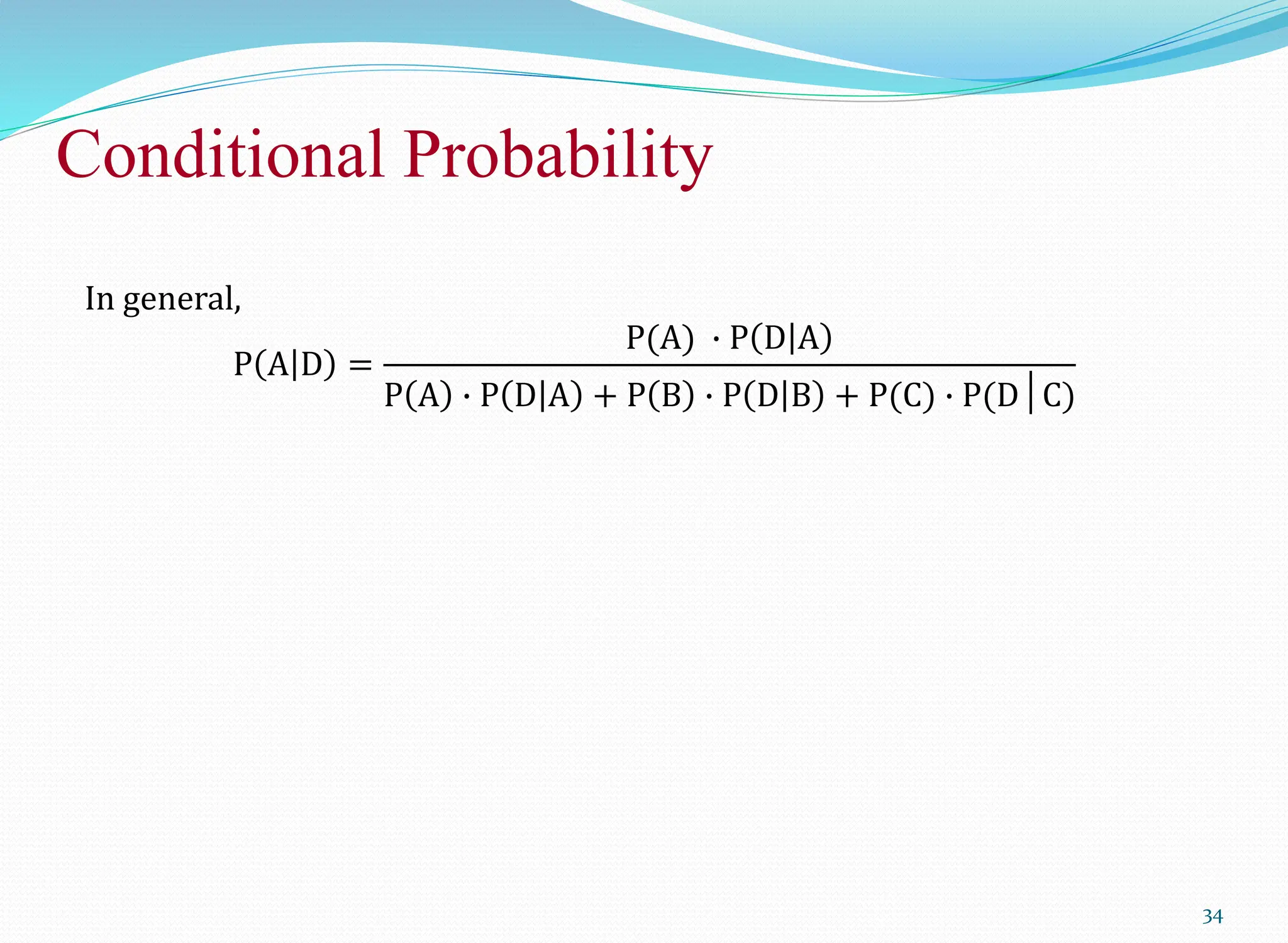

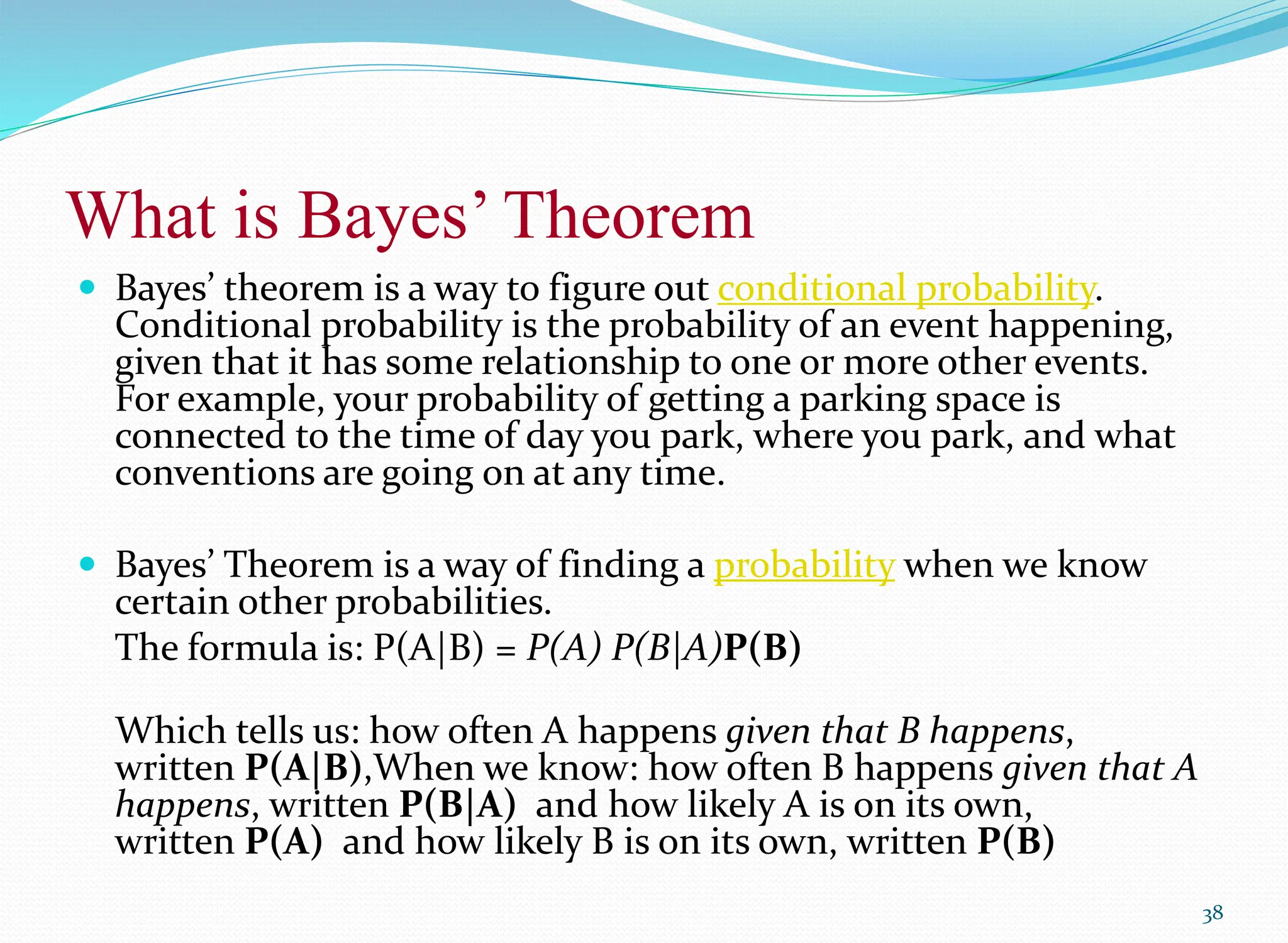

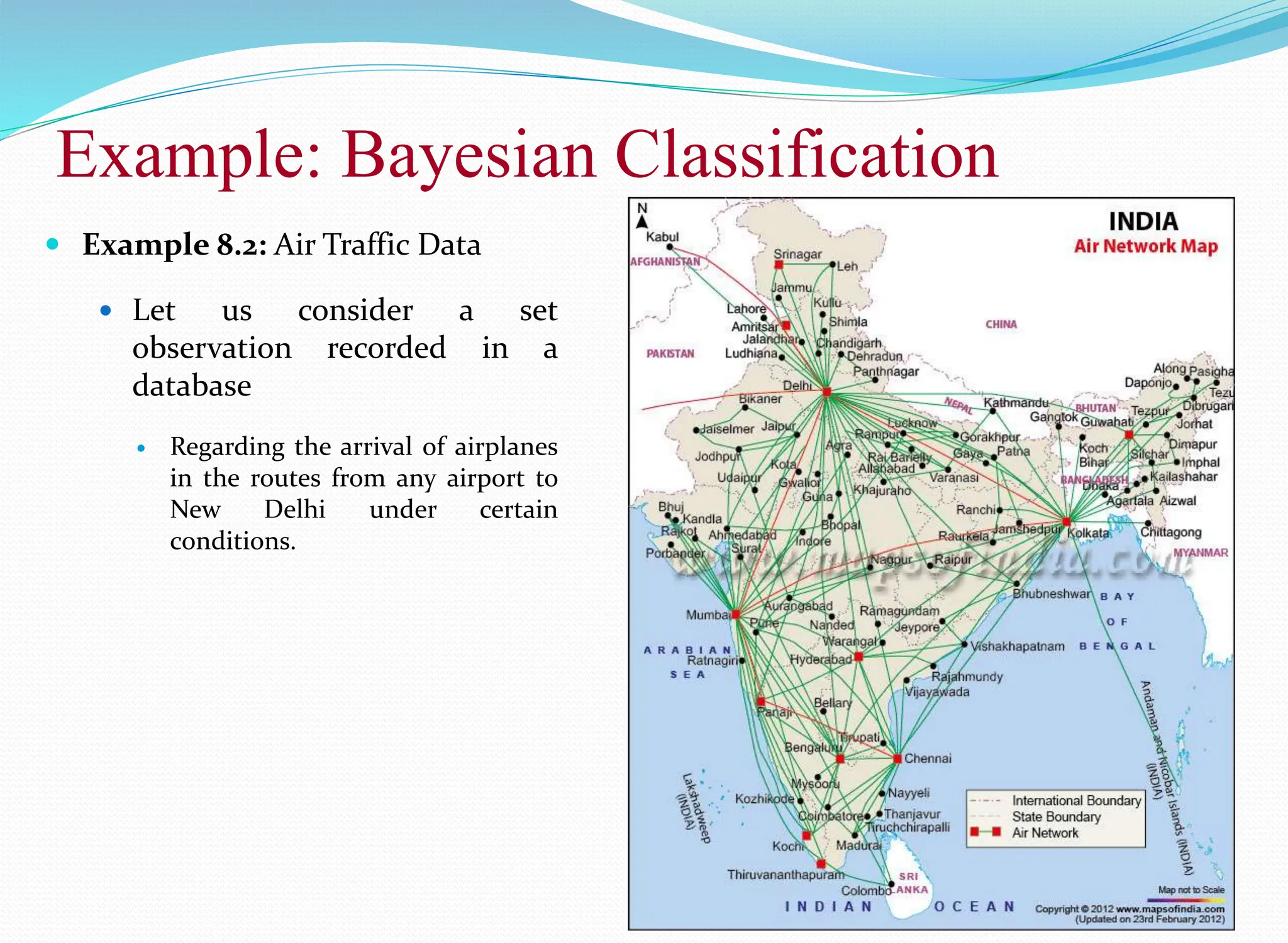

![Air-Traffic Data

21

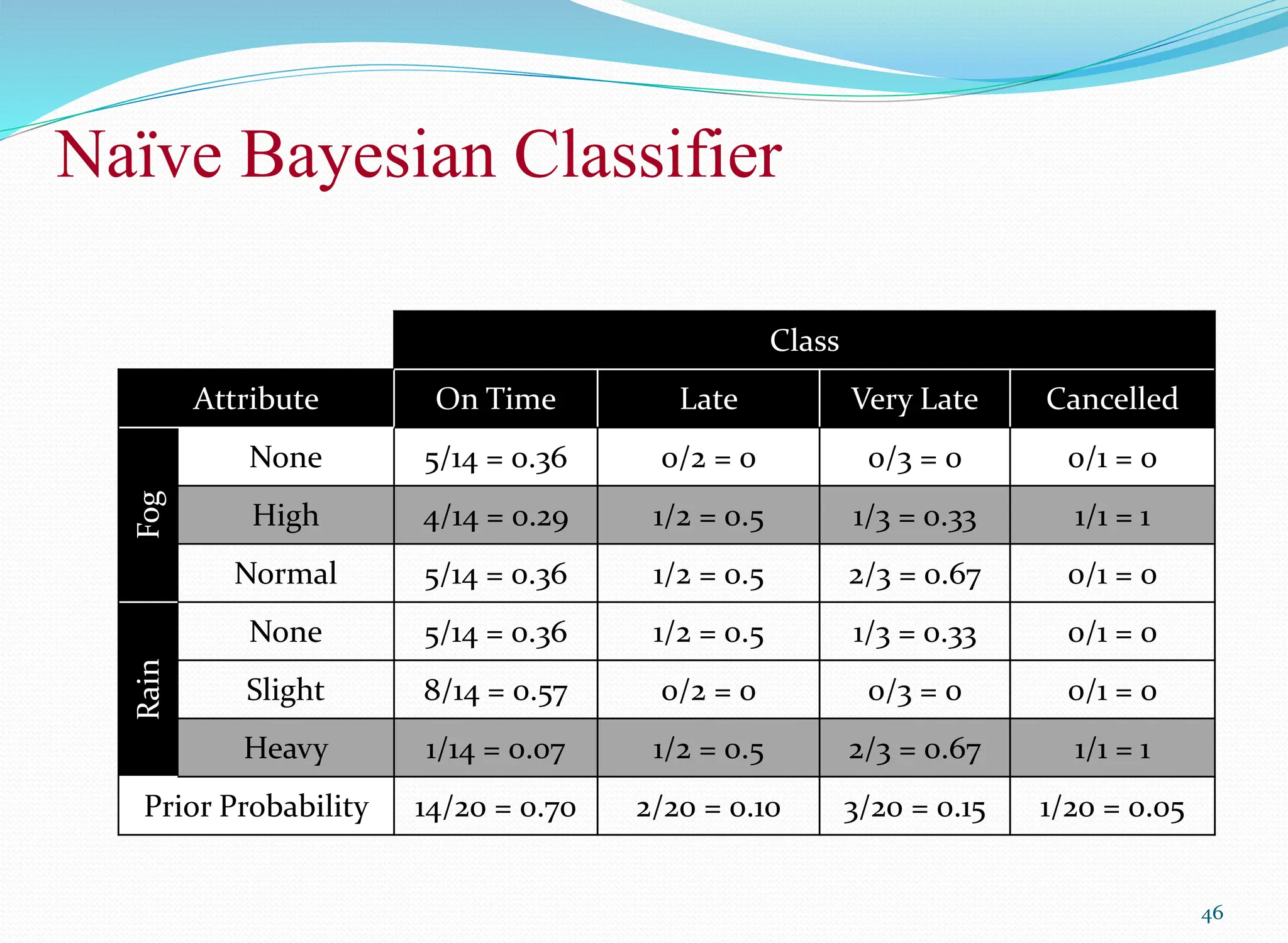

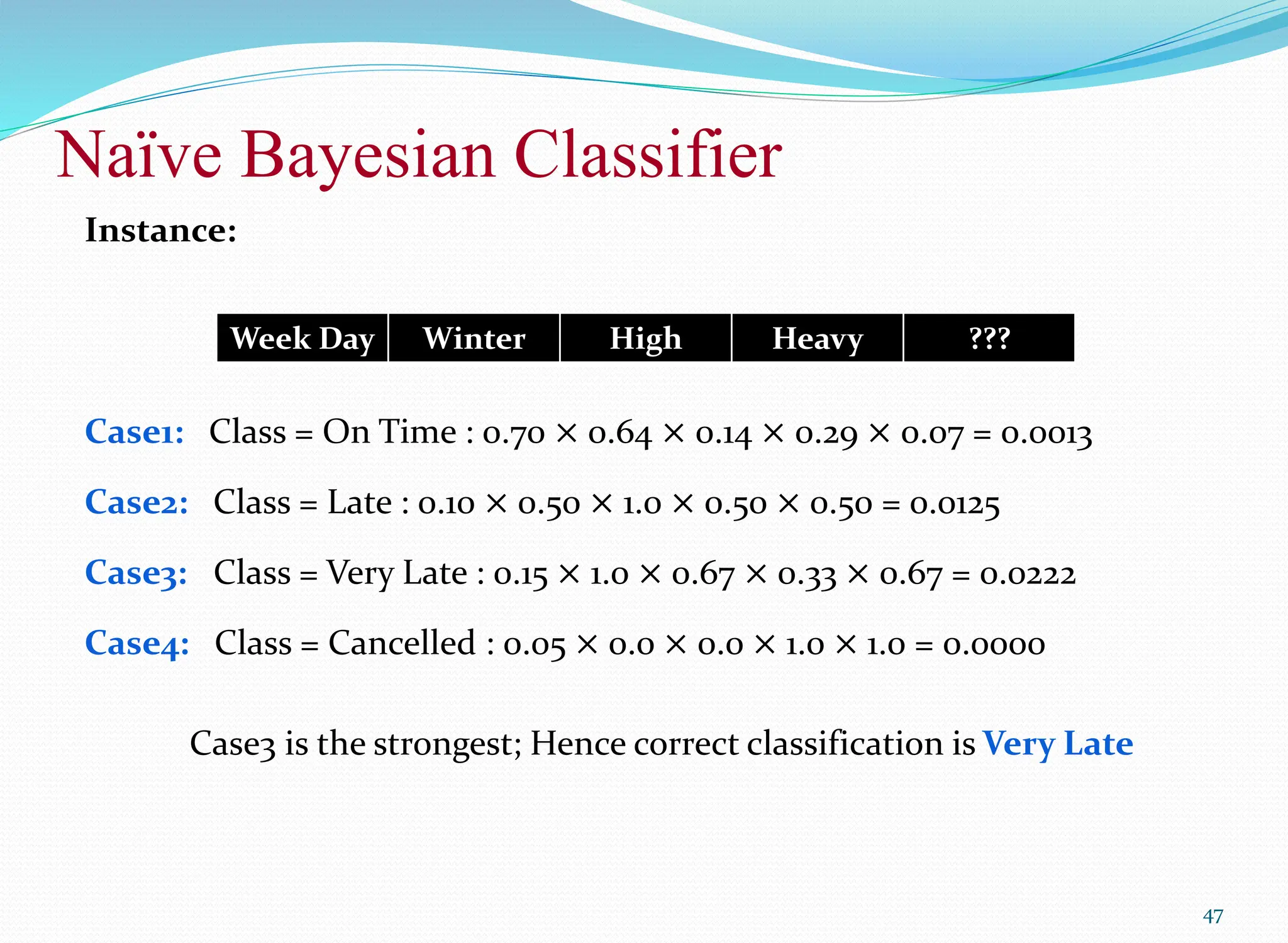

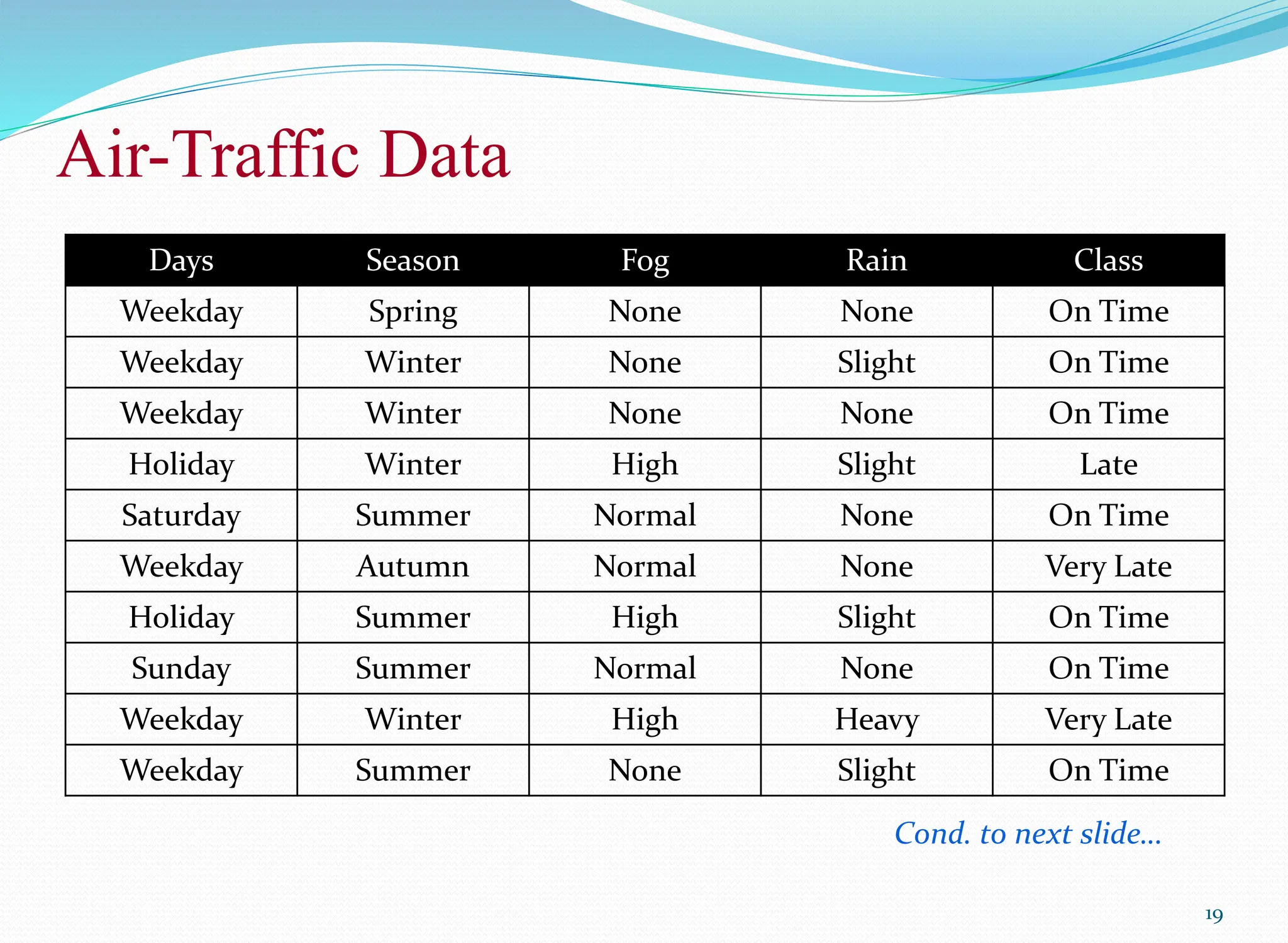

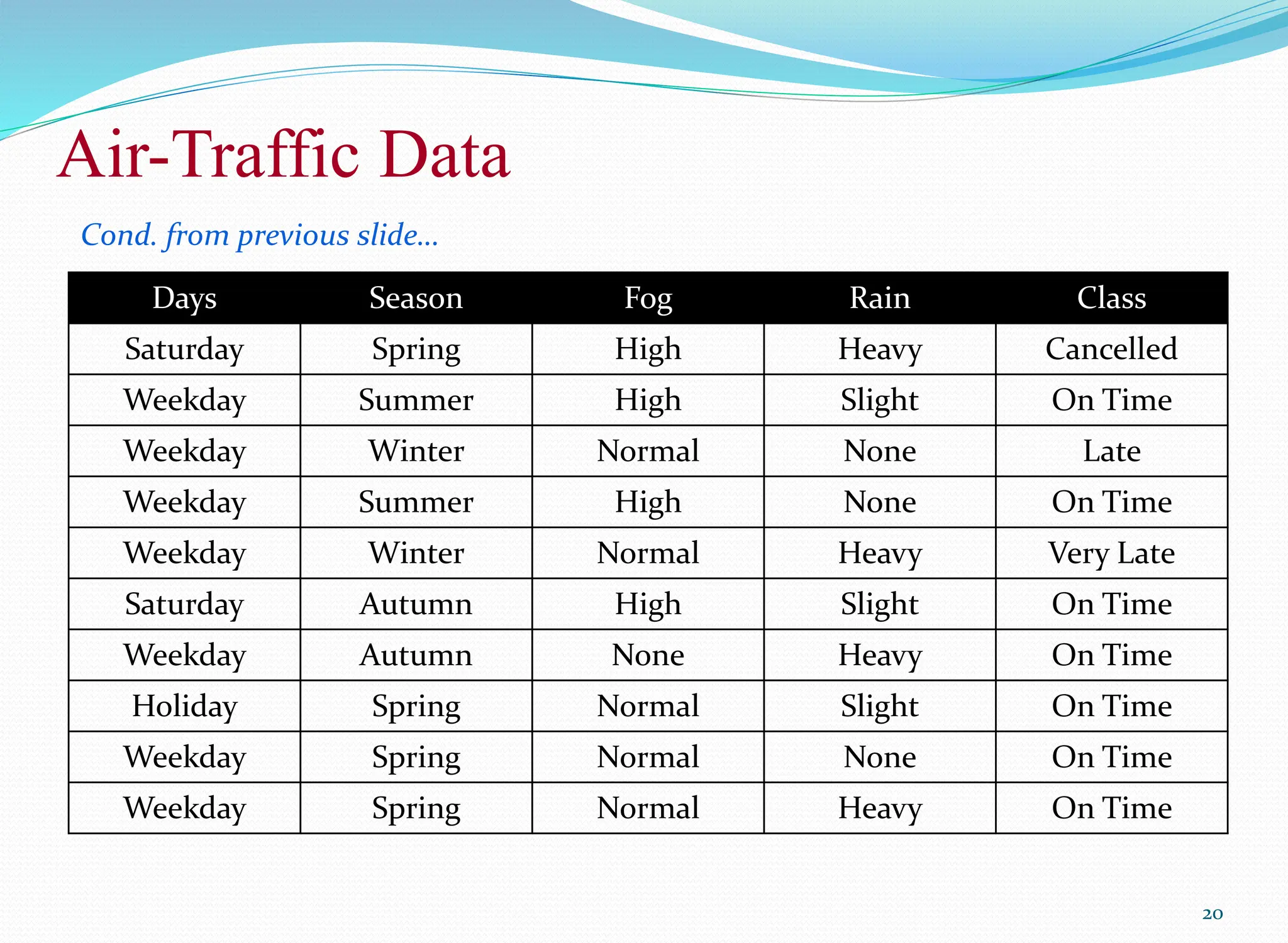

In this database, there are four attributes

A = [ Day, Season, Fog, Rain]

with 20 tuples.

The categories of classes are:

C= [On Time, Late, Very Late, Cancelled]

Given this is the knowledge of data and classes, we are to find most likely

classification for any other unseen instance, for example:

Classification technique eventually to map this tuple into an accurate class.

Week Day Winter High None ???](https://image.slidesharecdn.com/08bayesianclassifier-240608105138-7e470c1e/75/BayesianClassifierAndConditionalProbability-pptx-21-2048.jpg)
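The task of mapping an unseen tuple to its most likely class can be sketched in a few lines of Python. This is a minimal illustration, not the slides' own procedure: the 20 tuples exist only in the slide image, so the `TRAINING` rows below are hypothetical placeholders (including attribute values such as `Saturday` or `Heavy`), the function names are invented for this example, and Laplace (add-one) smoothing is an added assumption to avoid zero probabilities.

```python
from collections import Counter, defaultdict

ATTRIBUTES = ["Day", "Season", "Fog", "Rain"]

# HYPOTHETICAL placeholder rows for illustration only.
# These are NOT the 20 tuples from the Air-Traffic Data slide.
TRAINING = [
    (("Weekday", "Winter", "High", "None"), "Late"),
    (("Weekday", "Winter", "High", "Heavy"), "Late"),
    (("Weekday", "Summer", "None", "None"), "On Time"),
    (("Saturday", "Summer", "None", "None"), "On Time"),
    (("Weekday", "Spring", "None", "Slight"), "On Time"),
    (("Sunday", "Winter", "High", "Heavy"), "Cancelled"),
]


def train(rows):
    """Count class frequencies and per-class attribute-value frequencies."""
    class_counts = Counter(label for _, label in rows)
    value_counts = defaultdict(Counter)  # (class, attribute index) -> value counts
    domains = defaultdict(set)           # attribute index -> values seen in training
    for features, label in rows:
        for i, value in enumerate(features):
            value_counts[(label, i)][value] += 1
            domains[i].add(value)
    return class_counts, value_counts, domains


def posterior(model, features):
    """Return normalized P(class | features) under the naive independence assumption."""
    class_counts, value_counts, domains = model
    total = sum(class_counts.values())
    scores = {}
    for label, n in class_counts.items():
        score = n / total  # prior P(class)
        for i, value in enumerate(features):
            # Laplace-smoothed estimate of P(value | class); smoothing is an assumption
            score *= (value_counts[(label, i)][value] + 1) / (n + len(domains[i]))
        scores[label] = score
    norm = sum(scores.values())
    return {label: s / norm for label, s in scores.items()}


def classify(model, features):
    """Pick the class with the highest posterior probability."""
    probs = posterior(model, features)
    return max(probs, key=probs.get)


if __name__ == "__main__":
    model = train(TRAINING)
    unseen = ("Weekday", "Winter", "High", "None")
    print(dict(zip(ATTRIBUTES, unseen)))
    print(posterior(model, unseen))
    print(classify(model, unseen))
```

With the real 20 tuples substituted for the placeholder rows, the same functions would score the unseen instance against all four classes. A class that never appears in the training rows (here `Very Late`) gets no score in this sketch.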