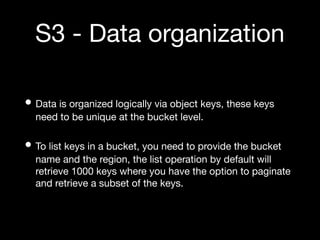

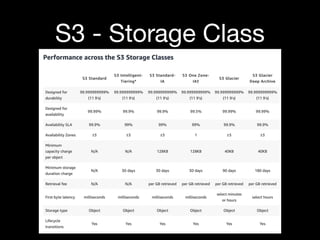

The document provides an overview of AWS storage options, focusing on Relational Database Service (RDS), Simple Storage Service (S3), and DynamoDB. It describes the features of each service, including data handling, storage types, event notifications, and cost management strategies. It also discusses real-world examples, performance metrics, and guidelines for optimizing the use of these services.