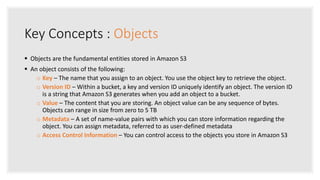

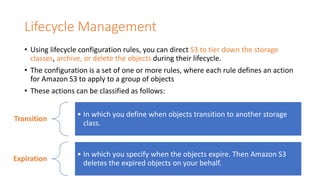

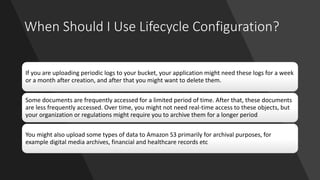

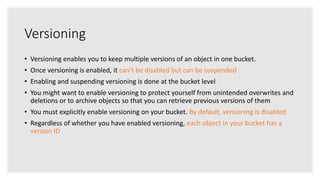

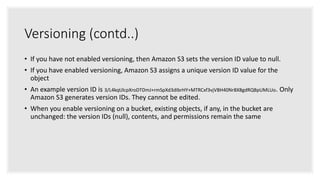

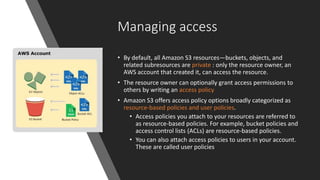

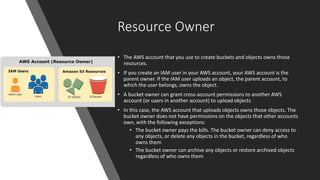

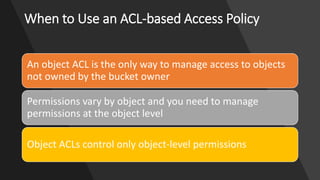

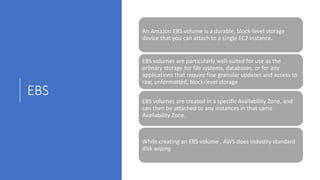

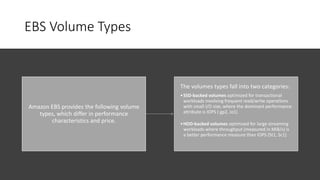

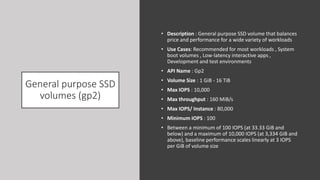

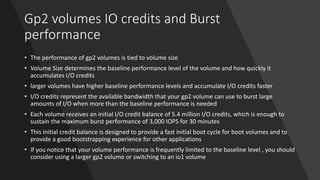

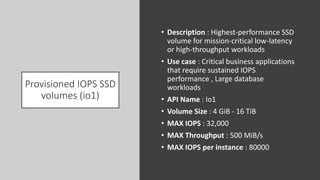

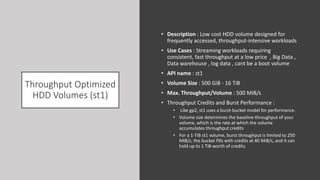

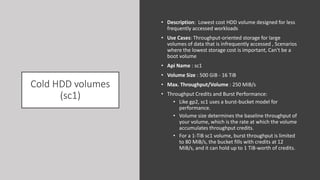

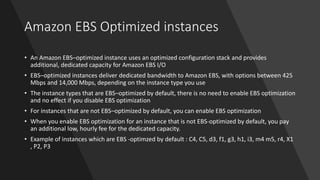

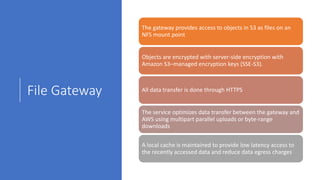

This document provides information about Amazon S3, Amazon EBS, and storage classes in AWS. It discusses key concepts of S3 including objects, buckets, and keys. It describes the different S3 storage classes like STANDARD, STANDARD_IA, GLACIER and their use cases. The document also covers S3 features like access control, versioning, lifecycle management and managing access. Finally, it provides an overview of Amazon EBS volumes, volume types, snapshots and EBS optimized instances.