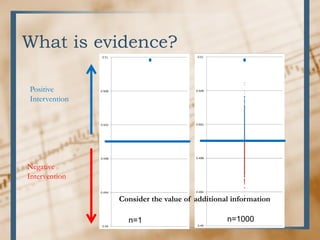

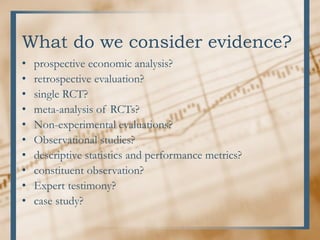

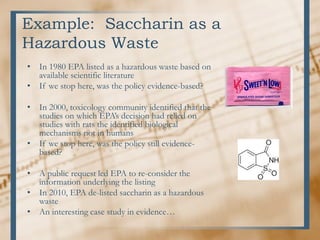

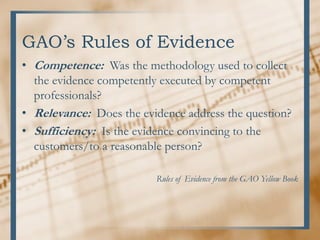

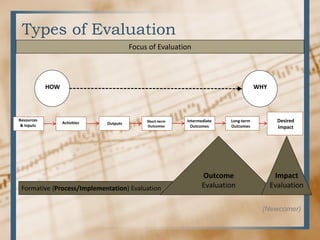

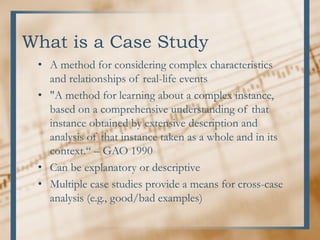

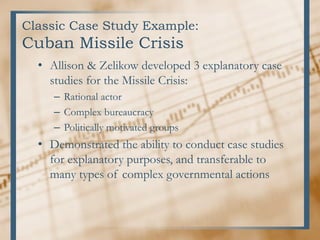

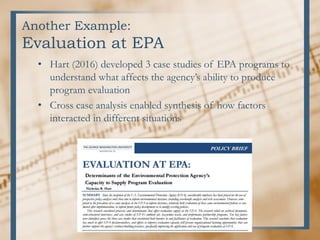

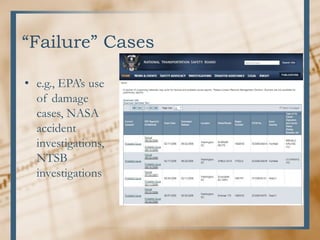

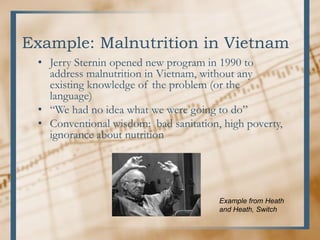

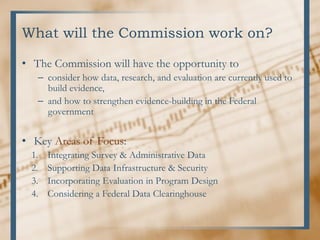

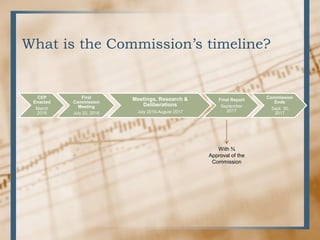

The document discusses how evidence is defined and evaluated in policy-making, highlighting the various types of evaluation and the importance of context in determining evidence quality. It includes case studies, such as the EPA's reconsideration of saccharin's hazardous waste status and the successful effort to address malnutrition in Vietnam, to illustrate the value of qualitative approaches in understanding program impacts. It also outlines the creation and objectives of the Commission on Evidence-Based Policymaking, emphasizing the integration of survey and administrative data to improve government program performance.