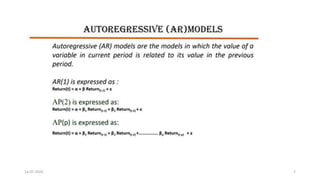

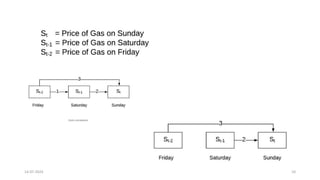

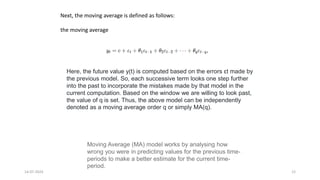

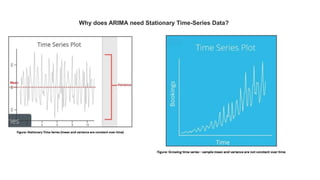

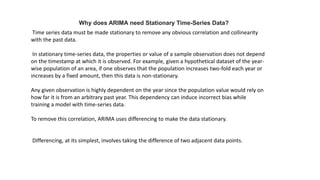

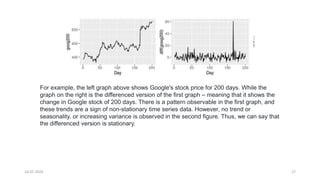

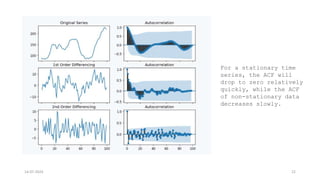

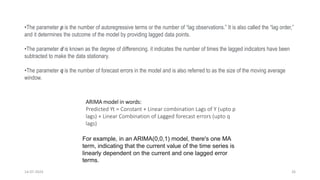

The document discusses the ARIMA (AutoRegressive Integrated Moving Average) model and its components, written ARIMA(p, d, q). Here p is the number of autoregressive terms (lagged observations), d is the degree of differencing (how many times the series is differenced), and q is the number of moving-average terms (lagged forecast errors). It emphasizes that ARIMA modeling requires a stationary time series, describes differencing as the way to achieve stationarity, and outlines the steps for forecasting. It also explains how p, d, and q together determine how future values are predicted from past observations and past forecast errors.
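The forecasting steps summarized above (difference until stationary, model the differenced series, then undo the differencing) can be sketched in plain Python. This is a minimal, simplified sketch, not the method from the slides: it assumes q = 0 and p = 1, i.e. an ARIMA(1, d, 0) model, and fits the single AR coefficient by ordinary least squares. Full implementations such as `statsmodels.tsa.arima.model.ARIMA` instead estimate AR and MA coefficients jointly by maximum likelihood. The helper names `difference`, `integrate_forecast`, `fit_ar1` and `forecast_arima_1d0` are hypothetical and chosen for illustration.

```python
from typing import List, Tuple


def difference(series: List[float], d: int = 1) -> List[float]:
    """Apply d rounds of first differencing: y'_t = y_t - y_{t-1}."""
    out = list(series)
    for _ in range(d):
        out = [b - a for a, b in zip(out, out[1:])]
    return out


def integrate_forecast(history: List[float], diff_forecast: List[float], d: int) -> List[float]:
    """Undo d rounds of differencing on forecasts made for the d-th differenced series."""
    # levels[k] is the k-th difference of the history (levels[0] is the raw series).
    levels = [list(history)]
    for _ in range(d):
        levels.append(difference(levels[-1], 1))
    result = list(diff_forecast)
    # Work upward from the deepest level, cumulatively summing onto the last known value.
    for k in range(d - 1, -1, -1):
        last = levels[k][-1]
        restored = []
        for delta in result:
            last += delta
            restored.append(last)
        result = restored
    return result


def fit_ar1(x: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of x_t = c + phi * x_{t-1} + e_t; returns (c, phi)."""
    if len(x) < 2:
        raise ValueError("need at least two observations to fit AR(1)")
    prev, curr = x[:-1], x[1:]
    n = len(prev)
    mean_prev = sum(prev) / n
    mean_curr = sum(curr) / n
    var = sum((a - mean_prev) ** 2 for a in prev)
    if var == 0:
        # Constant lagged values: no slope can be estimated, so predict the mean.
        return mean_curr, 0.0
    cov = sum((a - mean_prev) * (b - mean_curr) for a, b in zip(prev, curr))
    phi = cov / var
    return mean_curr - phi * mean_prev, phi


def forecast_arima_1d0(series: List[float], d: int, steps: int) -> List[float]:
    """Forecast `steps` values with a simplified ARIMA(1, d, 0): difference, fit AR(1), integrate."""
    diffed = difference(series, d)  # step 1: difference d times toward stationarity
    c, phi = fit_ar1(diffed)        # step 2: fit the single (p = 1) autoregressive term
    preds, last = [], diffed[-1]
    for _ in range(steps):          # step 3: iterate the AR(1) recursion forward
        last = c + phi * last       # future errors are replaced by their mean of zero
        preds.append(last)
    return integrate_forecast(series, preds, d)  # step 4: undo the differencing
```

For example, `forecast_arima_1d0([1, 2, 4, 7, 11, 16], d=2, steps=3)` returns `[22.0, 29.0, 37.0]`: differencing twice turns the quadratic trend into a constant series, and integrating the constant forecasts back continues the same curve. Adding MA terms (q > 0) would require estimating past forecast errors iteratively, which is why this sketch leaves them out.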