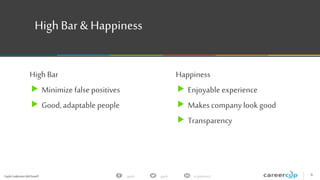

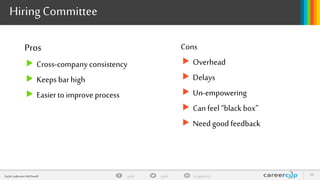

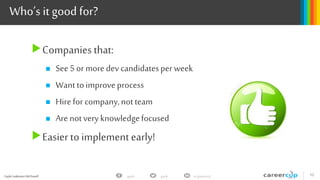

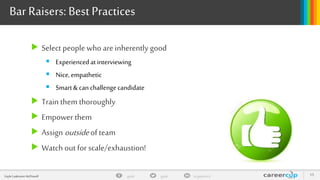

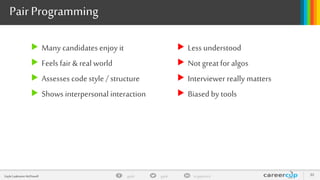

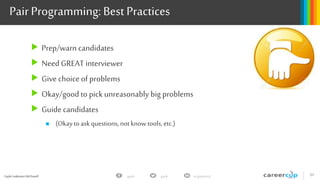

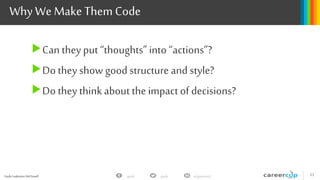

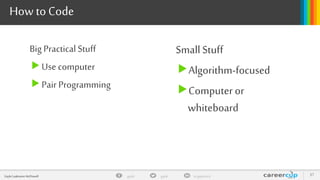

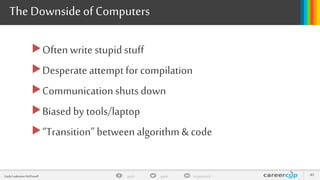

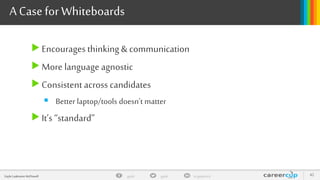

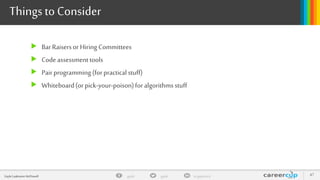

Gayle McDowell discusses best practices for structuring a company's interview process. She argues that the process should prioritize consistency, efficiency, high standards, and candidate happiness. To keep hiring decisions consistent, she recommends either a bar raiser model or a hiring committee. Online code assessments or take-home projects can evaluate candidates efficiently before they come onsite. Mixing question styles, such as algorithms, design, and pair programming, assesses different skills. The most suitable coding platform also varies with the type of problem. With the right interviewer training and guidelines, many approaches can work well, but no system is perfect.