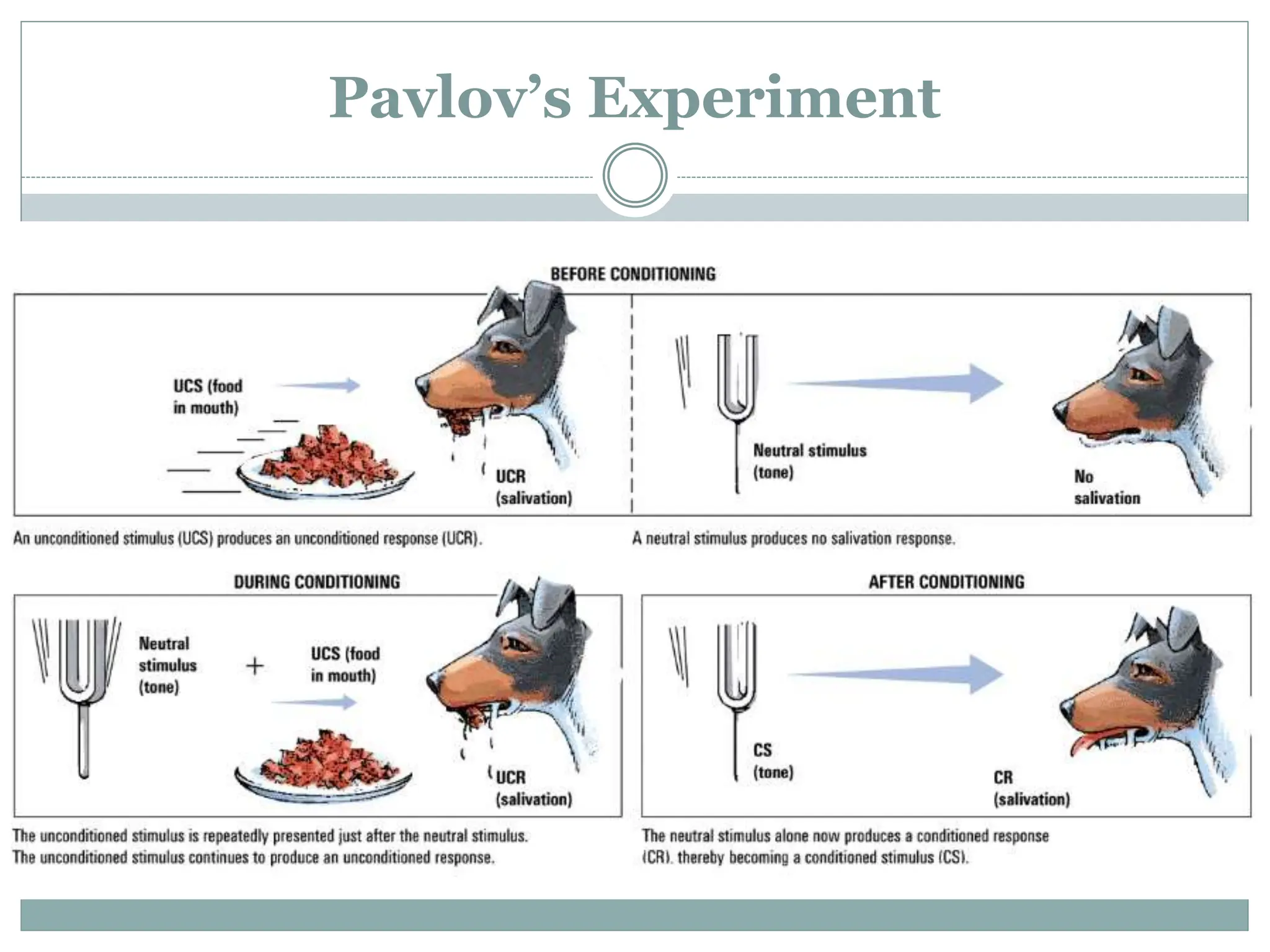

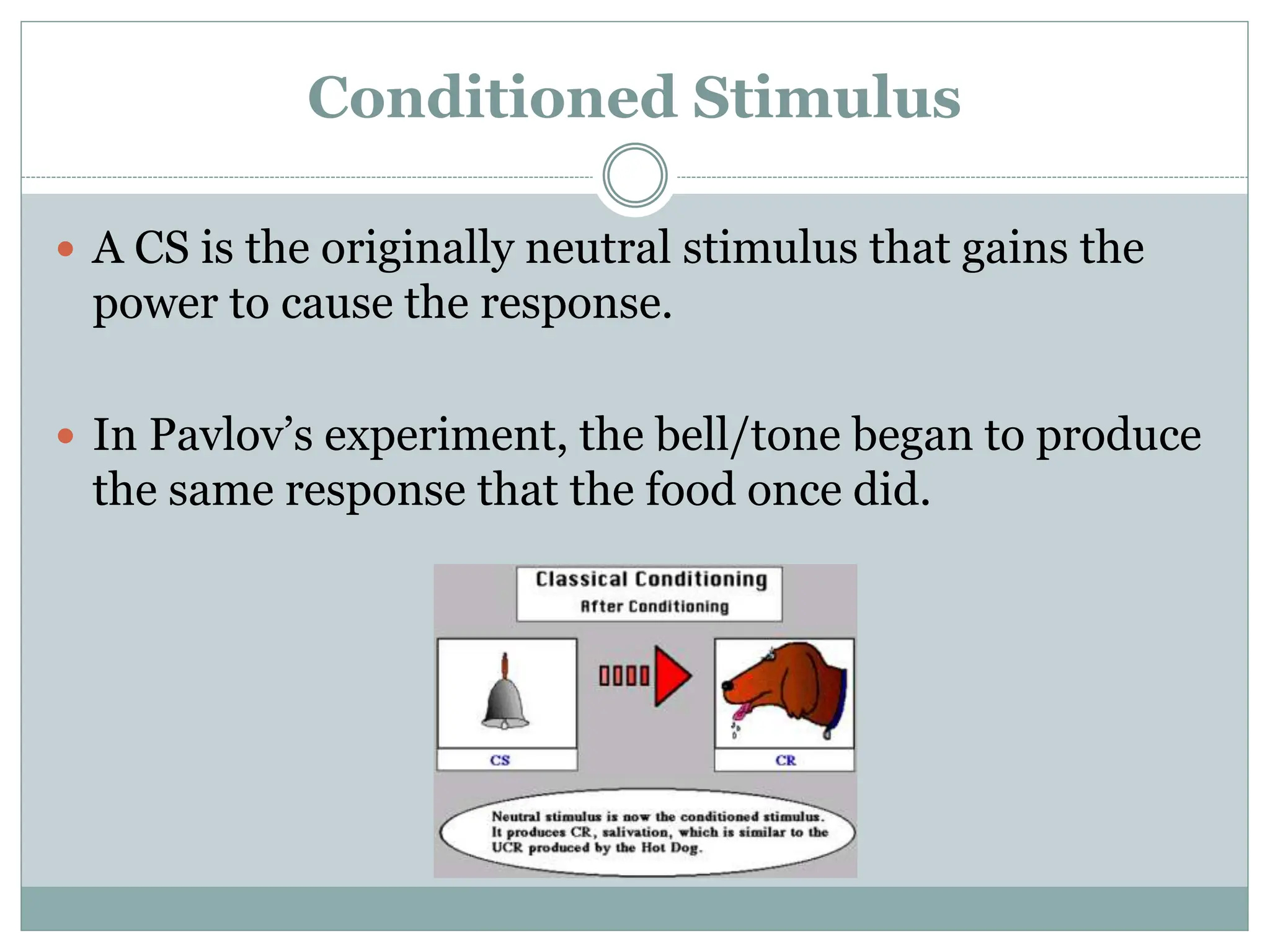

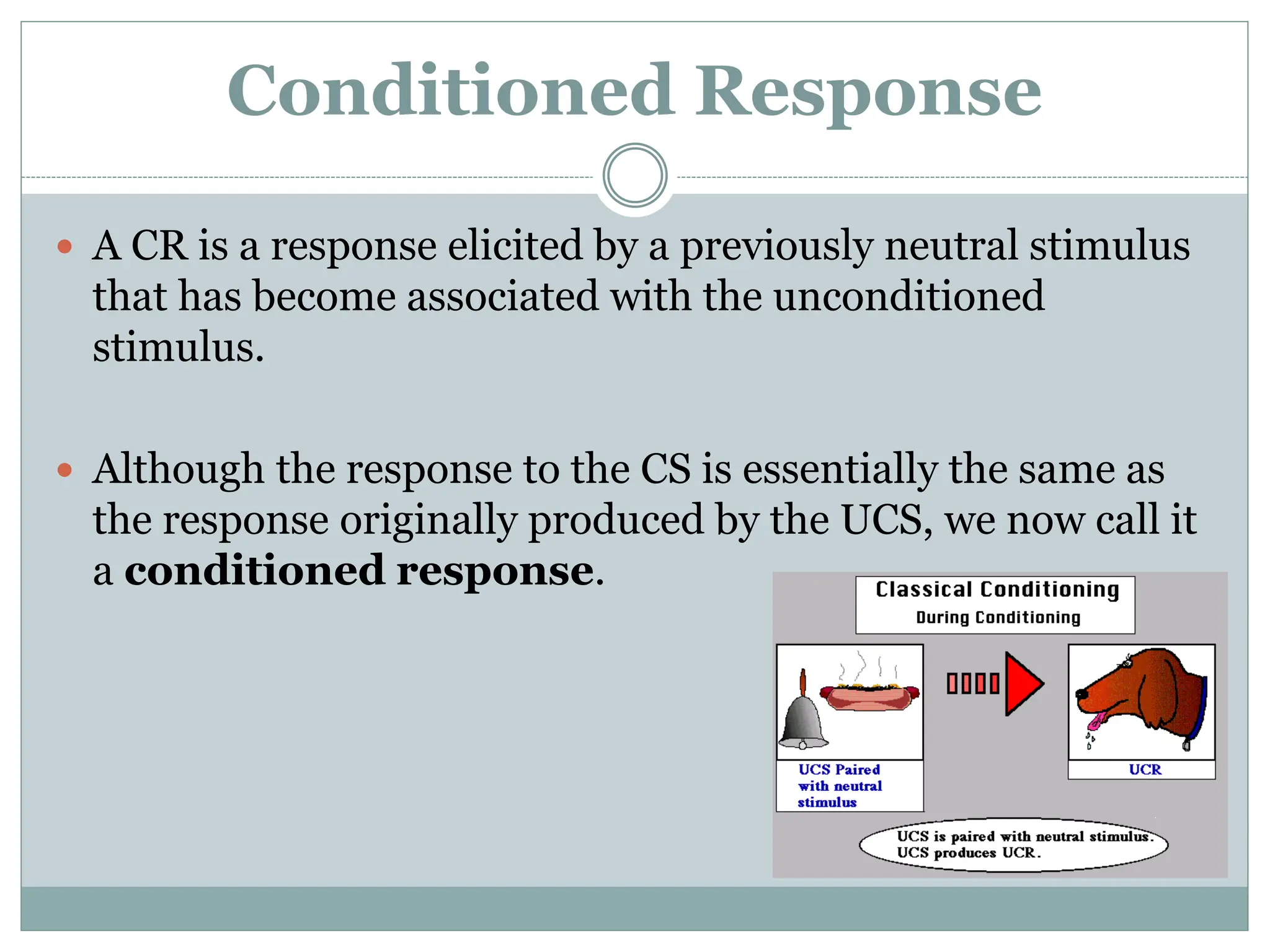

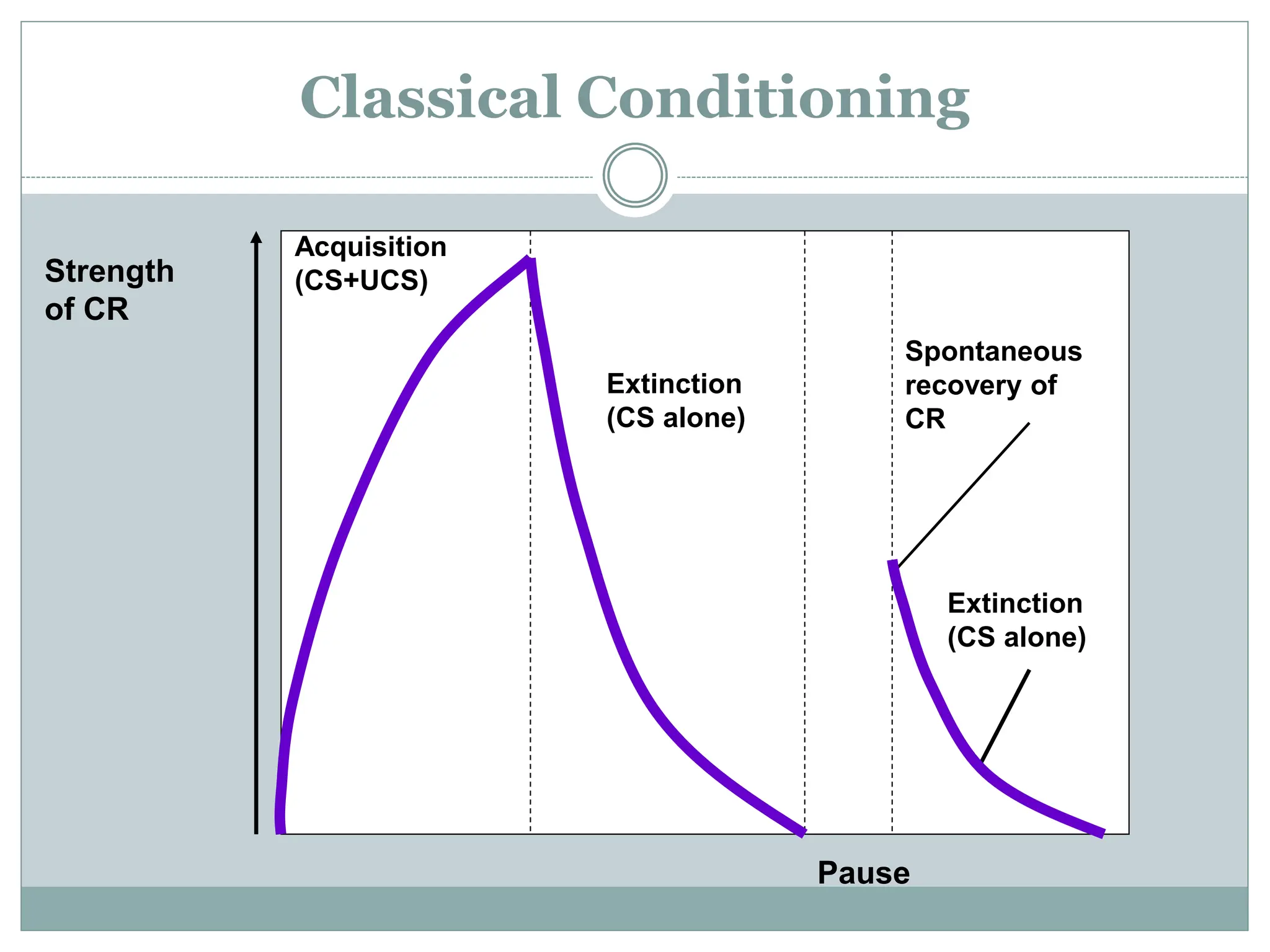

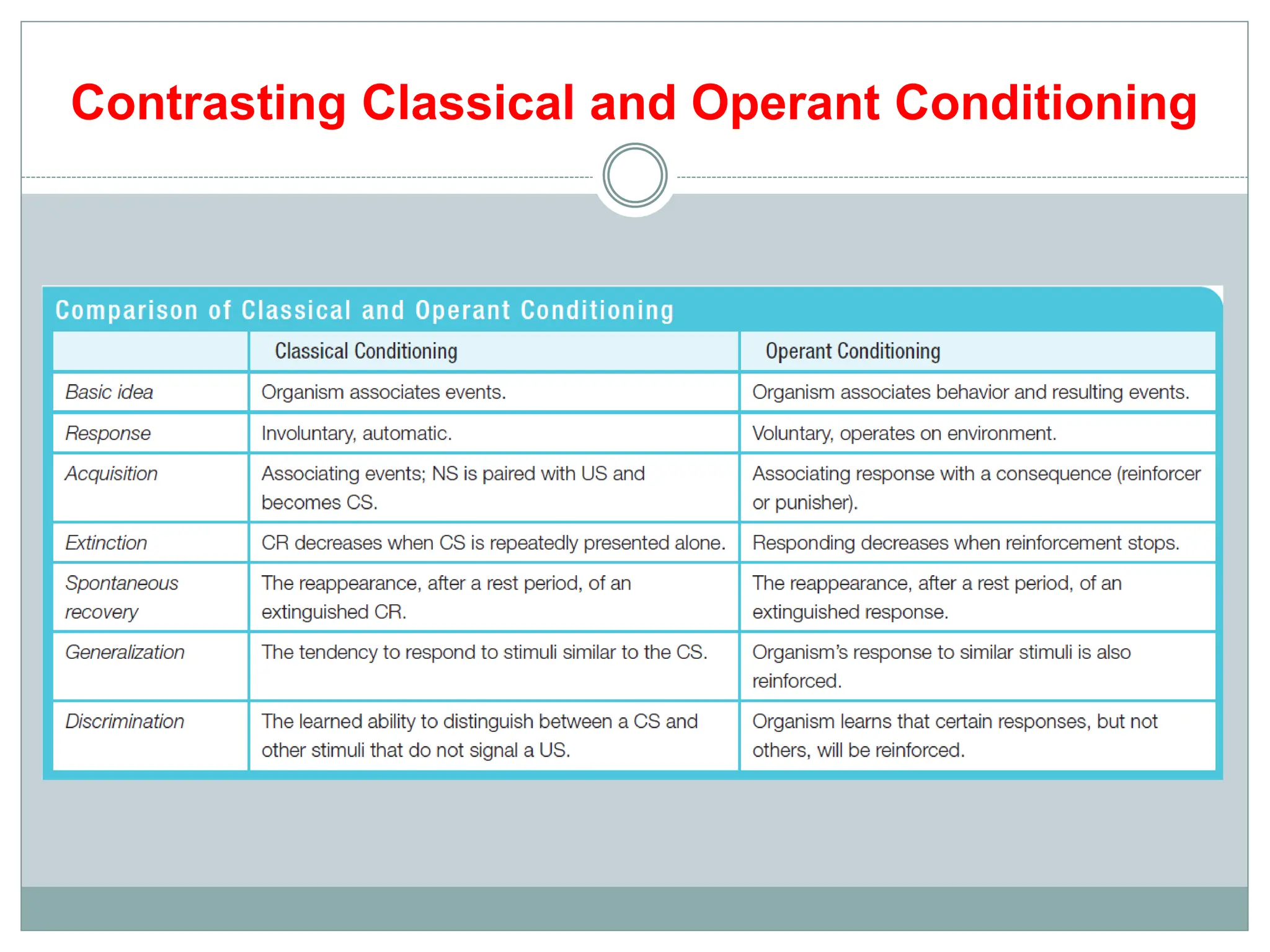

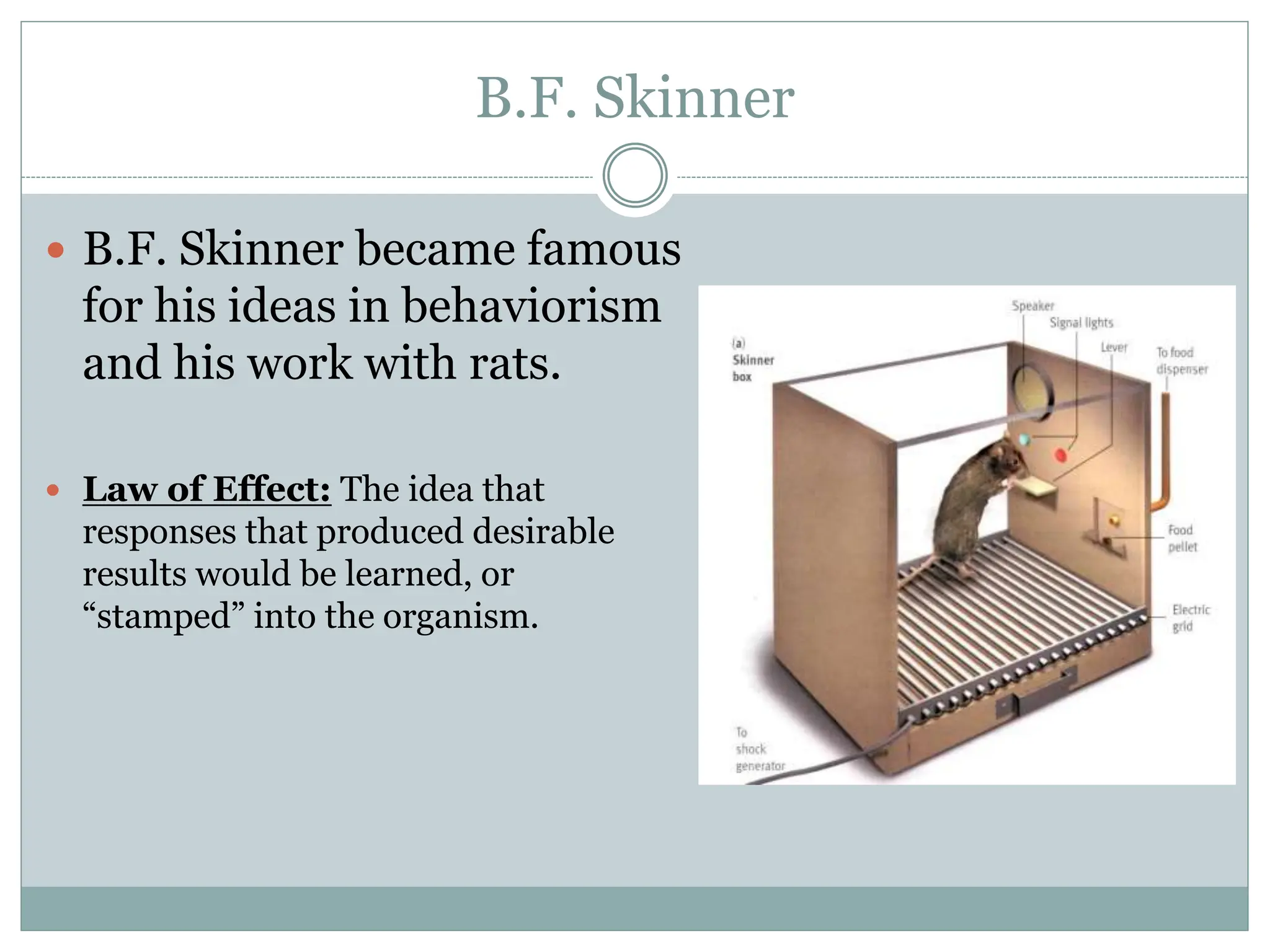

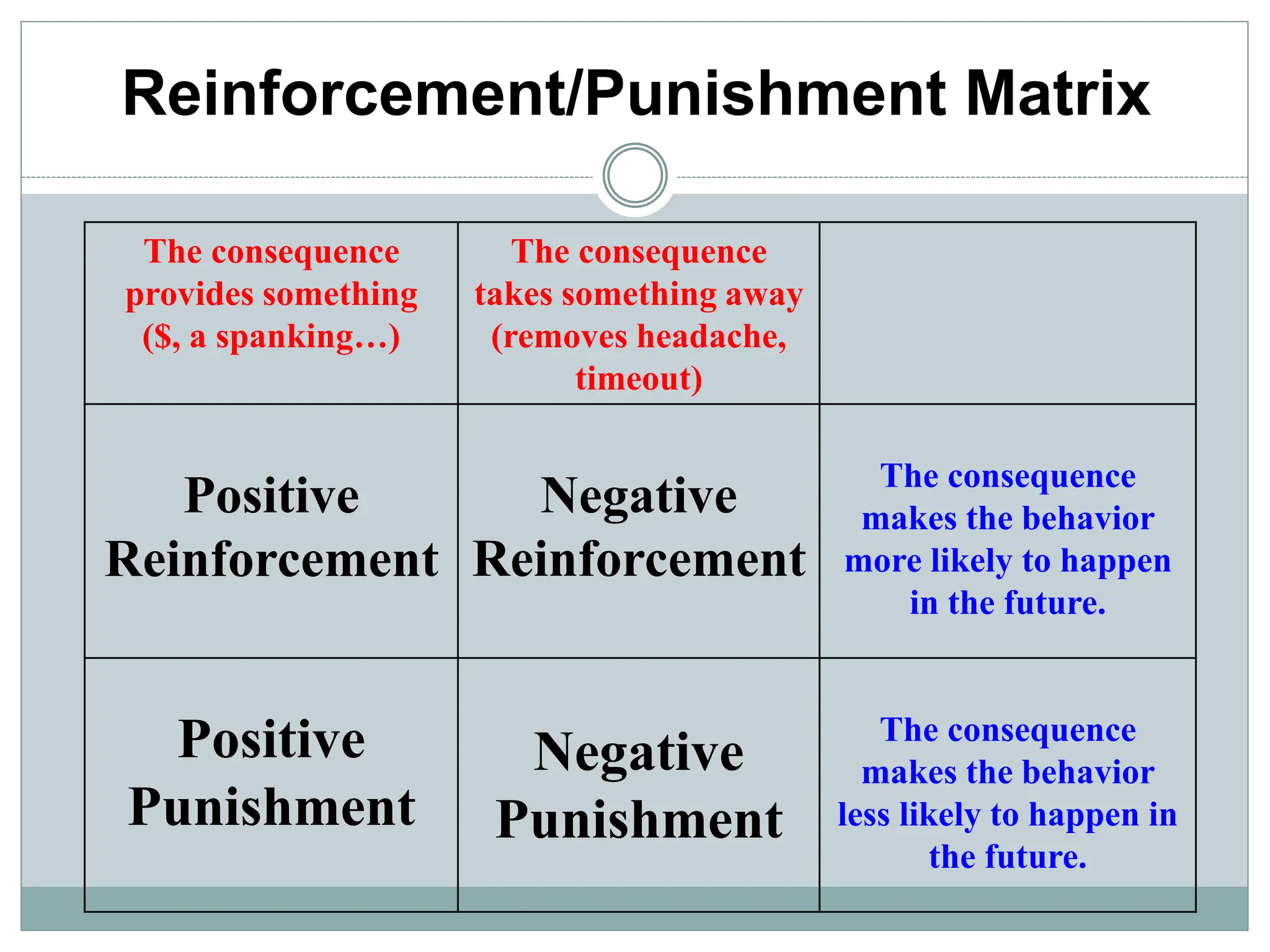

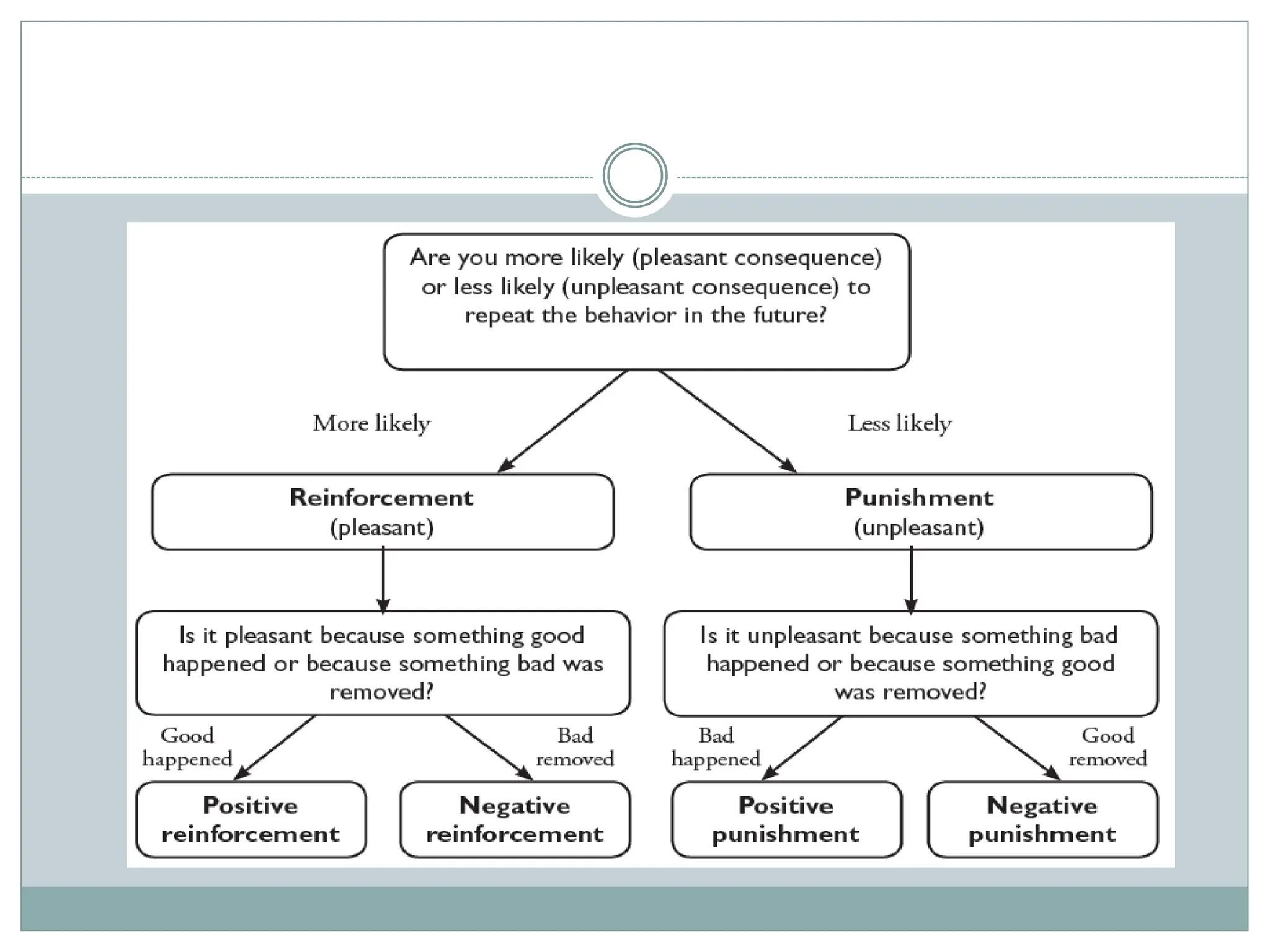

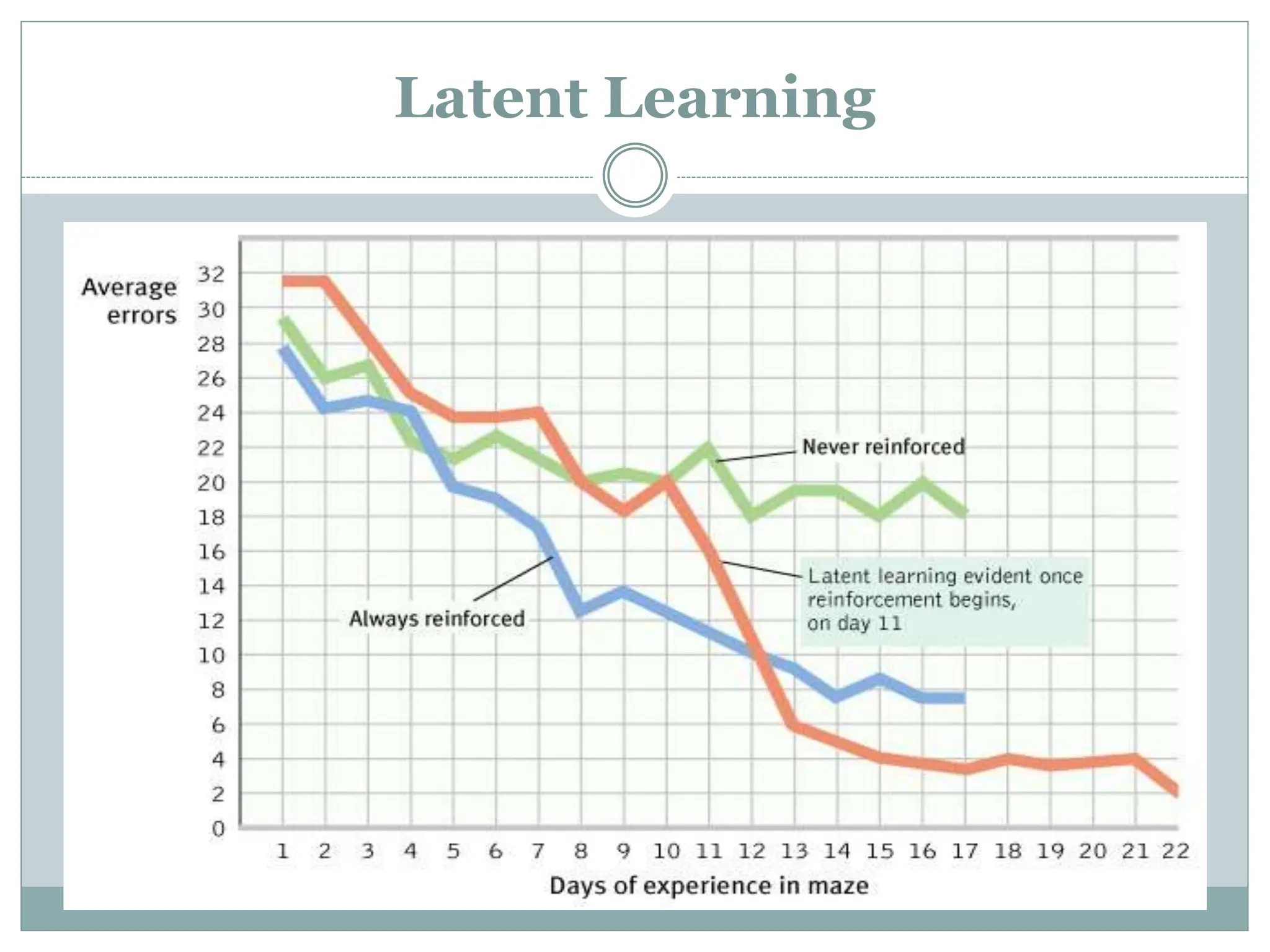

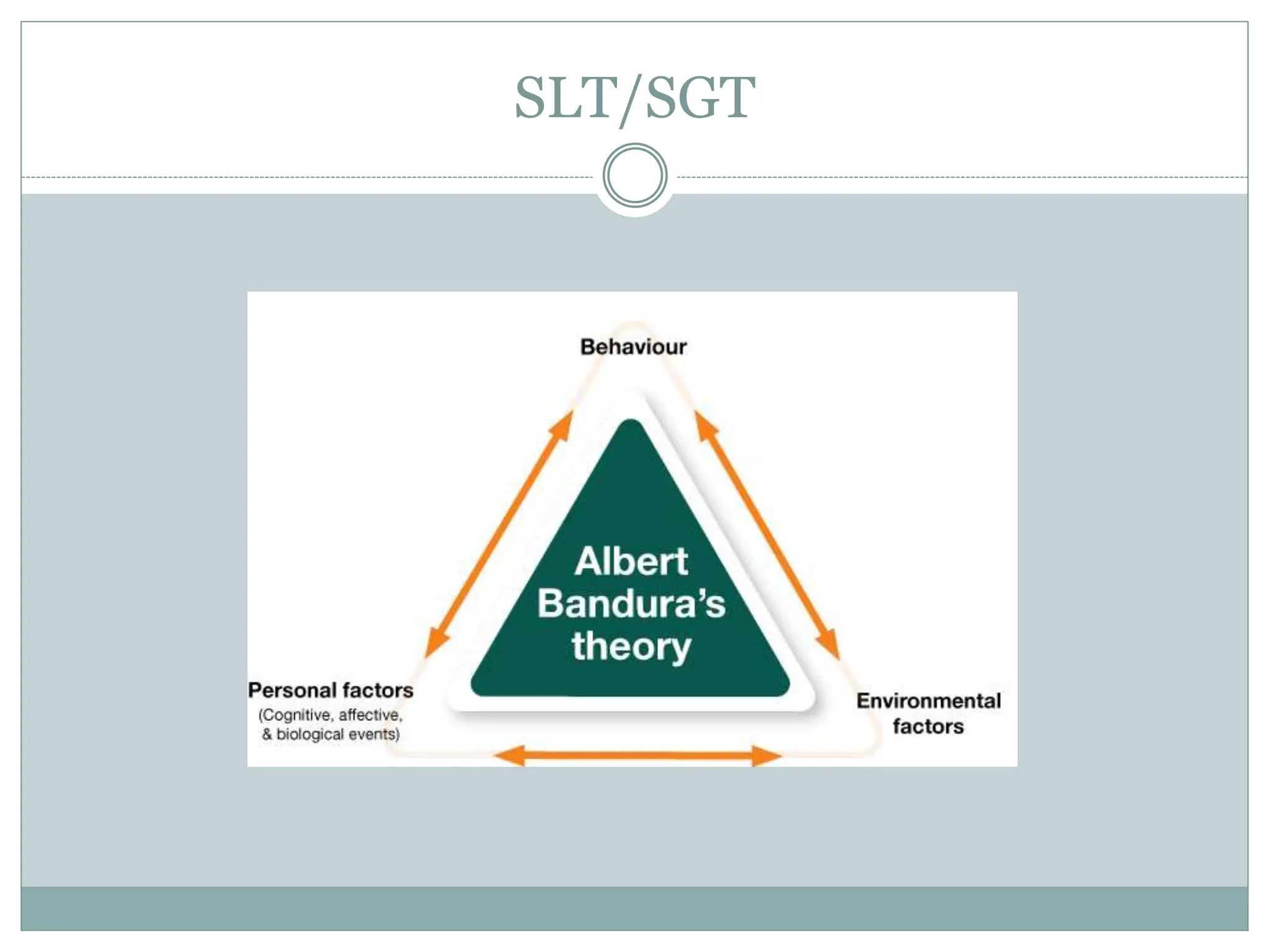

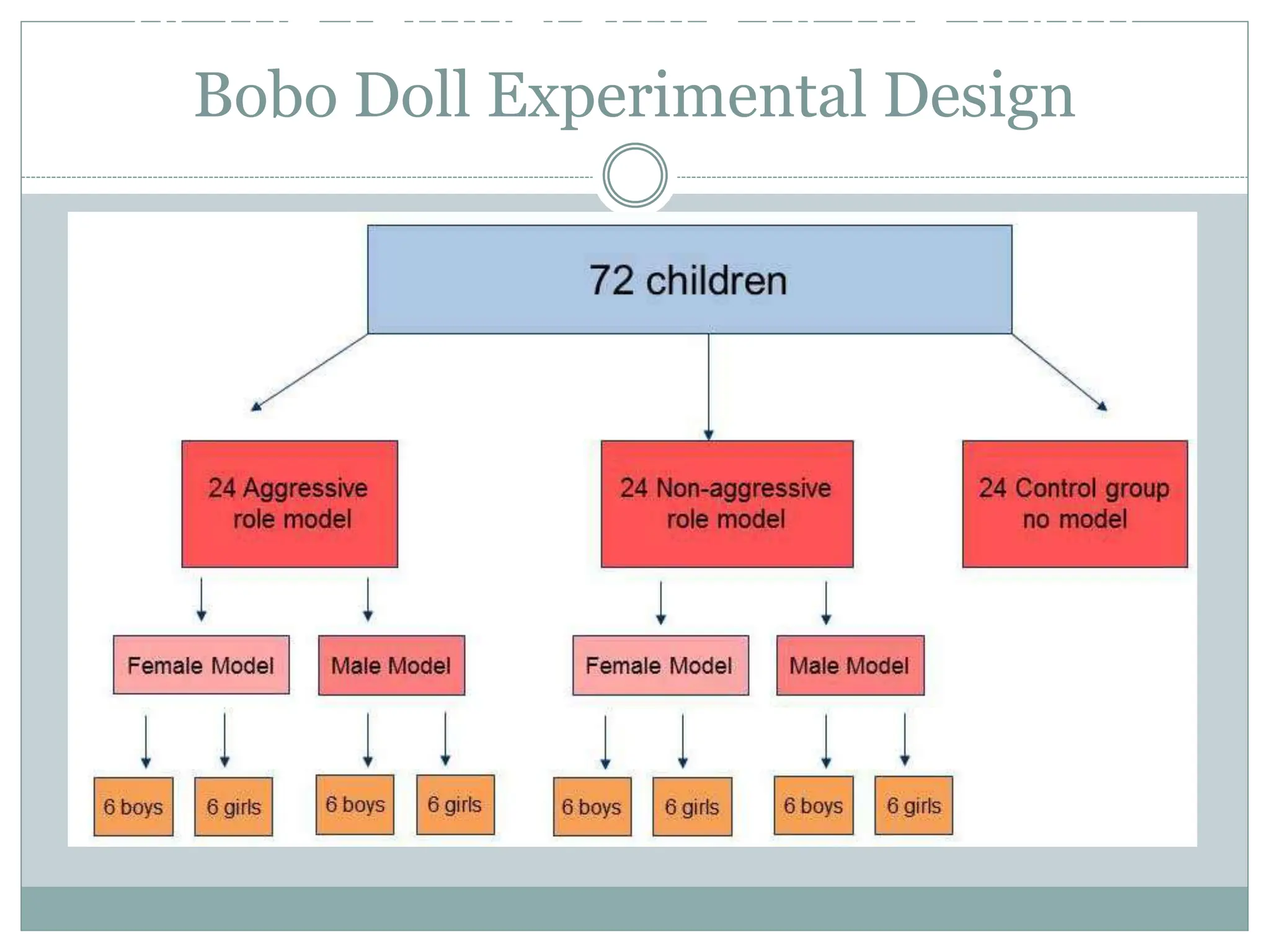

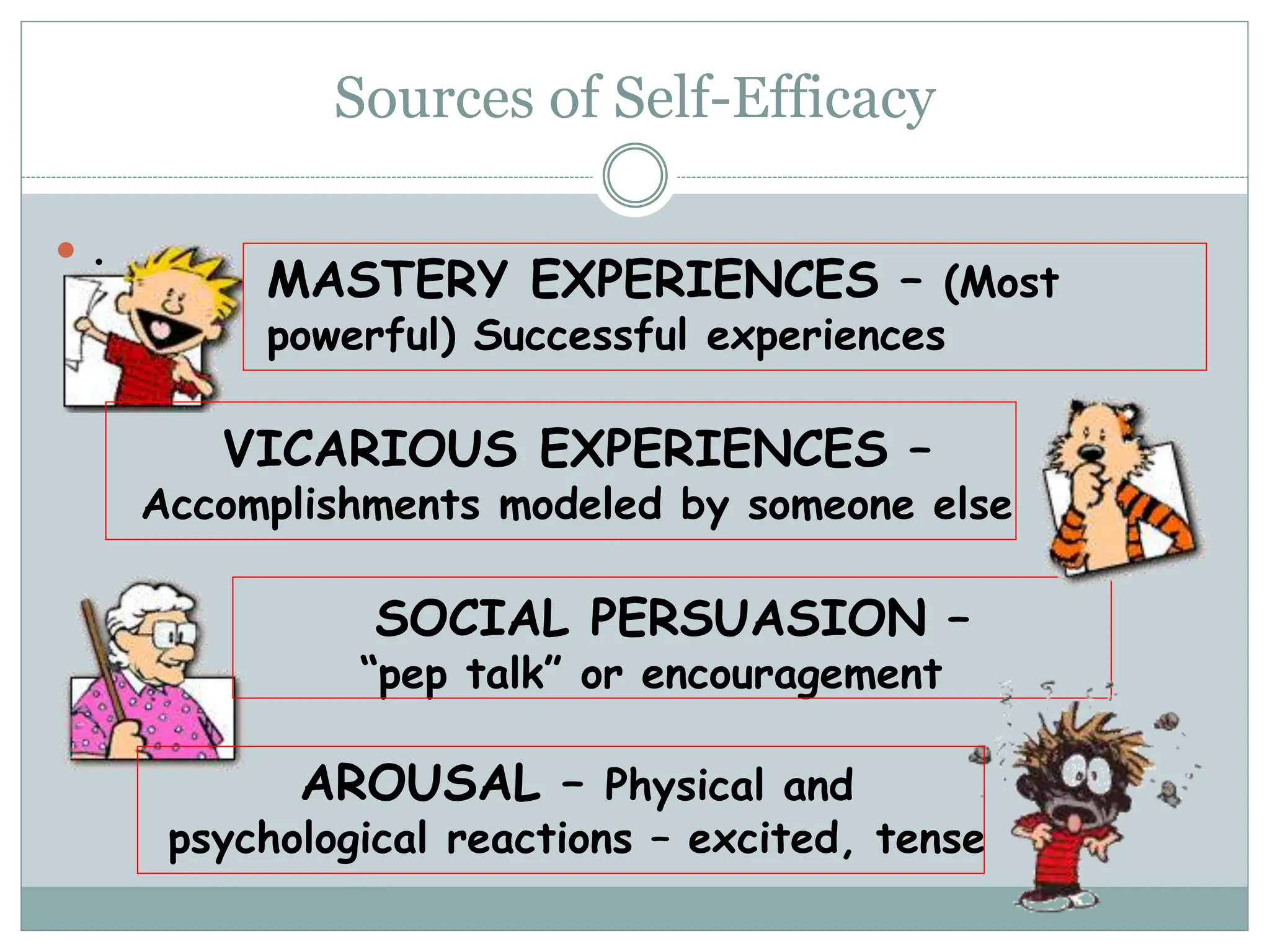

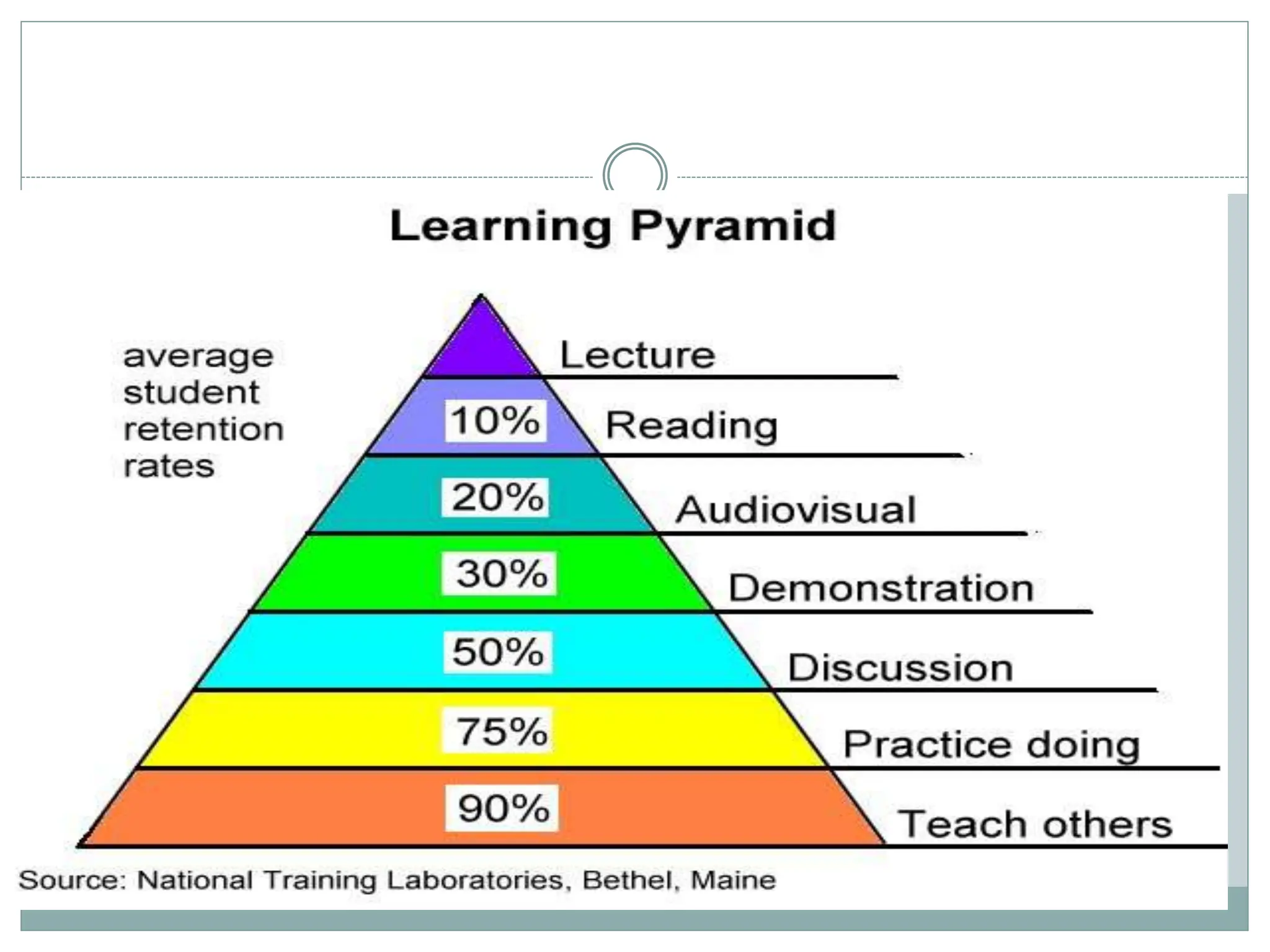

This document discusses learning theories, focusing on classical and operant conditioning, their components, and the key concepts introduced by Ivan Pavlov and B.F. Skinner. It distinguishes simple from complex learning, surveys reinforcement and punishment strategies, and covers cognitive learning processes such as latent learning and observational learning. It also emphasizes the role of self-efficacy and goal setting in learning, and illustrates how environmental factors and social interactions influence the learning process.