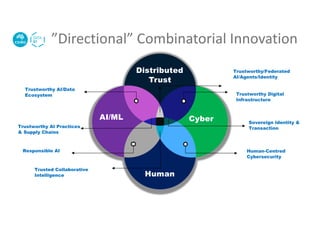

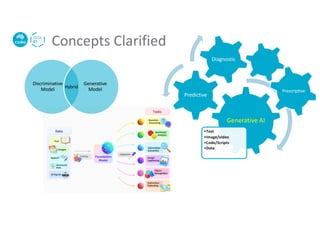

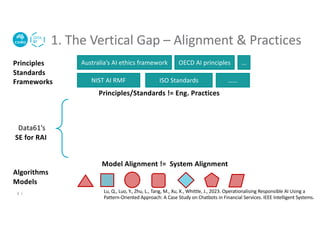

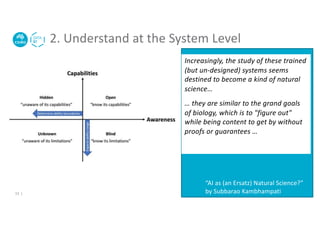

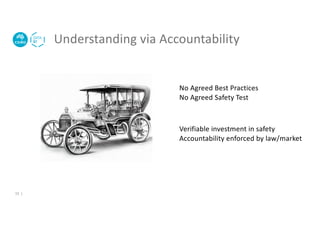

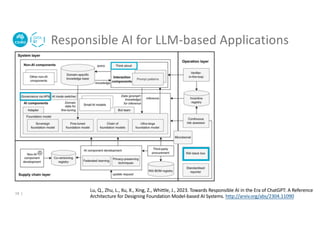

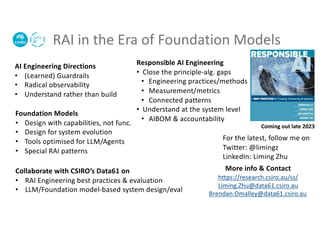

The document discusses challenges and directions for responsible AI. It outlines three gaps: 1) the need to align AI principles and standards with engineering practices; 2) the difficulty of understanding inscrutable AI models; and 3) the misalignment between AI principles and system-level behaviors. It proposes closing these gaps through engineering practices, operationalizable frameworks, and connected design patterns. It also advocates understanding AI systems through testing and accountability measures. Finally, it discusses designing foundation model-based systems around capabilities rather than functions, and ensuring that tools are optimized for, and trusted by, humans and AI agents alike.