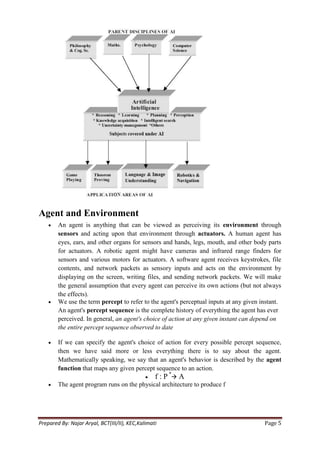

The document provides an introduction to artificial intelligence including:

- Definitions of AI as the science of making intelligent machines and duplicating human thought processes using computers.

- The goals of AI include replicating human intelligence, solving knowledge-intensive tasks, enhancing human-computer interaction, and developing intelligent agents.

- Some applications of AI are game playing, speech recognition, computer vision, expert systems, mathematical theorem proving, and scheduling/planning.

- Key issues in AI include representation of knowledge, search, inference, learning, planning, and building rational agents that can perceive environments through sensors and act through effectors.

![Fig. Agents interact with environments through sensors and actuators

Fig. Vacuum cleaner world

Percepts: location and contents, e.g., [A, Dirty]

Actions: Left, Right, Suck, NoOp

For Vacuum Cleaner Agent:

| Percept sequence | Action |
|---|---|
| [A, Clean] | Right |
| [A, Dirty] | Suck |
| [B, Clean] | Left |
| [B, Dirty] | Suck |
| [A, Clean], [A, Clean] | Right |
| [A, Clean], [A, Dirty] | Suck |
| … | … |

function Reflex-Vacuum-Agent([location, status]) returns an action

if status = Dirty then return Suck

else if location = A then return Right

else if location = B then return Left
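The reflex agent above can be sketched in Python roughly as follows. The `run` helper, the dictionary used for the world, and the `NoOp` fallback for an unknown location are assumptions added for illustration; they are not part of the original pseudocode.

```python
def reflex_vacuum_agent(percept):
    """Map the current percept (location, status) to an action."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    if location == "B":
        return "Left"
    return "NoOp"  # assumption: any other location yields NoOp


def run(world, location, steps):
    """Simulate the two-square world; `world` maps square name -> status."""
    history = []
    for _ in range(steps):
        action = reflex_vacuum_agent((location, world[location]))
        history.append(action)
        if action == "Suck":
            world[location] = "Clean"
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
    return history


actions = run({"A": "Dirty", "B": "Dirty"}, "A", 4)
```

Starting in square A with both squares dirty, the agent cleans A, moves right, cleans B, and then moves left again, because it has no memory telling it that everything is already clean.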

Rationality

Definition of Rational Agent:

For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has.

Rational ≠ omniscient (percepts may not supply all relevant information)

Rational ≠ clairvoyant (action outcomes may not be as expected)

Hence, rational ≠ successful

Prepared By: Najar Aryal, BCT(III/II), KEC,Kalimati Page 6](https://image.slidesharecdn.com/aicompletenote-120914090252-phpapp01/85/Ai-complete-note-6-320.jpg)

![function REFLEX-VACUUM-AGENT([location, status]) returns an action

if status == Dirty then return Suck

else if location == A then return Right

else if location == B then return Left

Reduction from 4^T entries (one per percept sequence of length T) to 4 entries
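The size difference can be checked with a small sketch. It assumes the four vacuum-world percepts (two locations times two statuses) and counts only percept sequences of exactly length T; the helper names are made up for this illustration.

```python
from itertools import product

# The four possible percepts in the two-square vacuum world.
PERCEPTS = [(loc, status) for loc in ("A", "B") for status in ("Clean", "Dirty")]


def sequences_of_length(t):
    """Enumerate every percept sequence of exactly length t."""
    return list(product(PERCEPTS, repeat=t))


def table_size(t):
    """Number of lookup-table rows needed for sequences of length t."""
    return len(PERCEPTS) ** t
```

For T = 3 a table-driven agent already needs 64 rows, while the reflex agent only ever needs one rule per percept, that is 4.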

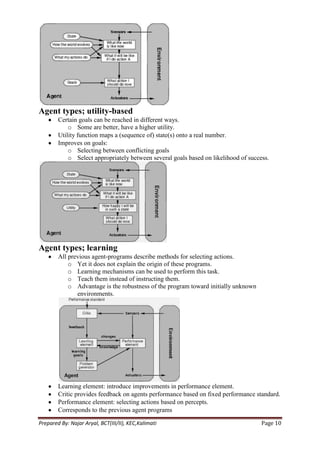

Agent types: reflex with state

To tackle partially observable environments, the agent maintains internal state.

Over time it updates this state using knowledge about the world:

- How does the world change?

- How do actions affect the world?

⇒ Model of the world
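A minimal sketch of a reflex agent with internal state, again using the vacuum world. The class name, the `model` dictionary, and the assumption that dirt never reappears once a square has been cleaned are illustrative choices, not something the notes specify.

```python
class ModelBasedVacuumAgent:
    """Reflex agent that remembers the last known status of each square."""

    def __init__(self):
        # Internal state: None means the square has not been observed yet.
        self.model = {"A": None, "B": None}

    def __call__(self, percept):
        location, status = percept
        self.model[location] = status  # update state from the new percept
        if status == "Dirty":
            # Model of how the action affects the world: Suck cleans the square.
            self.model[location] = "Clean"
            return "Suck"
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"  # nothing left to do, as far as the model knows
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
trace = [agent(("A", "Dirty")), agent(("A", "Clean")), agent(("B", "Clean"))]
```

Unlike the simple reflex agent, this one stops moving once its model says both squares are clean.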

Agent types: goal-based

The agent needs a goal to know which situations are desirable.

o Things become difficult when long sequences of actions are required to reach the goal.

Typically investigated in search and planning research.

Major difference: the future is taken into account.

A goal-based agent is more flexible since knowledge is represented explicitly and can be manipulated.
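To illustrate how a goal-based agent takes the future into account, the sketch below plans a sequence of actions with breadth-first search in the vacuum world. The state encoding `(location, status_A, status_B)`, the goal test, and the function names are assumptions made for this example.

```python
from collections import deque


def result(state, action):
    """Transition model: the state reached by doing `action` in `state`."""
    loc, a, b = state
    if action == "Left":
        return ("A", a, b)
    if action == "Right":
        return ("B", a, b)
    if action == "Suck":
        return (loc, "Clean", b) if loc == "A" else (loc, a, "Clean")
    return state


def is_goal(state):
    """Explicit goal: both squares are clean."""
    return state[1] == "Clean" and state[2] == "Clean"


def plan(start):
    """Breadth-first search for a shortest action sequence reaching the goal."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, actions = frontier.popleft()
        if is_goal(state):
            return actions
        for action in ("Left", "Right", "Suck"):
            nxt = result(state, action)
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None


steps = plan(("A", "Dirty", "Dirty"))
```

Because the goal is represented explicitly in `is_goal`, changing what the agent should achieve only requires changing that one function, not the whole action table.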

](https://image.slidesharecdn.com/aicompletenote-120914090252-phpapp01/85/Ai-complete-note-9-320.jpg)

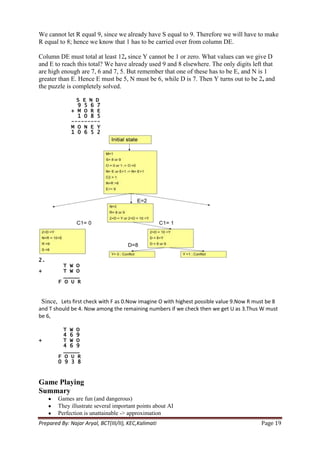

![4. Provide a description of the goal (used to check if a reached state is a goal state).

Formulating the 8-puzzle Problem

States: each represented by a 3 × 3 array of numbers in [0 . . . 8], where value 0 is for the empty cell.

Operators: 24 operators of the form Op(r,c,d) where r, c ∈ {1, 2, 3}, d ∈ {L,R,U,D}.

Op(r,c,d) moves the empty space at position (r, c) in the direction d.

Example: Op(3,2,R)

This formulation has 24 operators, which is 20 too many: four operators that move the empty cell L, R, U, or D from wherever it currently is are enough.
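The two formulations can be compared with a short sketch. It assumes a state is a tuple of three row tuples with 0 for the empty cell, and it uses 0-based indices rather than the 1-based (r, c) of Op(r,c,d); all function names are illustrative.

```python
# Direction -> (row offset, column offset) for the empty cell.
MOVES = {"L": (0, -1), "R": (0, 1), "U": (-1, 0), "D": (1, 0)}


def find_blank(state):
    """Return (row, col) of the empty cell, which is stored as 0."""
    for r, row in enumerate(state):
        for c, value in enumerate(row):
            if value == 0:
                return r, c
    raise ValueError("state has no empty cell")


def apply_move(state, direction):
    """Move the empty cell; return None if the move would leave the board."""
    r, c = find_blank(state)
    dr, dc = MOVES[direction]
    nr, nc = r + dr, c + dc
    if not (0 <= nr < 3 and 0 <= nc < 3):
        return None
    grid = [list(row) for row in state]
    grid[r][c], grid[nr][nc] = grid[nr][nc], grid[r][c]
    return tuple(tuple(row) for row in grid)


def count_positional_operators():
    """Count the (r, c, d) triples whose move stays on the 3 x 3 board."""
    return sum(
        1
        for r in range(3)
        for c in range(3)
        for dr, dc in MOVES.values()
        if 0 <= r + dr < 3 and 0 <= c + dc < 3
    )
```

Counting the position-specific moves that stay on the board gives 24 (corners allow 2 moves, edge cells 3, the centre 4), whereas `apply_move` needs only the four entries in `MOVES`.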

Problem types

Deterministic, fully observable ⇒ single-state problem

o Agent knows exactly which state it will be in; solution is a sequence.

Partial knowledge of states and actions:

o Non-observable ⇒ sensorless or conformant problem

Agent may have no idea where it is; solution (if any) is a sequence.

o Nondeterministic and/or partially observable ⇒ contingency problem

Percepts provide new information about current state; solution is a tree or policy; often interleave search and execution.

If uncertainty is caused by actions of another agent: adversarial problem

o Unknown state space ⇒ exploration problem ("online")

When states and actions of the environment are unknown.
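For the sensorless case, a solution can still be a fixed sequence because the agent can reason over the set of states it might be in. The sketch below assumes the deterministic two-square vacuum world as the example domain; the belief-state representation (a Python set of states) and the function names are illustrative.

```python
from itertools import product


def result(state, action):
    """Deterministic transition model for the two-square vacuum world."""
    loc, a, b = state
    if action == "Left":
        return ("A", a, b)
    if action == "Right":
        return ("B", a, b)
    if action == "Suck":
        return (loc, "Clean", b) if loc == "A" else (loc, a, "Clean")
    return state


def predict(belief, action):
    """Belief state after `action`: apply it to every state still possible."""
    return {result(s, action) for s in belief}


# With no sensors, the agent starts out believing any of the 8 states is possible.
belief = set(product("AB", ("Clean", "Dirty"), ("Clean", "Dirty")))
sizes = [len(belief)]
for action in ["Right", "Suck", "Left", "Suck"]:
    belief = predict(belief, action)
    sizes.append(len(belief))
```

Each action shrinks the set of possible states, and after the whole sequence the agent knows it is in square A with both squares clean, without ever having perceived anything.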

](https://image.slidesharecdn.com/aicompletenote-120914090252-phpapp01/85/Ai-complete-note-16-320.jpg)

![• Conjunction, disjunction, conditional and biconditional attach two sentences together

– It is raining and it is cold R ∙ C

– If it rains then it pours R⊃P

4.1.5. Well-Formed Formulae

Rules for WFF

1. A sentence letter by itself is a WFF

A B Z

2. The result of putting ~ immediately in front of a WFF is a WFF

A B B (A B) ( C D)

3. The result of putting ∙, ∨, ⊃, or ≡ between two WFFs and surrounding the whole thing with parentheses is a WFF

(A B) ( C D) (( C D) (E (F G)))

4. Outside parentheses may be dropped

A B C D ( C D) (E (F G))

A sentence that can be constructed by applying the rules for constructing WFFs one at a time is a WFF.

A sentence which can't be so constructed is not a WFF.

– Atomic sentences are wffs:

Propositional symbol (atom)

Examples: P, Q, R, BlockIsRed, SeasonIsWinter

– Complex or compound wffs.

Given wffs w1 and w2:

¬w1 (negation)

(w1 ∧ w2) (conjunction)

(w1 ∨ w2) (disjunction)

(w1 → w2) (implication; w1 is the antecedent; w2 is the consequent)

(w1 ↔ w2) (biconditional)

4.1.6. Tautology

If a wff is True under all the interpretations of its constituent atoms, we say that the wff is valid, or that it is a tautology.

Examples:

1 P P 2 (P P) 3 [P (Q P)] 4 [(P Q) P) P]

An inconsistent sentence or contradiction is a sentence that is False under all interpretations. The world is never like what it describes, as in "It's raining and it's not raining."
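Both definitions can be checked mechanically by enumerating every interpretation. In the sketch below a formula is written as a Python function of its atoms; the helper names and the sample formulas are chosen for illustration and are not claimed to be the examples listed in the notes.

```python
from itertools import product


def implies(a, b):
    """Material implication: false only when a is true and b is false."""
    return (not a) or b


def truth_values(formula, n_atoms):
    """Evaluate `formula` under every assignment of True/False to its atoms."""
    return [formula(*vals) for vals in product([True, False], repeat=n_atoms)]


def is_tautology(formula, n_atoms):
    return all(truth_values(formula, n_atoms))


def is_contradiction(formula, n_atoms):
    return not any(truth_values(formula, n_atoms))


always_true = is_tautology(lambda p, q: implies(p, implies(q, p)), 2)
never_true = is_contradiction(lambda r: r and not r, 1)  # "raining and not raining"
```

A formula with n atoms has 2^n interpretations, so this brute-force check is only practical for small formulas.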

4.1.7. Validity

An argument is a sequence of propositions. The final proposition is called the conclusion of the argument, while the other propositions are called the premises or hypotheses of the argument.

An argument is valid whenever the truth of all its premises implies the truth of its conclusion.

One can use the rules of inference to show the validity of an argument.

Note that the premises p1, p2, … and the conclusion q are generally compound propositions or wffs.
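For small propositional arguments, validity can also be tested directly from the definition by searching for an interpretation that makes every premise true and the conclusion false. This is a sketch with made-up helper names; it is a truth-table check, not the rules-of-inference method mentioned above.

```python
from itertools import product


def is_valid(premises, conclusion, n_atoms):
    """True if no assignment makes all premises true and the conclusion false."""
    for vals in product([True, False], repeat=n_atoms):
        if all(p(*vals) for p in premises) and not conclusion(*vals):
            return False  # found a counterexample
    return True


def p_implies_q(p, q):
    return (not p) or q


# Modus ponens: from p -> q and p, conclude q.
modus_ponens = is_valid([p_implies_q, lambda p, q: p], lambda p, q: q, 2)

# Affirming the consequent: from p -> q and q, conclude p.
affirming_consequent = is_valid([p_implies_q, lambda p, q: q], lambda p, q: p, 2)
```

Modus ponens passes the check, while affirming the consequent fails because p false and q true satisfies both premises but not the conclusion.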

4.2.

Intelligent agents should have capacity for:

](https://image.slidesharecdn.com/aicompletenote-120914090252-phpapp01/85/Ai-complete-note-34-320.jpg)

![ Clausal form represents the logical database as a set of disjunctions of literals

Resolution is applied to two clauses when one contains a literal and the other its negation

The substitutions used to produce the empty clause are those under which the opposite of the negated goal is true

If these literals contain variables, they must be unified to make them equivalent

A new clause is then produced consisting of the disjunction of all the predicates in the two clauses minus the literal and its negative instance (which are said to have been "resolved away")

Example:

We wish to prove that "Fido will die" from the statements that "Fido is a dog", "all dogs are animals", and "all animals will die".

Convert these predicates to clause form:

| Predicate form | Clause form |
|---|---|
| ∀x: [dog(x) → animal(x)] | ¬dog(x) ∨ animal(x) |
| dog(fido) | dog(fido) |
| ∀y: [animal(y) → die(y)] | ¬animal(y) ∨ die(y) |

Apply Resolution
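The refutation for the Fido example can be sketched as follows. This is a deliberately small resolution prover that assumes every predicate takes exactly one argument and that variables are written with a leading `?`; the literal encoding `(is_positive, predicate, argument)` and all function names are illustrative, not a general first-order implementation.

```python
def is_var(term):
    return term.startswith("?")


def unify(a, b):
    """Unify two terms (variable or constant); return a substitution or None."""
    if a == b:
        return {}
    if is_var(a):
        return {a: b}
    if is_var(b):
        return {b: a}
    return None  # two different constants cannot be unified


def substitute(clause, theta):
    return frozenset((sign, pred, theta.get(arg, arg)) for sign, pred, arg in clause)


def resolve(c1, c2):
    """Yield every resolvent of two clauses of unary literals."""
    for s1, p1, a1 in c1:
        for s2, p2, a2 in c2:
            if p1 == p2 and s1 != s2:
                theta = unify(a1, a2)
                if theta is not None:
                    rest = (c1 - {(s1, p1, a1)}) | (c2 - {(s2, p2, a2)})
                    yield substitute(rest, theta)


def refute(clauses):
    """Saturate by resolution; True if the empty clause is derived."""
    known = set(clauses)
    while True:
        new = set()
        for c1 in known:
            for c2 in known:
                for r in resolve(c1, c2):
                    if not r:
                        return True  # empty clause: contradiction found
                    new.add(r)
        if new <= known:
            return False  # nothing new can be derived
        known |= new


KB = [
    frozenset({(False, "dog", "?x"), (True, "animal", "?x")}),
    frozenset({(True, "dog", "fido")}),
    frozenset({(False, "animal", "?y"), (True, "die", "?y")}),
]
NEGATED_GOAL = frozenset({(False, "die", "fido")})
proved = refute(KB + [NEGATED_GOAL])
```

Adding the negated goal ¬die(fido) to the three clauses lets resolution derive the empty clause, so die(fido) follows; the three clauses on their own are consistent and produce no empty clause.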

Q.1. Anyone passing the Artificial Intelligence exam and winning the lottery is happy. But anyone who studies or is lucky can pass all their exams. Ali did not study but he is lucky. Anyone who is lucky wins the lottery. Is Ali happy?

Anyone passing the AI exam and winning the lottery is happy

∀x: [pass(x, AI) ∧ win(x, lottery) → happy(x)]

Anyone who studies or is lucky can pass all their exams

∀x ∀y: [study(x) ∨ lucky(x) → pass(x, y)]

Ali did not study but he is lucky

¬study(ali) ∧ lucky(ali)

Anyone who is lucky wins the lottery

∀x: [lucky(x) → win(x, lottery)]

Change to clausal form

1. ¬pass(X, AI) ∨ ¬win(X, lottery) ∨ happy(X)

2. ¬study(Y) ∨ pass(Y, Z)

3. ¬lucky(W) ∨ pass(W, V)

](https://image.slidesharecdn.com/aicompletenote-120914090252-phpapp01/85/Ai-complete-note-45-320.jpg)
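One way to check the answer to Q.1 is a ground (propositional) resolution refutation. The sketch below is an assumption-laden simplification: every variable is instantiated by hand with ali, AI, and lottery; the clauses beyond the three listed above are derived here from the predicate forms rather than taken from the notes; and the negated goal ¬happy(ali) is added for the refutation. Literals are plain strings with `~` marking negation.

```python
def negate(lit):
    return lit[1:] if lit.startswith("~") else "~" + lit


def resolvents(c1, c2):
    """All clauses obtained by resolving c1 and c2 on one complementary literal."""
    return [(c1 - {lit}) | (c2 - {negate(lit)}) for lit in c1 if negate(lit) in c2]


def refute(clauses):
    """Saturate by resolution; True if the empty clause is derived."""
    known = {frozenset(c) for c in clauses}
    while True:
        new = {frozenset(r) for a in known for b in known for r in resolvents(a, b)}
        if frozenset() in new:
            return True
        if new <= known:
            return False
        known |= new


# Hand-instantiated clauses (assumption: x = ali, y = AI).
FACTS = [
    {"~pass(ali,AI)", "~win(ali,lottery)", "happy(ali)"},
    {"~study(ali)", "pass(ali,AI)"},
    {"~lucky(ali)", "pass(ali,AI)"},
    {"~study(ali)"},
    {"lucky(ali)"},
    {"~lucky(ali)", "win(ali,lottery)"},
]
NEGATED_GOAL = {"~happy(ali)"}
ali_is_happy = refute(FACTS + [NEGATED_GOAL])
```

Under these assumptions the empty clause is derivable once ¬happy(ali) is added, so happy(ali) follows from the statements; without the negated goal the clause set is consistent.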