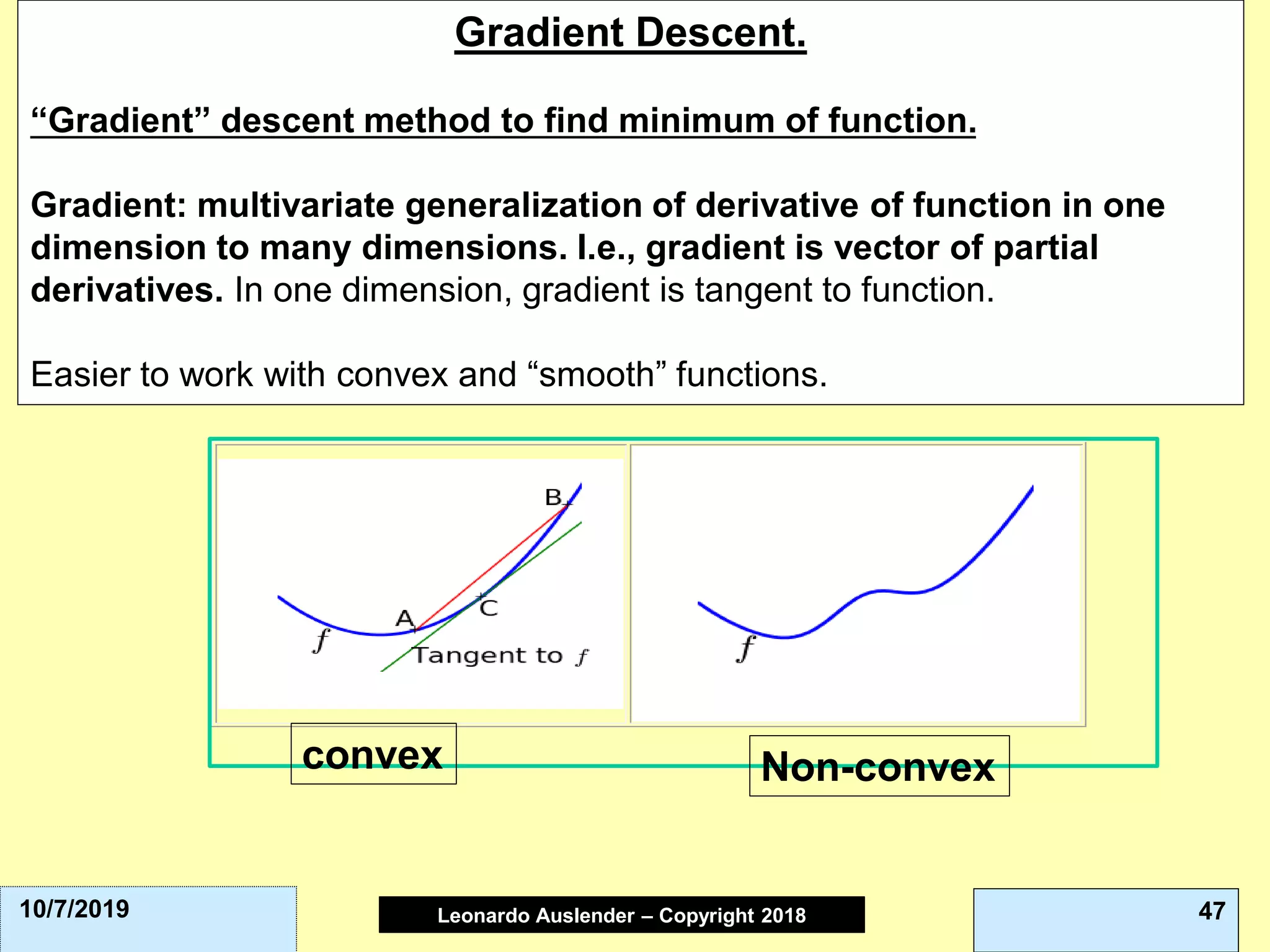

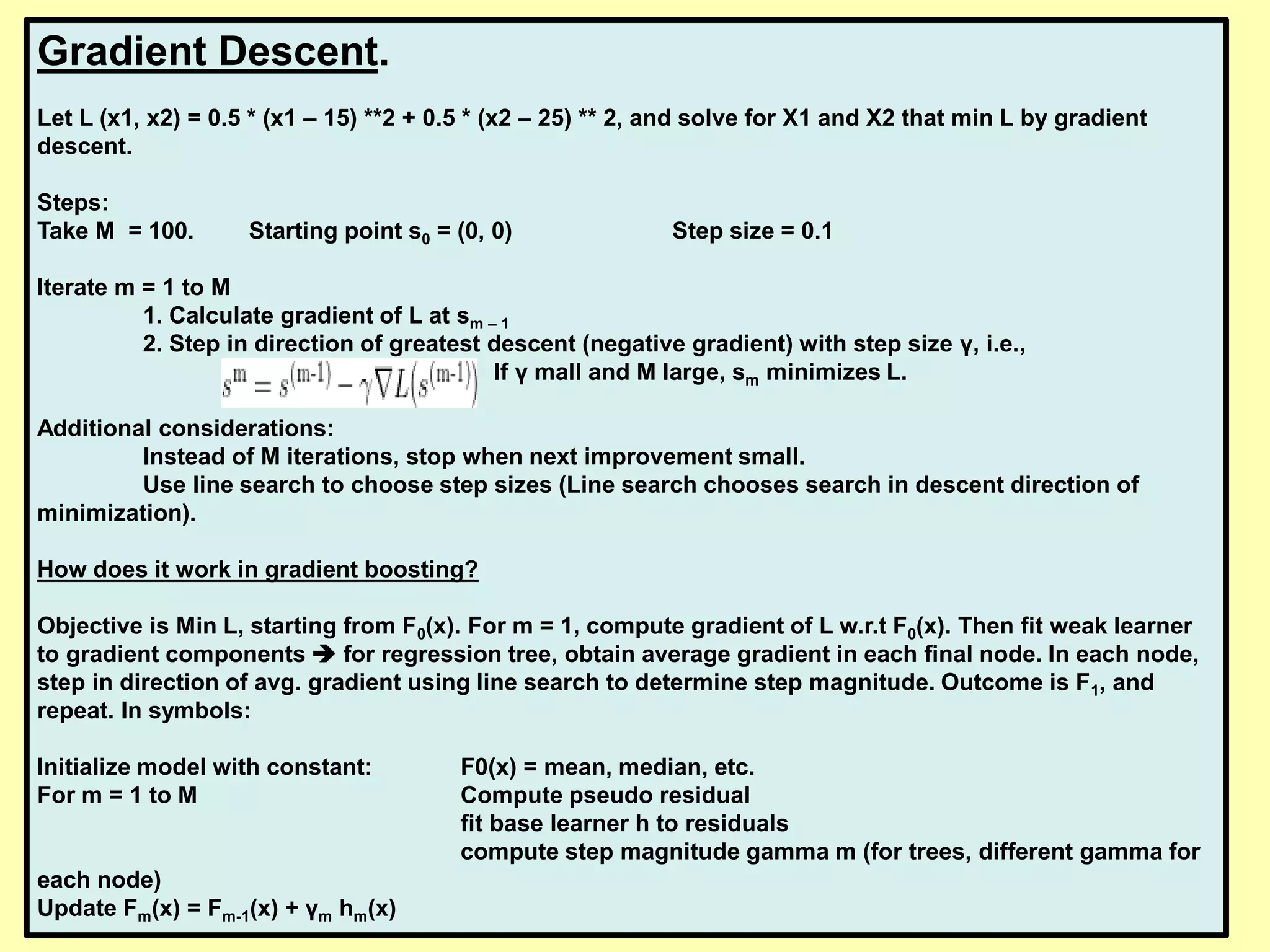

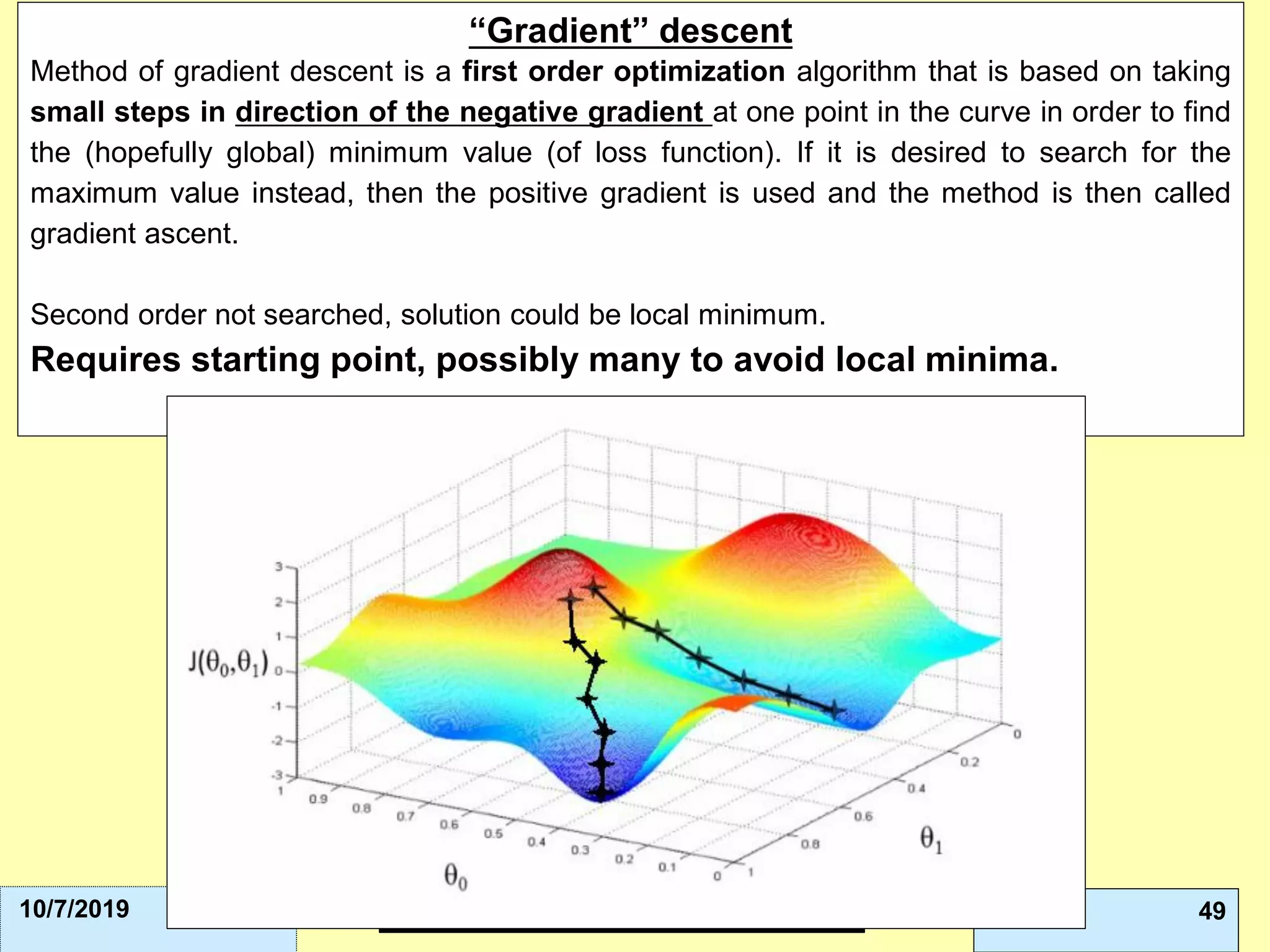

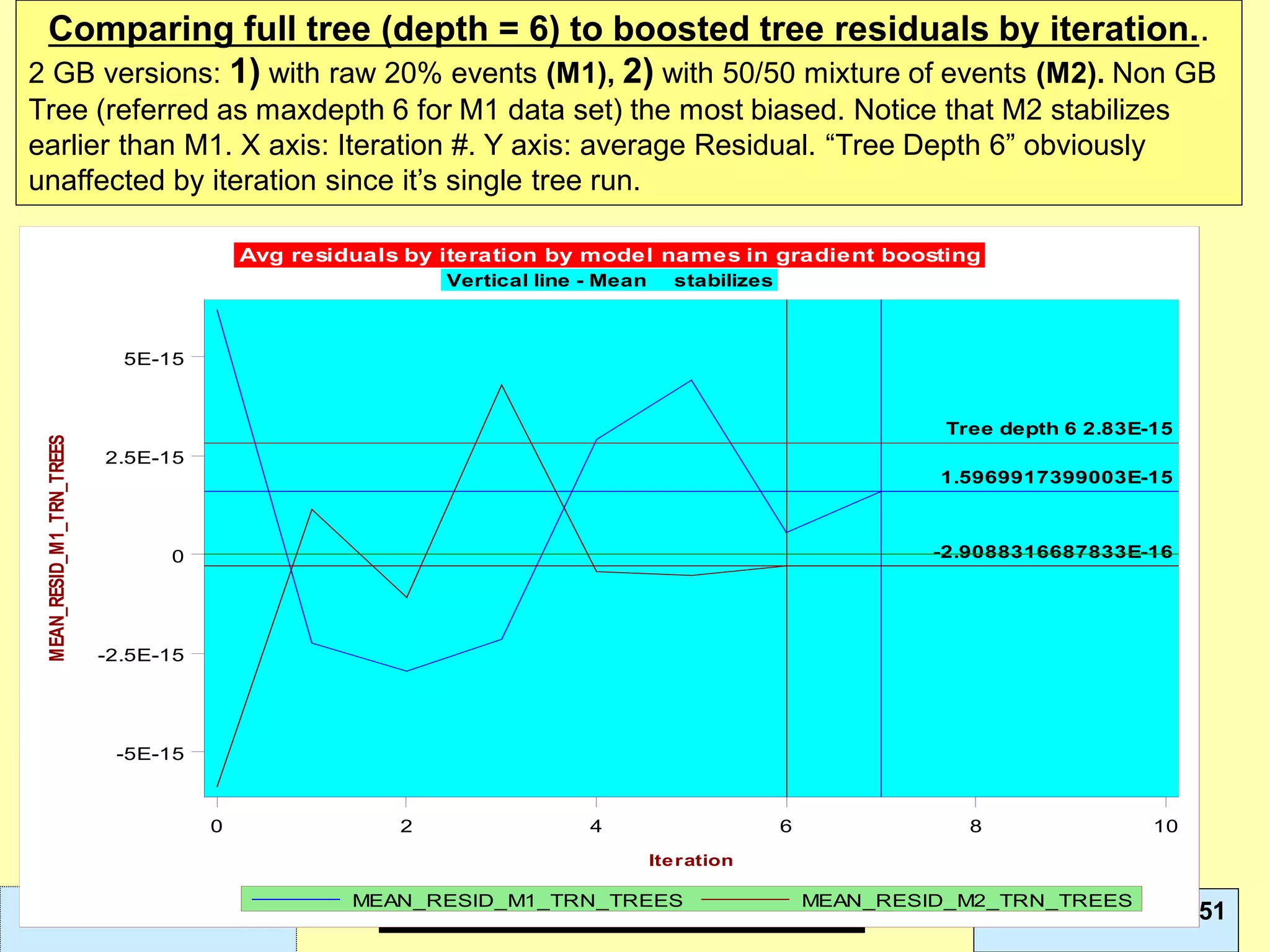

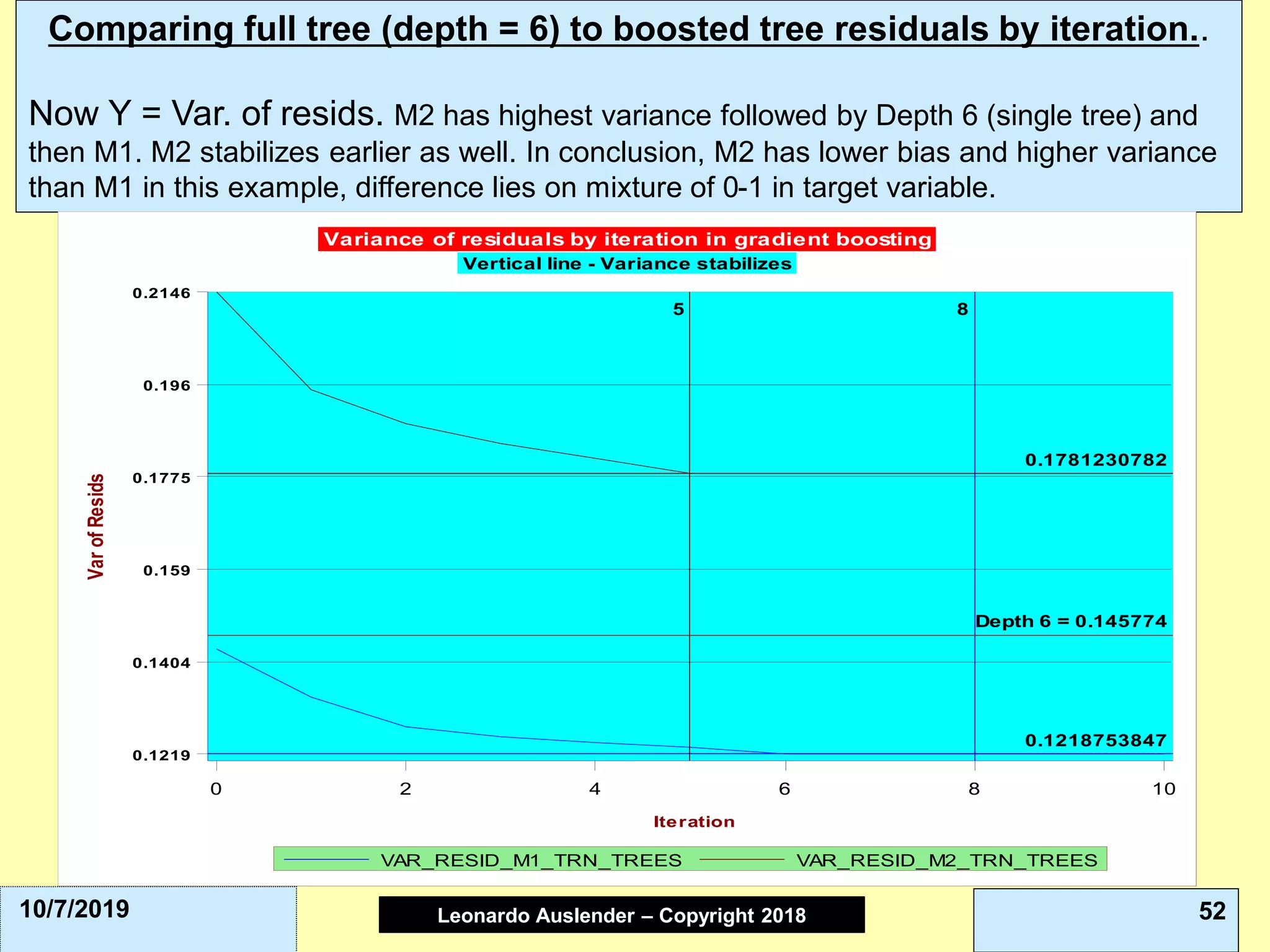

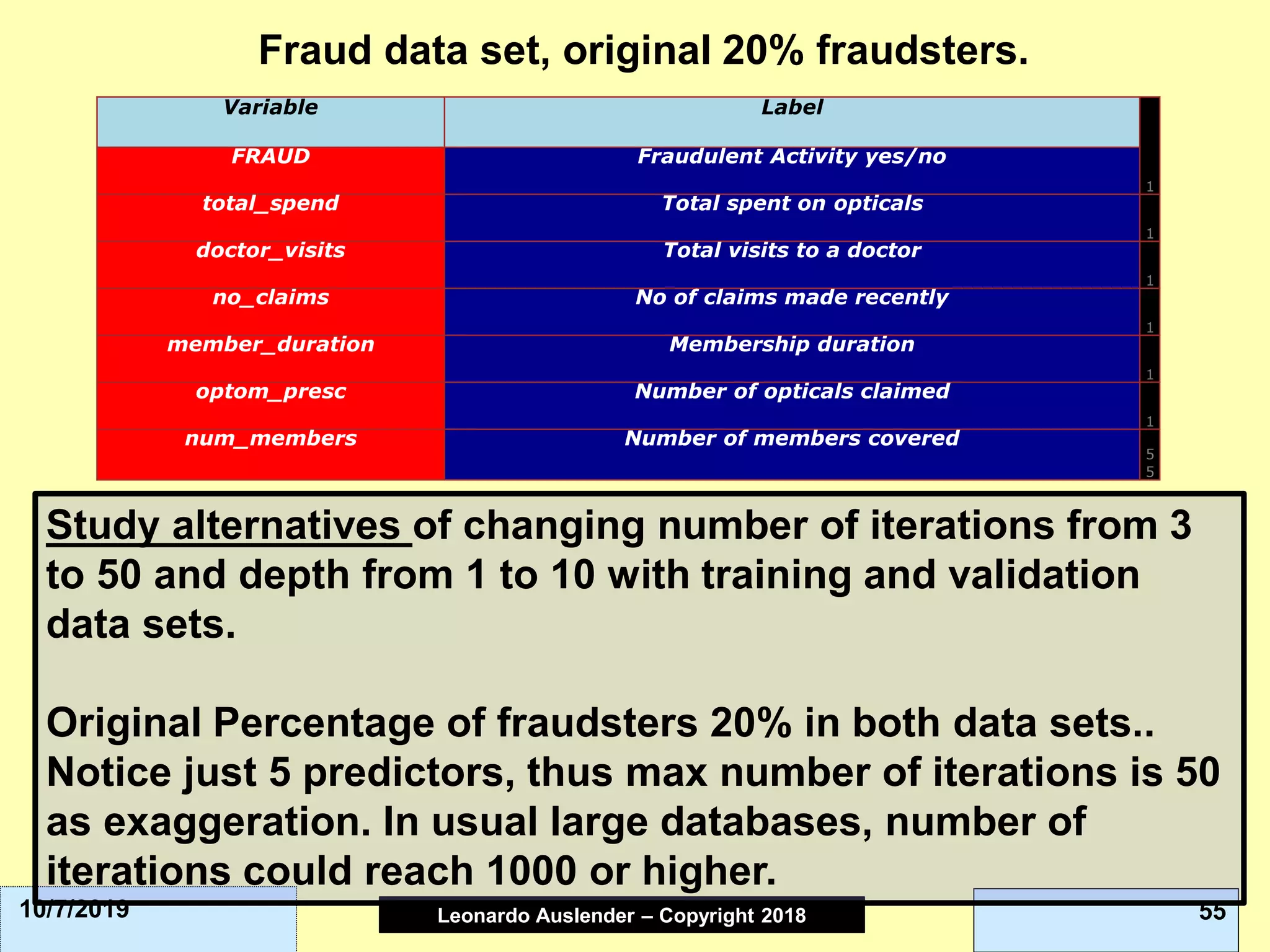

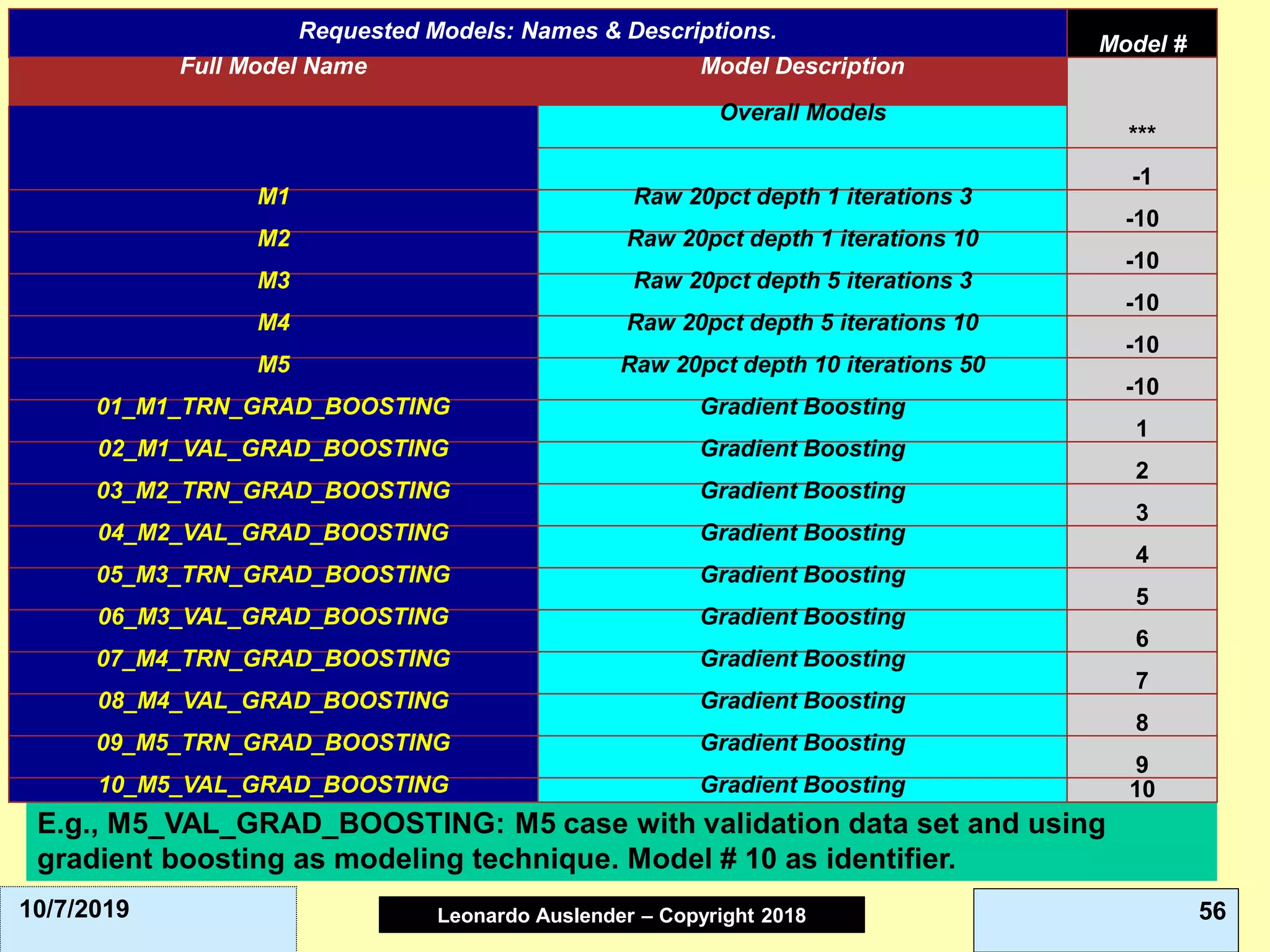

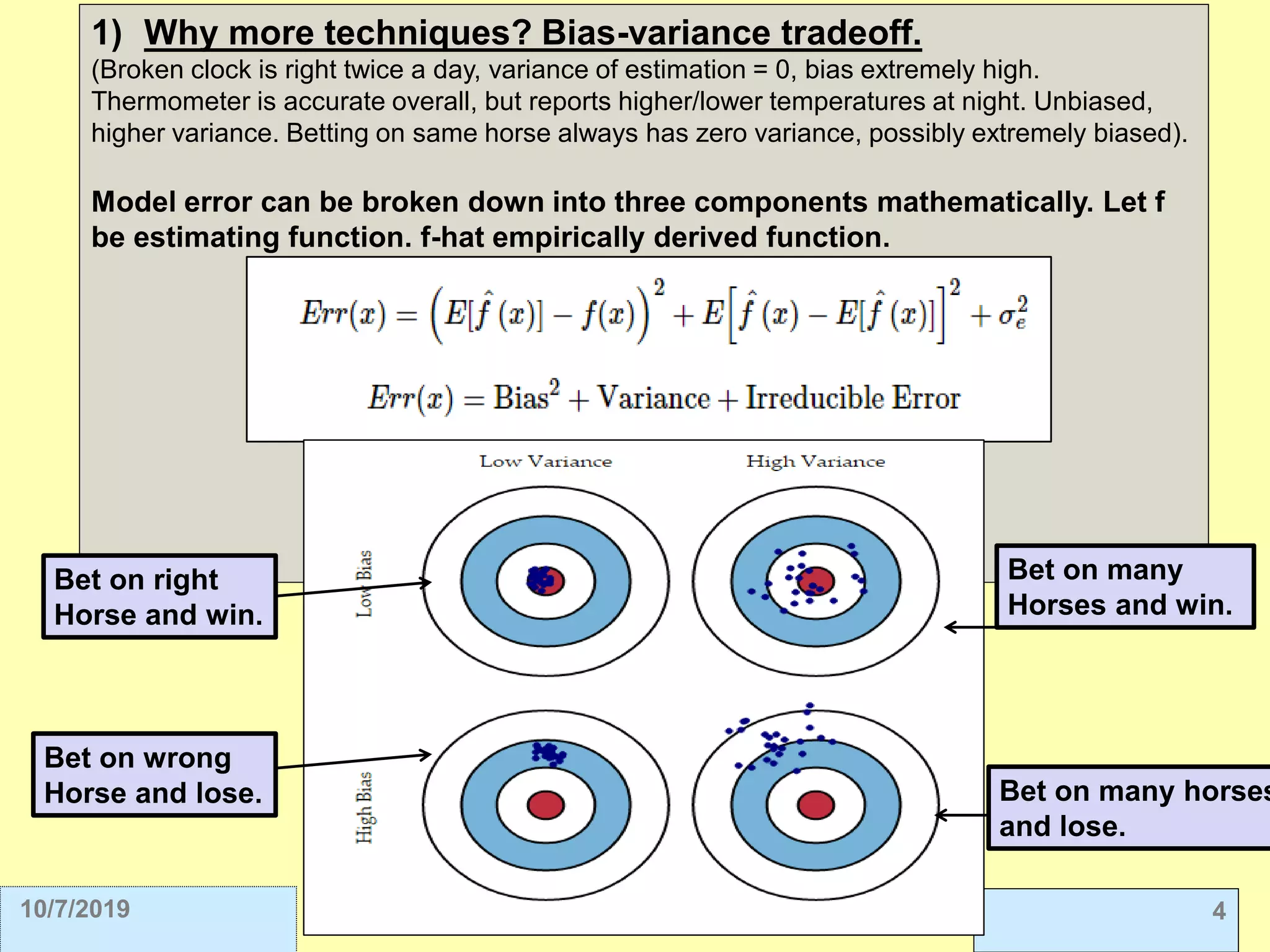

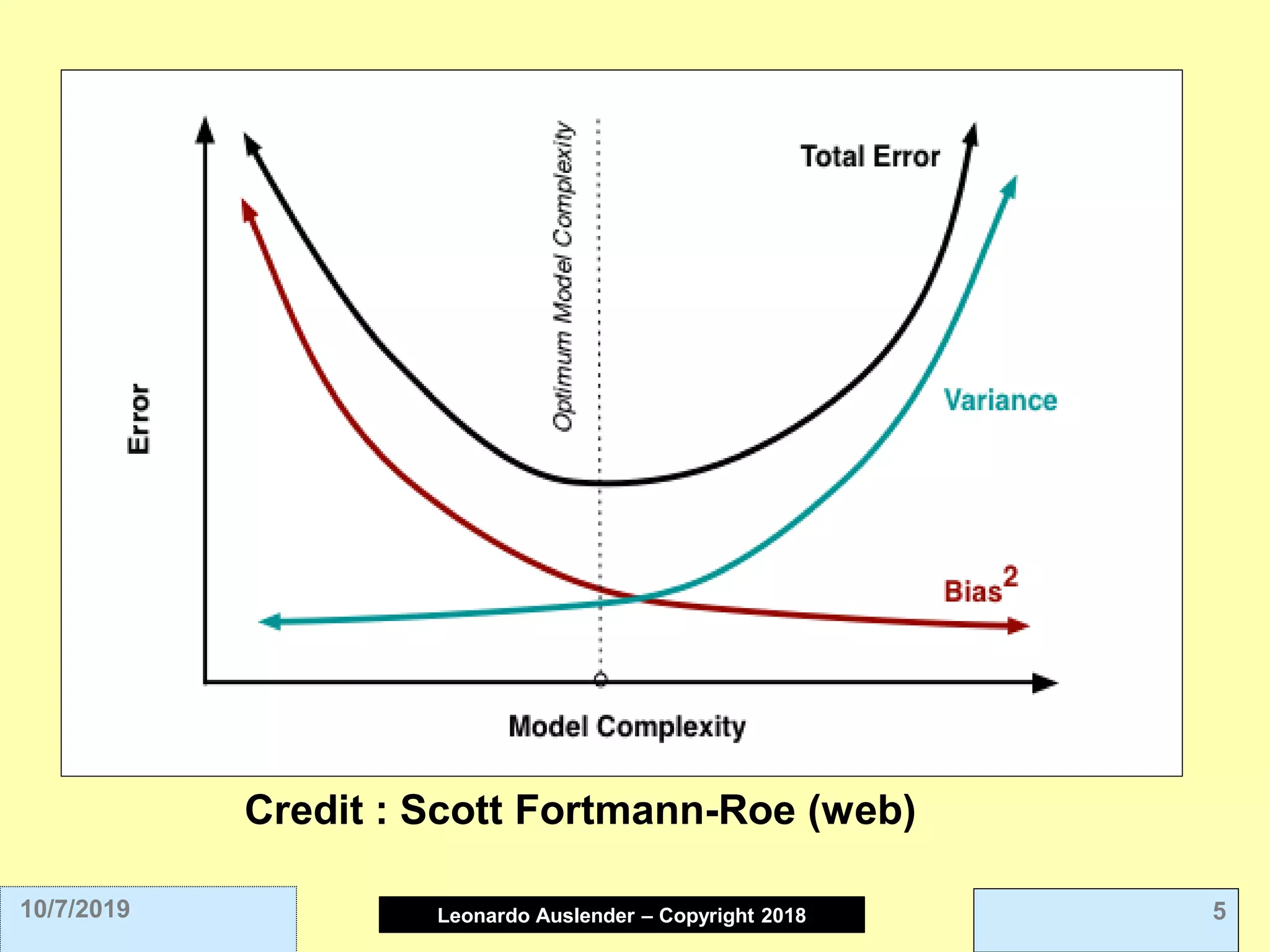

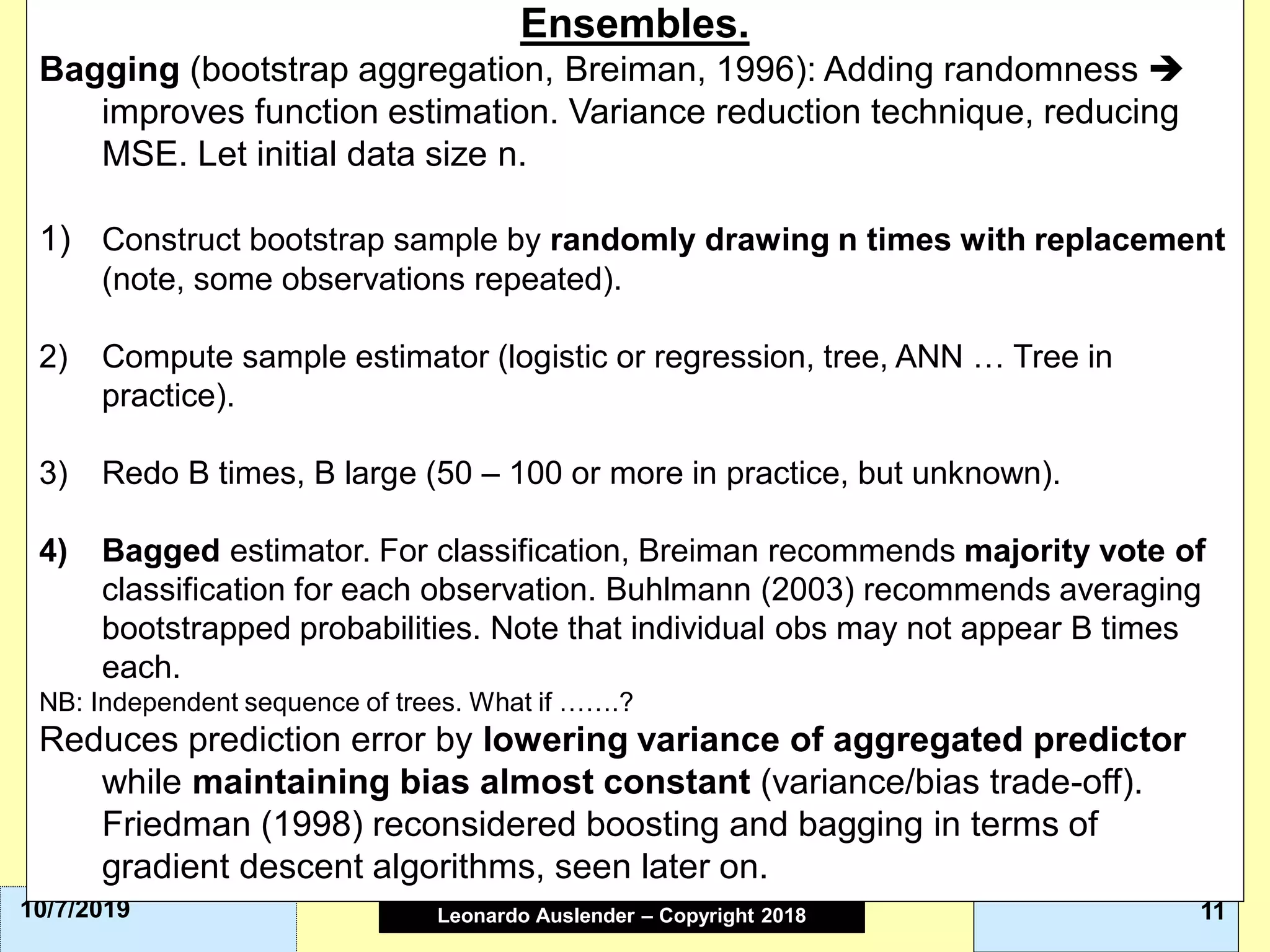

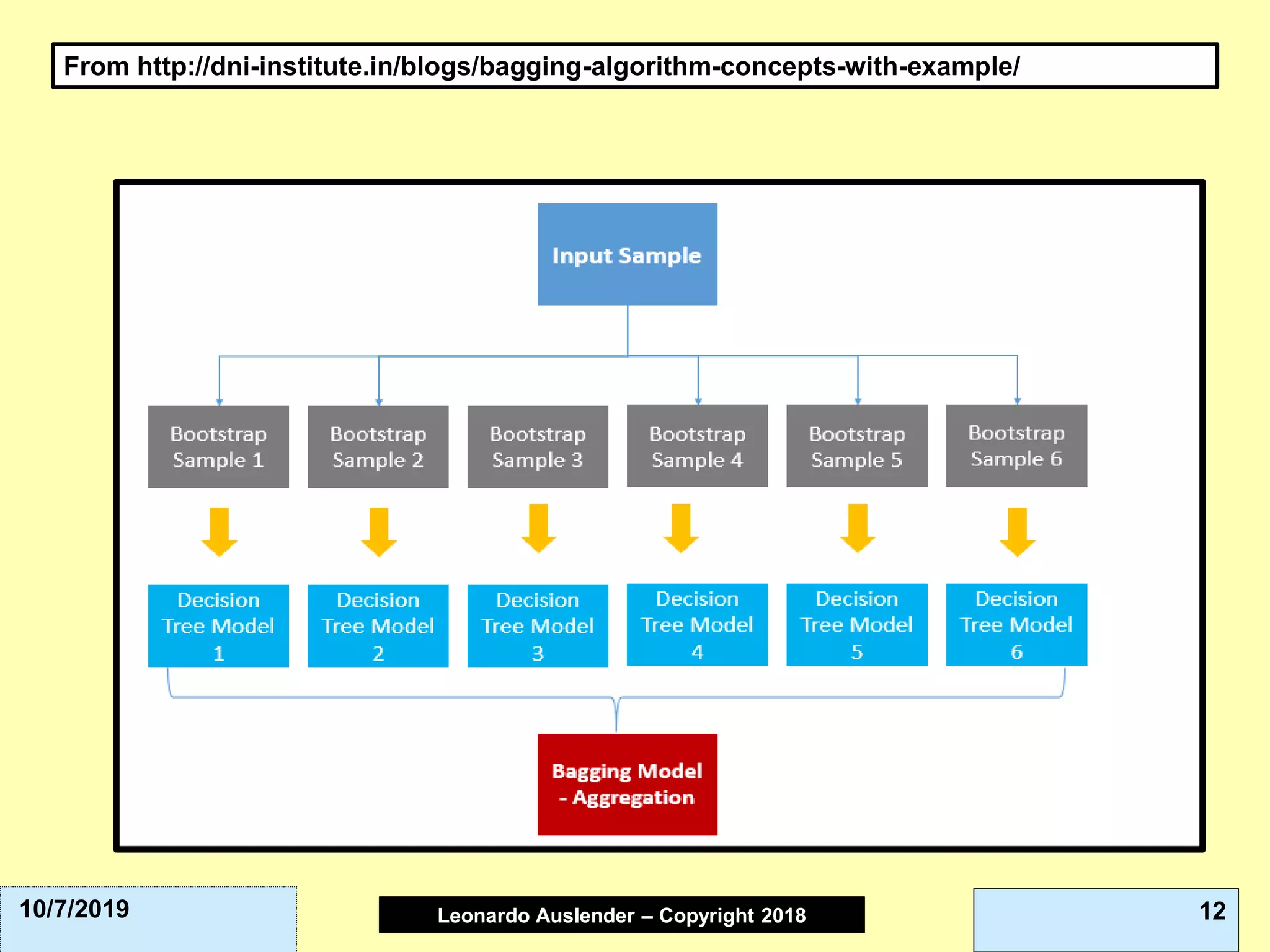

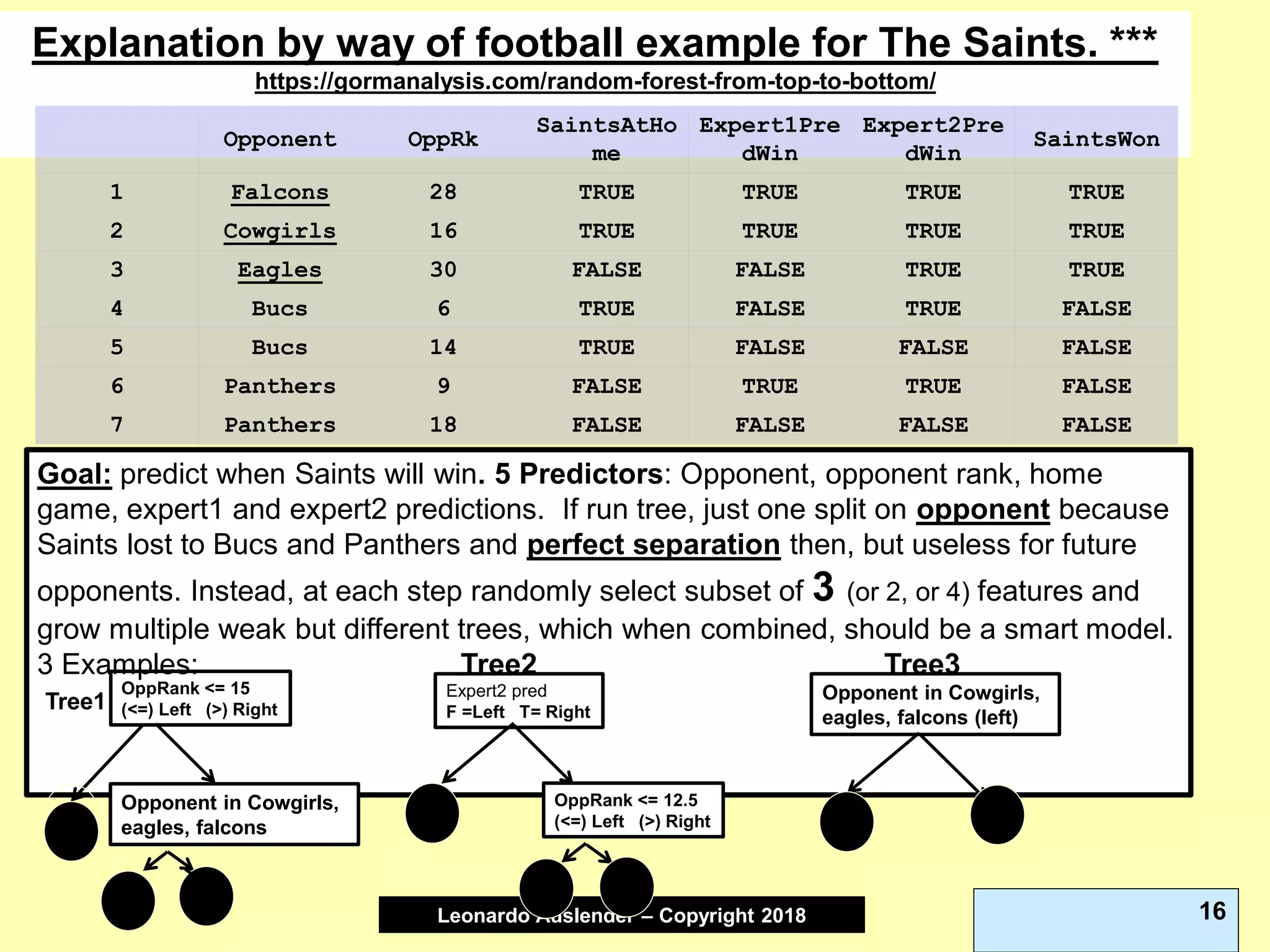

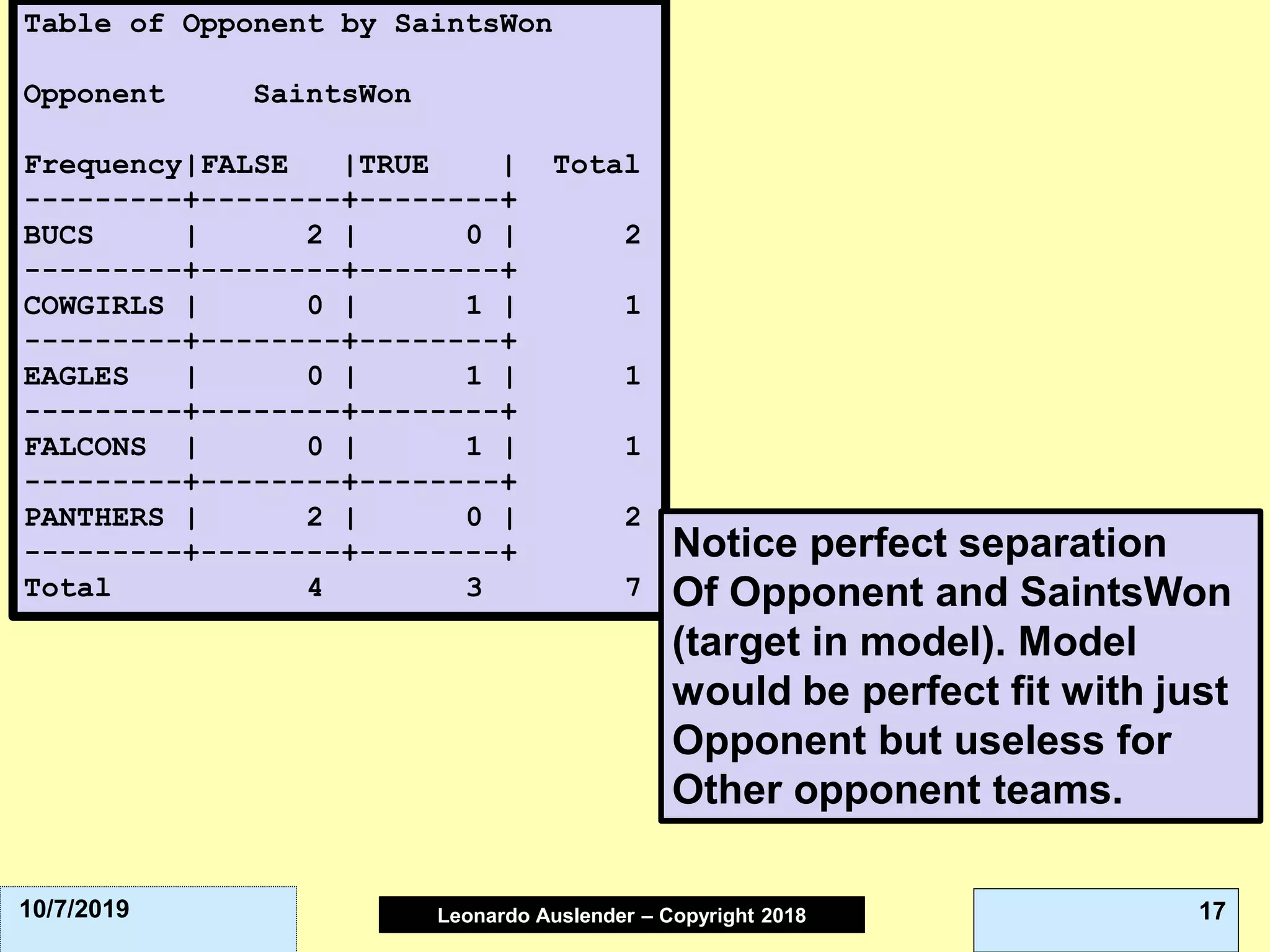

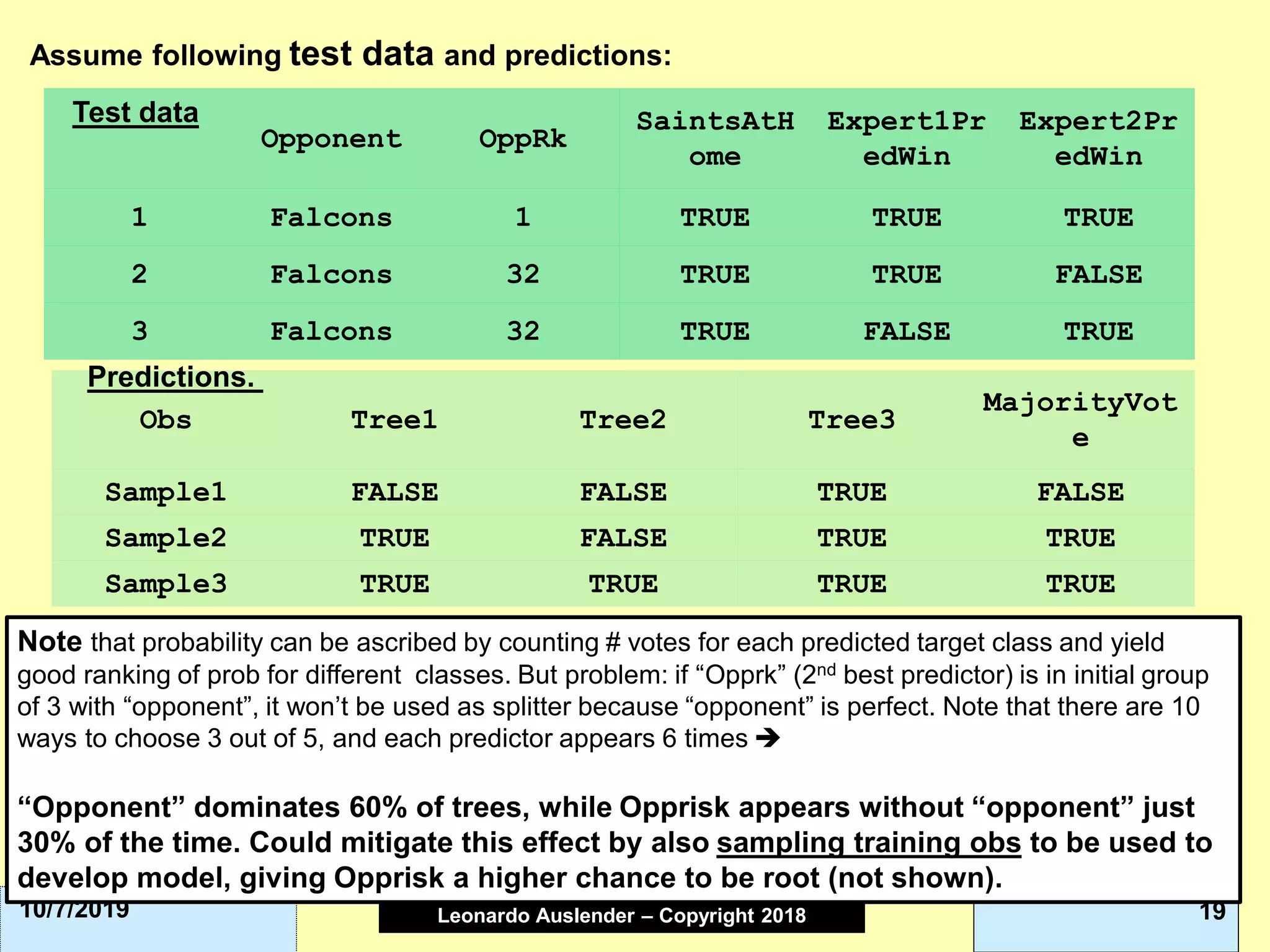

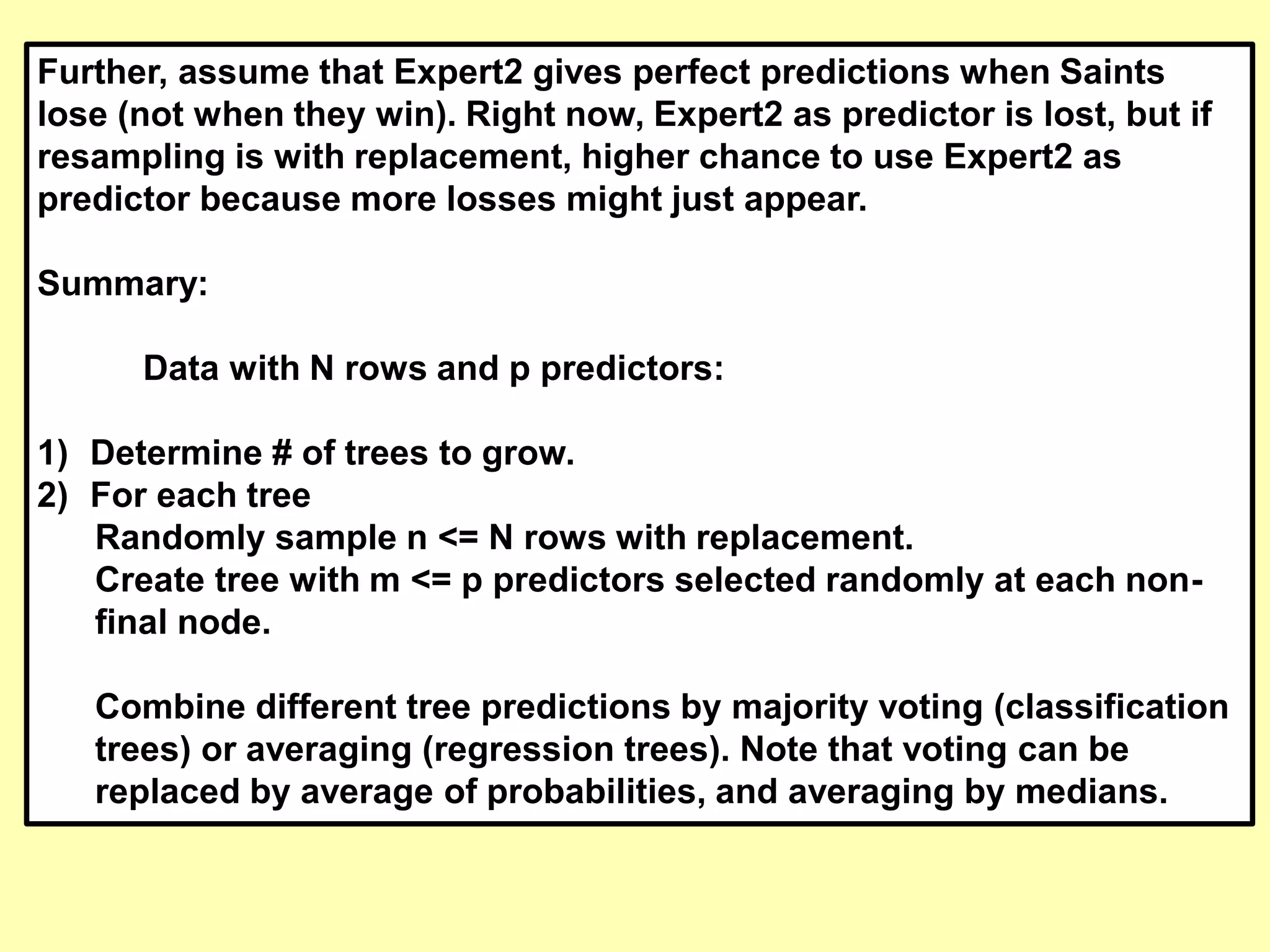

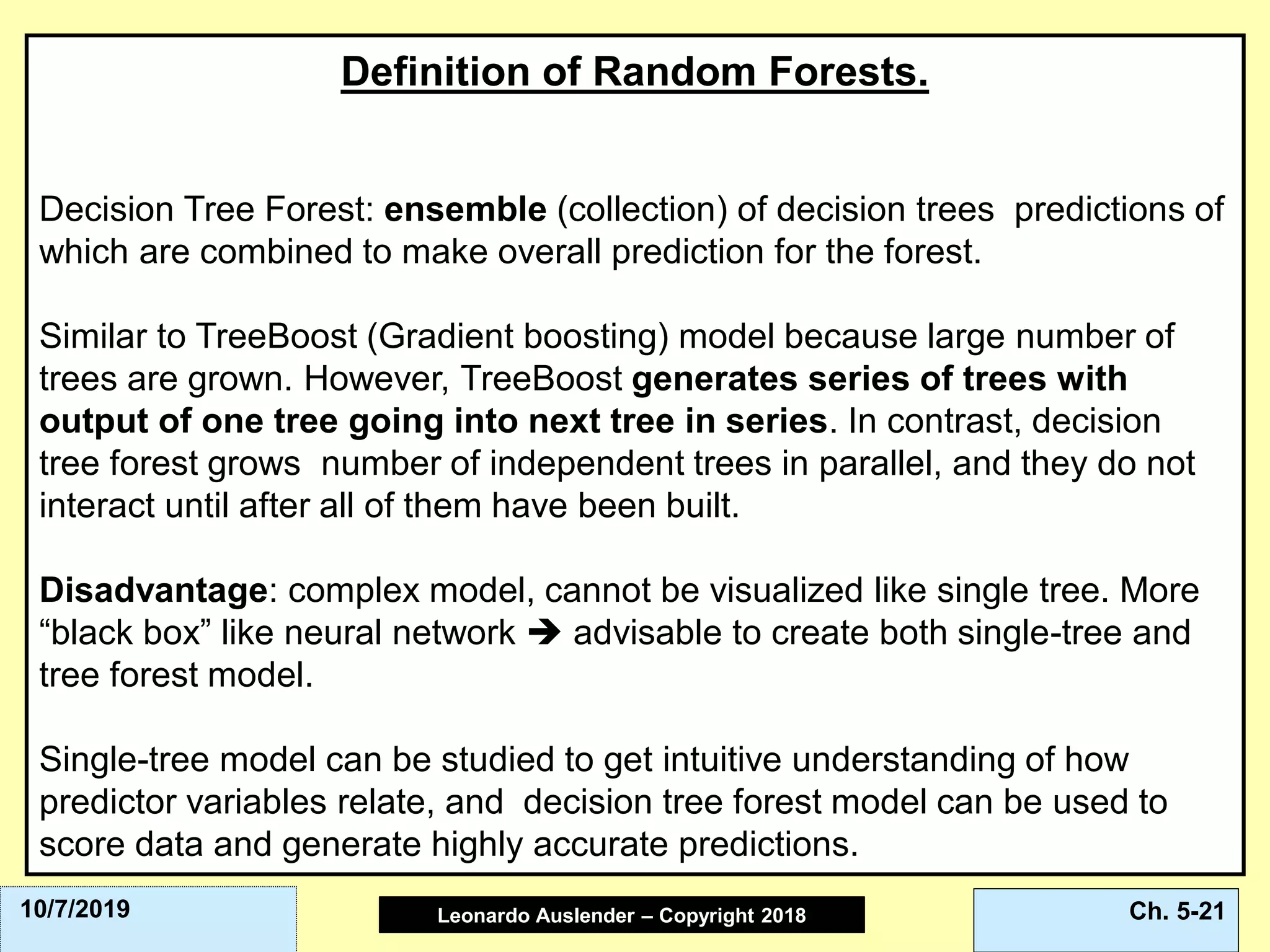

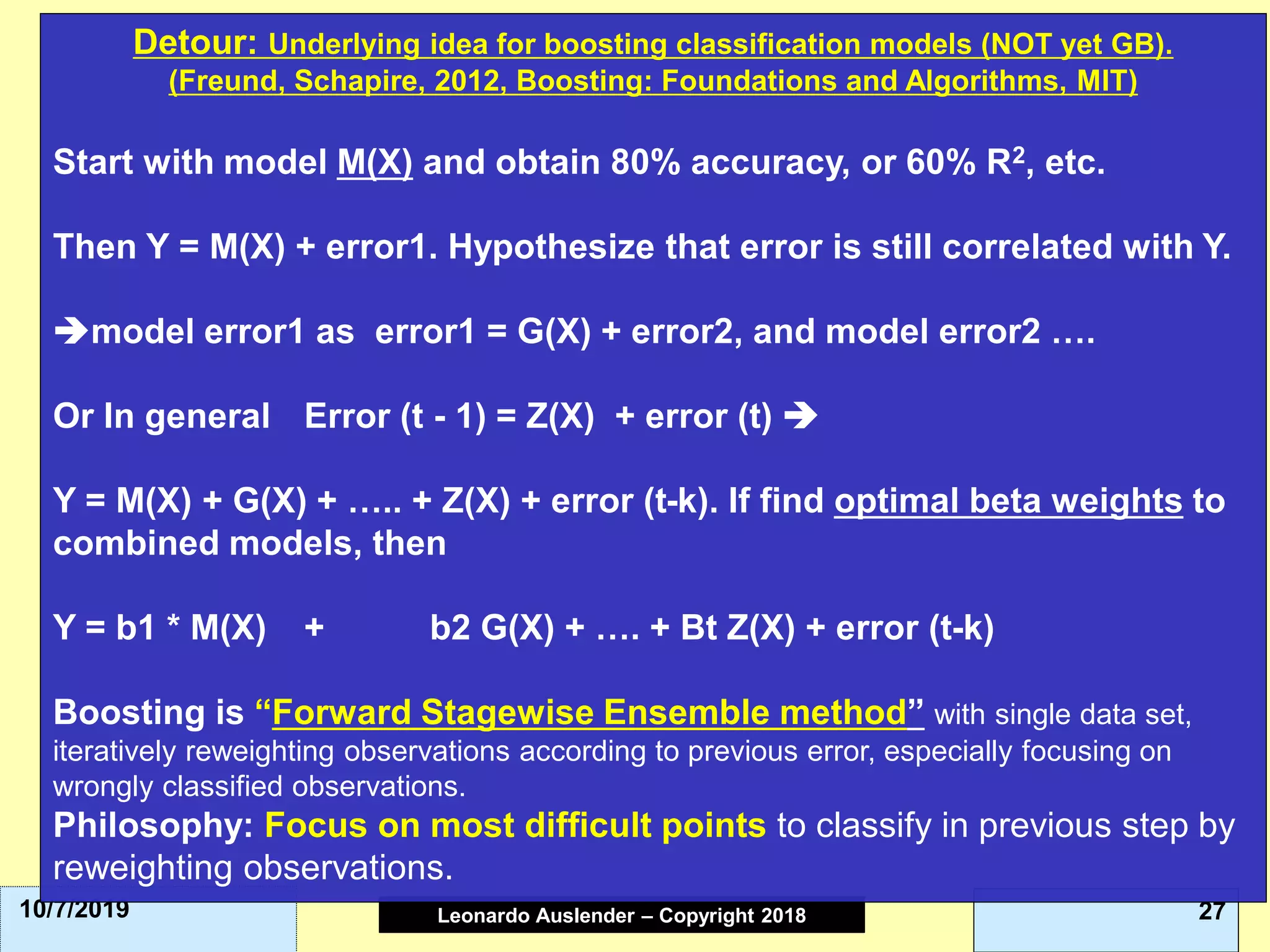

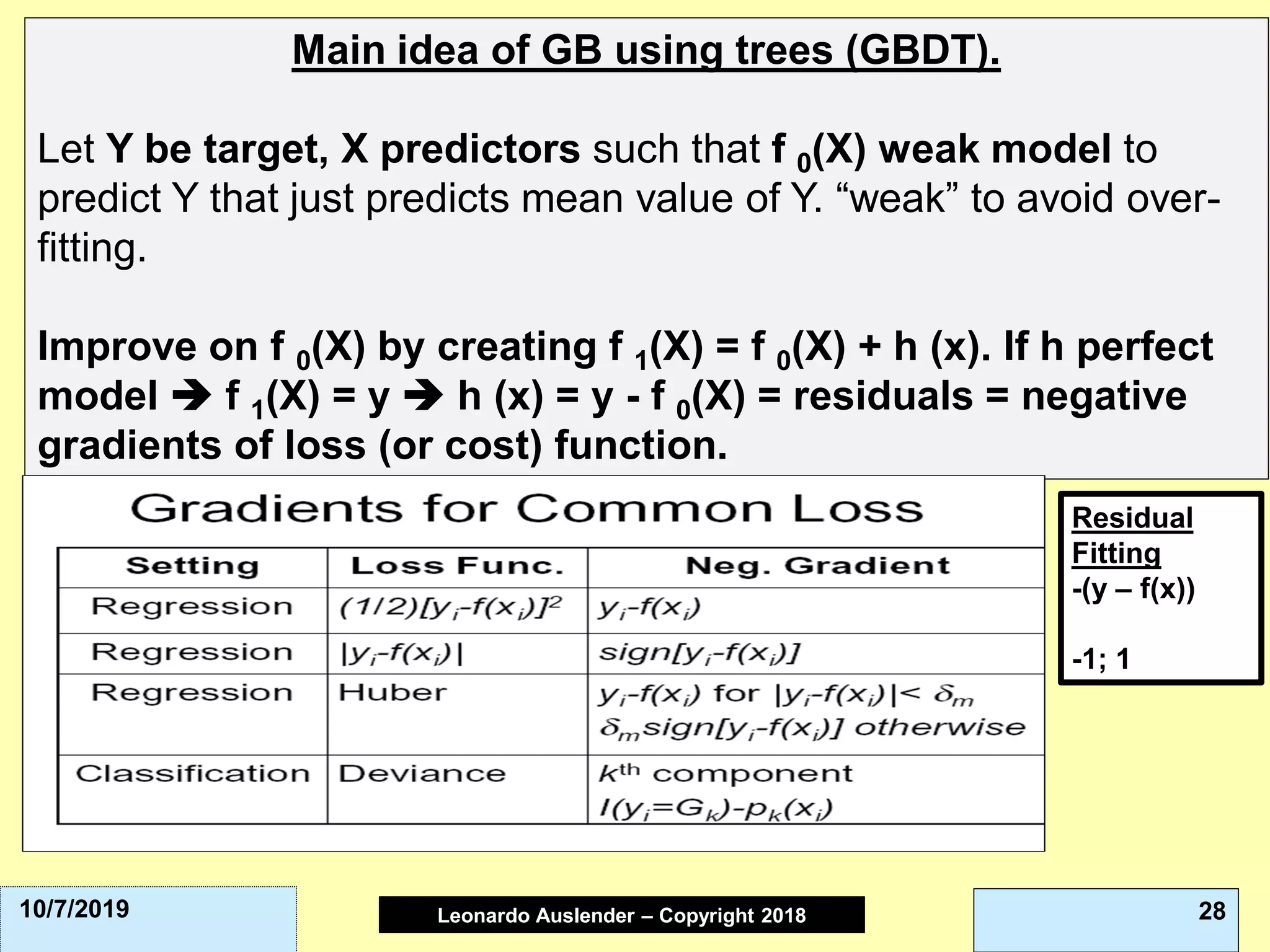

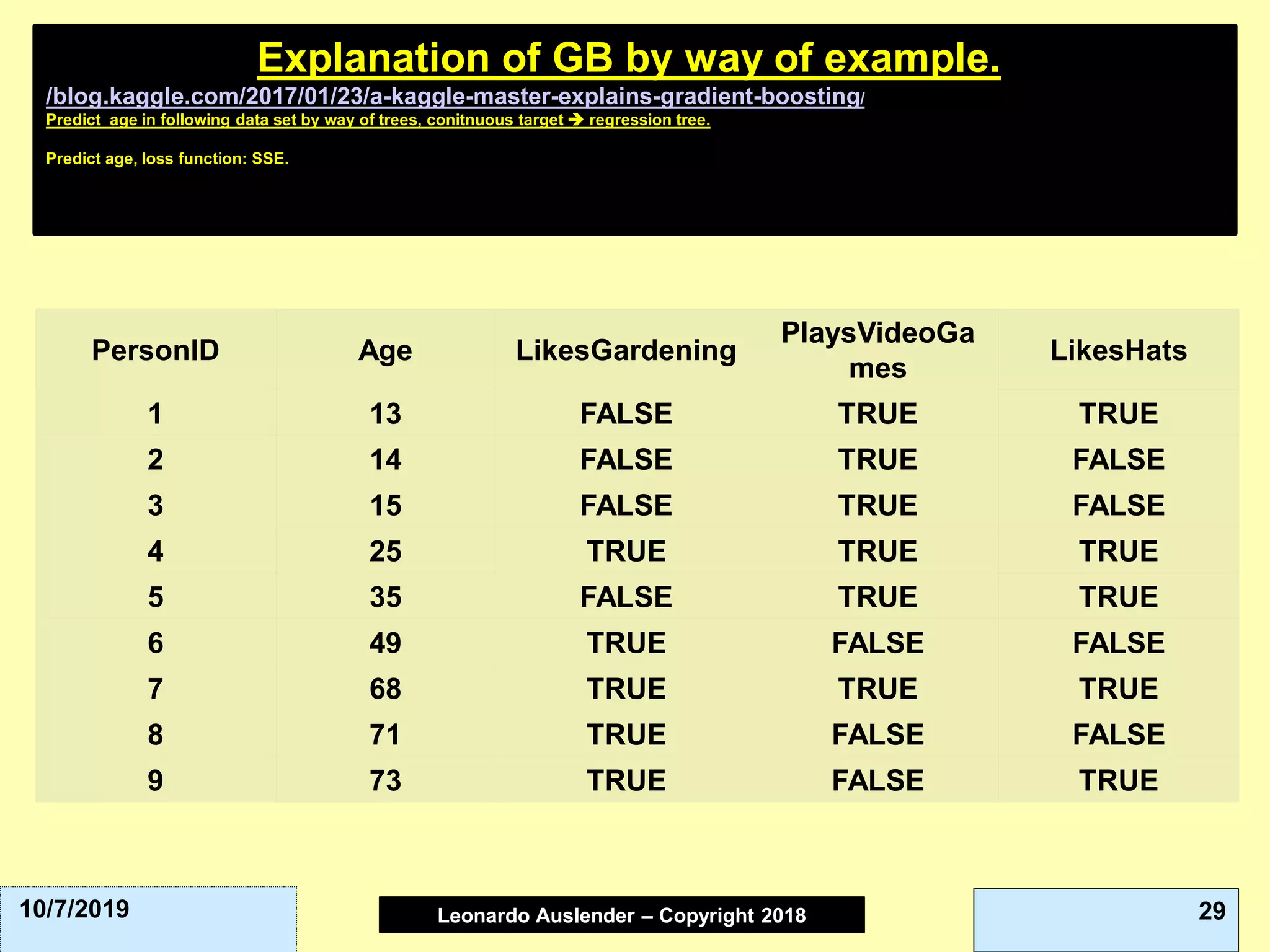

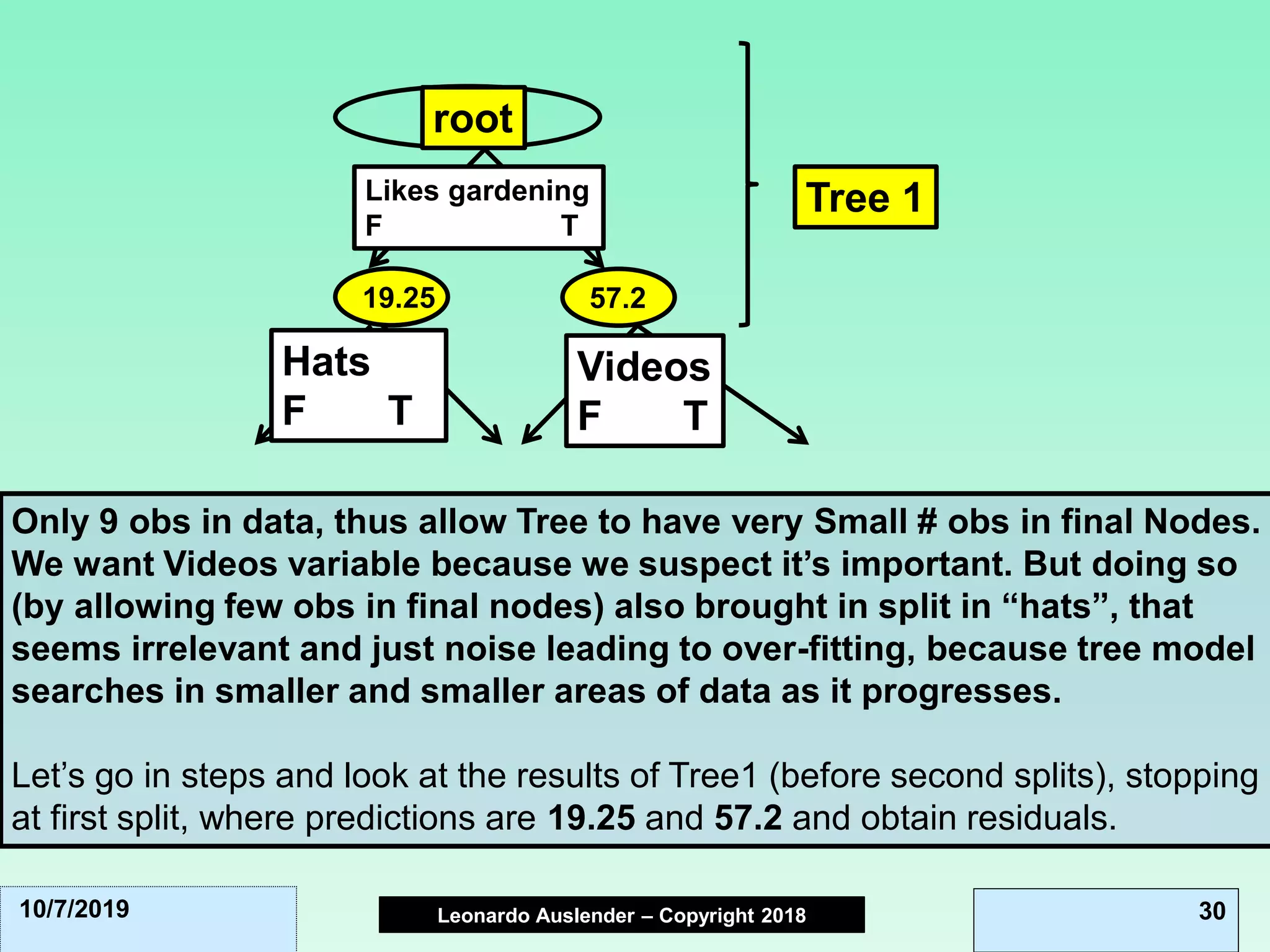

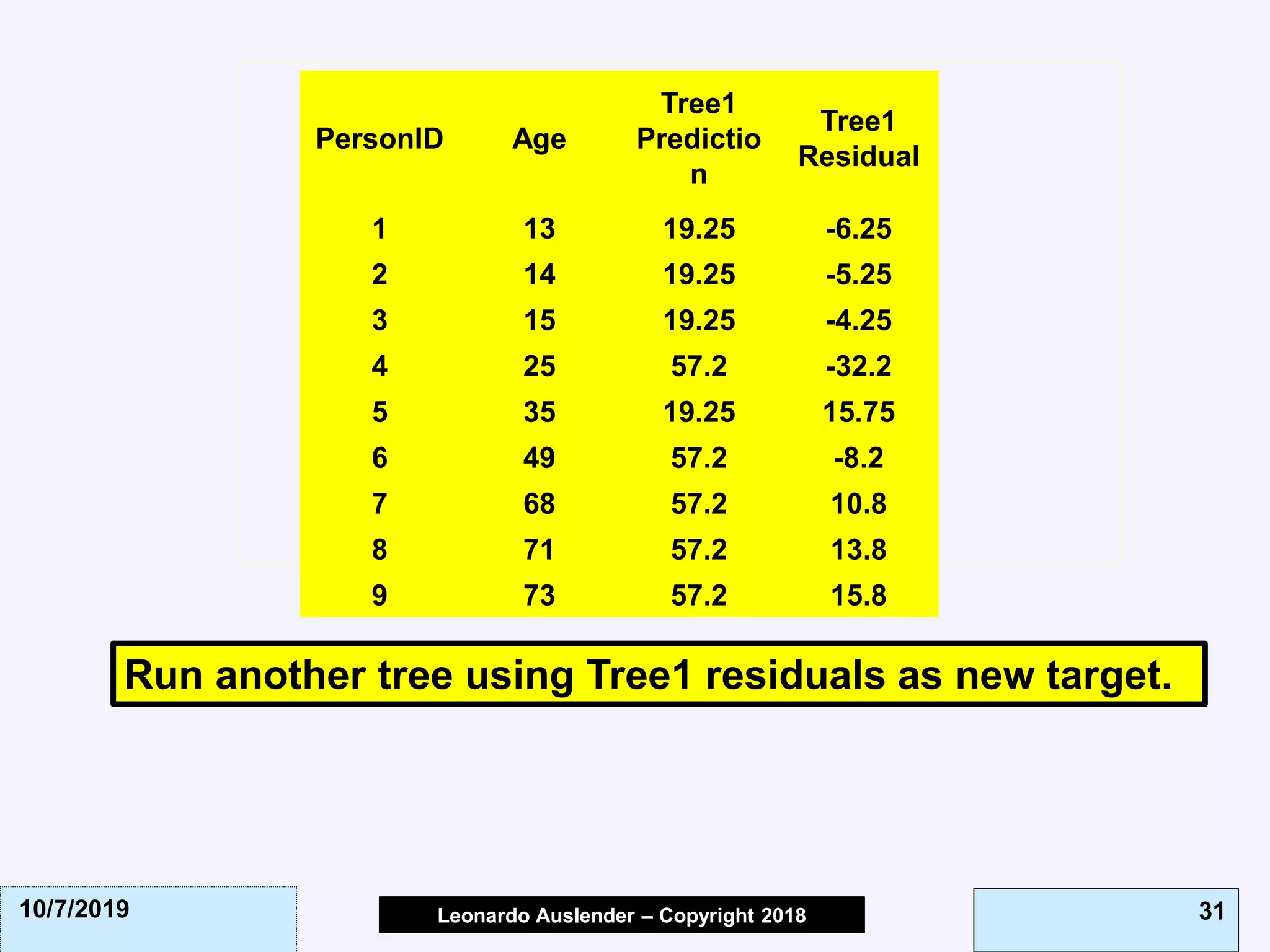

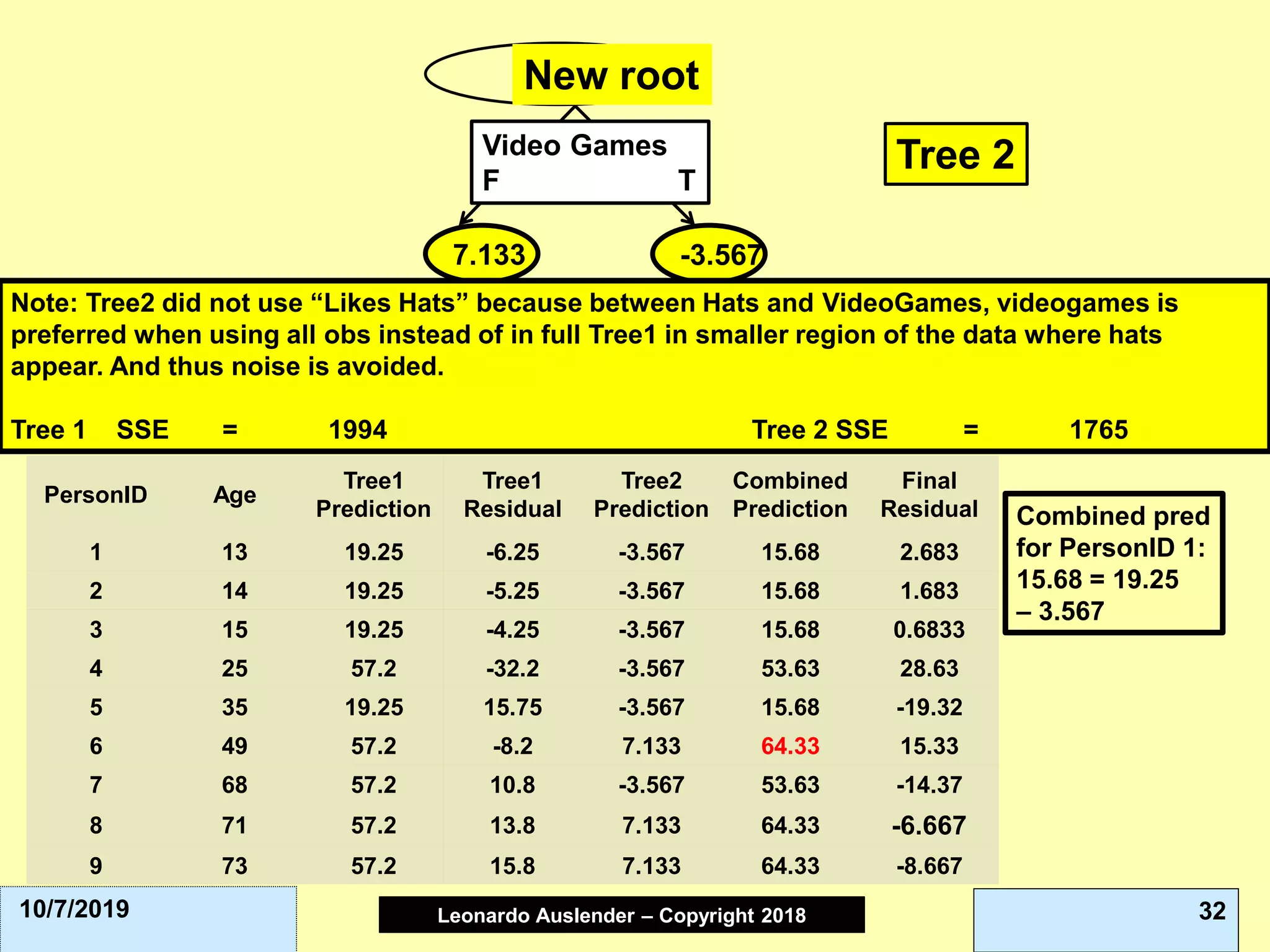

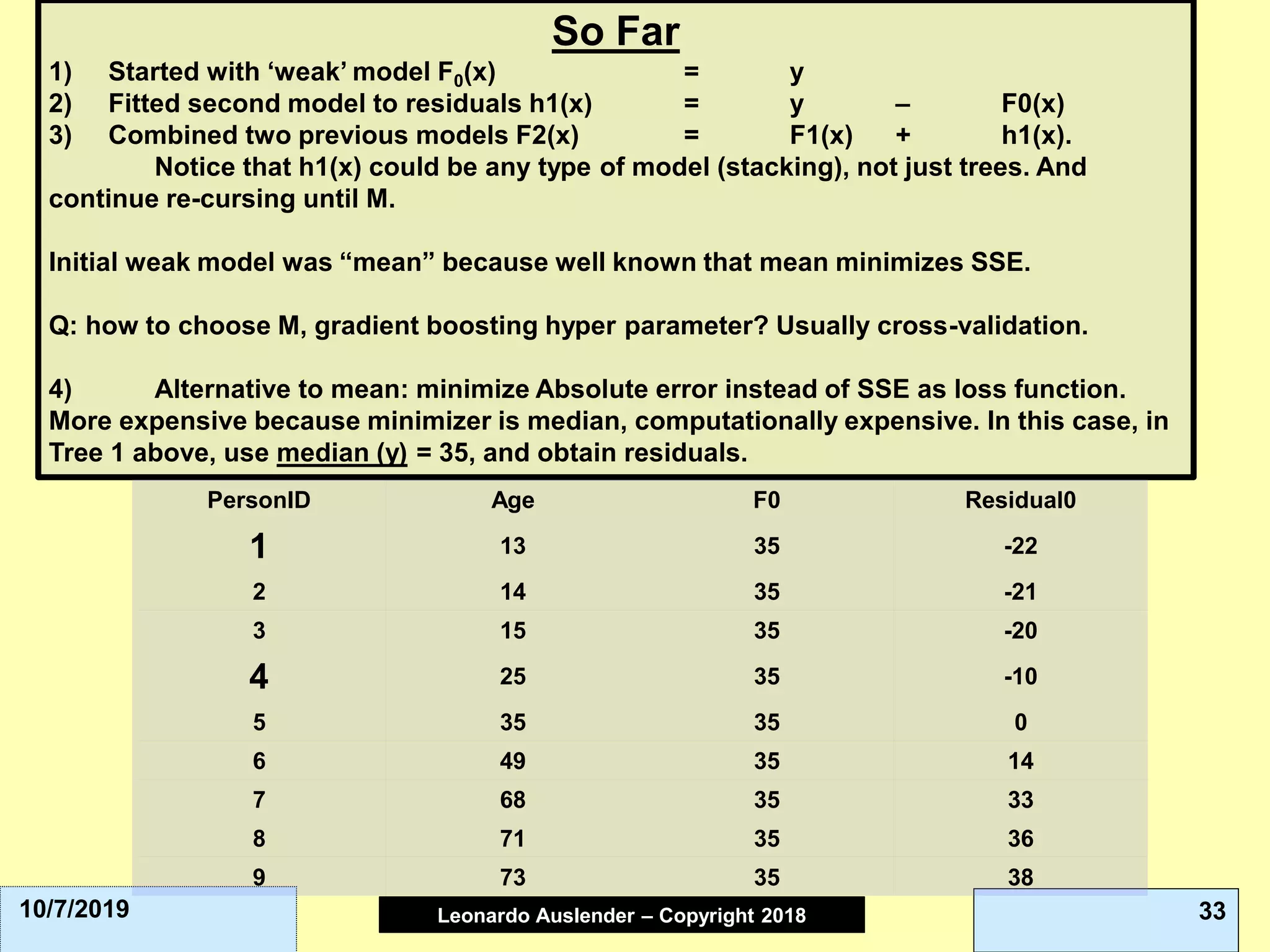

This document discusses ensemble methods and gradient boosting. It covers bagging, random forests, gradient boosting, and model interpretation using partial dependence plots. Bagging (bootstrap aggregating) draws multiple bootstrap samples from a dataset, that is, samples of the same size drawn with replacement. It builds a model on each sample and aggregates their predictions by averaging for regression or by majority vote for classification. Random forests grow many decision trees on bootstrap samples of the data and consider only a random subset of the features at each split. Gradient boosting adds models sequentially, and each new model is fitted to reduce the loss of the current ensemble, typically by fitting the negative gradient of the loss. The document provides examples and discusses how to evaluate and improve ensemble methods. It also cautions against accepting claims found online without verifying them.
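
The mechanics summarized above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the document's own code. It uses one numeric feature, squared-error loss, and depth-1 regression trees (stumps) as base learners. Every function name (`fit_stump`, `bagging`, `gradient_boost`, `partial_dependence`) is hypothetical. With squared-error loss the negative gradient is simply the residual, so each boosting round fits a stump to the current residuals. A random forest would additionally sample a random subset of features at each split, which a single-feature example cannot show.

```python
import random
from statistics import mean


def fit_stump(xs, ys):
    """Depth-1 regression tree: choose the threshold on x that minimizes the
    squared error, predicting the mean of y on each side of the split."""
    best = None
    for t in sorted(set(xs))[:-1]:  # the largest x would leave the right side empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # all x identical: fall back to a constant prediction
        c = mean(ys)
        return lambda x: c
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def bagging(xs, ys, n_models=25, seed=0):
    """Bagging: fit one stump per bootstrap sample and average their predictions."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # n rows drawn with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(m(x) for m in models)


def gradient_boost(xs, ys, n_rounds=100, learning_rate=0.1):
    """Gradient boosting with loss L = (y - F)^2 / 2. The negative gradient
    -dL/dF = y - F is the ordinary residual, so each round fits a stump
    to the residuals of the current ensemble and adds a shrunken copy of it."""
    f0 = mean(ys)  # the best constant model under squared error
    models = []
    preds = [f0] * len(xs)
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        h = fit_stump(xs, residuals)
        models.append(h)
        preds = [p + learning_rate * h(x) for p, x in zip(preds, xs)]
    return lambda x: f0 + learning_rate * sum(h(x) for h in models)


def partial_dependence(model, rows, feature, grid):
    """Partial dependence on column `feature`: for each grid value v, set that
    column to v in every row (rows are lists) and average the model's predictions."""
    out = []
    for v in grid:
        modified = [row[:feature] + [v] + row[feature + 1:] for row in rows]
        out.append(mean(model(r) for r in modified))
    return out


if __name__ == "__main__":
    xs = [i / 10 for i in range(50)]
    ys = [x * x for x in xs]  # toy nonlinear target with one feature
    for name, model in [("bagging", bagging(xs, ys)), ("boosting", gradient_boost(xs, ys))]:
        mse = mean((model(x) - y) ** 2 for x, y in zip(xs, ys))
        print(f"{name}: training MSE = {mse:.4f}")

    def toy(row):  # toy additive two-feature model
        return 2 * row[0] + row[1]

    print(partial_dependence(toy, [[0, 1], [1, 3]], 0, [0, 1]))
```

On the toy quadratic target, both ensembles reach a lower training error than predicting the mean. The `learning_rate` shrinkage (0.1 here, an arbitrary choice) means more rounds are needed, which usually generalizes better than large steps. For the toy additive model, `partial_dependence` returns 2 at v = 0 and 4 at v = 1: the average prediction over the two rows with feature 0 held fixed.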

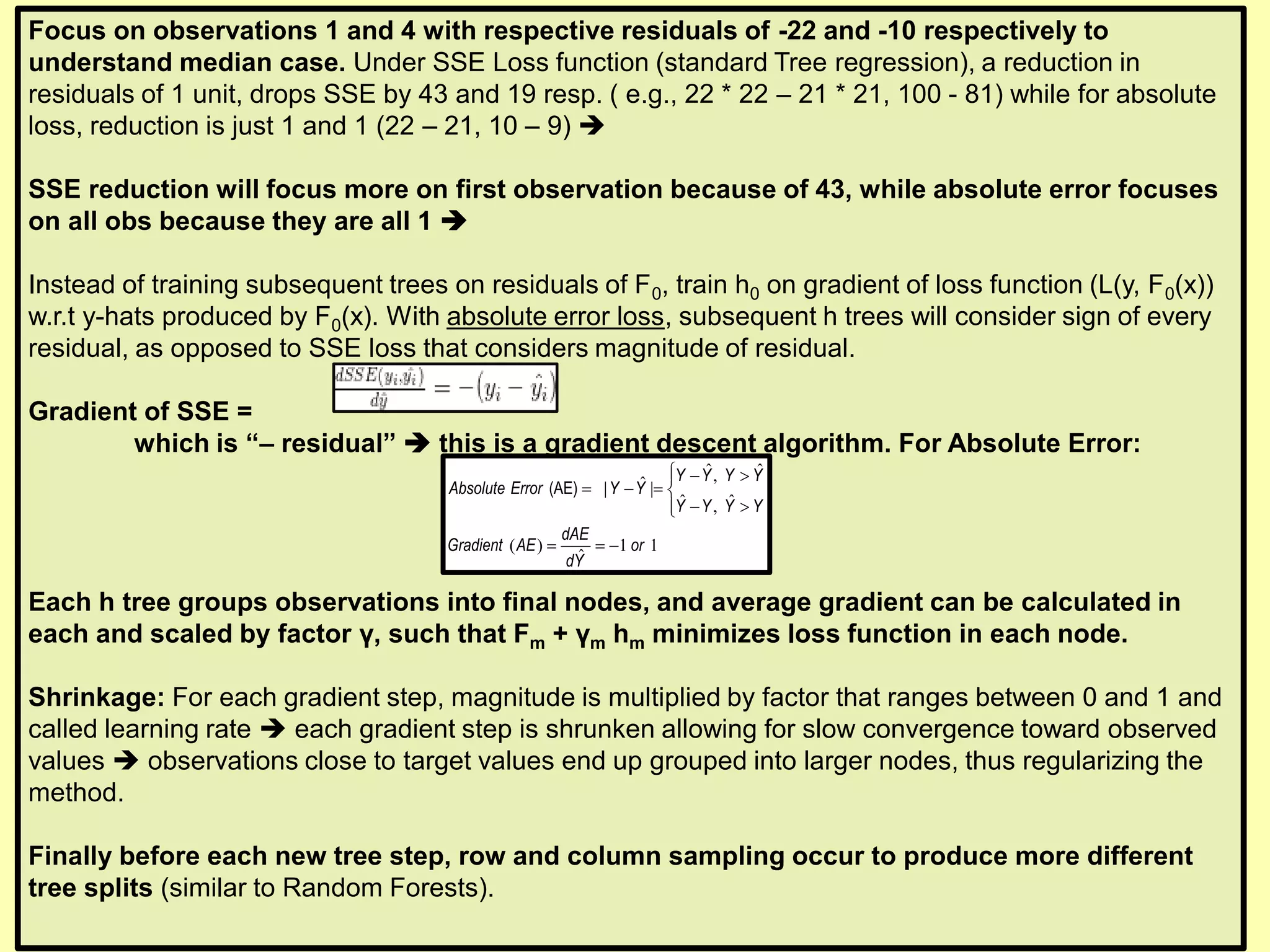

![Leonardo Auslender Copyright 2004Leonardo Auslender – Copyright 2018 4510/7/2019

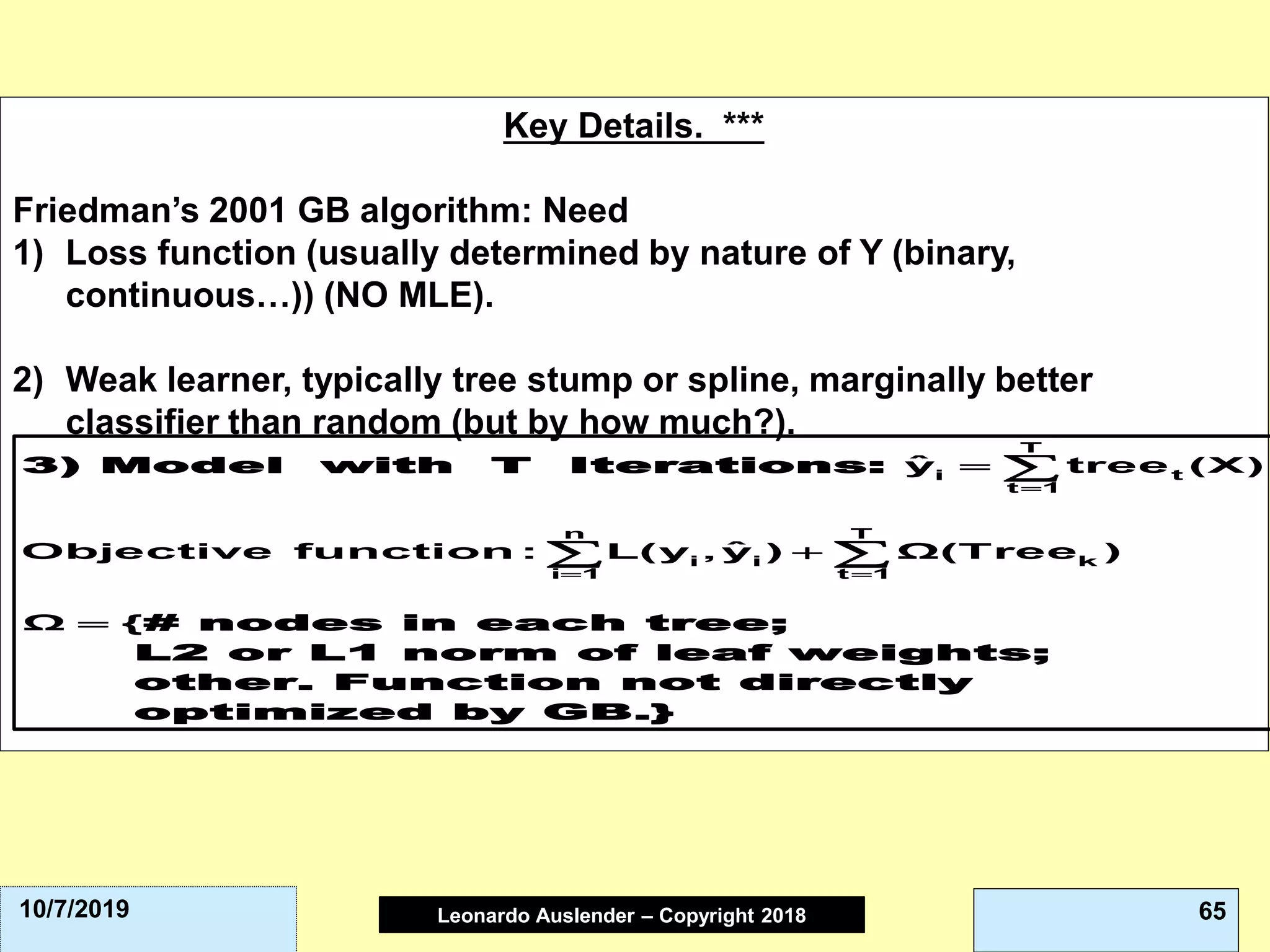

L2-error penalizes symmetrically away from 0, Huber penalizes less than OLS away

from [-1, 1], Bernoulli and Adaboost are very similar. Note that Y ε [-1, 1] in 0-1 case

here.](https://image.slidesharecdn.com/42ensemblemodelsandgradboostpart1-191007131313/75/4-2-ensemble-models-and-grad-boost-part-1-2019-10-07-45-2048.jpg)
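
The losses compared on slide 45 can be written as functions of the residual r = y − f (regression) or of the margin m = y·f with y ∈ {-1, 1} (classification). The sketch below assumes one common parameterization, since the slide's exact scaling is not given: ½r² for L2, Huber with threshold δ = 1, binomial deviance log(1 + e^(−2m)) for Bernoulli, and e^(−m) for AdaBoost. The function names are hypothetical.

```python
import math


def l2_loss(r):
    """Squared error scaled by 1/2: symmetric, grows quadratically in r."""
    return 0.5 * r * r


def huber_loss(r, delta=1.0):
    """Quadratic for |r| <= delta and linear beyond it, so large residuals
    are penalized less than under squared error."""
    a = abs(r)
    if a <= delta:
        return 0.5 * r * r
    return delta * (a - 0.5 * delta)


def bernoulli_deviance(margin):
    """Binomial deviance log(1 + exp(-2m)) for margin m = y*f with y in {-1, 1}.
    Uses log(1 + e^z) = z + log(1 + e^-z) for z > 0 to avoid overflow."""
    z = -2.0 * margin
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


def adaboost_loss(margin):
    """Exponential loss exp(-m), the loss minimized by AdaBoost."""
    return math.exp(-margin)


if __name__ == "__main__":
    for r in (0.5, 1.0, 3.0):
        print(f"r={r}: L2={l2_loss(r):.3f}  Huber={huber_loss(r):.3f}")
    for m in (-1.0, 0.0, 1.0, 2.0):
        print(f"m={m}: Bernoulli={bernoulli_deviance(m):.3f}  AdaBoost={adaboost_loss(m):.3f}")
```

Inside [-1, 1] the L2 and Huber values coincide; at r = 3 Huber gives 2.5 against 4.5 for L2. Both classification losses shrink toward 0 as the margin grows, while for negative margins the exponential loss grows exponentially and the deviance only roughly linearly.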