This document presents four algorithms for the maximum subsequence sum problem, followed by binary search:

Algorithm 1 exhaustively tries all possibilities with O(N^3) running time. Algorithm 2 improves on Algorithm 1 by removing a nested loop, resulting in O(N^2) time. Algorithm 3 uses divide-and-conquer: it splits the problem in half at each step and recurses, giving O(N log N) time. Algorithm 4 makes a single pass over the input in O(N) time, and binary search runs in O(log N) time.

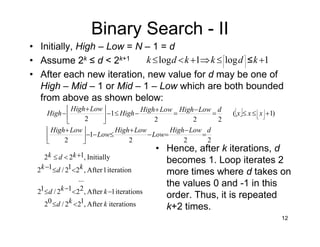

![Solutions for the Maximum Subsequence Sum Problem: Algorithm 1

• Algorithm 1 exhaustively tries all possibilities: for every combination of starting and ending points (i and j respectively), the partial sum (ThisSum) is calculated and compared with the maximum sum (MaxSum) computed so far. The running time is O(N^3) and is entirely due to lines 5 and 6.

• A more precise analysis follows the code.

int MaxSubSum1( const int A[ ], int N ) {
    int ThisSum, MaxSum, i, j, k;

/* 1*/    MaxSum = 0;
/* 2*/    for( i = 0; i < N; i++ )
/* 3*/        for( j = i; j < N; j++ ) {
/* 4*/            ThisSum = 0;
/* 5*/            for( k = i; k <= j; k++ )
/* 6*/                ThisSum += A[ k ];
/* 7*/            if( ThisSum > MaxSum )
/* 8*/                MaxSum = ThisSum;
              }
/* 9*/    return MaxSum;
}

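The number of times line 6 executes is

$$\sum_{i=0}^{N-1} \sum_{j=i}^{N-1} \sum_{k=i}^{j} 1$$

$$\sum_{k=i}^{j} 1 = j - i + 1$$

$$\sum_{j=i}^{N-1} (j - i + 1) = \frac{(N - i + 1)(N - i)}{2}$$

$$\sum_{i=0}^{N-1} \frac{(N - i + 1)(N - i)}{2} = \sum_{i=1}^{N} \frac{(N - i + 1)(N - i + 2)}{2}$$

$$= \frac{1}{2} \sum_{i=1}^{N} i^2 - \left(N + \frac{3}{2}\right) \sum_{i=1}^{N} i + \frac{1}{2}(N^2 + 3N + 2) \sum_{i=1}^{N} 1$$

$$= \frac{1}{2} \cdot \frac{N(N+1)(2N+1)}{6} - \left(N + \frac{3}{2}\right) \frac{N(N+1)}{2} + \frac{N^2 + 3N + 2}{2} N$$

$$= \frac{N^3 + 3N^2 + 2N}{6}$$](https://image.slidesharecdn.com/3chapter2algorithmanalysispart2-181027235948/85/3-chapter2-algorithm_analysispart2-2-320.jpg)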
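
The cubic cost of Algorithm 1 can be checked empirically. The sketch below counts how often the innermost statement (line 6) would run for a given N and compares the count with the closed form (N^3 + 3N^2 + 2N)/6. The helper names `CountInnerSteps` and `ClosedForm` are hypothetical and do not appear in the slides.

```c
/* Hypothetical helper (not from the slides): counts how many times
   line 6 of MaxSubSum1 (ThisSum += A[k]) runs for an input of size N. */
long CountInnerSteps( int N ) {
    long Count = 0;
    int i, j, k;

    for( i = 0; i < N; i++ )
        for( j = i; j < N; j++ )
            for( k = i; k <= j; k++ )
                Count++;
    return Count;
}

/* Closed form of the triple sum: (N^3 + 3N^2 + 2N) / 6. */
long ClosedForm( int N ) {
    long n = N;
    return ( n * n * n + 3 * n * n + 2 * n ) / 6;
}
```

For N = 10 both functions return 220, so the loop count and the formula agree on that input.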

![Solutions for the Maximum Subsequence Sum Problem: Algorithm 2

• We can improve upon Algorithm 1 and avoid the cubic running time by removing a for loop. This is not always possible, but in this case Algorithm 1 performs a great many unnecessary computations.

• Notice that $\sum_{k=i}^{j} A_k = A_j + \sum_{k=i}^{j-1} A_k$, so the computation at lines 5 and 6 in Algorithm 1 is unduly expensive. Algorithm 2 is clearly O(N^2); the analysis is even simpler than before.

int MaxSubSum2( const int A[ ], int N ) {
    int ThisSum, MaxSum, i, j;

    MaxSum = 0;
    for( i = 0; i < N; i++ ) {
        ThisSum = 0;                  /* running sum of A[i..j] */
        for( j = i; j < N; j++ ) {
            ThisSum += A[ j ];        /* extend the previous sum by one element */
            if( ThisSum > MaxSum )
                MaxSum = ThisSum;
        }
    }
    return MaxSum;
}](https://image.slidesharecdn.com/3chapter2algorithmanalysispart2-181027235948/85/3-chapter2-algorithm_analysispart2-3-320.jpg)
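
The saving in Algorithm 2 comes from reusing the previous partial sum instead of recomputing it. The following is a minimal sketch with two hypothetical helpers, `NaiveRangeSum` and `RunningSumMatchesNaive` (neither is in the slides). It checks that extending the running sum by A[j] gives the same value as re-adding A[i..j] from scratch.

```c
/* Hypothetical helper: recomputes the sum of A[i..j] from scratch,
   as lines 5 and 6 of Algorithm 1 do. */
static int NaiveRangeSum( const int A[ ], int i, int j ) {
    int k, Sum = 0;

    for( k = i; k <= j; k++ )
        Sum += A[ k ];
    return Sum;
}

/* Returns 1 if, for every i <= j, the running sum used by Algorithm 2
   equals the from-scratch sum used by Algorithm 1; returns 0 otherwise. */
int RunningSumMatchesNaive( const int A[ ], int N ) {
    int i, j, ThisSum;

    for( i = 0; i < N; i++ ) {
        ThisSum = 0;
        for( j = i; j < N; j++ ) {
            ThisSum += A[ j ];   /* sum(A[i..j]) = A[j] + sum(A[i..j-1]) */
            if( ThisSum != NaiveRangeSum( A, i, j ) )
                return 0;
        }
    }
    return 1;
}
```

The check itself is cubic because of the naive recomputation; only the running-sum side reflects the work Algorithm 2 actually does.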

![Solutions for the Maximum Subsequence Sum Problem: Algorithm 3 – Implementation I

/* Implementation */
static int MaxSubSum( const int A[ ], int Left, int Right ) {
    int MaxLeftSum, MaxRightSum;
    int MaxLeftBorderSum, MaxRightBorderSum;
    int LeftBorderSum, RightBorderSum;
    int Center, i;

    if( Left == Right ) {    /* Base case: one element */
        if( A[ Left ] > 0 )
            return A[ Left ];
        else
            return 0;
    }
    /* ... the function body continues in Implementation II */

/* Initial Call */
int MaxSubSum3( const int A[ ], int N ) {
    return MaxSubSum( A, 0, N - 1 );
}

/* Utility Function */
static int Max3( int A, int B, int C ) {
    return A > B ? ( A > C ? A : C ) : ( B > C ? B : C );
}](https://image.slidesharecdn.com/3chapter2algorithmanalysispart2-181027235948/85/3-chapter2-algorithm_analysispart2-5-320.jpg)

![Solutions for the Maximum Subsequence Sum Problem: Algorithm 3 – Implementation II

/* Implementation (continued) */
    /* Calculate the center */
    Center = ( Left + Right ) / 2;

    /* Make recursive calls */
    MaxLeftSum = MaxSubSum( A, Left, Center );
    MaxRightSum = MaxSubSum( A, Center + 1, Right );

    /* Find the max subsequence sum in the left half where the */
    /* subsequence spans the last element of the left half */
    MaxLeftBorderSum = 0; LeftBorderSum = 0;
    for( i = Center; i >= Left; i-- ) {
        LeftBorderSum += A[ i ];
        if( LeftBorderSum > MaxLeftBorderSum )
            MaxLeftBorderSum = LeftBorderSum;
    }](https://image.slidesharecdn.com/3chapter2algorithmanalysispart2-181027235948/85/3-chapter2-algorithm_analysispart2-6-320.jpg)

![Solutions for the Maximum Subsequence Sum Problem: Algorithm 3 – Implementation III

/* Implementation (continued) */
    /* Find the max subsequence sum in the right half where the */
    /* subsequence spans the first element of the right half */
    MaxRightBorderSum = 0; RightBorderSum = 0;
    for( i = Center + 1; i <= Right; i++ ) {
        RightBorderSum += A[ i ];
        if( RightBorderSum > MaxRightBorderSum )
            MaxRightBorderSum = RightBorderSum;
    }

    /* The function Max3 returns the largest of */
    /* its three arguments */
    return Max3( MaxLeftSum, MaxRightSum,
                 MaxLeftBorderSum + MaxRightBorderSum );
}](https://image.slidesharecdn.com/3chapter2algorithmanalysispart2-181027235948/85/3-chapter2-algorithm_analysispart2-7-320.jpg)
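
Because the slides split Algorithm 3 across three pages, it may help to see the pieces assembled into one compilable unit. The sketch below keeps the slides' names and logic but reorders the functions so that each is defined before it is used. The guard for N <= 0 in `MaxSubSum3` is an added assumption and is not part of the slides.

```c
/* Returns the largest of its three arguments. */
static int Max3( int A, int B, int C ) {
    return A > B ? ( A > C ? A : C ) : ( B > C ? B : C );
}

/* Maximum subsequence sum of A[Left..Right], assuming Left <= Right. */
static int MaxSubSum( const int A[ ], int Left, int Right ) {
    int MaxLeftSum, MaxRightSum;
    int MaxLeftBorderSum, MaxRightBorderSum;
    int LeftBorderSum, RightBorderSum;
    int Center, i;

    if( Left == Right )                  /* Base case: one element */
        return A[ Left ] > 0 ? A[ Left ] : 0;

    Center = ( Left + Right ) / 2;
    MaxLeftSum = MaxSubSum( A, Left, Center );
    MaxRightSum = MaxSubSum( A, Center + 1, Right );

    /* Best sum that ends at the last element of the left half */
    MaxLeftBorderSum = 0; LeftBorderSum = 0;
    for( i = Center; i >= Left; i-- ) {
        LeftBorderSum += A[ i ];
        if( LeftBorderSum > MaxLeftBorderSum )
            MaxLeftBorderSum = LeftBorderSum;
    }

    /* Best sum that starts at the first element of the right half */
    MaxRightBorderSum = 0; RightBorderSum = 0;
    for( i = Center + 1; i <= Right; i++ ) {
        RightBorderSum += A[ i ];
        if( RightBorderSum > MaxRightBorderSum )
            MaxRightBorderSum = RightBorderSum;
    }

    /* The answer lies in the left half, the right half, or spans both */
    return Max3( MaxLeftSum, MaxRightSum,
                 MaxLeftBorderSum + MaxRightBorderSum );
}

/* Initial call. The N <= 0 guard is an assumption added here. */
int MaxSubSum3( const int A[ ], int N ) {
    if( N <= 0 )
        return 0;
    return MaxSubSum( A, 0, N - 1 );
}
```

With the illustrative input { -2, 11, -4, 13, -5, -2 }, `MaxSubSum3` returns 20 (the subsequence 11, -4, 13). For an all-negative input it returns 0, which corresponds to the empty subsequence.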

![Solutions for the Maximum Subsequence Sum Problem: Algorithm 4

• Algorithm 4 is O(N).

• Why does the algorithm actually work? It is an improvement over Algorithm 2 given the following:

• Observation 1: If A[i] < 0 then it cannot start an optimal subsequence. Hence, no negative subsequence can be a prefix of the optimal subsequence.

• Observation 2: If $\sum_{k=i}^{j} A[k] < 0$, i can advance to j+1.

• Proof: Let $p \in [i+1..j]$. Any subsequence starting at p is not larger than the corresponding subsequence starting at i, since j is the first index causing sum < 0 (so the sum of A[i..p-1] is non-negative).

int MaxSubSum4( const int A[ ], int N ) {
    int ThisSum, MaxSum, j;

    ThisSum = MaxSum = 0;
    for( j = 0; j < N; j++ ) {
        ThisSum += A[ j ];
        if( ThisSum > MaxSum )
            MaxSum = ThisSum;
        else if( ThisSum < 0 )
            ThisSum = 0;          /* a negative prefix is discarded */
    }
    return MaxSum;
}](https://image.slidesharecdn.com/3chapter2algorithmanalysispart2-181027235948/85/3-chapter2-algorithm_analysispart2-9-320.jpg)
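
Observation 2 can be made visible by tracking the candidate start index explicitly. The following is a sketch rather than the slides' code: `MaxSubSum4WithRange` and its `Start`/`End` output parameters are hypothetical additions to Algorithm 4 that report which subsequence attains the maximum.

```c
/* Hypothetical variant of MaxSubSum4 that also reports the inclusive
   index range [*Start, *End] of a maximum subsequence.
   An empty result (sum 0) is reported as *Start = 0, *End = -1. */
int MaxSubSum4WithRange( const int A[ ], int N, int *Start, int *End ) {
    int ThisSum = 0, MaxSum = 0;
    int i = 0;   /* candidate start of the current run */
    int j;

    *Start = 0;
    *End = -1;
    for( j = 0; j < N; j++ ) {
        ThisSum += A[ j ];
        if( ThisSum > MaxSum ) {
            MaxSum = ThisSum;
            *Start = i;
            *End = j;
        } else if( ThisSum < 0 ) {
            /* Observation 2: sum(A[i..j]) < 0, so i advances to j+1 */
            ThisSum = 0;
            i = j + 1;
        }
    }
    return MaxSum;
}
```

On the illustrative input { -2, 11, -4, 13, -5, -2 } the function returns 20 with the range 1..3. The start index moves past the leading -2 as soon as the running sum goes negative.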

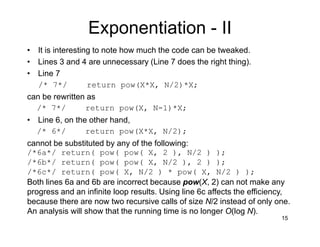

![Binary Search - I

• Definition: Given an integer x and integers A_0, A_1, ..., A_{N-1}, which are presorted and already in memory, find i such that A_i = x, or return i = -1 if x is not in the input.

• The loop is O(1) per iteration. It starts with High - Low = N - 1 and ends with High - Low ≤ -1. Every time through the loop the value High - Low must be at least halved from its previous value; thus, the loop is repeated at most $\lceil \log(N-1) \rceil + 2 = O(\log N)$ times.

typedef int ElementType;
#define NotFound (-1)

int BinarySearch( const ElementType A[ ], ElementType X, int N ) {
    int Low, Mid, High;

    Low = 0; High = N - 1;
    while( Low <= High ) {
        Mid = ( Low + High ) / 2;
        if( A[ Mid ] < X )
            Low = Mid + 1;       /* X can only be in the upper half */
        else if( A[ Mid ] > X )
            High = Mid - 1;      /* X can only be in the lower half */
        else
            return Mid;          /* Found */
    }
    return NotFound;
}](https://image.slidesharecdn.com/3chapter2algorithmanalysispart2-181027235948/85/3-chapter2-algorithm_analysispart2-11-320.jpg)
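
The logarithmic bound can be observed directly by counting loop iterations. The sketch below is a hypothetical variant, `BinarySearchCounted`, which is not part of the slides. It adds an `Iterations` output parameter and computes the midpoint as `Low + (High - Low) / 2`, a common way to avoid integer overflow in `Low + High` for very large arrays.

```c
#define NotFound (-1)

/* Hypothetical variant of BinarySearch: same search logic, but it also
   stores the number of loop iterations performed in *Iterations. */
int BinarySearchCounted( const int A[ ], int X, int N, int *Iterations ) {
    int Low = 0, High = N - 1, Mid;

    *Iterations = 0;
    while( Low <= High ) {
        ( *Iterations )++;
        Mid = Low + ( High - Low ) / 2;   /* overflow-safe midpoint */
        if( A[ Mid ] < X )
            Low = Mid + 1;
        else if( A[ Mid ] > X )
            High = Mid - 1;
        else
            return Mid;                   /* Found */
    }
    return NotFound;
}
```

Searching the 16 sorted values 0 through 15 for an absent key larger than all of them takes 5 iterations, which is within the bound of ⌈log(15)⌉ + 2 = 6 (logarithm base 2).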