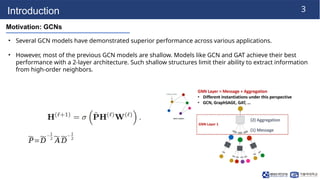

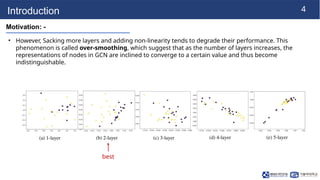

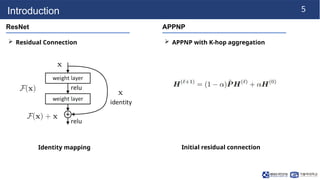

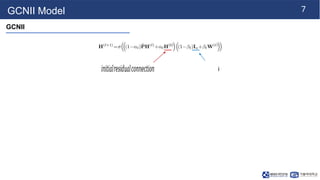

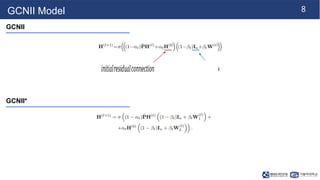

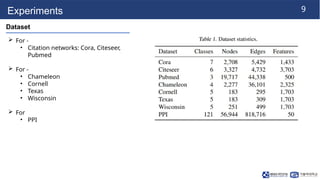

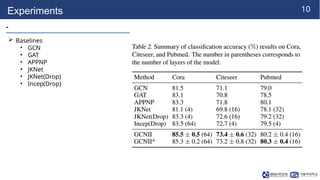

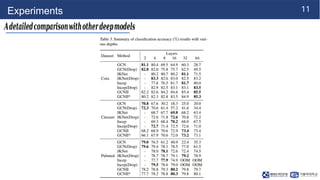

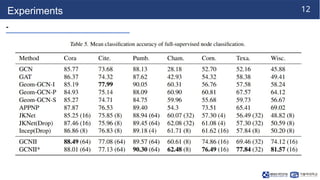

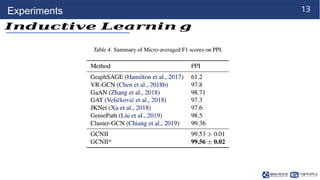

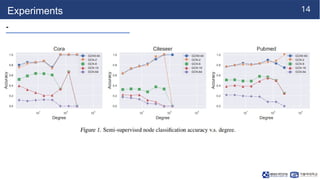

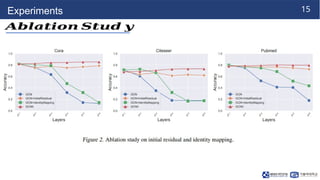

The document presents the GCNII model, a deep graph convolutional network (GCN) that relieves over-smoothing through two techniques: initial residual connections and identity mapping. Experimental results indicate that GCNII achieves state-of-the-art performance on a range of semi-supervised and full-supervised node classification tasks, outperforming previous shallow models. The experiments use several datasets, including the citation networks Cora, Citeseer, and Pubmed, the web networks Chameleon, Cornell, Texas, and Wisconsin, and the PPI dataset for inductive learning.
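The two techniques named above can be written as one layer update, $H^{(\ell+1)} = \sigma\big(((1-\alpha)\tilde{P}H^{(\ell)} + \alpha H^{(0)})((1-\beta_\ell)I + \beta_\ell W^{(\ell)})\big)$, where $\tilde{P} = \tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}$ is the renormalized adjacency with self-loops and $\beta_\ell = \log(\lambda/\ell + 1)$. The NumPy code below is a minimal sketch of that update, not the authors' implementation. Some parts are assumptions made for illustration. The helper names (`normalized_adjacency`, `gcnii_layer`, `gcnii_forward`) are hypothetical. The values `alpha=0.1` and `lam=0.5` are illustrative defaults. The sketch also omits the input and output fully connected layers, training, and dropout.

```python
import numpy as np


def normalized_adjacency(adj: np.ndarray) -> np.ndarray:
    """Return D~^{-1/2} (A + I) D~^{-1/2}, the renormalized adjacency used by GCN."""
    a_tilde = adj + np.eye(adj.shape[0])        # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_tilde.sum(axis=1))
    return d_inv_sqrt[:, None] * a_tilde * d_inv_sqrt[None, :]


def gcnii_layer(p_hat, h, h0, weight, alpha, beta):
    """One GCNII-style layer (sketch): initial residual plus identity mapping."""
    # Initial residual: mix the propagated features with the layer-0 representation H^(0).
    support = (1.0 - alpha) * (p_hat @ h) + alpha * h0
    # Identity mapping: shrink the (square) weight matrix toward the identity.
    mapping = (1.0 - beta) * np.eye(weight.shape[0]) + beta * weight
    return np.maximum(support @ mapping, 0.0)   # ReLU as the nonlinearity sigma


def gcnii_forward(adj, h0, weights, alpha=0.1, lam=0.5):
    """Stack len(weights) layers; beta_l = log(lam / l + 1) decays with depth l."""
    p_hat = normalized_adjacency(adj)
    h = h0
    for layer, w in enumerate(weights, start=1):
        beta = np.log(lam / layer + 1.0)
        h = gcnii_layer(p_hat, h, h0, w, alpha, beta)
    return h


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)  # 3-node path graph
    h0 = rng.standard_normal((3, 4))                                 # hypothetical H^(0)
    weights = [0.1 * rng.standard_normal((4, 4)) for _ in range(8)]  # 8 layers, width 4
    out = gcnii_forward(adj, h0, weights)
    print(out.shape)  # (3, 4)
```

Because $\beta_\ell$ shrinks as depth grows, deeper layers apply a mapping close to the identity. The $\alpha H^{(0)}$ term keeps a fixed share of the initial representation in every layer. Together these are what let the stack grow deep without all node representations collapsing to the same value.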