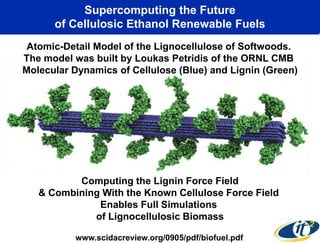

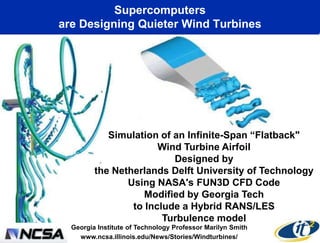

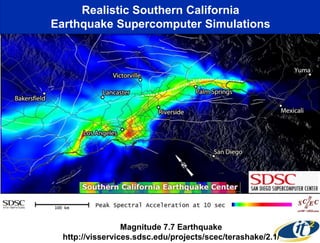

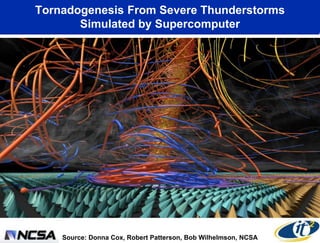

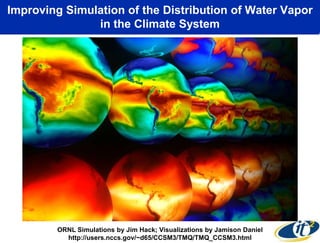

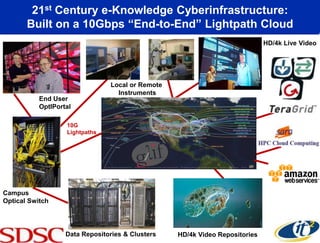

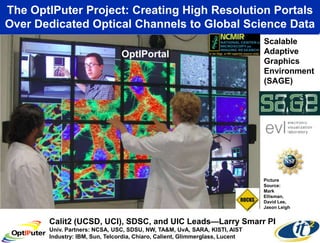

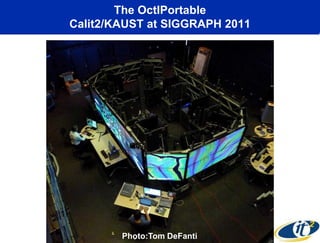

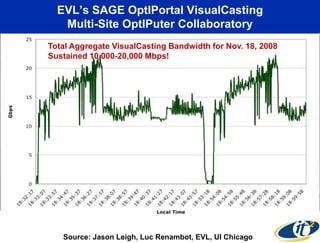

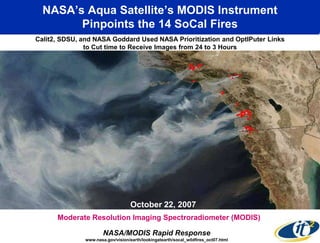

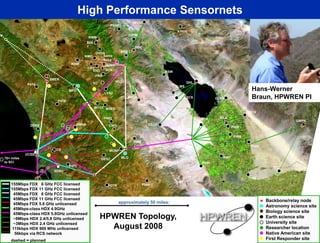

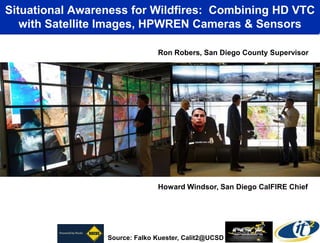

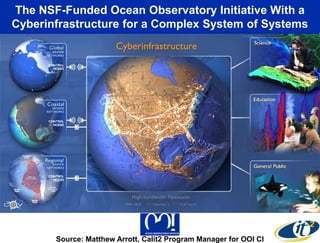

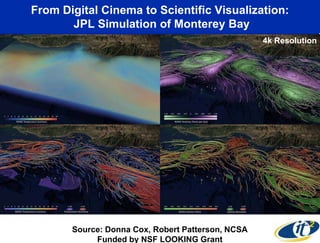

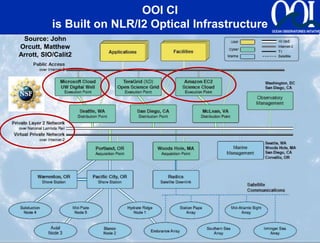

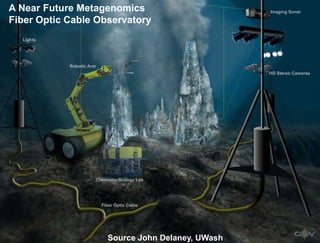

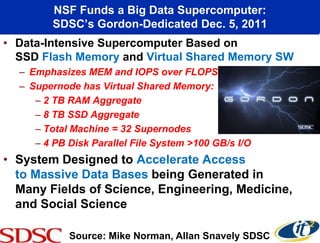

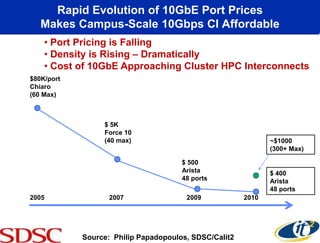

In this keynote, Dr. Larry Smarr discusses how high-performance computing and e-infrastructure advance scientific simulation in fields such as biology and climate science. The OptIPuter project illustrates how dedicated lightpaths can give researchers access to global data and instruments, supporting the next generation of data-driven research. In collaboration with organizations such as SURFnet, efforts are underway to build high-bandwidth collaboratories and to strengthen computational research and visualization services.