
- 1. Nonlinear inversion of electrical resistivity imaging using pruning Bayesian neural networks*

Jiang Fei-Bo1,2, Dai Qian-Wei♦2, and Dong Li2,3

APPLIED GEOPHYSICS, Vol. 13, No. 2 (June 2016), P. 267-278, 7 Figures. DOI: 10.1007/s11770-016-0561-1

Manuscript received by the Editor January 17, 2015; revised manuscript received June 4, 2016.

*This work was supported by the National Natural Science Foundation of China (Grant No. 41374118), the Research Fund for the Higher Education Doctoral Program of China (Grant No. 20120162110015), the China Postdoctoral Science Foundation (Grant No. 2015M580700), the Hunan Provincial Natural Science Foundation, China (Grant No. 2016JJ3086), the Hunan Provincial Science and Technology Program, China (Grant No. 2015JC3067), and the Scientific Research Fund of Hunan Provincial Education Department, China (Grant No. 15B138).

1. College of Physics and Information Science, Hunan Normal University, Changsha 410081, China.
2. School of Geosciences and Info-Physics, Central South University, Changsha 410083, China.
3. Department of Information Science and Engineering, Hunan International Economics University, Changsha 410205, China.

♦Corresponding author: Dai Qian-Wei (Email: qwdai@csu.edu.cn)

© 2016 The Editorial Department of APPLIED GEOPHYSICS. All rights reserved.

Abstract: Conventional artificial neural networks used to solve the electrical resistivity imaging (ERI) inversion problem suffer from overfitting and local minima. To solve these problems, we propose a pruning Bayesian neural network (PBNN) nonlinear inversion method and a sample design method based on the K-medoids clustering algorithm. In the sample design method, the training samples of the neural network are designed according to the prior information provided by the K-medoids clustering results; thus, the training process of the neural network is well guided.
The proposed PBNN, based on Bayesian regularization, is used to select the hidden layer structure by assessing the effect of each hidden neuron on the inversion results. Then, the hyperparameter αk, which is based on the generalized mean, is chosen to guide the pruning process according to the prior distribution of the training samples under the small-sample condition. The proposed algorithm is more efficient than other common adaptive regularization methods in geophysics. The inversion of synthetic data and field data suggests that the proposed method suppresses the noise in the neural network training stage and enhances the generalization. The inversion results with the proposed method are better than those of the BPNN, RBFNN, and RRBFNN inversion methods as well as the conventional least squares inversion.

Keywords: Electrical resistivity imaging, Bayesian neural network, regularization, nonlinear inversion, K-medoids clustering

Introduction

Electrical resistivity imaging (ERI) is widely used in hydrogeological, environmental, and archaeological investigations, as well as in mineral and oil and gas exploration. During the last several decades, various traditional approaches to the interpretation of geoelectrical resistivity data have been published. These methods are based on linear or quasilinear inversion techniques, such as the alpha center method (Shima, 1992), the least squares method (Loke and Barker, 1995), and the integral method (Lesur et al., 1999).

- 2. Nevertheless, the geophysical inversion problem is a typical nonlinear inversion problem, and the traditional linear approaches depend on the choice of the initial model. If the initial model is poorly chosen, the linear inversion method falls into a local minimum and returns an inaccurate solution. In recent years, nonlinear inversion methods have been used in ERI inversion. Artificial neural networks (ANNs) are widely used in nonlinear inversion methods for one- and multidimensional applications. Calderón-Macías et al. (2000) applied ANN to vertical electrical sounding and seismic inversion. El-Qady et al. (2000) studied 1D and 2D geoelectrical resistivity data using ANN-based inversion, designed the neural network structure, and compared the performance of different learning algorithms. Xu and Wu (2006) introduced the backpropagation neural network (BPNN) to 2D resistivity inversion for the pole–pole configuration. Neyamadpour et al. (2009) built an ERI inversion model based on the Wenner–Schlumberger configuration using the MATLAB Neural Network Toolbox. Ho (2009) used BPNN to invert 3D borehole-to-surface electrical data, described the sample generation method, and compared the inversion performance of different training algorithms in borehole-to-surface exploration. The studies mentioned above used BPNN to invert ERI data. BPNN is widely applied to ERI inversion problems despite its slow convergence, low accuracy, and tendency to overfit. The parameters of 2D and 3D ERI models are complex and the search space of BPNN expands rapidly as the number of model parameters increases; therefore, the convergence and accuracy of the ANN inversion algorithms are intensively studied.
Global search algorithms have been combined with BPNN to address its sensitivity to the initial values and its tendency to converge to local optimal solutions. Zhang and Liu (2011) proposed an improved BPNN with ant colony optimization, and Dai and Jiang (2013) presented a hybrid particle swarm optimization (PSO)–BPNN inversion and used a chaotic oscillating PSO algorithm to optimize the BPNN parameters. However, global search algorithms have low computational efficiency and the optimized BPNN inversion results are sensitive to noise. The trained BPNN generalizes poorly, which hinders the application of theoretical models to actual engineering problems. Maiti et al. (2012) introduced regularization neural networks to resistivity inversion and used an algorithm based on Bayesian neural network (BNN) and Markov chain Monte Carlo (MCMC) simulation to invert vertical electrical sounding measurements for groundwater exploration. BNN can minimize the redundant structure of the neural network hidden layer, avoid overfitting, and show good generalization in field data inversion.

In this study, an improved pruning Bayesian neural network (PBNN), which computes the regularization coefficients and prunes the hidden layer nodes based on Bayesian statistics and neural networks, is developed. The neural network suppresses the weight vectors of the different hidden nodes using Bayesian statistics and minimizes the redundant structure of the hidden layer, which solves the hidden layer design problem and enhances the generalization of neural networks. The proposed regularization method is compared with the Bayesian method, the CMD method, and the Zhdanov method. The PBNN ERI inversion model is established based on the regularization neural network.
Experimental results based on noisy synthetic and field data verify that the proposed method is more accurate than BPNN, the radial basis function neural network (RBFNN), and the regularized radial basis function neural network (RRBFNN), as well as the least squares method, and can be used in engineering practice.

Regularization neural networks

Generalization of neural networks

Generalization means that the trained neural network can accurately predict data from test samples with the same intrinsic laws. Many factors affect the generalization of neural networks, such as the quality and quantity of the training samples, the structure of the neural network, and the complexity of the problem being solved. In ERI inversion, the complexity of the problem depends on the exploration scale and geoelectrical structure, which is difficult to control; therefore, the chief method for improving generalization is to optimize the structure and training samples of the neural network. Typically, field data contain noise. If the neural network is overtrained or too complex, it will not learn the intrinsic laws of the problem but only the nonrepresentative features or the noise. For a new input sample, the output may then differ from the objective values and the generalization suffers. This is known as overfitting.

- 3. The generalization is closely related to the scale of the neural network and of the training samples. Under a given learning accuracy, the neural network structure design is an underdetermined problem. The regularization approach optimizes the available hidden layer, which improves the generalization of the neural network and yields a compact neural network structure that matches the complexity of the training samples.

Regularization approach

The regularization approach is used to amend the objective function and improve the generalization of neural networks. In general, the fitting error of the neural network is introduced to the objective function. For given data pairs $D = \{(\mathbf{x}_1, \mathbf{y}_1), (\mathbf{x}_2, \mathbf{y}_2), \ldots, (\mathbf{x}_N, \mathbf{y}_N)\}$, the fitting error function is (MacKay, 1992)

$$E_D(\mathbf{w}) = \frac{1}{2}\sum_{i=1}^{N}\left(\mathbf{f}(\mathbf{x}_i, \mathbf{w}) - \mathbf{y}_i\right)^2, \tag{1}$$

where $\mathbf{f}(\mathbf{x}, \mathbf{w})$ explicitly maps the input vector $\mathbf{x}_i = [x_{i,1}, x_{i,2}, \ldots, x_{i,n}]^T \in R^n$ to the output vector $\mathbf{y}_i = [y_{i,1}, y_{i,2}, \ldots, y_{i,m}]^T \in R^m$, N is the number of samples, and $\mathbf{w}$ is the weight vector of the neural network. Training the neural network amounts to finding the weight vector w for which the mapping f(x, w) has the smallest fitting error ED(w). This is an underdetermined problem. To maintain the stability of the training process and obtain accurate weight vectors, the fitting error function ED(w) is replaced by a new objective function F(w) called the generalized error function (MacKay, 1992)

$$F(\mathbf{w}) = \beta E_D(\mathbf{w}) + \alpha E_w(\mathbf{w}) = \beta\,\frac{1}{2}\sum_{i=1}^{N}\left(\mathbf{f}(\mathbf{x}_i, \mathbf{w}) - \mathbf{y}_i\right)^2 + \alpha\,\frac{1}{2}\sum_{j=1}^{M} w_j^2, \tag{2}$$

where Ew(w) is a stabilizing function called the weight decay term, M denotes the number of weight values, and the parameters α and β are hyperparameters (also called regularization coefficients), which affect the complexity and generalization of the neural network. The selection of the regularization coefficients directly affects the structure and the inversion accuracy of the neural network.
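As a rough illustration of Eqs. 1 and 2, the following Python sketch evaluates the fitting error, the weight decay term, and the generalized error for a toy one-input linear model. The function names, the toy model `linear_model`, and the values chosen for α and β are assumptions made for this example only; they are not taken from the paper.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Model = Callable[[Vector, Vector], List[float]]


def fitting_error(f: Model, xs: Sequence[Vector], ys: Sequence[Vector], w: Vector) -> float:
    """E_D(w) = 1/2 * sum_i ||f(x_i, w) - y_i||^2 (Eq. 1)."""
    total = 0.0
    for x, y in zip(xs, ys):
        prediction = f(x, w)
        total += sum((p - t) ** 2 for p, t in zip(prediction, y))
    return 0.5 * total


def weight_decay(w: Vector) -> float:
    """E_w(w) = 1/2 * sum_j w_j^2, the stabilizing term of Eq. 2."""
    return 0.5 * sum(wj * wj for wj in w)


def generalized_error(f: Model, xs: Sequence[Vector], ys: Sequence[Vector],
                      w: Vector, alpha: float, beta: float) -> float:
    """F(w) = beta * E_D(w) + alpha * E_w(w) (Eq. 2)."""
    return beta * fitting_error(f, xs, ys, w) + alpha * weight_decay(w)


def linear_model(x: Vector, w: Vector) -> List[float]:
    """Hypothetical stand-in for the network mapping f(x, w): y = w0 * x + w1."""
    return [w[0] * x[0] + w[1]]


xs = [[0.0], [1.0], [2.0]]
ys = [[1.0], [3.0], [5.0]]   # generated by y = 2x + 1 without noise
w_exact = [2.0, 1.0]

# With the exact weights the data term vanishes and only the weight decay remains.
print(generalized_error(linear_model, xs, ys, w_exact, alpha=0.1, beta=1.0))
```

Raising α relative to β penalizes large weights more strongly, which is the trade-off that the regularization coefficients control.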
Bayesian neural networks

Bayesian statistics use probability and prior information. Thus, the weight vectors of neural networks are interpreted as stochastic variables, the objective function is the likelihood function of the training samples, and the weight decay is the prior probability distribution of the network parameters. The prior probability distribution is applied to adjust the posterior distribution of the network parameters for a given dataset and the corresponding output is computed based on the posterior probability distribution. The Bayesian neural network (BNN) is a typical regularization neural network that estimates the regularization coefficients α and β using Bayesian theory (MacKay, 1992). The workflow of BNN consists of the following steps.

(1) Choose the initial values for BNN, including the number of hidden neurons, the weight vector w, and the hyperparameters α and β.
(2) Use a standard nonlinear training algorithm, e.g., the Levenberg–Marquardt or RPROP algorithm, to find the weight vector w that minimizes the objective function.
(3) Compute the new values for the hyperparameters α and β using Bayesian statistics.
(4) Repeat steps (2) and (3) until convergence.

According to the BNN workflow, the regularization coefficients (α and β) are introduced to the objective function to solve the overfitting problem and improve the generalization, which is critical to the inversion of noisy field data. Moreover, the weight vector w is adjusted and optimized based on the training samples using Bayesian statistics, and the training process does not require cross validation, which suits ERI inversion with small sample sets. In BNN, the prior information can be used to design the network structure, select the initial variables, and improve the generalization, which in theory improves the accuracy of the inversion results.

Nevertheless, BNN also has shortcomings. First, the design method for the hidden layer is not given. Second, even though the weight decay can improve the generalization of BNN, the computational complexity is not reduced. Third, the decay of the weights is distributed over the entire parameter space and the effect of each hidden node cannot be evaluated precisely. In ERI inversion, the scale of the structure of the neural network is large and the computational efficiency is low; thus, further optimization is necessary for BNN. An effective number of parameters is proposed in BNN but this does not simplify the hidden layer structure. Furthermore, the weight vectors that correspond to different hidden nodes converge inconsistently, so a uniform hyperparameter α cannot prune the hidden layer nodes.
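The four-step BNN workflow described in this section can be sketched on a deliberately tiny problem. The sketch below assumes a one-weight linear model y = w·x, for which step (2) has a closed-form minimizer, and it assumes the standard evidence-framework re-estimation formulas usually attributed to MacKay (1992): γ = M − α·trace(A⁻¹), α = γ/(2E_w), and β = (N − γ)/(2E_D), where A is the Hessian of F(w). These update formulas, the toy data, and the function name `evidence_loop` are not given in the text and are used here only for illustration; a real network would replace the closed-form step by Levenberg–Marquardt training.

```python
from typing import List, Tuple


def evidence_loop(xs: List[float], ys: List[float],
                  alpha: float = 1.0, beta: float = 1.0,
                  iterations: int = 20) -> Tuple[float, float, float, float]:
    """Alternate between fitting w and re-estimating alpha and beta.

    Assumed toy model: y = w * x with a single weight, so M = 1.
    The data must be noisy and non-degenerate so that E_D and E_w stay positive.
    """
    n_samples = len(xs)
    s_xx = sum(x * x for x in xs)
    s_xy = sum(x * y for x, y in zip(xs, ys))
    w, gamma = 0.0, 0.0
    for _ in range(iterations):
        # Step 2: minimize F(w) = beta * E_D + alpha * E_w (closed form here).
        w = beta * s_xy / (beta * s_xx + alpha)
        e_d = 0.5 * sum((w * x - y) ** 2 for x, y in zip(xs, ys))
        e_w = 0.5 * w * w
        # Step 3: effective number of parameters, gamma = M - alpha * trace(A^-1),
        # where A = beta * s_xx + alpha is the scalar Hessian of F.
        gamma = 1.0 - alpha / (beta * s_xx + alpha)
        alpha = gamma / (2.0 * e_w)
        beta = (n_samples - gamma) / (2.0 * e_d)
    # Step 4 is the loop itself; a fixed iteration count stands in for a convergence test.
    return w, alpha, beta, gamma


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]   # roughly y = 2x with small noise
w, alpha, beta, gamma = evidence_loop(xs, ys)
print(w, alpha, beta, gamma)
```

In this toy case the single weight is well determined by the data, so γ stays close to 1 and the regularized estimate ends up near the least squares slope.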

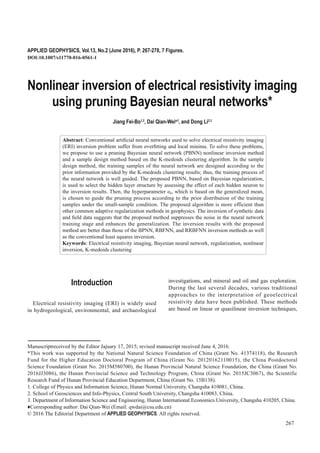

- 4. Pruning Bayesian neural networks

To optimize BNN, we propose a novel pruning Bayesian neural network (PBNN), which selects the hidden layer structure and improves the computational efficiency. Considering the convergence differences among the weight vectors of the hidden nodes, we redefine the objective function and the significance of the hidden neurons by using the corresponding weight vectors, and prune the hidden nodes that are insignificant. The rationale behind PBNN is the following. For a three-layer feedforward neural network, the weight matrix from the input layer to the hidden layer IW is denoted as (MacKay, 1992)

$$\mathbf{IW} = \begin{bmatrix} iw_{1,1} & \cdots & iw_{1,n} \\ \vdots & \ddots & \vdots \\ iw_{h,1} & \cdots & iw_{h,n} \end{bmatrix}, \tag{3}$$

where n is the number of input layer nodes and h is the number of hidden layer nodes. The weight matrix LW from the hidden layer to the output layer is (MacKay, 1992)

$$\mathbf{LW} = \begin{bmatrix} lw_{1,1} & \cdots & lw_{1,h} \\ \vdots & \ddots & \vdots \\ lw_{m,1} & \cdots & lw_{m,h} \end{bmatrix}, \tag{4}$$

where m is the number of output layer nodes. Then, we define the corresponding weight vector of the hidden neuron k with the equation

$$\mathbf{HW}_k = \left[\mathbf{iw}_k, \mathbf{lw}_k^{T}\right] = \left[iw_{k,1}, iw_{k,2}, \ldots, iw_{k,n}, lw_{1,k}, lw_{2,k}, \ldots, lw_{m,k}\right], \quad 1 \le k \le h, \tag{5}$$

where $\mathbf{iw}_k$ is the k-th row of IW and $\mathbf{lw}_k$ is the k-th column of LW, and the significance of the hidden neuron k with the following equation

$$E_{sig}(k) = \frac{1}{2}\sum_{w \in \mathbf{HW}_k} w^2 = \frac{1}{2}\mathbf{HW}_k \mathbf{HW}_k^{T}. \tag{6}$$

With decreasing Esig(k), the significance of the hidden neuron k also decreases. Hence, Esig(k) can be used as the pruning criterion and the hidden layer can be simplified to improve the computational efficiency.
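The significance measure of Eqs. 5 and 6 can be computed directly from the two weight matrices. The sketch below is a minimal illustration with nested Python lists; biases are ignored, the weight values are invented, and the rule in `pruning_candidate` (compare the weakest neuron's share of the total significance with a small threshold) is one simple way to turn Esig(k) into a pruning decision, assumed here for illustration.

```python
from typing import List, Optional

Matrix = List[List[float]]


def neuron_significance(iw: Matrix, lw: Matrix) -> List[float]:
    """Return E_sig(k) = 1/2 * HW_k HW_k^T for every hidden neuron k (Eq. 6).

    iw has shape h x n (input to hidden), lw has shape m x h (hidden to output).
    """
    significance = []
    for k, incoming in enumerate(iw):          # row k of IW
        outgoing = [row[k] for row in lw]      # column k of LW
        hw_k = list(incoming) + outgoing       # HW_k as in Eq. 5
        significance.append(0.5 * sum(v * v for v in hw_k))
    return significance


def pruning_candidate(iw: Matrix, lw: Matrix, threshold: float) -> Optional[int]:
    """Index of the least significant neuron if its share is below threshold, else None.

    Assumes at least one weight is nonzero so that the total is positive.
    """
    significance = neuron_significance(iw, lw)
    total = sum(significance)
    shares = [s / total for s in significance]
    weakest = min(range(len(shares)), key=shares.__getitem__)
    return weakest if shares[weakest] < threshold else None


iw = [[1.0, -2.0], [0.1, 0.0], [0.5, 0.5]]   # h = 3 hidden neurons, n = 2 inputs
lw = [[2.0, 0.1, -1.0]]                      # m = 1 output
print(neuron_significance(iw, lw))
print(pruning_candidate(iw, lw, threshold=0.03))
```

Returning at most one index per call mirrors a one-neuron-at-a-time pruning schedule; the caller would delete row k of `iw` and column k of `lw` and continue training.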
In the pruning algorithm, each hidden neuron k has a corresponding regularization coefficient αk, and we use two different computing methods for αk:

αk1 = (expression in α, γ, Esig(k), and gmean(Esig(w)); not recoverable from the extracted text) (7)

and

αk2 = (expression in α, γ, Esig(k), mean(Esig(w)), and std(Esig(w)); not recoverable from the extracted text) (8)

where gmean stands for the generalized mean function, mean stands for the average function, and std stands for the standard deviation function. αk1 is based on the generalized mean and αk2 assumes a normal distribution, γ is the effective number of parameters, and Esig(w) is the set of Esig(k) values calculated for all hidden neurons. Then, we rewrite the objective function using αk and Esig(k) as follows:

$$F_{sig}(\mathbf{w}) = \beta\,\frac{1}{2}\sum_{i=1}^{N}\left(\mathbf{f}(\mathbf{x}_i, \mathbf{w}) - \mathbf{y}_i\right)^2 + \sum_{k=1}^{h}\alpha_k E_{sig}(k). \tag{9}$$

The pruning algorithm attempts to directly link the inversion accuracy to the significance of the hidden neurons and uses it in the learning process to realize a compact neural network. The neural network starts as a relatively large network and hidden neurons are gradually removed to improve the generalization of the neural network in the training process. The pruning criterion is

$$ratio_k = \frac{E_{sig}(k)}{E_w(\mathbf{w})} \le \rho, \quad 1 \le k \le h, \tag{10}$$

where ratiok is the significance ratio of the hidden neuron k and ρ is the chosen pruning threshold. If ratiok is less than ρ, the hidden neuron k is insignificant and can be removed.

To evaluate the two regularization coefficients, the PBNN is trained with αk1 and αk2 using the synthetic training datasets described in the sample design section. The number of initial hidden nodes is set at 31 (Hecht-Nielsen, 1988) and the pruning threshold at 0.03 by trial and error. The neuron pruning progress and the training error performance are shown in Figure 1. Figure 1a shows the neuron pruning progress of the different regularization coefficients in the training stage.
It is not difficult to see that, compared to αk2, αk1 yields a more compact network: the number of hidden layer neurons is 18 after the pruning process.

- 5. Figure 1a shows that the pruning algorithm removes neurons smoothly and, because the pruning threshold is very small, only one neuron can be pruned each time. Figure 1b shows the performance of the different regularization coefficients in the training stage. The convergence curve obtained with αk1 declines sharply at the beginning of the procedure and decreases slowly at the later stages, and the same behavior is seen in Figure 1b for αk2. In general, αk1 has a smaller training error than αk2 because the sample size of the ERI inversion is too small to obey the normal distribution, and the generalized mean with a proper setting forces αk1 to better agree with the distribution of the ERI training samples.

Fig.1 Training process of PBNN: (a) pruning (hidden neuron number versus epoch) and (b) convergence (training performance versus epoch), for αk1 and αk2.

Neural network modeling of the ERI inversion

ERI inversion using neural network

The neural network inversion differs from conventional nonlinear inversion methods: it uses the ERI forward algorithm to produce a series of training samples and then applies the neural network model to learn from the samples and adjust the structure and weights to generate an inversion model that can match the field data. Neural networks can converge to global optimal solutions and are widely used in ERI inversion. The procedure for the ERI inversion using PBNN is shown in Figure 2. The neural network inversion procedure is divided into three stages (Ho, 2009).
Sample design stage: An effective sample set is designed and the ERI forward algorithm is used to generate samples $\{(\mathbf{x}_i, \mathbf{y}_i)\}_{i=1}^{N}$ that are suitable for neural network learning, where $\mathbf{x}_i = [x_{i,1}, x_{i,2}, \ldots, x_{i,n}]^T \in R^n$ represents the apparent resistivity data and $\mathbf{y}_i = [y_{i,1}, y_{i,2}, \ldots, y_{i,m}]^T \in R^m$ represents the true resistivity data.

Neural network training stage: After the parameters are initialized, the samples are input into the neural network and the pruning Bayesian algorithm is executed repeatedly while all parameters are adjusted simultaneously, so that the neural network model that produces the minimum generalized error for the given samples is selected.

Neural network testing stage: The test data are input into the selected neural network model and the inversion results are obtained.

Fig.2 Flowchart of the pruning Bayesian neural network (sample design stage, neural network training stage, and neural network testing stage).

- 6. Sample design of the neural network

The neural network modeling aims to find the relation between the input and output of a limited number of samples. When the size of the neural network is fixed, the quality and quantity of samples are the major factors affecting the generalization of the neural network. To ensure the generalization of the neural network, the selected training samples should include all the intrinsic information of the problem to be solved. For synthetic data, this requirement is easily satisfied, but the sample design is difficult for field data because of the irregularity of the anomalies and the lack of prior information. Consequently, there is less discussion in the literature about field data inversion using neural networks (Dai and Jiang, 2013; Xu and Wu, 2006; Zhang and Liu, 2011). The sample design is one of the difficulties to overcome in neural network field data inversion.

Cluster analysis is commonly used in machine learning to obtain prior information from unlabeled samples. Using cluster analysis, the prior distribution information of apparent resistivity data is introduced to the learning process of the neural network by adopting the apparent resistivity values as the data similarity indicators. In this study, the K-medoids algorithm is applied to cluster the field data, which then guides the training sample design of the neural network. The main steps of the K-medoids algorithm are the following.

(1) Arbitrarily choose k objects in a given dataset as the initial cluster centers.
(2) Assign each remaining object to the cluster with the nearest cluster center.
(3) Recalculate the cluster centers using the following equation (Park and Jun, 2009)

$$c_i = \arg\min_{p \in C_i} \sum_{j \in C_i} d(p, j), \quad i = 1, 2, \ldots, k, \tag{11}$$

where $C_i$ is the i-th cluster, $c_i$ is its center, and d(p, j) is the Euclidean distance between the apparent resistivity data points p and j.
(4) Repeat steps (2) and (3) until there is no change and output the clustered results.
(5) Use the cluster distribution and the cluster centers as the prior information to guide the neural network sample design.

An ERI field dataset is used to design the training samples of the neural network. The field data are from a survey that was carried out to determine the subsurface geological structure in eastern Guangdong Province, China. The data were collected on a W–E cross section using a Wenner–Schlumberger array with 60 electrodes at 5 m intervals and level number n = 10. This profile produced 480 measurements. The objective of this survey was to image the geological structure down to approximately 20 m. The inversion results were verified using data from the borehole drilled in the array profile (numbered ZK1) (Figure 3). The field data are shown in Figure 3 as a pseudosection of the apparent resistivity.

Fig.3 Apparent resistivity pseudosection (axes x (m) and z (m); color scale ρ (Ω·m); borehole ZK1 marked).

To provide high-quality training samples for the neural network, cluster analysis is applied to obtain the prior information of the apparent resistivity data. The number of cluster centers k is determined before the clustering. In this study, the partition index (SC) is used to evaluate the clustering results (Bensaid et al., 1996). For $k \in [2, 14]$, the SC of the field data after clustering is shown in Figure 4.

Figure 4 shows that the SC decreases gradually as k increases. The convergence of the SC suggests the successful clustering of the field data. When k = 4, the SC decreases to very low values. This means that the field data have been clustered adequately and are relatively simple, which simplifies the sample design of the neural network. The clustering results of the field data are shown in Figure 5.
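The K-medoids steps (1) to (4) listed in this section can be sketched for scalar apparent resistivity values, where the Euclidean distance reduces to an absolute difference. This is a minimal, standard-library sketch: the function names and the toy values are invented for illustration, and the initial medoids are passed in by the caller (instead of being drawn arbitrarily) so that the example is reproducible.

```python
from typing import Dict, List, Sequence, Tuple


def assign(values: Sequence[float], medoids: Sequence[int]) -> Dict[int, List[int]]:
    """Step 2: attach every point to the medoid with the nearest value."""
    clusters: Dict[int, List[int]] = {m: [] for m in medoids}
    for idx, value in enumerate(values):
        nearest = min(medoids, key=lambda m: abs(value - values[m]))
        clusters[nearest].append(idx)
    return clusters


def best_medoid(values: Sequence[float], members: Sequence[int]) -> int:
    """Step 3 (Eq. 11): the member with the smallest total distance to its cluster."""
    return min(members, key=lambda p: sum(abs(values[p] - values[j]) for j in members))


def k_medoids(values: Sequence[float], initial_medoids: Sequence[int],
              max_iter: int = 100) -> Tuple[List[int], Dict[int, List[int]]]:
    """Return the medoid indices and the clusters (medoid index -> member indices).

    Assumes the medoid values are distinct; duplicated values may merge clusters.
    """
    medoids = sorted(initial_medoids)          # step 1: caller-supplied start
    for _ in range(max_iter):
        clusters = assign(values, medoids)
        updated = sorted(best_medoid(values, members) if members else m
                         for m, members in clusters.items())
        if updated == medoids:                 # step 4: stop when nothing changes
            break
        medoids = updated
    return medoids, assign(values, medoids)


# Hypothetical apparent resistivity values (ohm-m) forming three obvious groups.
rho = [100.0, 110.0, 120.0, 500.0, 510.0, 520.0, 1200.0, 1210.0, 1220.0]
medoids, clusters = k_medoids(rho, initial_medoids=[0, 3, 6])
print([rho[m] for m in medoids])
print(clusters)
```

The medoid values and the cluster membership returned here play the role of the cluster centers and cluster distribution used in step (5) to guide the sample design. Like any K-medoids run, the result can depend on the initial medoids.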

- 7. As shown in Figure 5, the field data are divided into four clusters according to the Euclidean distances, and the cluster center information is given in Table 1. The prior information is obtained from Figure 5 (cluster distribution) and Table 1 (cluster centers), and then the samples of the neural network are designed based on the prior information.

Table 1 Cluster centers

| Cluster number | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Cluster center apparent resistivity (Ω·m) | 1243.3 | 722.39 | 210.57 | 479.4 |

Fig.5 Clustering results of the field data.

In the designed samples, a layered medium model is used to simulate the covering layer and weathered layer, and a rectangular medium is used to simulate the high and low resistivity anomalous bodies in the exploration area. The parameters of the samples are designed with reference to the cluster centers and cluster distribution. By modifying the size, location, and combination of the anomalies, we designed 40 training models. These models include 19200 data points, and 3% random noise is added to all the calculated apparent resistivity data to evaluate the stability and convergence of the inversion algorithm. The finite-volume approach with a cell-centered and variable grid is used for the forward calculation (Pidlisecky and Knight, 2008). We also use the grouping method for the samples (Dai and Jiang, 2013), and set the number of network input layer nodes at 30 and the number of network output layer nodes at 10 based on numerical simulations.

Regularization performance

The selection of the regularization coefficient directly affects the inversion results. A regularization algorithm that can adjust the regularization coefficients during the inversion process has broad applications. The most common adaptive regularization algorithms in geophysics are the Bayesian regularization (MacKay, 1992), the CMD regularization (Chen et al., 2005), and the Zhdanov regularization (Gribenko and Zhdanov, 2007).
All the adaptive regularization algorithms are described with the following equations (MacKay, 1992; Chen et al., 2005; Gribenko and Zhdanov, 2007)

$$F(\mathbf{w}) = E_D(\mathbf{w}) + \lambda_G E_w(\mathbf{w}), \tag{12}$$

$$\lambda_G = \begin{cases} \alpha_G / \beta_G, & \text{Bayesian} \\ E_{D,G-1}(\mathbf{w}) / \left(E_{D,G-1}(\mathbf{w}) + E_{w,G-1}(\mathbf{w})\right), & \text{CMD} \\ \lambda_1 \cdot q^{G-1}, & \text{Zhdanov} \end{cases} \tag{13}$$

where G is the iteration number, αG and βG are calculated by the Bayesian regularization, λG in the CMD regularization is computed from the data fitting function and the model stability function of the last iteration, and q in the Zhdanov regularization is set at 0.9 (Chen et al., 2011).

Fig.4 SC results of the clustering algorithm (partition index SC versus clustering number).

- 8. For the different regularization coefficients, the parameters of the feedforward neural networks are the input layer nodes n = 30, the initial hidden layer nodes h = 31, the output layer nodes m = 10, the maximum epochs ep = 300, and the training goal F(w) = 0.01. The Levenberg–Marquardt algorithm is used as the training function. To evaluate the generalization of the different regularization algorithms, the training ED(w) and testing ED(w) are calculated. The number of hidden nodes, the fitting error ED(w), and the corresponding computing time (CT) are listed in Table 2. In the simulation, we used MATLAB 2013 and a computer with an Intel Core i5-2450 CPU and 4 GB RAM, running Windows 7.

Table 2 Performance using different adaptive regularization methods

| Regularization algorithm | Hidden nodes number (training stage) | Training ED(w) | Training CT (s) | Testing ED(w) | Testing CT (s) |
|---|---|---|---|---|---|
| Pruning Bayesian | 18 | 19.1519 | 217.3845 | 26.3124 | 2.45 |
| Bayesian | 31 | 18.4325 | 278.9732 | 38.3948 | 3.76 |
| CMD | 31 | 76.3945 | 57.8364 | 80.6353 | 3.58 |
| Zhdanov | 31 | 54.4578 | 58.9374 | 112.8364 | 4.02 |

The experimental results suggest that the training fitting errors of the pruning Bayesian and the Bayesian methods are clearly lower than those of the CMD and Zhdanov methods. This means that the Bayesian method can optimize the regularization coefficients more effectively by using the posterior information under the small-sample condition. In the pruning Bayesian and CMD methods, the inversion errors are stable and the testing and training fitting errors are similar, whereas the fitting errors of the Bayesian and Zhdanov methods roughly double in the testing stage, which means that the generalization of the pruning Bayesian and CMD methods is better than that of the Bayesian and Zhdanov methods.
In general, all the regularization algorithms have low fitting errors in the training and testing stages, which demonstrates the applicability of the regularization neural networks to ERI inversion. The PBNN is the optimal model with the lowest testing fitting error and the best generalization ability, because it finds the best regularization coefficients and suppresses the weight vectors using Bayesian statistics, while the pruning algorithm removes the insignificant hidden nodes and selects a compact neural network structure automatically.

Another important evaluation index of the nonlinear inversion is the computing time. Neural network inversion differs from conventional nonlinear inversion methods in that it calls the forward solver only in the sample design stage. Therefore, the neural network inversion has higher computational efficiency than conventional nonlinear inversion methods. According to Table 2, the training CTs required by the pruning Bayesian and Bayesian methods are higher than the CTs of the CMD and Zhdanov methods. This is because the posterior analysis and the calculation of the hyperparameters in the pruning Bayesian and Bayesian methods are time consuming, whereas the CMD and Zhdanov methods calculate the regularization coefficients directly. In the testing stage, the differences among the CTs of all the regularization methods are not significant, primarily because there are no iterations in the testing stage and the corresponding CT is the time of only one forward calculation of the neural network. Nevertheless, the testing CT of the pruning Bayesian method is the smallest because of its compact neural network structure.

The optimal inversion model is the PBNN because of its compact structure and good generalization. Although the training CT for the PBNN is higher than that of the CMD and Zhdanov methods, the PBNN has the smallest testing fitting error and the best inversion performance under the small-sample condition. Thus, we chose the PBNN to invert the ERI data.
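For orientation, the three adaptive rules for the regularization coefficient compared in this section can be written as small Python functions. This is only a sketch under stated assumptions: the extracted form of Eq. 13 is damaged, so the CMD rule is assumed here to be the normalized ratio E_D/(E_D + E_w) of the previous iteration, and the Zhdanov rule is assumed to be the geometric decay λ_1·q^(G−1) with q = 0.9; the function names and numeric inputs are hypothetical.

```python
def lambda_bayesian(alpha_g: float, beta_g: float) -> float:
    """Bayesian rule: ratio of the two hyperparameters estimated at iteration G."""
    return alpha_g / beta_g


def lambda_cmd(e_d_prev: float, e_w_prev: float) -> float:
    """CMD rule (assumed form): data misfit share of the previous iteration."""
    return e_d_prev / (e_d_prev + e_w_prev)


def lambda_zhdanov(lambda_1: float, iteration: int, q: float = 0.9) -> float:
    """Zhdanov rule (assumed form): geometric decay lambda_1 * q**(G - 1), G >= 1."""
    return lambda_1 * q ** (iteration - 1)


# Illustrative values only; they are not taken from Table 2.
print(lambda_bayesian(alpha_g=0.5, beta_g=2.0))
print(lambda_cmd(e_d_prev=3.0, e_w_prev=1.0))
print([round(lambda_zhdanov(1.0, g), 4) for g in range(1, 6)])
```

The CMD and Zhdanov rules need only quantities that are already available at each iteration, whereas the Bayesian rule requires the hyperparameters α and β to be re-estimated, which is consistent with the longer training times reported for the Bayesian variants.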
Examples and results

The PBNN, the BPNN (Neyamadpour et al., 2009), the RBFNN (Srinivas et al., 2012), and the RRBFNN (Chen et al., 1996) are used to invert the ERI data and test the performance of the PBNN. All these neural networks are three-layer feedforward networks and their parameters are listed in Table 3.

Table 3 Parameters for the different neural networks

| Neural network | Input nodes | Output nodes | Hidden nodes | Training algorithm | Activation function | Epochs | Spread constant | Goal |
|---|---|---|---|---|---|---|---|---|
| PBNN | 30 | 10 | 18 (after pruning process) | Pruning Bayesian | tansig | 400 | - | 0.01 |
| BPNN | 30 | 10 | 31 | LM | tansig | 400 | - | 0.01 |
| RBFNN | 30 | 10 | - | OLS | radbas | - | 0.6 | 0.01 |
| RRBFNN | 30 | 10 | - | ROLS | radbas | - | 0.6 | 0.01 |

To evaluate the performance of the proposed PBNN method, we define the following indices (Liu et al., 2011).

Mean square error:

$$MSE = \frac{1}{N}\sum_{i=1}^{N}\left(z_i - \hat{z}_i\right)^2. \tag{14}$$

Determination coefficient:

$$R^2 = \frac{\left[\sum_{i=1}^{N}\left(z_i - \bar{z}\right)\left(\hat{z}_i - \bar{\hat{z}}\right)\right]^2}{\sum_{i=1}^{N}\left(z_i - \bar{z}\right)^2 \sum_{i=1}^{N}\left(\hat{z}_i - \bar{\hat{z}}\right)^2}. \tag{15}$$

Relative standard deviation:

$$RSD = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}\frac{\left(z_i - \hat{z}_i\right)^2}{z_i^2}}, \tag{16}$$

where $z_i$ and $\hat{z}_i$ are the actual (or measured) and fitted (or predicted) values, respectively, $N$ is the number of samples, and $\bar{z} = \frac{1}{N}\sum_{i=1}^{N} z_i$ and $\bar{\hat{z}} = \frac{1}{N}\sum_{i=1}^{N} \hat{z}_i$ are the averages of $z_i$ and $\hat{z}_i$ over all samples. R2 measures the correlation between the predicted and measured values and ranges between 0 and 1. The higher R2 is, the stronger the linear relation between the measured and predicted values. MSE and RSD are estimation errors: the lower they are, the smaller the prediction errors.

The evaluation indices for the training stage are tabulated in Table 4. The experimental results suggest that all the neural networks converge in the training stage despite their different features. The regularization neural networks (PBNN, RRBFNN) have higher MSE and RSD and thus perform worse than BPNN and RBFNN in the training stage. This is probably because the Bayesian regularization that is introduced to update the parameters of the neural networks accounts not only for the fitting error but also for weight decay. The training time of the regularization neural network methods is also longer than that of the BPNN and RBFNN owing to the more complex posterior analysis of the Bayesian method. On the other hand, the neural networks without regularization coefficients (BPNN, RBFNN) have higher R2, which means lower fitting errors; nevertheless, the stability and generalization of their inversion results are affected.

Table 4 Performance indicators of the different neural networks in the training stage

| Neural network | MSE | R2 | RSD |
|---|---|---|---|
| BPNN | 0.05214 | 0.9750 | 0.0368 |
| PBNN | 0.05585 | 0.9707 | 0.0501 |
| RRBFNN | 0.05622 | 0.9687 | 0.0685 |
| RBFNN | 0.03794 | 0.9834 | 0.0352 |

Synthetic data

The following model is used to test the inversion performance of the PBNN. It consists of a homogeneous 1000 Ω·m background with a 500 Ω·m upper layer, a 700 Ω·m middle layer, and a 50 Ω·m conductive block (Figure 6a). The synthetic dataset is generated using the same forward solver and parameters mentioned above. Then, 3% Gaussian noise is added to the apparent resistivity data. Figures 6b to 6e show the four inversion solutions. In all cases, the inversion results show the 500 Ω·m upper layer and the 50 Ω·m conductive block, whereas the 700 Ω·m middle layer is poorly resolved without regularization. The 50 Ω·m conductive block and the 500 Ω·m upper layer are clearly distinguished from the homogeneous background, which shows that the neural networks are useful for solving complex ERI inversion problems in regions of high resistivity contrast. For BPNN, slight distortion is seen at the bottom of the conductive block, whereas massive distortion is seen on both sides of the conductive block for RBFNN. This is because of overfitting in the neural networks without regularization coefficients, which is common with noisy samples. For the 700 Ω·m middle layer, the regularization neural networks (PBNN, RRBFNN) perform better than BPNN and RBFNN. The proposed PBNN reconstructs the size, location,
and sharpness of the 700 Ω·m middle layer better than RRBFNN. The performance of the neural networks for the above model is given in Table 5. The MSE and RSD of the regularization neural networks (PBNN and RRBFNN) are smaller than those of the BPNN and RBFNN. Because of the regularization coefficients, the noise suppression and generalization of the neural networks are enhanced. The main reasons for this are the pruning algorithm, which yields a compact neural network structure, and the error propagation method of PBNN; furthermore, the parameters of the neural network are affected by the MSE of all the samples. This global characteristic enhances the learning ability of the PBNN and yields better inversion performance than RRBFNN, which is characterized by a local response. R2 leads to the same conclusion: it is highest for PBNN because the proposed pruning Bayesian algorithm improves the generalization and strengthens the correlation between measured and predicted values.

Table 5 Testing stage performance indices of the different neural networks for the synthetic example

| Neural network | MSE | R2 | RSD |
|---|---|---|---|
| BPNN | 0.09133 | 0.9509 | 0.1053 |
| PBNN | 0.08956 | 0.9589 | 0.0763 |
| RRBFNN | 0.09094 | 0.9527 | 0.1008 |
| RBFNN | 0.09364 | 0.9255 | 0.1498 |

Fig. 6 Model dataset inversion: (a) model; (b) BPNN; (c) PBNN; (d) RRBFNN; (e) RBFNN. Panel axes: x (m) and z (m); scale: ρ (Ω·m).

Field data

As the true field resistivity is unknown, the performance indices (MSE, R2, and RSD) are calculated from the forward modeling results for the constructed model and the field data, and the inversion results are evaluated using the performance indices and drilling data (Ho, 2009). The inversion results are shown in Figure 7.

The geoelectrical models obtained by all inversion methods show the geological structure clearly. The size and location of the overburden, regolith, and fault zone agree well with the results of the more conventional least squares inversion. The regularization neural networks (PBNN and RRBFNN) clearly show the deep fault zone because of their ability to resolve structures with low resistivity contrast. The performance indices of the inversion methods are listed in Table 6.

Table 6 Testing stage performance indices of the different inversion methods for the field data

| Inversion method | MSE | R2 | RSD | Inversion depth at ZK1 (m) |
|---|---|---|---|---|
| BPNN | 0.2784 | 0.8276 | 0.1859 | 6.1634; 8.6114 |
| PBNN | 0.1332 | 0.9074 | 0.1617 | 5.4812; 8.4365 |
| RRBFNN | 0.1763 | 0.8791 | 0.1743 | 5.5043; 8.7258 |
| RBFNN | 0.3154 | 0.7701 | 0.1996 | 6.7245; 10.874 |
| Least-squares | 0.3022 | 0.8053 | 0.1843 | 5.5033; 7.7475 |

Fig. 7 Field data inversion: (a) BPNN; (b) PBNN; (c) RRBFNN; (d) RBFNN; (e) Least squares method. Panel axes: x (m) and z (m); scale: ρ (Ω·m).
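The three performance indices (Eqs. 14 to 16) and the depth comparison at borehole ZK1 can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' code: R2 is taken as the squared correlation between measured and predicted values, as the text describes it, RSD is read as the root of the summed squared relative errors divided by N − 1, and the function and variable names are hypothetical. The inverted depths are copied from Table 6, and the drilled depths (5.2 m and 8.5 m) are the values reported for ZK1.

```python
import numpy as np

def mse(z, z_hat):
    """Mean square error between measured z and predicted z_hat."""
    z, z_hat = np.asarray(z, float), np.asarray(z_hat, float)
    return np.mean((z - z_hat) ** 2)

def r_squared(z, z_hat):
    """Squared correlation coefficient between measured and predicted values."""
    z, z_hat = np.asarray(z, float), np.asarray(z_hat, float)
    dz, dz_hat = z - z.mean(), z_hat - z_hat.mean()
    return np.sum(dz * dz_hat) ** 2 / (np.sum(dz ** 2) * np.sum(dz_hat ** 2))

def rsd(z, z_hat):
    """Relative standard deviation of the prediction errors (assumes z != 0)."""
    z, z_hat = np.asarray(z, float), np.asarray(z_hat, float)
    return np.sqrt(np.sum(((z - z_hat) / z) ** 2) / (z.size - 1))

# Drilled interface depths at ZK1 (m) and inverted depths from Table 6 (m).
DRILLED_DEPTHS_M = np.array([5.2, 8.5])
INVERTED_DEPTHS_M = {
    "BPNN": (6.1634, 8.6114),
    "PBNN": (5.4812, 8.4365),
    "RRBFNN": (5.5043, 8.7258),
    "RBFNN": (6.7245, 10.874),
    "Least-squares": (5.5033, 7.7475),
}

def mean_depth_errors(inverted=INVERTED_DEPTHS_M, drilled=DRILLED_DEPTHS_M):
    """Mean absolute depth error (m) of each method over the two interfaces."""
    return {name: float(np.mean(np.abs(np.array(d) - drilled)))
            for name, d in inverted.items()}

if __name__ == "__main__":
    for name, err in sorted(mean_depth_errors().items(), key=lambda kv: kv[1]):
        print(f"{name:14s} mean |depth error| = {err:.3f} m")
```

Ranking the methods by mean absolute depth error gives a single number per method that can be read alongside the MSE, R2, and RSD columns of Table 6.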

According to MSE, R2, and RSD, the regularization neural networks (PBNN and RRBFNN) perform better than the other inversion methods. According to the drilling data, the regularization neural networks yield depths that are closer to the actual depths (5.2 m and 8.5 m) than those of the BPNN and RBFNN, because noisy training samples affect the generalization of the networks without regularization and reduce their inversion accuracy. All inversion results suggest that the regularization neural networks suppress the noise in the field data and enhance the generalization of the neural networks. The regularization neural networks have better fitting accuracy and produce more detailed information on the geological structure than the least squares inversion method. Based on the drilling data, the regularization neural networks also produce inversion results of higher precision than the least squares inversion method, especially for the depth of the regolith.

Conclusions

Because of the lack of prior information and because of noise interference, neural network inversion of field data has to overcome the following problems: (1) how to design training samples based on the data and (2) how to solve the overfitting problem and enhance the generalization by suppressing the noise in the training stage. In this study, an improved PBNN based on Bayesian regularization and a novel pruning algorithm is proposed for ERI inversion. First, Bayesian statistics are used to estimate the regularization coefficients of the feedforward neural network. Then, the pruning algorithm is used to obtain a compact hidden layer. Finally, a sample design method based on the K-medoids clustering algorithm is developed to obtain samples suited to the field data.
The results of the numerical simulations and the resistivity data inversion suggest that the proposed method offers better generalization and global search than BPNN, RBFNN, and RRBFNN, and that it is superior to conventional adaptive regularization methods for ERI inversion. However, the introduction of Bayesian regularization and K-medoids clustering increases the computing time and complexity. How to combine neural networks, regularization, and clustering will be examined in the future.

Acknowledgements

This research was supported by the National Natural Science Foundation of China (Grant No. 41374118), the Research Fund for the Higher Education Doctoral Program of China (Grant No. 20120162110015), the China Postdoctoral Science Foundation (Grant No. 2015M580700), the Hunan Provincial Natural Science Foundation, China (Grant No. 2016JJ3086), the Hunan Provincial Science and Technology Program, China (Grant No. 2015JC3067), and the Scientific Research Fund of Hunan Provincial Education Department, China (Grant No. 15B138). We would like to thank Drs. Chen Xiaobin and Shen Jinsong for their valuable comments that improved the quality of the manuscript.

References

Bensaid, A. M., Hall, L. O., Bezdek, J. C., et al., 1996, Validity-guided (re)clustering with applications to image segmentation: IEEE Transactions on Fuzzy Systems, 4(2), 112–123.
Calderón-Macías, C., Sen, M. K., and Stoffa, P. L., 2000, Artificial neural networks for parameter estimation in geophysics: Geophysical Prospecting, 48(1), 21–47.
Chen, S., Chng, E., and Alkadhimi, K., 1996, Regularized orthogonal least squares algorithm for constructing radial basis function networks: International Journal of Control, 64(5), 829–837.
Chen, X. B., Zhao, G. Z., Tang, J., et al., 2005, An adaptive regularized inversion algorithm for magnetotelluric data: Chinese Journal of Geophysics, 48(4), 937–946.
Chen, X., Yu, P., Zhang, L. L., et al., 2011, Adaptive regularized synchronous joint inversion of MT and seismic data: Chinese Journal of Geophysics, 54(10), 2673–2681.
Dai, Q. W., and Jiang, F. B., 2013, Nonlinear inversion for electrical resistivity tomography based on chaotic oscillation PSO-BP algorithm: The Chinese Journal of Nonferrous Metals, 23(10), 2897–2904.
El-Qady, G., and Ushijima, K., 2001, Inversion of DC resistivity data using neural networks: Geophysical Prospecting, 49(4), 417–430.
Gribenko, A., and Zhdanov, M., 2007, Rigorous 3D inversion of marine CSEM data based on the integral equation method: Geophysics, 72(2), WA73–WA84.
Hecht-Nielsen, R., 1988, Neurocomputing: picking the human brain: IEEE Spectrum, 25(3), 36–41.
Ho, T. L., 2009, 3-D inversion of borehole-to-surface electrical data using a back-propagation neural network: Journal of Applied Geophysics, 68(4), 489–499.
Lesur, V., Cuer, M., Straub, A., et al., 1999, 2-D and 3-D interpretation of electrical tomography measurements, Part 2: The inverse problem: Geophysics, 64(2), 396–402.
Liu, M., Liu, X., Wu, M., et al., 2011, Integrating spectral indices with environmental parameters for estimating heavy metal concentrations in rice using a dynamic fuzzy neural-network model: Computers & Geosciences, 37(10), 1642–1652.
Loke, M., and Barker, R., 1995, Least-squares deconvolution of apparent resistivity pseudosections: Geophysics, 60(6), 1682–1690.
MacKay, D. J., 1992, Bayesian interpolation: Neural Computation, 4(3), 415–447.
Maiti, S., Erram, V. C., Gupta, G., et al., 2012, ANN based inversion of DC resistivity data for groundwater exploration in hard rock terrain of western Maharashtra (India): Journal of Hydrology, 46(4), 294–308.
Neyamadpour, A., Taib, S., and Wan Abdullah, W. A. T., 2009, Using artificial neural networks to invert 2D DC resistivity imaging data for high resistivity contrast regions: A MATLAB application: Computers & Geosciences, 35(11), 2268–2274.
Park, H. S., and Jun, C. H., 2009, A simple and fast algorithm for K-medoids clustering: Expert Systems with Applications, 36(2), 3336–3341.
Pidlisecky, A., and Knight, R., 2008, FW2_5D: A MATLAB 2.5-D electrical resistivity modeling code: Computers & Geosciences, 34(12), 1645–1654.
Shima, H., 1992, 2-D and 3-D resistivity image reconstruction using crosshole data: Geophysics, 57(10), 1270–1281.
Srinivas, Y., Raj, A. S., Oliver, D. H., et al., 2012, A robust behavior of Feed Forward Back propagation algorithm of Artificial Neural Networks in the application of vertical electrical sounding data inversion: Geoscience Frontiers, 3(5), 729–736.
Xu, H. L., and Wu, X. P., 2006, 2-D resistivity inversion using the neural network method: Chinese Journal of Geophysics, 49(2), 584–589.
Zhang, L. Y., and Liu, H. F., 2011, The application of ABP method in high-density resistivity method inversion: Chinese Journal of Geophysics, 54(1), 227–233.

Jiang Fei-Bo, Ph.D., graduated from Central South University in 2014. His main research interests are forward electromagnetic modeling and inversion, and artificial intelligence. He is presently a lecturer at the College of Physics and Information Science at Hunan Normal University. Email: jiangfeibo@126.com