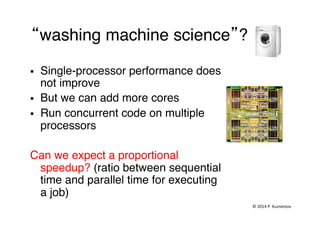

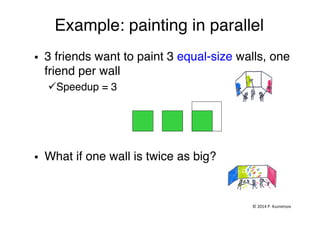

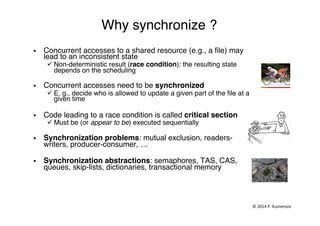

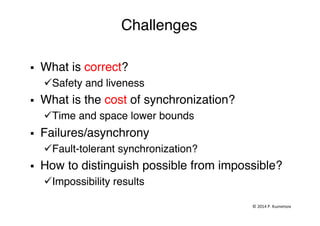

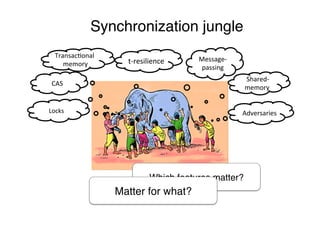

The document discusses concurrency and synchronization in distributed computing. It provides an overview of Petr Kuznetsov's research at Telecom ParisTech, which includes algorithms and models for distributed systems. Some key points discussed are:

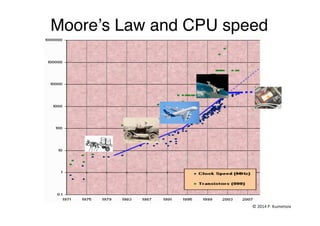

- Concurrency matters because multi-core processors and distributed systems are everywhere. However, synchronizing concurrent processes is challenging.

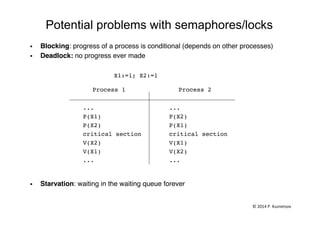

- Common synchronization problems include mutual exclusion, the readers-writers problem, and the producer-consumer problem. Synchronization tools include semaphores, transactional memory, and non-blocking algorithms.

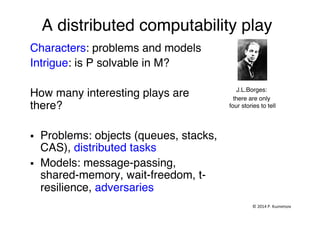

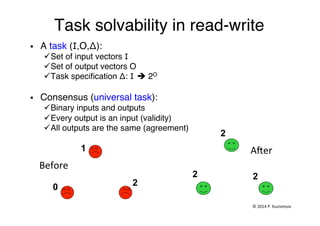

- Characterizing distributed computing models and determining what problems can be solved in a given model is an important area of research, with implications for distributed system design.

![Producer-consumer (bounded buffer) problem
• Producers put items in the buffer (of bounded size)
• Consumers get items from the buffer
• Every item is consumed, and no item is consumed twice
(Client-server, multi-threaded web servers, pipes, …)
Why synchronization? Items can get lost or consumed twice:
Producer:
/* produce item */
while (counter==MAX);
buffer[in] = item;
in = (in+1) % MAX;
counter++;
Consumer:
/* to consume item */
while (counter==0);
item = buffer[out];
out = (out+1) % MAX;
counter--;
/* consume item */
Race!
© 2014 P. Kuznetsov](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-21-320.jpg)
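
The race on `counter` exists because `counter++` and `counter--` are not atomic. Each one is a load, an arithmetic step, and a store. The minimal Python sketch below replays one bad interleaving of those steps by hand, so the lost update is deterministic rather than timing-dependent. The function names are hypothetical and not from the slides.

```python
def interleaved_increment_decrement(counter):
    """Replay one interleaving of the producer's counter++ and the consumer's
    counter--, each split into a separate load and store."""
    producer_tmp = counter   # producer loads counter
    consumer_tmp = counter   # consumer loads the same old value
    producer_tmp += 1        # producer computes counter + 1
    consumer_tmp -= 1        # consumer computes counter - 1
    counter = producer_tmp   # producer stores its result
    counter = consumer_tmp   # consumer's store overwrites the producer's update
    return counter


def sequential_increment_decrement(counter):
    """The same two operations, executed one after the other."""
    counter += 1
    counter -= 1
    return counter


print(sequential_increment_decrement(3))   # 3
print(interleaved_increment_decrement(3))  # 2: the increment was lost
```

After this run the buffer really holds 3 items, but `counter` says 2. The producer may therefore see a free slot that does not exist and overwrite an unconsumed item. The symmetric interleaving, where the producer's store lands last, leaves `counter` too high instead. The consumer could then read a slot that was already consumed.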

![Semaphores for producer-consumer
• 2 semaphores are used:
  • empty: indicates empty slots in the buffer (to be used by the producer)
  • full: indicates full slots in the buffer (to be read by the consumer)
shared semaphores empty := MAX, full := 0;
Producer:
P(empty);
buffer[in] = item;
in = (in+1) % MAX;
V(full);
Consumer:
P(full);
item = buffer[out];
out = (out+1) % MAX;
V(empty);](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-23-320.jpg)
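
The two-semaphore scheme above can be sketched in Python, with P and V mapped to `acquire` and `release` of `threading.Semaphore`. `BoundedBuffer` and `transfer` are hypothetical names. As on the slide, the sketch assumes one producer and one consumer. With several producers (or consumers), the updates of `in`/`out` would also need a mutex.

```python
import threading


class BoundedBuffer:
    """Single-producer/single-consumer bounded buffer built from the two
    semaphores on the slide: `empty` counts free slots, `full` counts filled ones."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.slots = [None] * capacity
        self.empty = threading.Semaphore(capacity)  # empty := MAX
        self.full = threading.Semaphore(0)          # full := 0
        self.in_ = 0   # next slot to write ("in" is a Python keyword)
        self.out = 0   # next slot to read

    def put(self, item):
        self.empty.acquire()                        # P(empty): wait for a free slot
        self.slots[self.in_] = item
        self.in_ = (self.in_ + 1) % self.capacity
        self.full.release()                         # V(full): one more filled slot

    def get(self):
        self.full.acquire()                         # P(full): wait for a filled slot
        item = self.slots[self.out]
        self.out = (self.out + 1) % self.capacity
        self.empty.release()                        # V(empty): one more free slot
        return item


def transfer(n_items, capacity=4):
    """Run one producer thread and one consumer thread; return what was consumed."""
    buf = BoundedBuffer(capacity)
    consumed = []

    def producer():
        for i in range(n_items):
            buf.put(i)

    def consumer():
        for _ in range(n_items):
            consumed.append(buf.get())

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed


print(transfer(1000) == list(range(1000)))  # True: nothing lost, nothing consumed twice
```

Busy-waiting on `counter` is gone: a thread blocks inside `acquire` until the other side signals with `release`.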

![Non-blocking algorithms
A process makes progress, regardless of the other processes
shared buffer[MAX] := empty; head := 0; tail := 0;
Producer put(item):
if (tail-head == MAX) {
  return(full);
}
buffer[tail%MAX] = item;
tail++;
return(ok);
Consumer get():
if (tail-head == 0) {
  return(empty);
}
item = buffer[head%MAX];
head++;
return(item);
Problems:
• works for 2 processes, but it is hard to say why it works
• multiple producers/consumers? Other synchronization problems?
(highly non-trivial in general)](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-25-320.jpg)
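
Below is a direct Python transcription of the slide's put/get, meant only to show the logic. The sentinel objects `FULL`, `EMPTY` and `OK` are assumptions standing in for the slide's return values. The two-process version works because each index has a single writer: only `put` writes `tail` and only `get` writes `head`. Each side also touches its slot before advancing its own index. In C or C++, `head` and `tail` would need to be atomics with release/acquire ordering; this sketch does not model memory ordering at all.

```python
FULL = object()   # sentinel: put() found the buffer full
EMPTY = object()  # sentinel: get() found the buffer empty
OK = object()     # sentinel: put() stored the item


class SpscRing:
    """Bounded buffer for exactly one producer and one consumer.
    head and tail only grow; slots are indexed modulo the capacity."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.buffer = [None] * capacity
        self.head = 0   # written only by get()
        self.tail = 0   # written only by put()

    def put(self, item):
        if self.tail - self.head == self.capacity:
            return FULL                                # never waits
        self.buffer[self.tail % self.capacity] = item  # fill the slot first...
        self.tail += 1                                 # ...then publish it
        return OK

    def get(self):
        if self.tail - self.head == 0:
            return EMPTY                               # never waits
        item = self.buffer[self.head % self.capacity]  # read the slot first...
        self.head += 1                                 # ...then release it
        return item


ring = SpscRing(2)
print(ring.put("a") is OK, ring.put("b") is OK, ring.put("c") is FULL)  # True True True
print(ring.get(), ring.get(), ring.get() is EMPTY)                      # a b True
```

Neither operation ever blocks; a caller that sees `FULL` or `EMPTY` decides for itself whether to retry. With two producers, both could read the same `tail` and write the same slot. That is exactly the "multiple producers/consumers?" problem the slide raises.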

![Transactional memory
• Mark sequences of instructions as an atomic transaction, e.g., the resulting producer code:
atomic {
  if (tail-head == MAX) {
    return full;
  }
  items[tail%MAX] = item;
  tail++;
}
return ok;
• A transaction can be either committed or aborted
  • Committed transactions are serializable
  • Moreover, aborted/incomplete transactions are consistent (opacity/local serializability)
  • Let the transactional memory (TM) take care of conflicts
  • Easy to program, but performance may be problematic](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-26-320.jpg)
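
To illustrate commit and abort, here is a toy optimistic TM in Python. `TinySTM`, `atomically`, `put` and `get` are hypothetical names, and this is not how production TMs are built. Each transaction runs on a consistent snapshot and buffers its writes. At commit time it validates that no other transaction has committed in the meantime; otherwise it aborts and retries. The single global version and commit lock make it easy to reason about but do not scale.

```python
import threading


class TinySTM:
    """Toy optimistic transactional memory (illustrative only)."""

    def __init__(self, initial):
        self._lock = threading.Lock()  # guards _state, _version and aborts
        self._state = dict(initial)
        self._version = 0              # bumped by every committed update
        self.aborts = 0

    def atomically(self, body):
        """Run body(snapshot) -> (result, writes) until it commits; return result."""
        while True:
            with self._lock:
                start = self._version
                snapshot = dict(self._state)  # every transaction sees a consistent state
            result, writes = body(snapshot)
            if not writes:
                return result                 # read-only: serialized at snapshot time
            with self._lock:
                if self._version == start:    # validate: no conflicting commit
                    self._state.update(writes)
                    self._version += 1
                    return result
                self.aborts += 1              # conflict: abort and retry


def put(tm, item, capacity=4):
    """The slide's producer code as one transaction."""
    def body(s):
        if s["tail"] - s["head"] == capacity:
            return "full", {}
        return "ok", {("items", s["tail"] % capacity): item, "tail": s["tail"] + 1}
    return tm.atomically(body)


def get(tm, capacity=4):
    """A matching consumer transaction."""
    def body(s):
        if s["tail"] - s["head"] == 0:
            return "empty", {}
        return s[("items", s["head"] % capacity)], {"head": s["head"] + 1}
    return tm.atomically(body)


tm = TinySTM({"head": 0, "tail": 0})
print([put(tm, i) for i in range(5)])  # ['ok', 'ok', 'ok', 'ok', 'full']
print([get(tm) for _ in range(5)])     # [0, 1, 2, 3, 'empty']
```

Unlike the non-blocking buffer, the producer and consumer bodies here are safe with any number of threads. The price is that conflicting transactions are aborted and re-executed, which is the performance concern noted on the slide.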

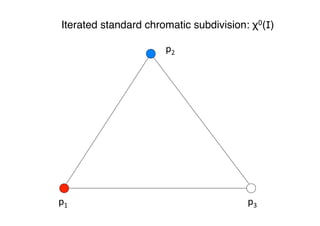

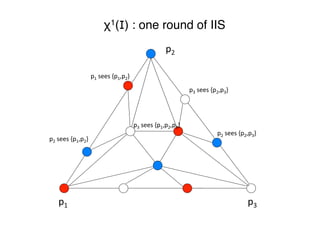

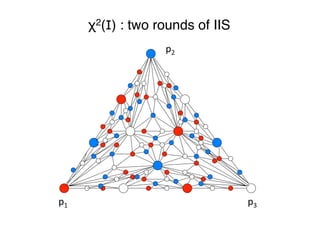

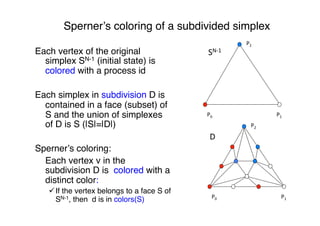

![An easy story to tell
Task solvability in the iterated immediate snapshot (IIS) memory model
• Processes proceed in rounds
• In each round, a new memory is accessed:
  • Write the current state
  • Get a snapshot of the memory
  • Equivalent to the standard (non-iterated) read-write model [BGK14]
• Visualized via the standard chromatic subdivision χ^k(I) [BG97,Lin09,Koz14]](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-35-320.jpg)
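
The round structure can be made concrete by enumeration. One immediate-snapshot round is determined by an ordered partition of the processes into concurrency classes. Processes in the same class write together, and each of them then sees exactly the classes up to and including its own. The sketch below assumes full information (a process's new state is its whole view). It only enumerates runs in which every process takes part in every round; crashes are not modeled. All function names are hypothetical. The distinct final configurations correspond to the facets of χ^k applied to one input simplex: 3 per round for two processes, 13 for one round of three processes.

```python
from itertools import combinations


def ordered_partitions(procs):
    """Yield every ordered partition of procs into non-empty blocks
    (the concurrency classes of one immediate-snapshot round)."""
    procs = tuple(procs)
    if not procs:
        yield ()
        return
    for size in range(1, len(procs) + 1):
        for first in combinations(procs, size):
            rest = tuple(p for p in procs if p not in first)
            for tail in ordered_partitions(rest):
                yield (frozenset(first),) + tail


def immediate_snapshot_round(states, schedule):
    """One IIS round on a fresh memory: each block writes its states together,
    then every member of the block sees all blocks up to and including its own."""
    seen, new_states = set(), {}
    for block in schedule:
        seen |= block
        view = frozenset((p, states[p]) for p in seen)
        for p in block:
            new_states[p] = view   # full information: the new state is the view
    return new_states


def iis_configurations(inputs, rounds):
    """Distinct final configurations over all schedules in which every
    process participates in every round."""
    configs = [dict(inputs)]
    for _ in range(rounds):
        configs = [immediate_snapshot_round(states, sched)
                   for states in configs
                   for sched in ordered_partitions(sorted(states))]
    return {frozenset(c.items()) for c in configs}


print(len(iis_configurations({0: "x", 1: "y"}, 1)))          # 3
print(len(iis_configurations({0: "x", 1: "y"}, 2)))          # 9
print(len(iis_configurations({0: "x", 1: "y", 2: "z"}, 1)))  # 13
```

For two processes, each round splits every edge into three edges: p0 first, p1 first, or both together. This is the picture of the standard chromatic subdivision of an edge.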

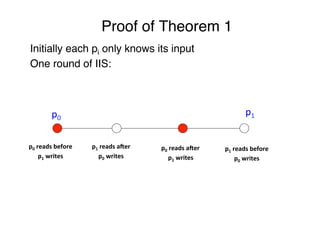

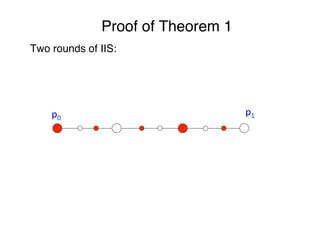

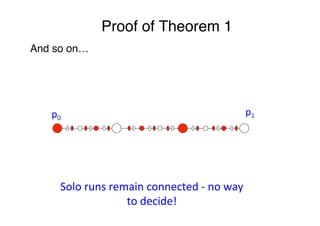

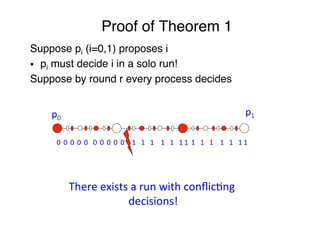

![Impossibility of wait-free consensus [FLP85,LA87]
Theorem 1: No wait-free algorithm solves consensus
We give the proof for N=2, assuming that p0 proposes 0 and p1 proposes 1
This implies the claim for all N≥2](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-39-320.jpg)
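
The two-process case can be checked by brute force for small round counts. This is an illustration, not a proof: it only covers algorithms that run a fixed number of IIS rounds (1 or 2 here) and then decide from their local state. The theorem covers every wait-free algorithm. The sketch tries every decision map on local states and imposes three conditions. A process that never sees the other must decide its own input (p0 decides 0, p1 decides 1). In every execution, both processes must decide the same value. All names are hypothetical.

```python
from itertools import product


def one_round(s0, s1):
    """All outcomes of one 2-process immediate-snapshot round from local
    states (s0, s1); a new local state is the set of (pid, state) pairs seen."""
    both = frozenset({(0, s0), (1, s1)})
    return [
        (frozenset({(0, s0)}), both),  # p0 runs first, then p1
        (both, frozenset({(1, s1)})),  # p1 runs first, then p0
        (both, both),                  # p0 and p1 run concurrently
    ]


def final_configurations(rounds, inputs=(0, 1)):
    """Final (p0 state, p1 state) pairs: p0 proposes 0, p1 proposes 1."""
    configs = [inputs]
    for _ in range(rounds):
        configs = [nxt for s0, s1 in configs for nxt in one_round(s0, s1)]
    return configs


def solo_state(pid, value, rounds):
    """Local state of a process that never sees the other one."""
    state = value
    for _ in range(rounds):
        state = frozenset({(pid, state)})
    return state


def consensus_decision_map_exists(rounds):
    """Search all maps from local states to {0, 1} for one satisfying
    validity in solo runs and agreement in every execution."""
    configs = final_configurations(rounds)
    vertices = list({(pid, cfg[pid]) for cfg in configs for pid in (0, 1)})
    for values in product((0, 1), repeat=len(vertices)):
        decide = dict(zip(vertices, values))
        if decide[(0, solo_state(0, 0, rounds))] != 0:  # p0 alone must decide 0
            continue
        if decide[(1, solo_state(1, 1, rounds))] != 1:  # p1 alone must decide 1
            continue
        if all(decide[(0, c0)] == decide[(1, c1)] for c0, c1 in configs):
            return True
    return False


for r in (1, 2):
    print(r, consensus_decision_map_exists(r))  # prints "1 False", then "2 False"
```

The search fails for a topological reason. The subdivided edge stays a connected path from p0's solo state, which must decide 0, to p1's solo state, which must decide 1. So some edge on that path joins two different decisions, and that edge is an execution that violates agreement.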

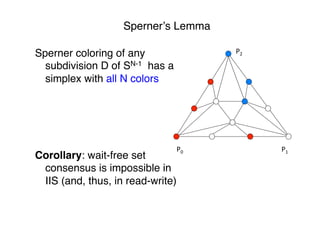

![Characterization of wait-free task solvability
Asynchronous computability theorem [HS93]
A task (I,O,Δ) is wait-free RW solvable if and only if there is a chromatic simplicial map from a subdivision χ^k(I), for some k, to O carried by Δ
Wait-free task computability is reduced to the existence of continuous maps between geometrical objects](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-49-320.jpg)
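
Spelled out, "chromatic" and "carried by Δ" are the two conditions below. Here carrier(σ) denotes the smallest simplex of I whose subdivision contains σ. This is a restatement for reference, in notation chosen here rather than taken from the slide.

```latex
\text{A task } (I, O, \Delta) \text{ is wait-free read-write solvable}
\iff
\exists\, k \ge 0,\ \exists\, \mu : \chi^k(I) \to O \text{ simplicial such that}
\begin{cases}
\mathrm{color}(\mu(v)) = \mathrm{color}(v) & \text{for every vertex } v \text{ of } \chi^k(I) \quad \text{(chromatic)},\\[2pt]
\mu(\sigma) \in \Delta(\mathrm{carrier}(\sigma)) & \text{for every simplex } \sigma \text{ of } \chi^k(I) \quad \text{(carried by } \Delta\text{)}.
\end{cases}
```

Read operationally: k is the number of IIS rounds, and μ is the decision function applied to the local state reached after those rounds.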

![1-resilient consensus? k-resilient k-set agreement?
NO
Operational model equivalence:
• t-resilient N-process ⇔ wait-free (t+1)-process [BG93] (for colorless tasks, e.g., k-set agreement)
[Main tool: protocol simulations]](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-50-320.jpg)
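
Both NO answers follow from the simulation equivalence combined with one known fact. Wait-free k-set agreement among n processes is solvable exactly when k ≥ n: trivially if each process decides its own input, and impossibly otherwise. A short derivation:

```latex
\begin{aligned}
&\text{$k$-set agreement is $t$-resilient solvable by $N > t$ processes}\\
&\quad\iff \text{$k$-set agreement is wait-free solvable by $t+1$ processes} && \text{[BG93]}\\
&\quad\iff k \ge t + 1 && \text{(wait-free $n$-process $k$-set agreement is solvable iff $k \ge n$)}\\[4pt]
&\text{1-resilient consensus } (k = 1,\ t = 1):\quad 1 \ge 2 \text{ is false} \;\Rightarrow\; \text{NO}\\
&\text{$k$-resilient $k$-set agreement } (t = k):\quad k \ge k + 1 \text{ is false} \;\Rightarrow\; \text{NO}
\end{aligned}
```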

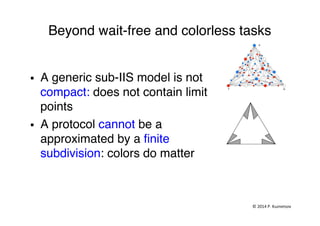

![What about…
• Generic sub-models of RW
  • Many problems cannot be solved wait-free
  • So restrictions (sub-models) of RW were considered
  • E.g., adversarial models [DFGT09]: specifying the possible correct sets
    • Non-uniform/correlated fault probabilities
    • Hitting set of size 1: a leader can be elected](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-51-320.jpg)
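
An adversary can be represented as the list of its possible correct (live) sets; that representation is an assumption of this sketch. A hitting set is a set of processes that intersects every live set. A hitting set of size 1 is a process present in every live set. That process never crashes in any run, so everyone can safely follow it as leader. The hypothetical helpers below compute the minimum hitting sets and the reliable leader, if any. They only check the condition stated on the slide, not the general solvability results of [DFGT09].

```python
from itertools import combinations


def min_hitting_sets(live_sets):
    """All minimum-size sets of processes that intersect every live set."""
    processes = sorted(set().union(*live_sets))
    for size in range(1, len(processes) + 1):
        hits = [set(c) for c in combinations(processes, size)
                if all(set(c) & s for s in live_sets)]
        if hits:
            return hits
    return []


def reliable_leader(live_sets):
    """A process belonging to every live set never crashes, so it can serve
    as a leader; return one such process, or None if there is none."""
    common = set.intersection(*map(set, live_sets))
    return min(common) if common else None


adversary = [{"p1", "p2"}, {"p1", "p3"}, {"p1", "p2", "p3"}]
print(min_hitting_sets(adversary))  # [{'p1'}]
print(reliable_leader(adversary))   # p1
```

For contrast, the wait-free adversary on three processes (every non-empty subset may be the correct set) has only the full set {p1, p2, p3} as a hitting set. The 1-resilient adversary on three processes (any set of at least two) has minimum hitting sets of size 2. Neither has a guaranteed-correct leader.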

![Terminating subdivisions [GKM14]
A (possibly infinite) subdivision that allows processes to stabilize (models outputs): stable simplices stop subdividing
A terminating subdivision must be compatible with the model: every run eventually lands in a stable simplex](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-53-320.jpg)

![Generalized asynchronous computability theorem [GKM14]
A task (I,O,Δ) is solvable in a sub-IIS model M if and only if there exists a terminating subdivision T of I (compatible with M) and a chromatic simplicial map from the stable simplices of T to O carried by Δ
Topological characterization of every task facing every (crash) adversary?
What about stronger ones? What about long-lived problems?](https://image.slidesharecdn.com/20141223kuznetsovdistributed-141223153633-conversion-gate02/85/20141223-kuznetsov-distributed-54-320.jpg)