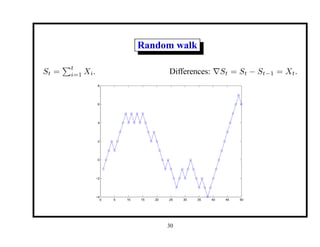

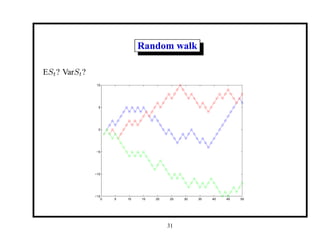

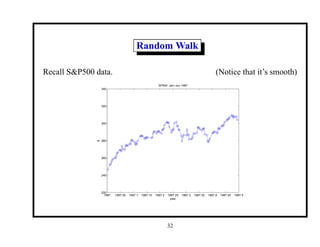

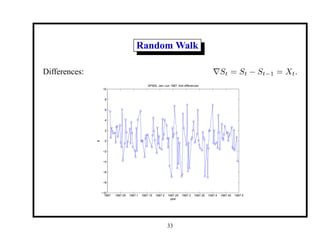

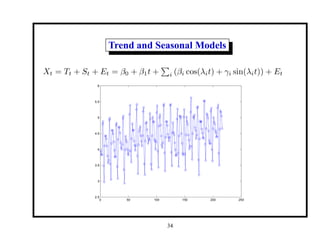

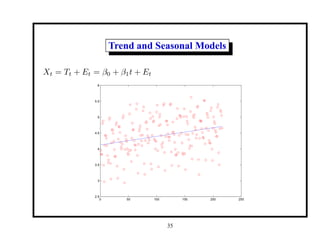

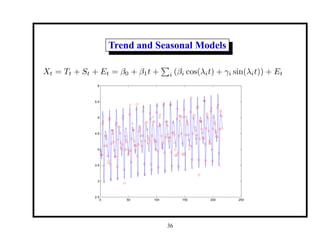

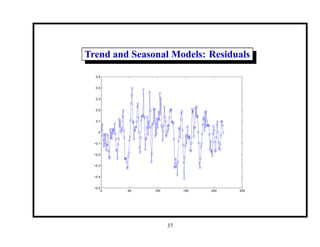

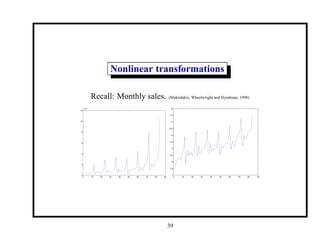

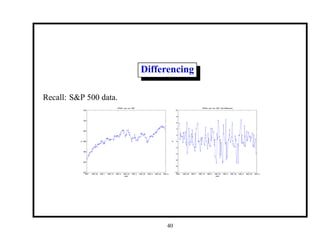

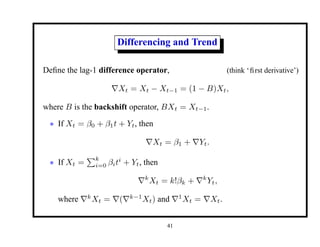

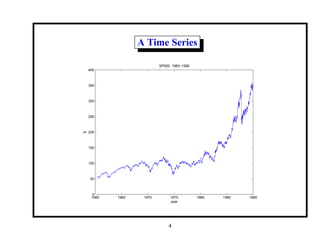

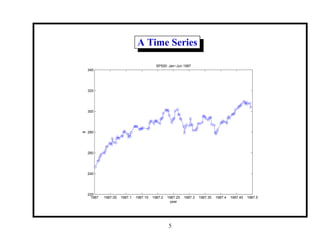

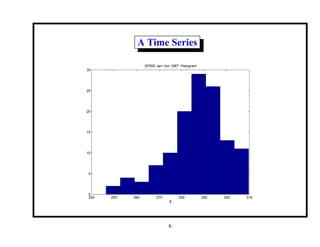

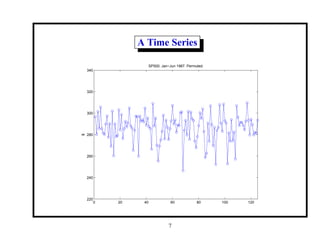

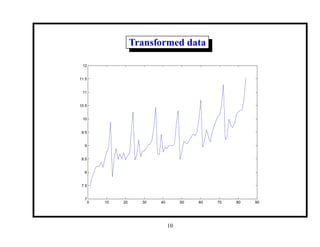

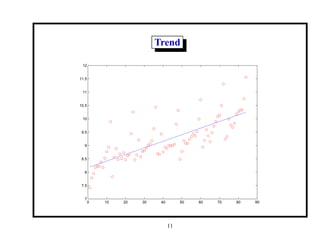

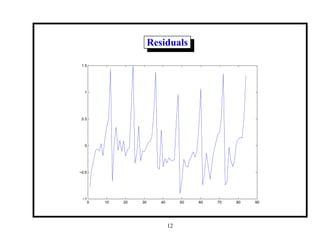

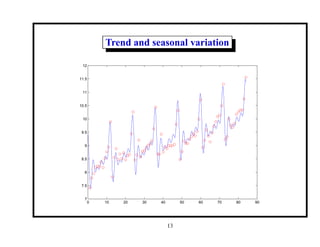

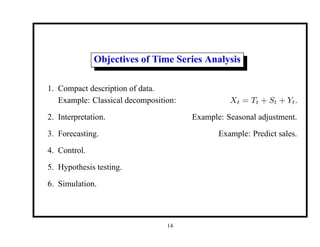

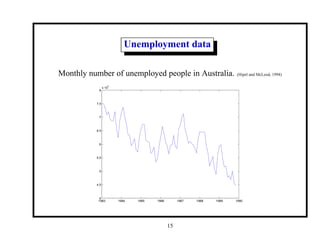

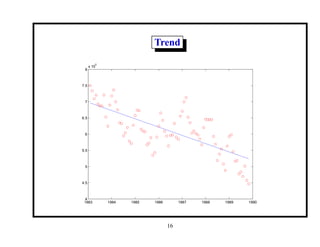

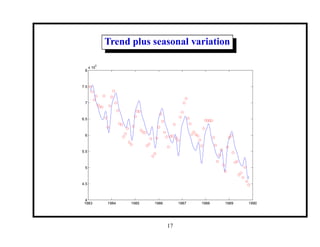

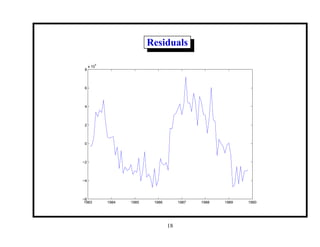

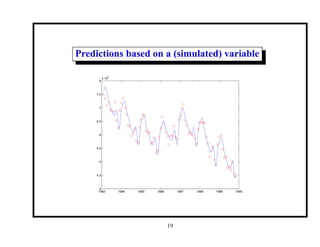

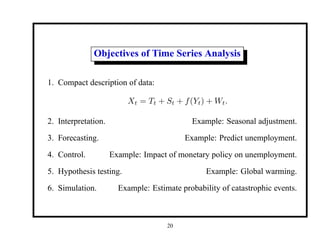

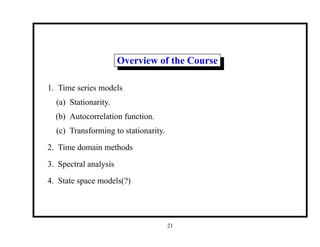

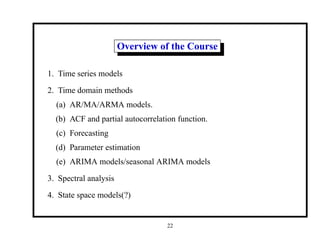

This document provides an introduction and overview of a time series analysis course. It discusses the objectives of time series analysis, including compact description of data, interpretation, forecasting, control, hypothesis testing, and simulation. Examples of decomposing time series into trend, seasonal, and residual components are presented. The course will cover time series models, time domain methods like ARMA modeling, spectral analysis, and state space models. Stationarity and autocorrelation are introduced, and differencing and nonlinear transformations are discussed as ways to make a time series stationary.

![Slide 25](https://image.slidesharecdn.com/1notes-211018172623/85/1notes-25-320.jpg)

Time Series Models

A time series model specifies the joint distribution of the sequence $\{X_t\}$ of random variables.

For example: $P[X_1 \le x_1, \ldots, X_t \le x_t]$ for all $t$ and $x_1, \ldots, x_t$.

Notation:

$X_1, X_2, \ldots$ is a stochastic process.

$x_1, x_2, \ldots$ is a single realization.

We'll mostly restrict our attention to second-order properties only: $E X_t$, $E(X_{t_1} X_{t_2})$.
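The distinction between the process and a single realization, and the two second-order quantities named above, can be illustrated with a small simulation. This is only a sketch: the toy process (i.i.d. standard normal), the function names `realization` and `ensemble_moments`, and the sample sizes are assumptions chosen for illustration, not part of the slides.

```python
import random

def realization(n, rng):
    """Draw one realization x_1, ..., x_n of a toy process.
    Assumption: the process is i.i.d. standard normal."""
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

def ensemble_moments(paths, t1, t2):
    """Estimate E X_{t1} and E(X_{t1} X_{t2}) by averaging over
    many independent realizations (indices are 0-based)."""
    m = len(paths)
    mean_t1 = sum(p[t1] for p in paths) / m
    second = sum(p[t1] * p[t2] for p in paths) / m
    return mean_t1, second

rng = random.Random(0)
paths = [realization(5, rng) for _ in range(20000)]

mean_0, second_00 = ensemble_moments(paths, 0, 0)  # E X_1 and E(X_1^2)
_, second_03 = ensemble_moments(paths, 0, 3)       # E(X_1 X_4)
print(mean_0, second_00, second_03)
```

For this toy process the three estimates should be close to 0, 1, and 0 respectively, since the variables have mean zero, unit variance, and are independent across time.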

![Slide 26](https://image.slidesharecdn.com/1notes-211018172623/85/1notes-26-320.jpg)

Time Series Models

Example: White noise: $X_t \sim WN(0, \sigma^2)$, i.e., $\{X_t\}$ uncorrelated, $E X_t = 0$, $\operatorname{Var} X_t = \sigma^2$.

Example: i.i.d. noise: $\{X_t\}$ independent and identically distributed.

$P[X_1 \le x_1, \ldots, X_t \le x_t] = P[X_1 \le x_1] \cdots P[X_t \le x_t]$.

Not interesting for forecasting:

$P[X_t \le x_t \mid X_1, \ldots, X_{t-1}] = P[X_t \le x_t]$.
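The claim that i.i.d. noise is not interesting for forecasting can be checked empirically: conditioning on the previous value should not change the distribution of the current one. The sketch below assumes uniform noise on $[-1, 1]$, conditions only on the sign of the previous value, and uses hypothetical helper names (`iid_noise`, `empirical_cdf`); it is a rough illustration rather than a formal test of independence.

```python
import random

def iid_noise(n, rng):
    """Assumption: i.i.d. noise drawn uniformly from [-1, 1]."""
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]

def empirical_cdf(values, x):
    """Fraction of values that are <= x."""
    return sum(v <= x for v in values) / len(values)

rng = random.Random(1)
xs = iid_noise(100000, rng)

# Values X_t whose predecessor X_{t-1} was positive.
after_positive = [xs[t] for t in range(1, len(xs)) if xs[t - 1] > 0]

marginal = empirical_cdf(xs, 0.5)                 # estimates P[X_t <= 0.5]
conditional = empirical_cdf(after_positive, 0.5)  # estimates P[X_t <= 0.5 | X_{t-1} > 0]
print(marginal, conditional)
```

Both estimates should be near 0.75, the exact value of $P[X_t \le 0.5]$ for this uniform distribution, so knowing the past gives no forecasting advantage.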

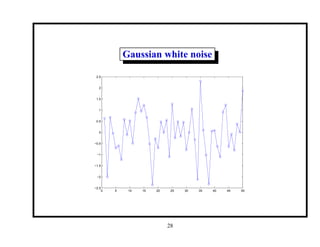

![Slide 27](https://image.slidesharecdn.com/1notes-211018172623/85/1notes-27-320.jpg)

Gaussian white noise

$$P[X_t \le x_t] = \Phi(x_t) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x_t} e^{-x^2/2}\, dx.$$

[Plot: Gaussian white noise; horizontal axis from 0 to 50, vertical axis from −2.5 to 2.5.]
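The distribution function $\Phi$ can be evaluated with the standard identity $\Phi(x) = \tfrac{1}{2}\bigl(1 + \operatorname{erf}(x/\sqrt{2})\bigr)$ and compared against simulated Gaussian white noise. This is a minimal sketch; the function names, the seed, the sample size, and the threshold of 1.0 are illustrative assumptions.

```python
import math
import random

def std_normal_cdf(x):
    """Phi(x), the standard normal CDF, computed via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def gaussian_white_noise(n, rng):
    """n independent draws from N(0, 1)."""
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

rng = random.Random(2)
xs = gaussian_white_noise(50000, rng)

threshold = 1.0
# Fraction of simulated values at or below the threshold.
empirical = sum(x <= threshold for x in xs) / len(xs)
print(std_normal_cdf(threshold), empirical)
```

The empirical fraction should be close to $\Phi(1) \approx 0.8413$, with the gap shrinking as the sample size grows.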

![Slide 29](https://image.slidesharecdn.com/1notes-211018172623/85/1notes-29-320.jpg)

Time Series Models

Example: Binary i.i.d. $P[X_t = 1] = P[X_t = -1] = 1/2$.

[Plot: binary i.i.d. noise; horizontal axis from 0 to 50, vertical axis from −1 to 1.]
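The binary example has mean $0$ and variance $1$ (because $X_t^2 = 1$ always), and independence implies uncorrelatedness, so it is also an instance of $WN(0, 1)$. A short simulation sketch follows; the function name `binary_iid`, the seed, and the sample size are assumptions made for illustration.

```python
import random

def binary_iid(n, rng):
    """n independent draws with P[X = 1] = P[X = -1] = 1/2."""
    return [rng.choice((-1, 1)) for _ in range(n)]

rng = random.Random(3)
xs = binary_iid(20000, rng)

sample_mean = sum(xs) / len(xs)                      # estimates E X_t = 0
second_moment = sum(x * x for x in xs) / len(xs)     # exactly 1, since x^2 = 1
# Lag-1 sample product moment, an estimate of E(X_t X_{t+1}) = 0.
lag1 = sum(xs[t] * xs[t + 1] for t in range(len(xs) - 1)) / (len(xs) - 1)
print(sample_mean, second_moment, lag1)
```

The sample mean and the lag-1 product moment should both be near zero, while the second moment equals 1 exactly for every sample.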