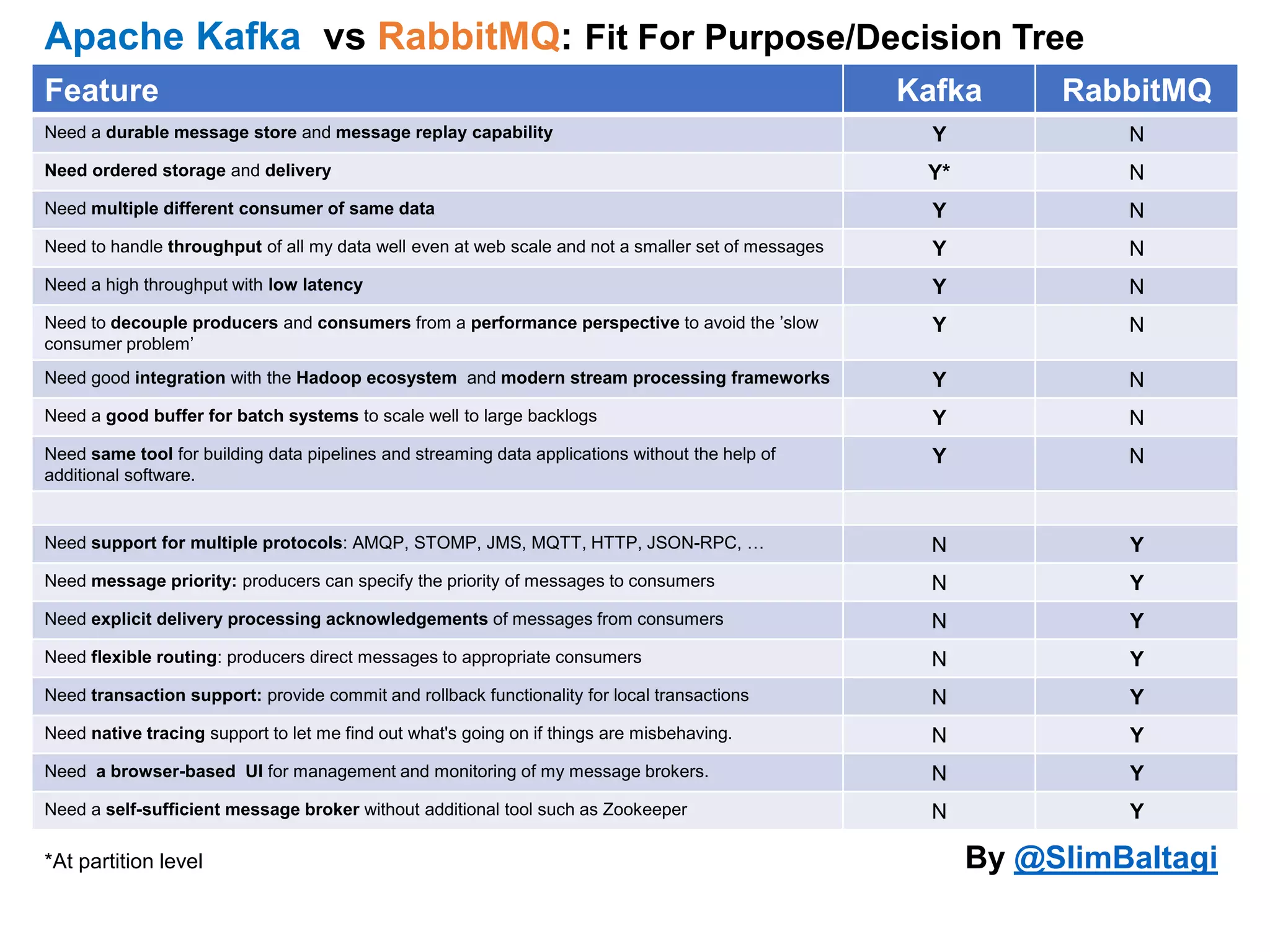

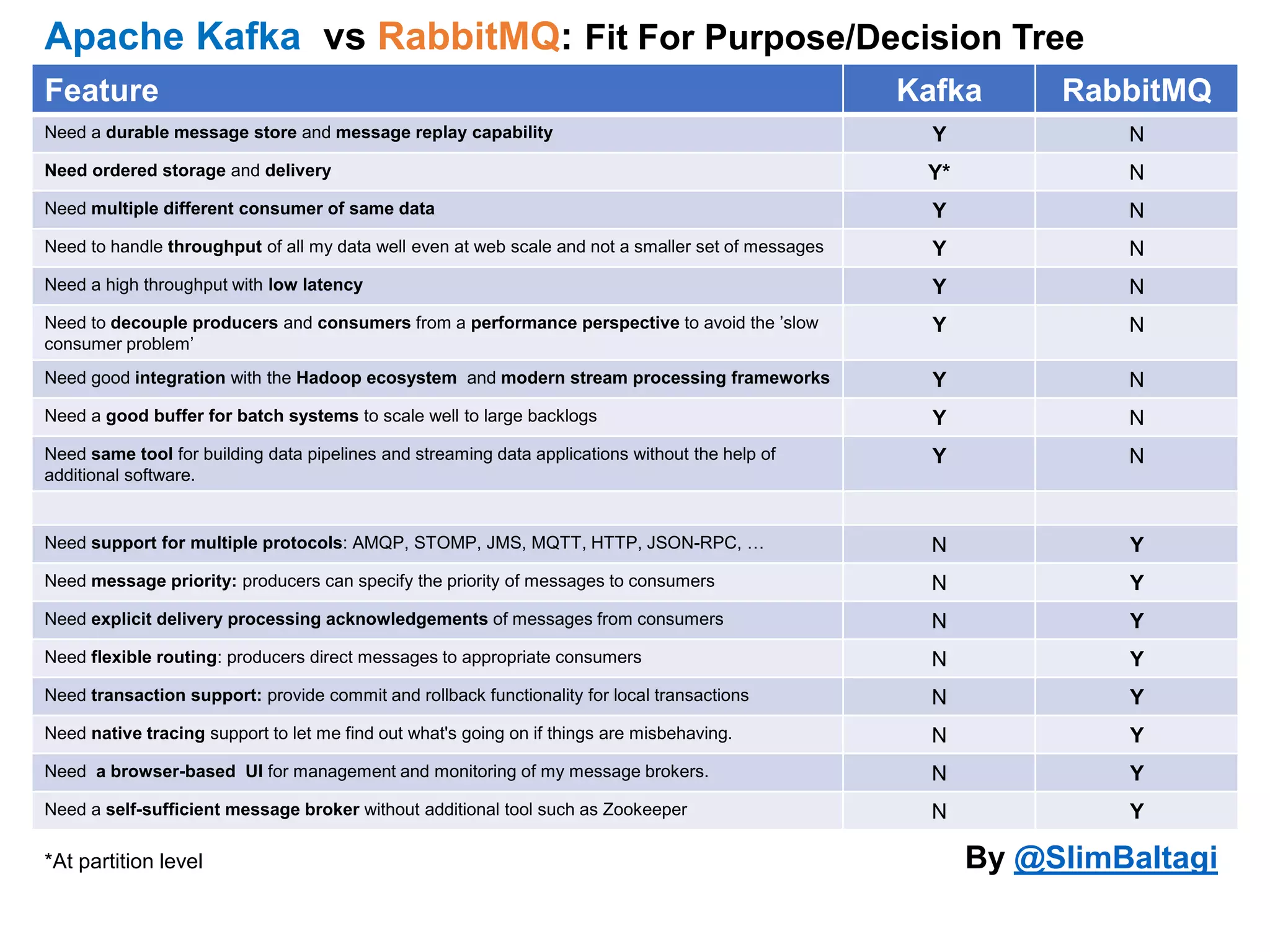

The presentation compares Apache Kafka and RabbitMQ using a decision tree built from message-handling requirements: durability, ordering, throughput, and integration. It also covers specific needs such as support for multiple protocols, message priority, transaction support, and whether the message broker is self-sufficient. Working through these features and capabilities shows which system better suits a given use case.
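
As a rough illustration of what a requirements-driven decision tree might look like, here is a minimal Python sketch. It is not taken from the slides: the `Requirements` fields, the `suggest_broker` function, and the order of the checks are all hypothetical. The branches rely only on commonly documented behavior, namely that RabbitMQ supports several protocols (AMQP, MQTT, STOMP) and priority queues, while Kafka is generally chosen for very high throughput.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical requirement flags; names are illustrative only."""
    multiple_protocols: bool = False  # clients speak AMQP, MQTT, STOMP, ...
    message_priority: bool = False    # per-message priority is needed
    high_throughput: bool = False     # sustained, very high message rates


def suggest_broker(req: Requirements) -> str:
    """Walk a tiny decision tree and return a suggested broker.

    Assumption: the checks and their order are a simplification,
    not the tree used in the presentation.
    """
    # RabbitMQ offers several protocols and priority queues.
    if req.multiple_protocols or req.message_priority:
        return "RabbitMQ"
    # Kafka is commonly picked when throughput dominates.
    if req.high_throughput:
        return "Apache Kafka"
    # No deciding requirement was given.
    return "either"


if __name__ == "__main__":
    print(suggest_broker(Requirements(message_priority=True)))
    print(suggest_broker(Requirements(high_throughput=True)))
```

A real evaluation would weigh more of the listed criteria (durability, ordering, transactions, operational dependencies) and would rarely reduce to a single branch.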