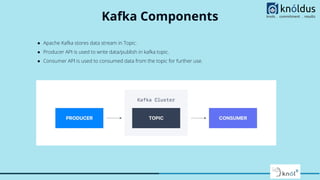

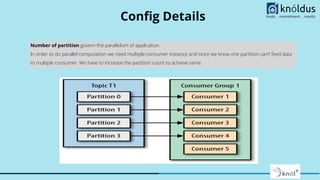

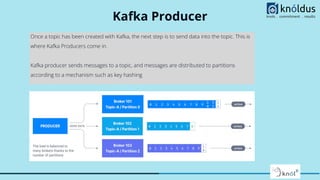

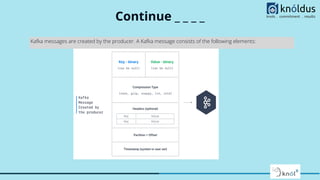

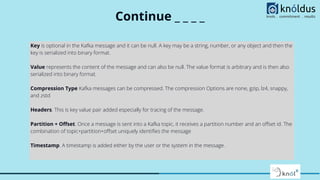

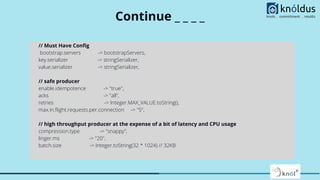

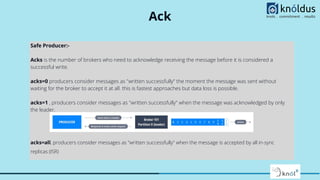

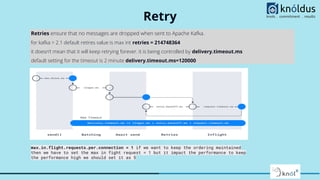

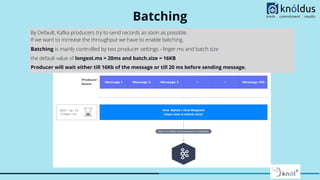

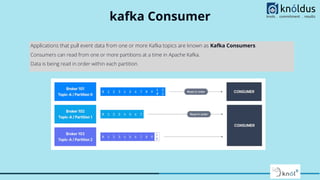

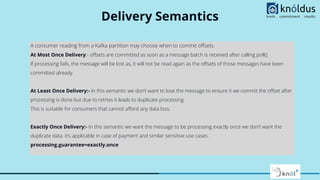

This document gives an overview of Apache Kafka for big data applications: its role in real-time data streaming, its main features, and its components. It covers the producer and consumer APIs, message configuration, delivery semantics, and operational settings such as replication and retention policies. It also includes etiquette guidelines for sessions and lists the key Kafka configurations for managing data.
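
To make the link between delivery semantics and configuration concrete, here is a minimal sketch of how the three usual guarantees are commonly mapped onto producer settings, plus one topic-level example for retention and replication. The keys (`acks`, `retries`, `enable.idempotence`, `transactional.id`, `retention.ms`, `min.insync.replicas`) are standard Kafka configuration names. The helper `producer_config`, the broker address, the transactional id, and every value are assumptions chosen for illustration, not settings taken from the slides.

```python
# Hypothetical helper: builds a producer config dict for a delivery guarantee.
# Nothing here talks to a broker; it only assembles settings.

def producer_config(semantics, bootstrap="localhost:9092"):
    """Return illustrative producer settings for the given delivery semantics."""
    config = {"bootstrap.servers": bootstrap}  # assumed local broker address
    if semantics == "at-most-once":
        # No acknowledgement and no retries: a message may be lost, never duplicated.
        config.update({"acks": "0", "retries": 0})
    elif semantics == "at-least-once":
        # Wait for all in-sync replicas and retry on failure: duplicates are possible.
        config.update({"acks": "all", "retries": 5})
    elif semantics == "exactly-once":
        # Idempotent, transactional producer; consumers would also need
        # isolation.level=read_committed to see only committed messages.
        config.update({
            "acks": "all",
            "enable.idempotence": True,
            "transactional.id": "demo-tx-1",  # assumed id, must be unique per producer
        })
    else:
        raise ValueError(f"unknown delivery semantics: {semantics}")
    return config


# Illustrative topic-level settings: keep data for 7 days and require
# 2 in-sync replicas before a write with acks=all is acknowledged.
topic_config = {
    "retention.ms": str(7 * 24 * 60 * 60 * 1000),
    "min.insync.replicas": "2",
}

if __name__ == "__main__":
    for mode in ("at-most-once", "at-least-once", "exactly-once"):
        print(mode, producer_config(mode))
    print("topic", topic_config)
```

A dictionary like this could be passed to a Kafka client library's producer constructor. The exact constructor and accepted value types depend on the client, so check its documentation before reusing these values.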