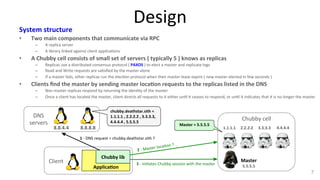

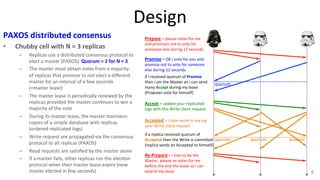

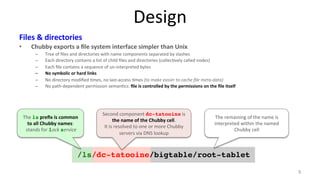

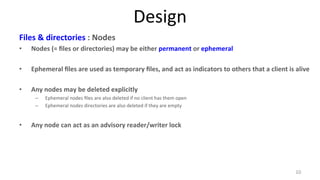

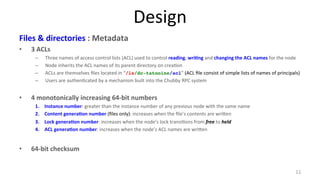

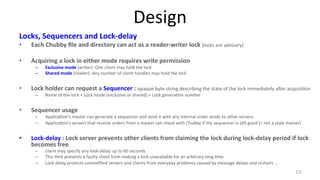

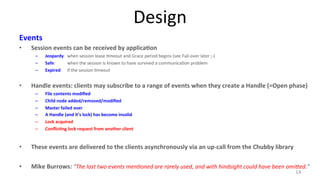

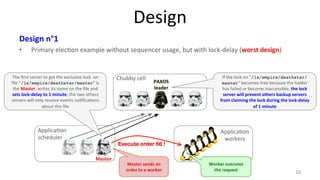

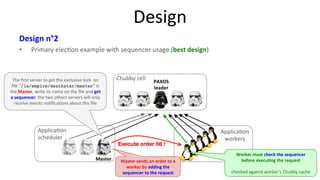

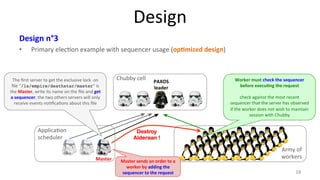

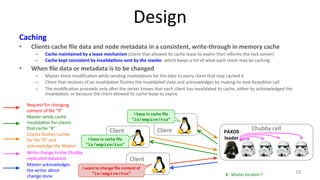

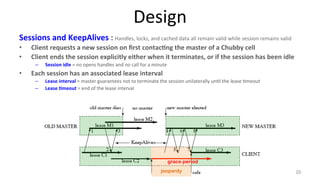

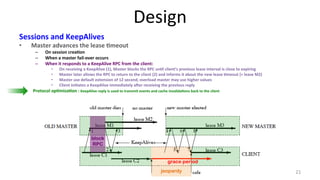

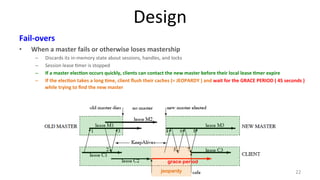

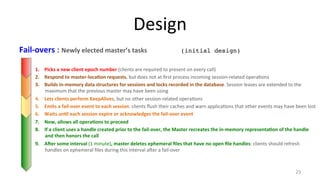

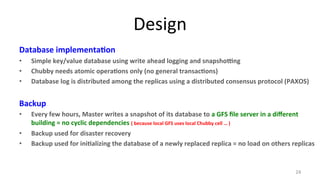

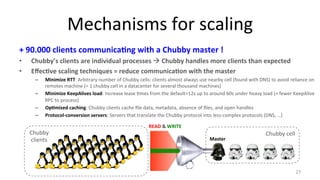

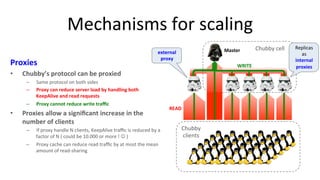

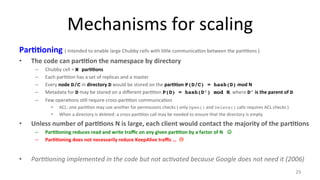

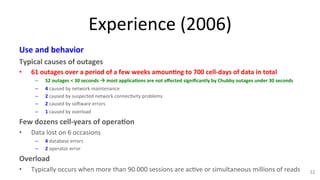

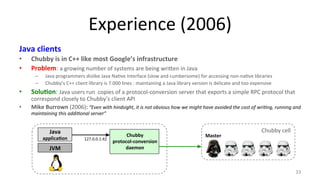

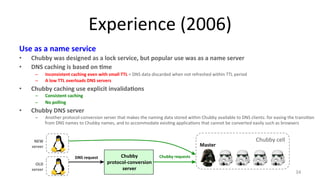

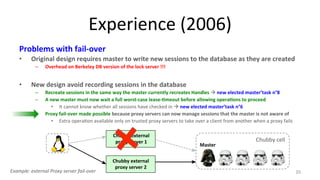

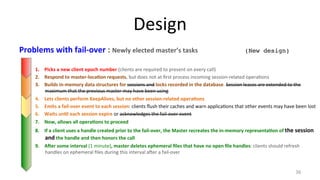

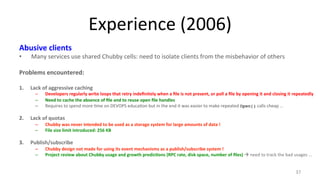

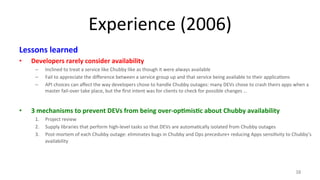

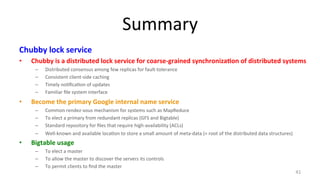

These slides cover Chubby, Google's lock service for loosely coupled distributed systems made up of thousands of machines. Chubby provides coarse-grained locking and reliable storage for small files through an interface that resembles a distributed file system. Its design favors availability and reliability over raw performance: a small set of server replicas stays consistent by running the Paxos distributed consensus protocol, and applications reach those servers through a client library. These servers and the client library are the two main components of the architecture. The slides also walk through Chubby's design decisions, file management, locking mechanisms, events, and session management.
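To make the idea of coarse-grained locking over a file-like namespace concrete, here is a minimal in-memory sketch of how clients might use a lock file to agree on a primary. It is not Chubby's API: `ToyLockService`, `try_acquire`, `set_contents`, `elect_primary`, and the path used below are all hypothetical names chosen for illustration, and the sketch leaves out replication, Paxos, sessions, and events entirely.

```python
class ToyLockService:
    """Hypothetical single-process stand-in for a lock service with a file-like namespace."""

    def __init__(self):
        self._contents = {}  # path -> bytes stored in the small file at that path
        self._holders = {}   # path -> id of the client holding the exclusive lock

    def try_acquire(self, path, client_id):
        """Take the exclusive lock on `path` if it is free; never blocks."""
        if path in self._holders:
            return False
        self._holders[path] = client_id
        return True

    def release(self, path, client_id):
        """Release the lock on `path`, but only if `client_id` holds it."""
        if self._holders.get(path) == client_id:
            del self._holders[path]

    def set_contents(self, path, data):
        # Assumption: locks are advisory here, so the service does not check
        # the lock before writing; cooperating clients acquire it first.
        self._contents[path] = data

    def get_contents(self, path):
        return self._contents.get(path)


def elect_primary(service, path, candidates):
    """Each candidate tries the lock; the winner records its identity in the file."""
    for candidate in candidates:
        if service.try_acquire(path, candidate):
            service.set_contents(path, candidate.encode())
    # Every client can then learn who the primary is by reading the file.
    return service.get_contents(path).decode()


service = ToyLockService()
lock_path = "/ls/example-cell/service/primary"  # hypothetical path
primary = elect_primary(service, lock_path, ["replica-a", "replica-b", "replica-c"])
print(primary)
```

The lock is coarse-grained in the sense that it is expected to be held for a long time (for as long as the winner remains primary) rather than acquired and released around individual operations.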