This document discusses using Kubernetes as an underlay platform for OpenStack. Some key points:

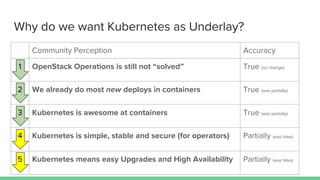

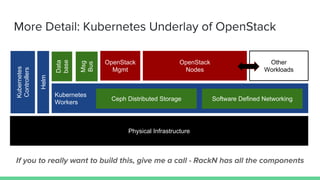

1. Kubernetes is becoming more widely used and better understood by operators than OpenStack. Running OpenStack on a Kubernetes underlay could make OpenStack simpler, more stable, and easier to upgrade.

2. Many technical challenges remain, including networking, storage, tooling to manage OpenStack on Kubernetes, and ensuring that containers meet Kubernetes' immutable infrastructure requirements.

3. Using Kubernetes as an underlay risks further confusing OpenStack's messaging by implying that Kubernetes is more stable than OpenStack or is a replacement for it. Clear communication will be needed to avoid undermining OpenStack.