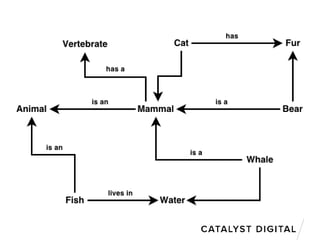

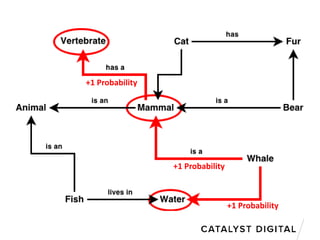

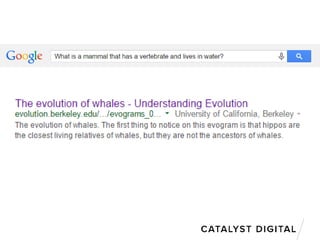

1) Semantic search relies on understanding the conceptual relationships between keywords rather than on exact matches, so SEOs must conduct more thorough semantic keyword research.
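A minimal sketch of the difference between exact matching and concept-level matching. It assumes tiny hand-made "concept" vectors: the `TOY_VECTORS` table and its values are hypothetical and exist only for illustration. Real semantic search systems use learned embeddings with many more dimensions.

```python
import math

# Hypothetical, hand-made 3-dimensional "concept" vectors (illustration only).
# Real systems would obtain these from a trained embedding model.
TOY_VECTORS = {
    "cheap flights": [0.9, 0.1, 0.0],
    "low-cost airfare": [0.85, 0.15, 0.05],
    "flight simulator": [0.2, 0.1, 0.9],
}


def exact_match(query: str, candidate: str) -> bool:
    """Keyword matching in its strictest form: strings must be identical."""
    return query == candidate


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def rank(query: str) -> list[str]:
    """Rank the other phrases by conceptual closeness to the query."""
    qv = TOY_VECTORS[query]
    others = [k for k in TOY_VECTORS if k != query]
    return sorted(others, key=lambda k: cosine(qv, TOY_VECTORS[k]), reverse=True)


print(exact_match("cheap flights", "low-cost airfare"))  # False
print(rank("cheap flights"))  # ['low-cost airfare', 'flight simulator']
```

Exact matching treats "cheap flights" and "low-cost airfare" as unrelated, while the vector comparison ranks them as near-synonyms and pushes "flight simulator" down despite its shared word.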

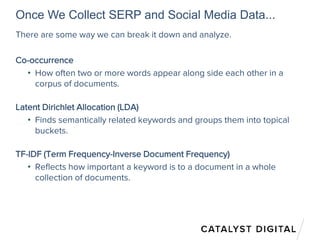

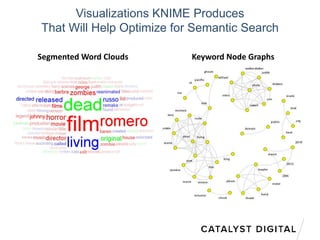

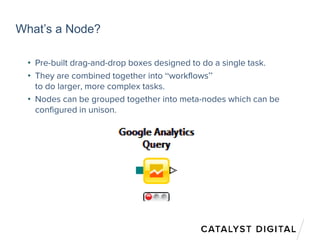

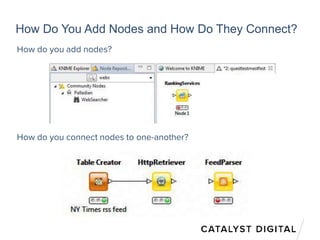

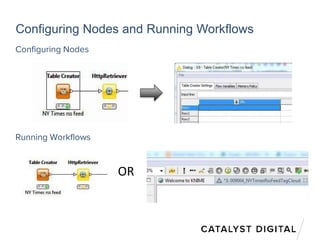

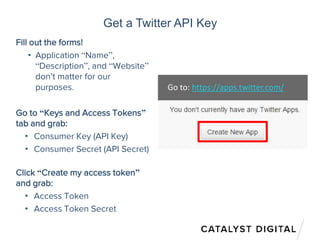

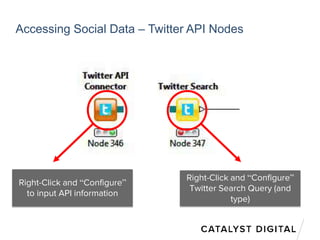

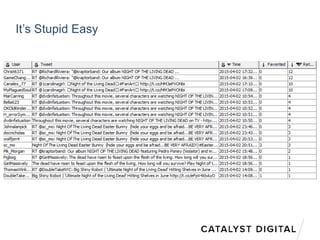

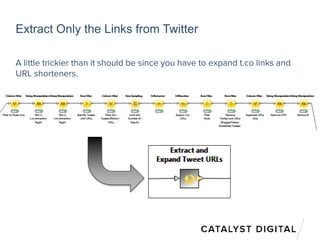

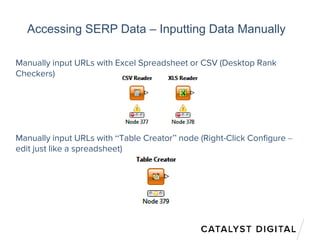

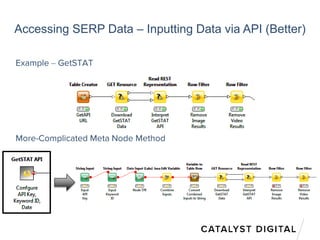

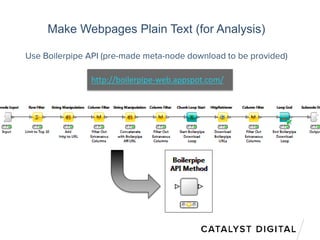

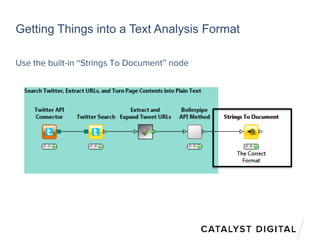

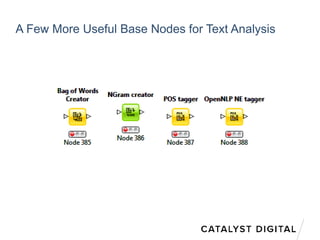

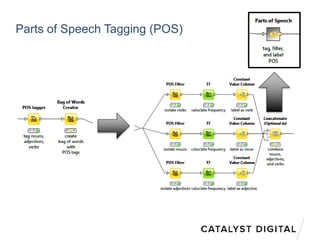

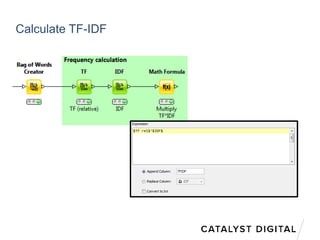

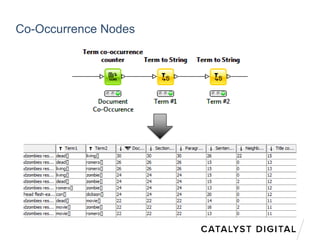

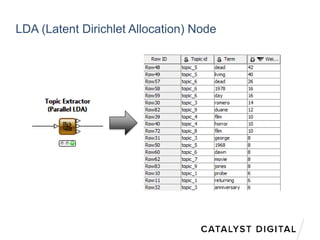

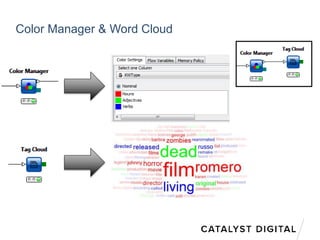

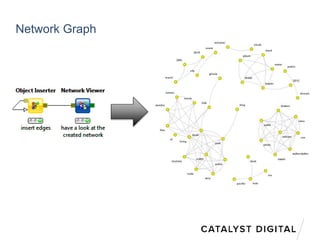

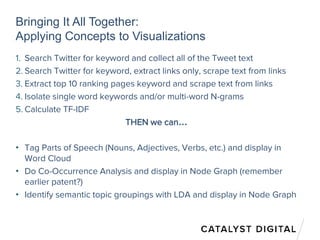

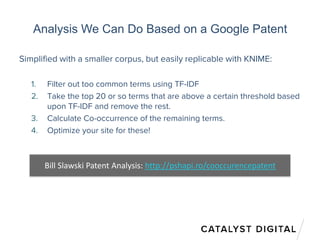

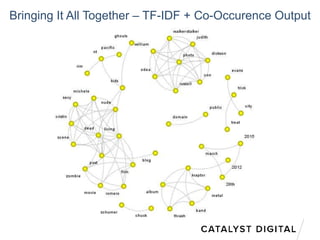

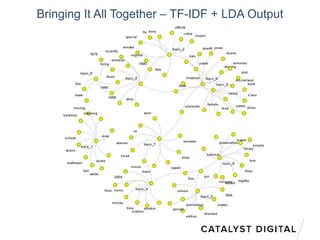

2) Tools such as KNIME let SEOs automate data collection from search engines, social media, and similar sources, analyze that data with techniques like TF-IDF (term frequency-inverse document frequency) and LDA (latent Dirichlet allocation) to group keywords by meaning, and visualize the relationships between them to guide on-page optimization.
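KNIME builds this kind of analysis as a visual workflow of nodes. The plain-Python sketch below is not KNIME's implementation: it reproduces only the TF-IDF step and then groups keywords greedily by cosine similarity. The example keywords, the `group_keywords` helper, and the 0.3 similarity threshold are all hypothetical choices for illustration, and LDA topic modeling is omitted for brevity.

```python
import math
from collections import Counter


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """Return one {term: weight} dict per document (normalized tf times smoothed idf)."""
    tokenized = [d.lower().split() for d in docs]
    n = len(tokenized)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({t: (count / len(tokens)) * idf[t] for t, count in tf.items()})
    return vectors


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse term-weight vectors."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def group_keywords(keywords: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Hypothetical greedy grouping: each keyword joins the first group whose
    seed keyword is at least `threshold` similar, otherwise it starts a new group."""
    vectors = tfidf_vectors(keywords)
    groups: list[tuple[int, list[str]]] = []  # (seed index, member keywords)
    for i, kw in enumerate(keywords):
        for seed, members in groups:
            if cosine(vectors[i], vectors[seed]) >= threshold:
                members.append(kw)
                break
        else:
            groups.append((i, [kw]))
    return [members for _, members in groups]


keywords = [
    "running shoes for women",
    "best running shoes",
    "trail running shoes",
    "vegan protein powder",
    "protein powder for weight loss",
]
for group in group_keywords(keywords):
    print(group)
# ['running shoes for women', 'best running shoes', 'trail running shoes']
# ['vegan protein powder', 'protein powder for weight loss']
```

The shared word "for" gives "protein powder for weight loss" a small similarity (about 0.21) to the shoe keywords, just below the threshold; removing stop words before computing TF-IDF would eliminate that spurious overlap.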

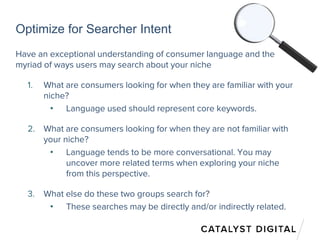

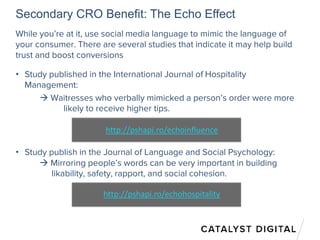

3) By understanding conceptual topics and how consumer language is used, SEOs can better optimize websites for searcher intent to perform well in semantic search.