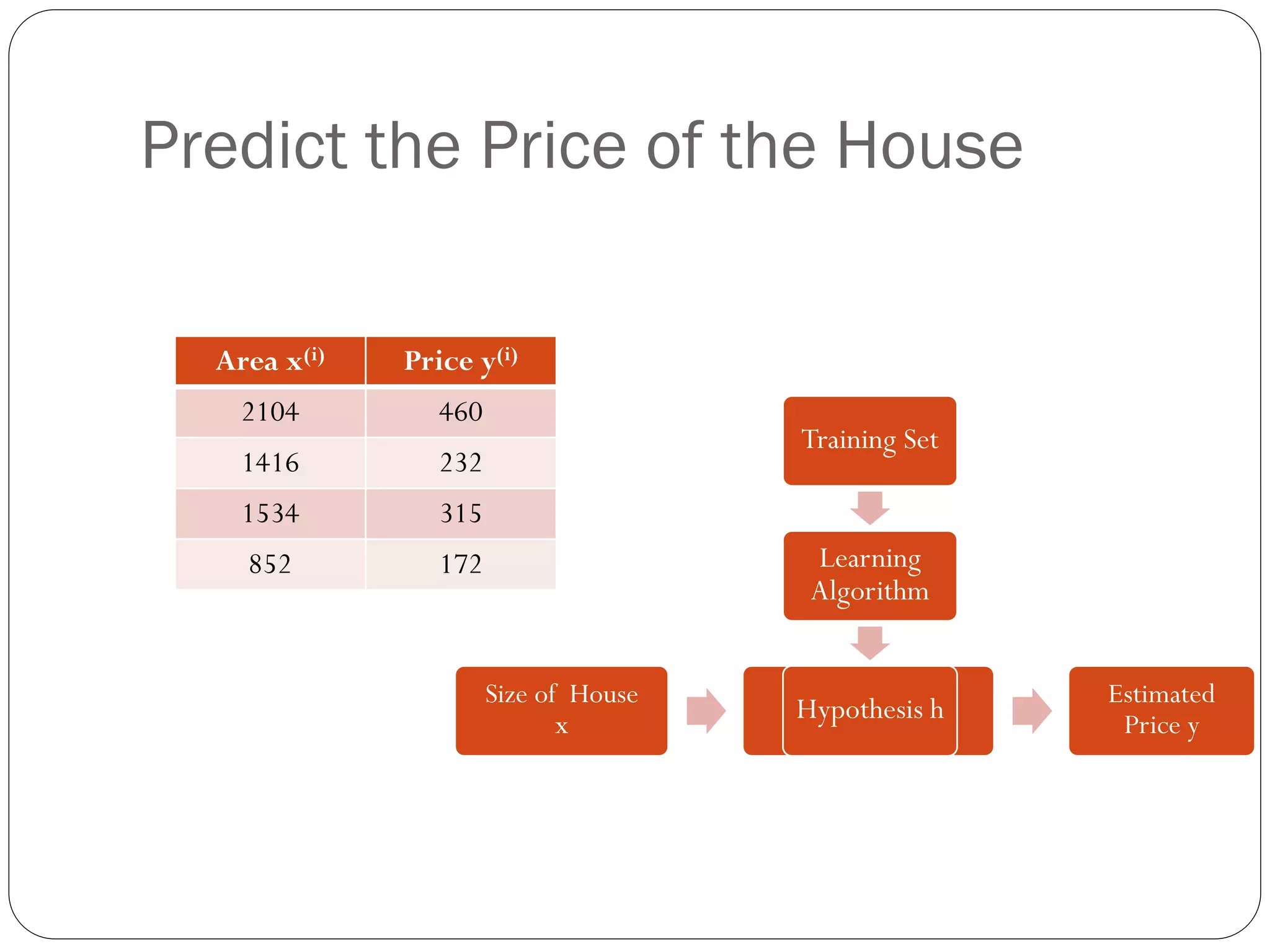

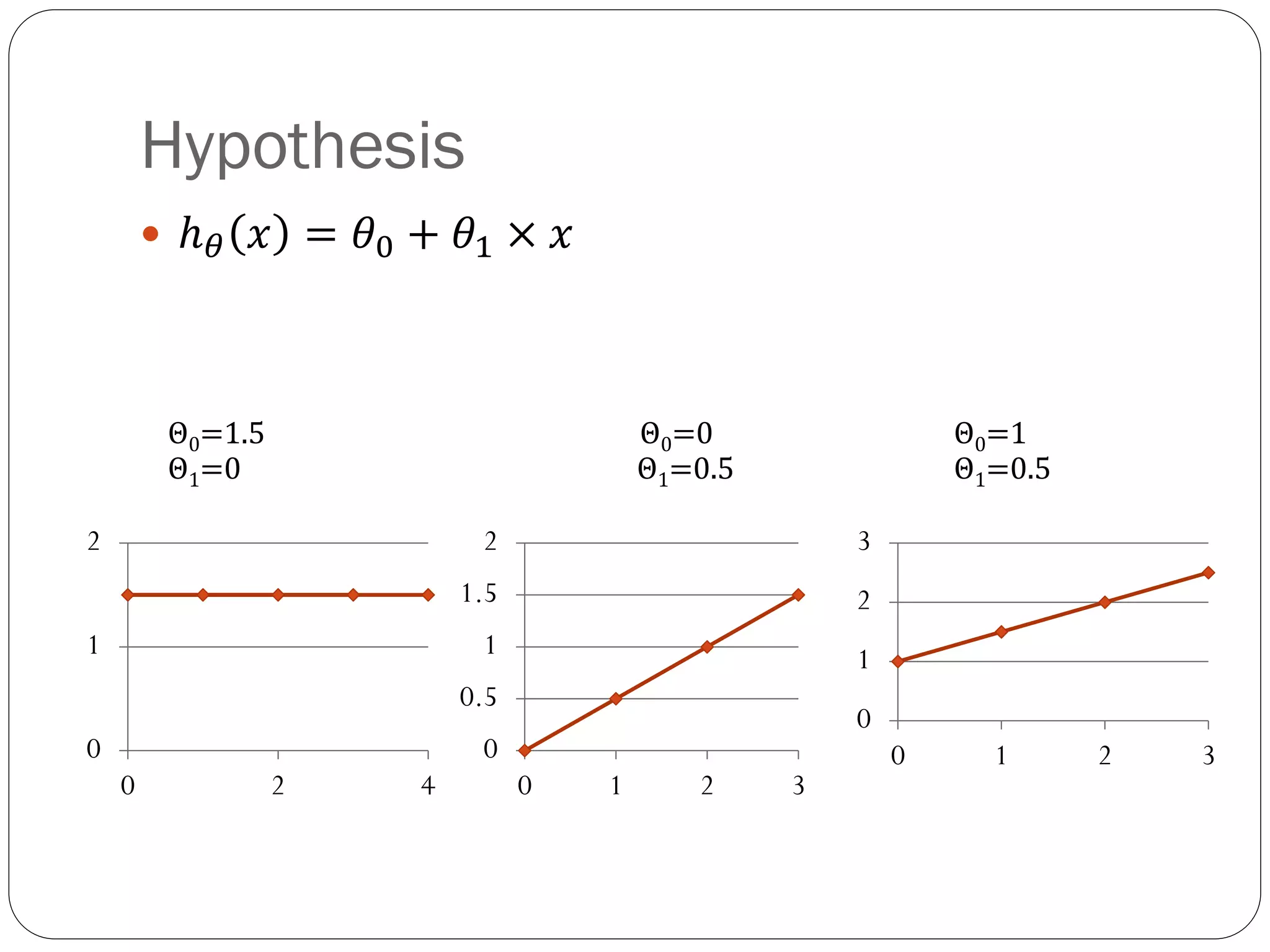

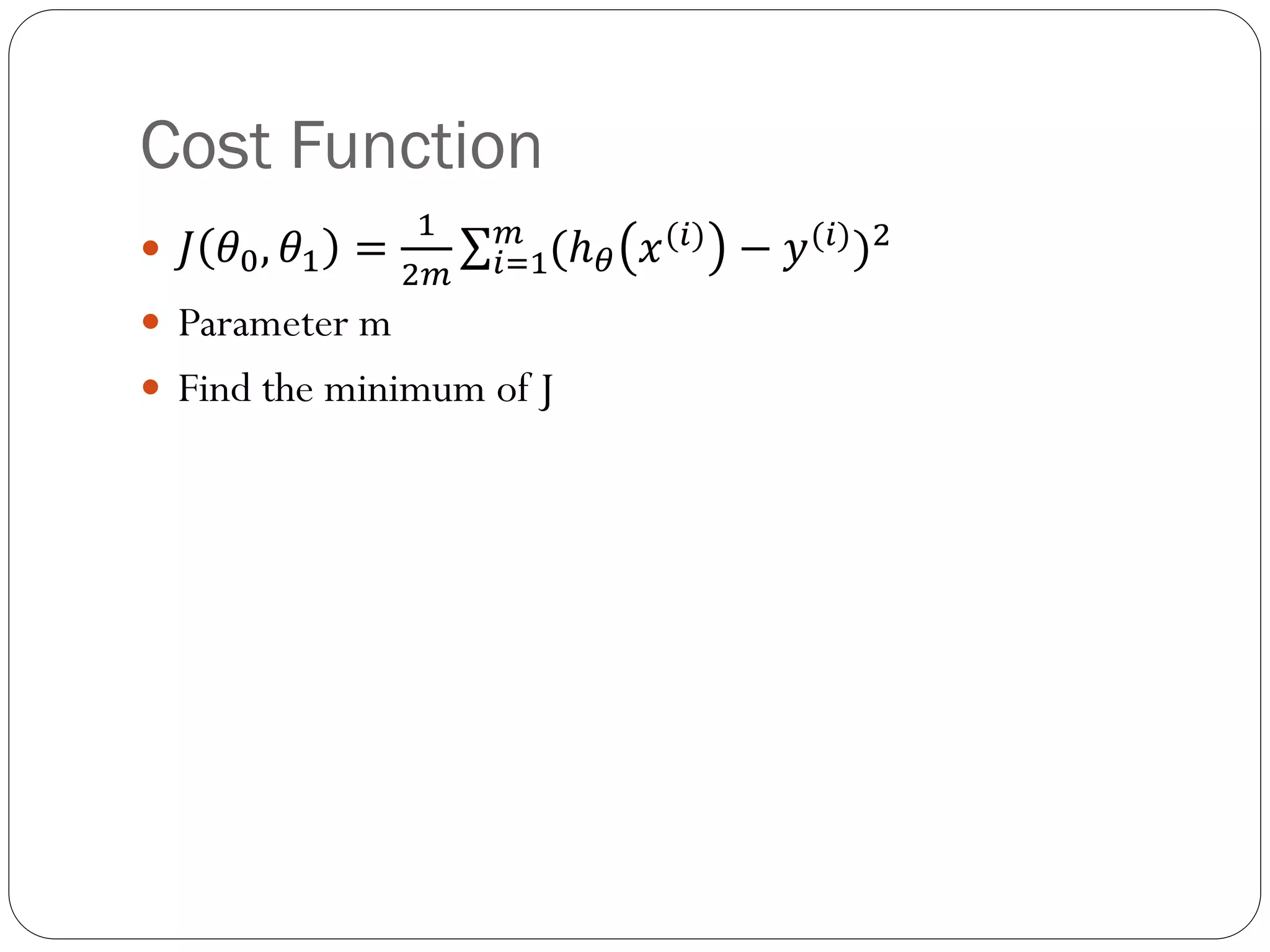

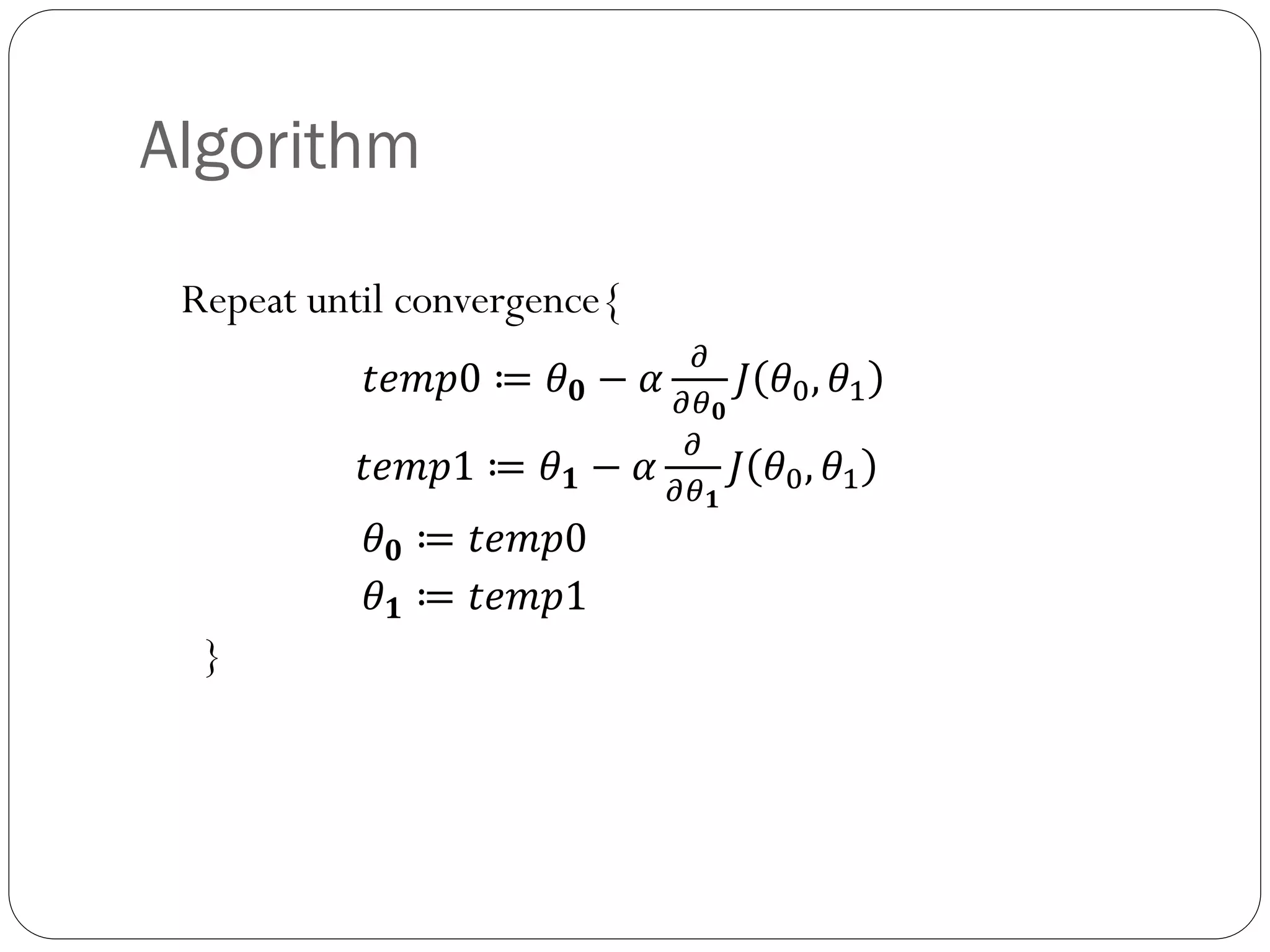

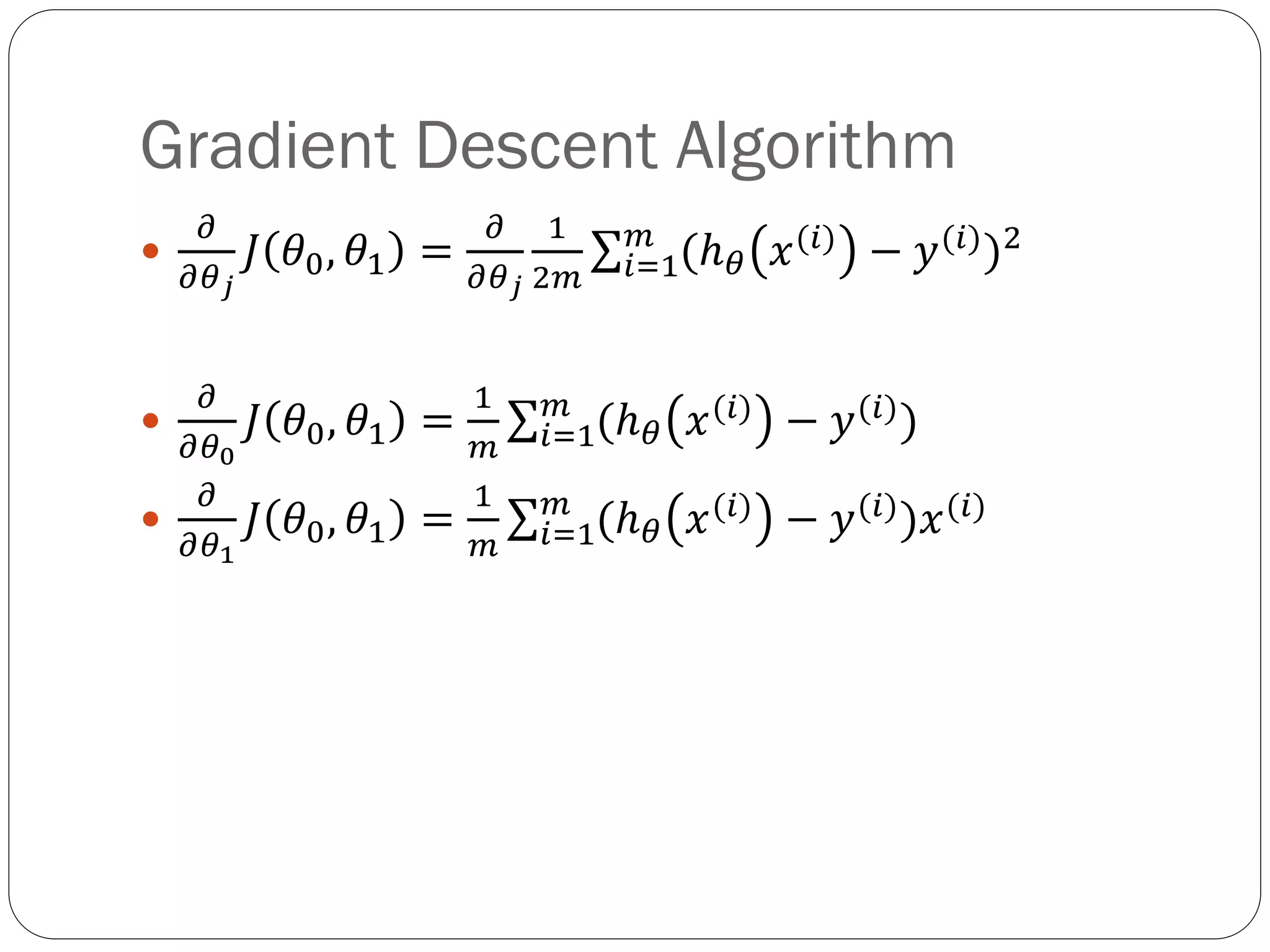

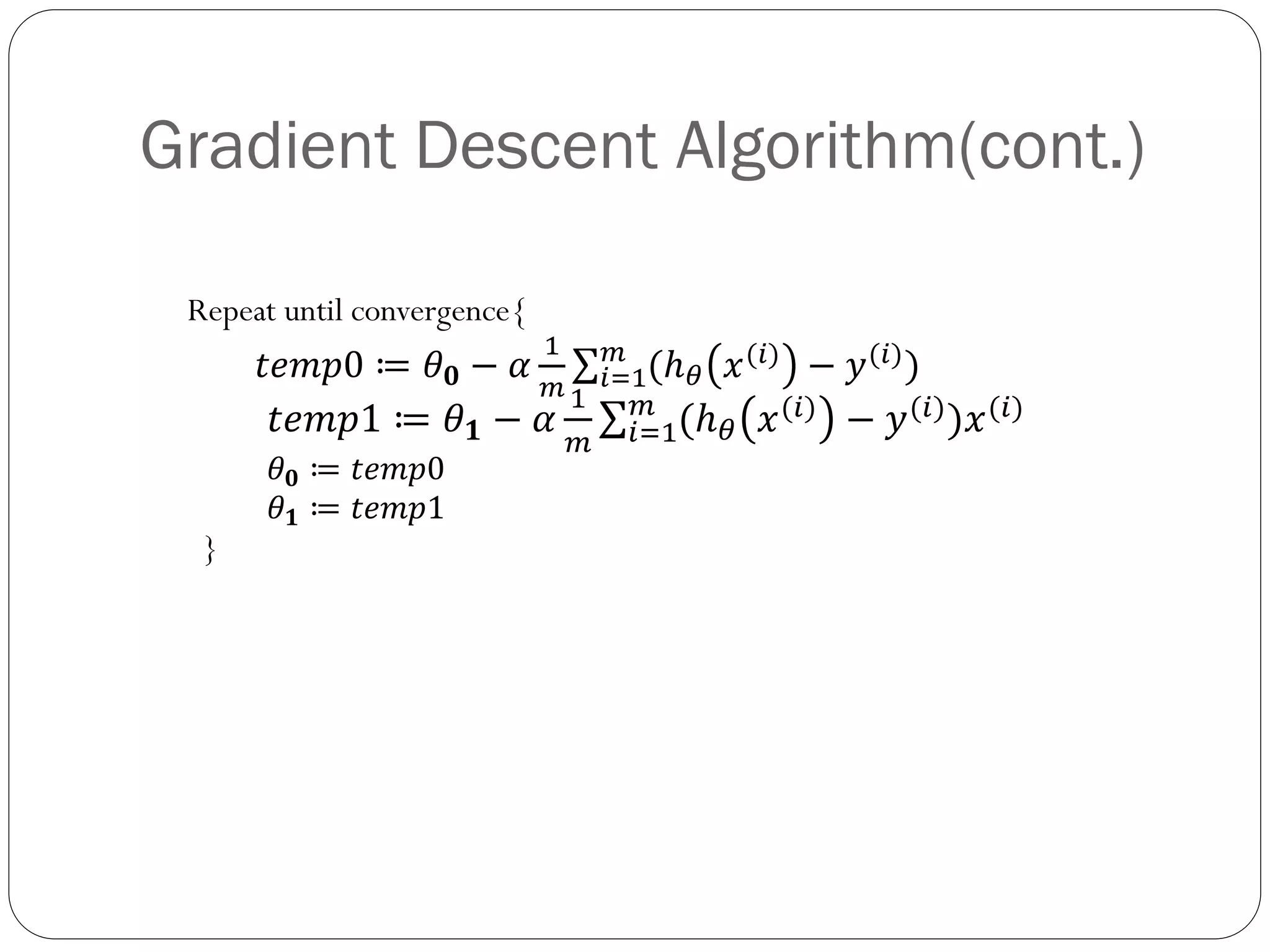

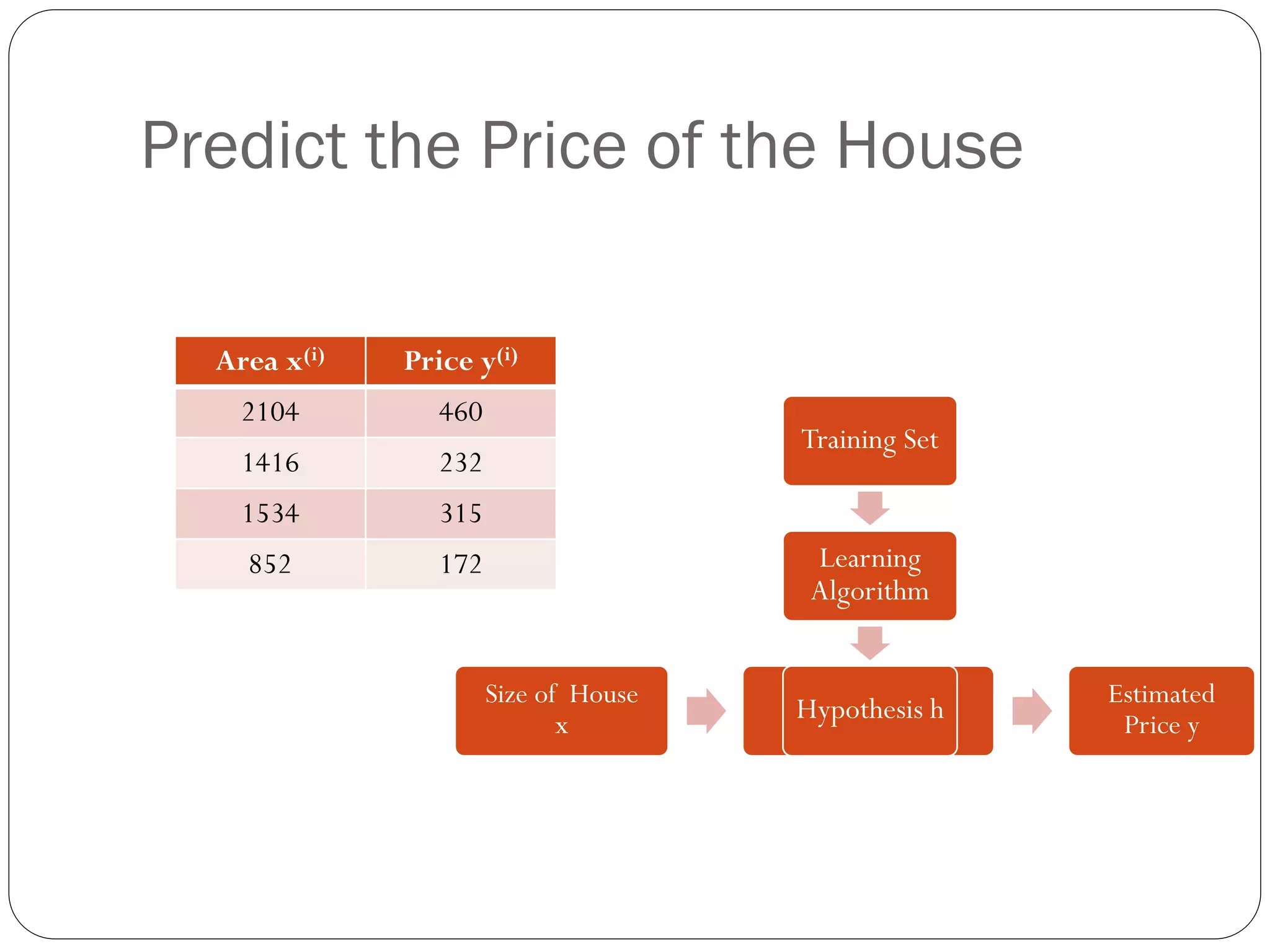

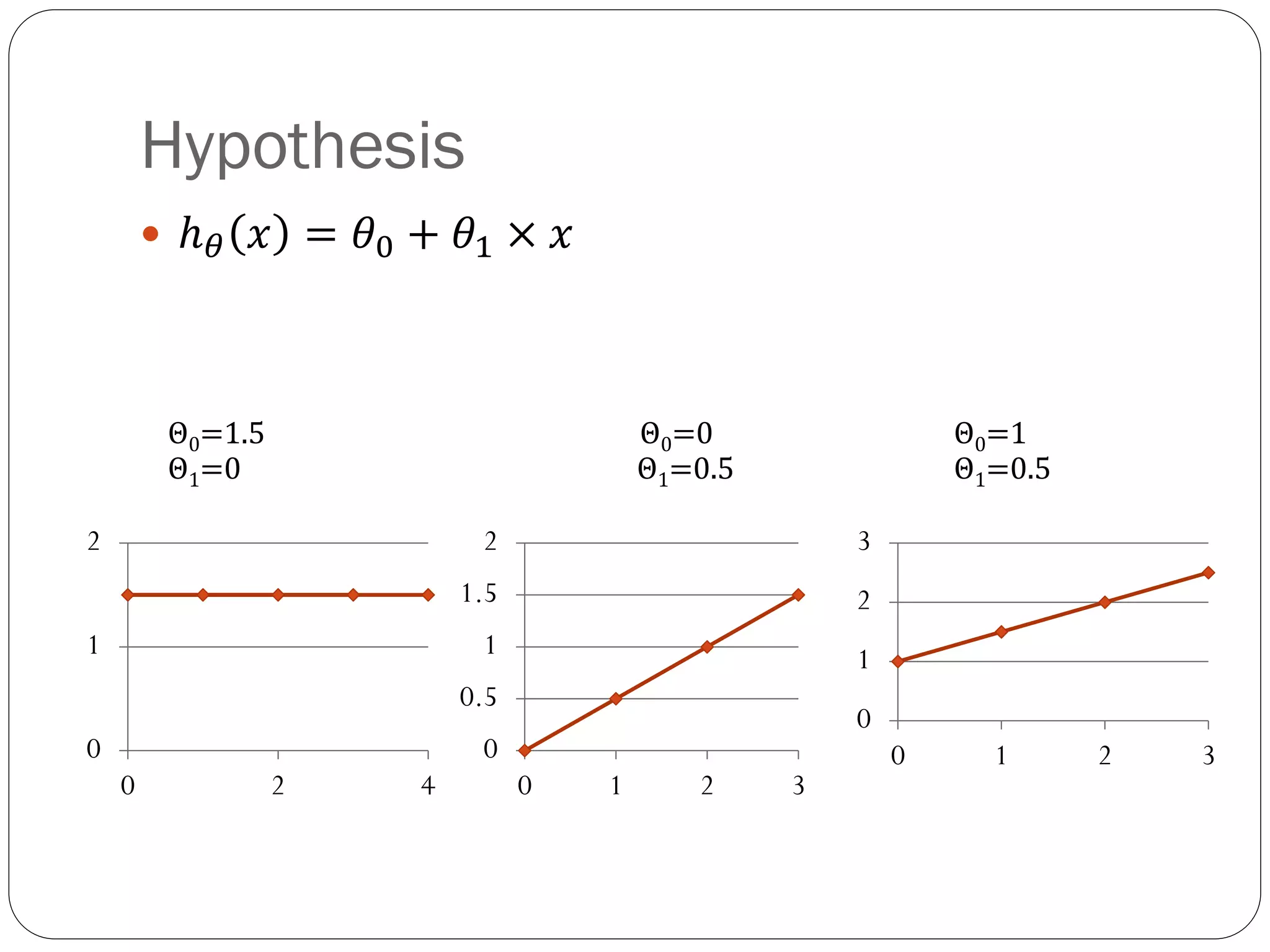

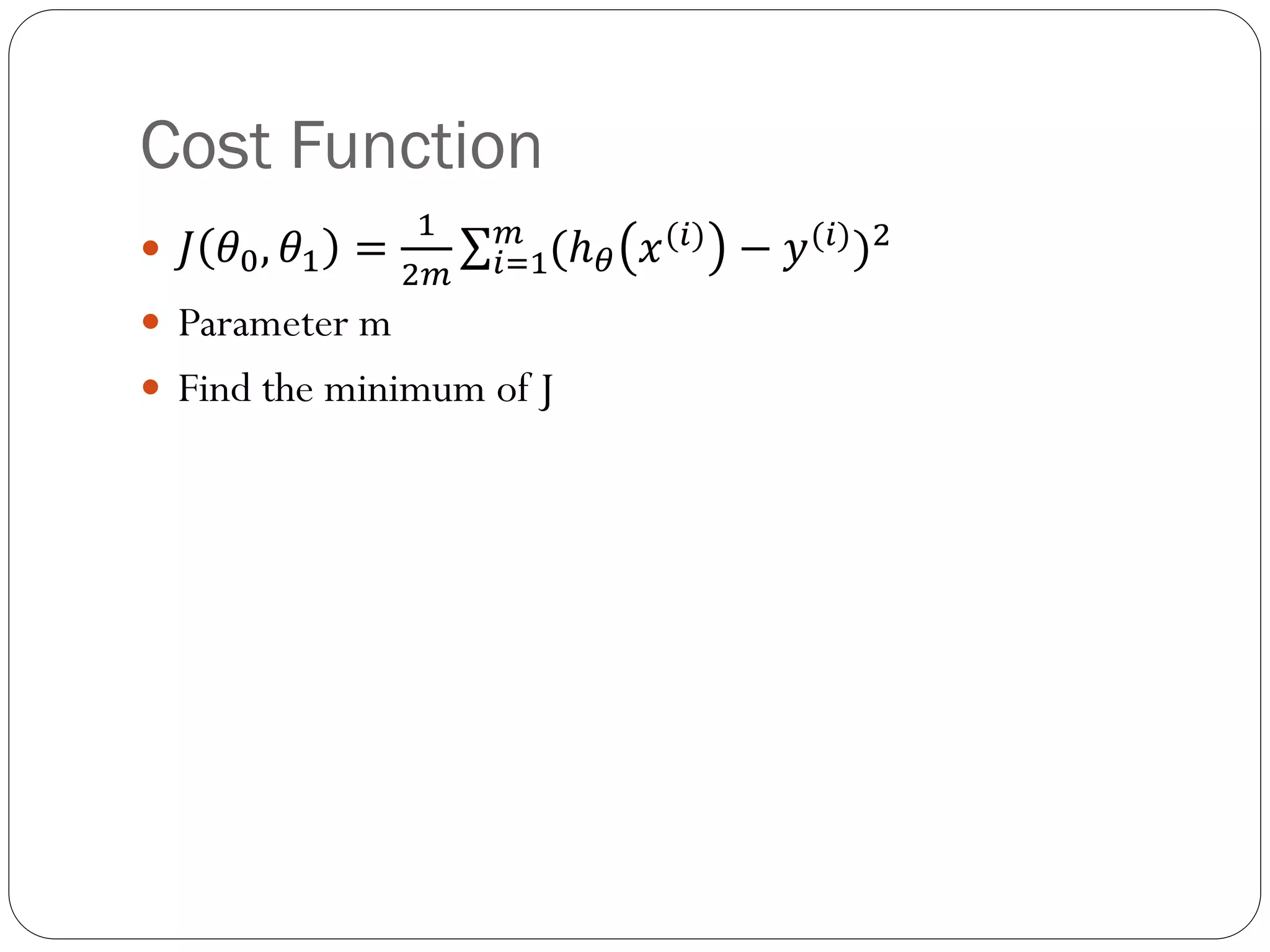

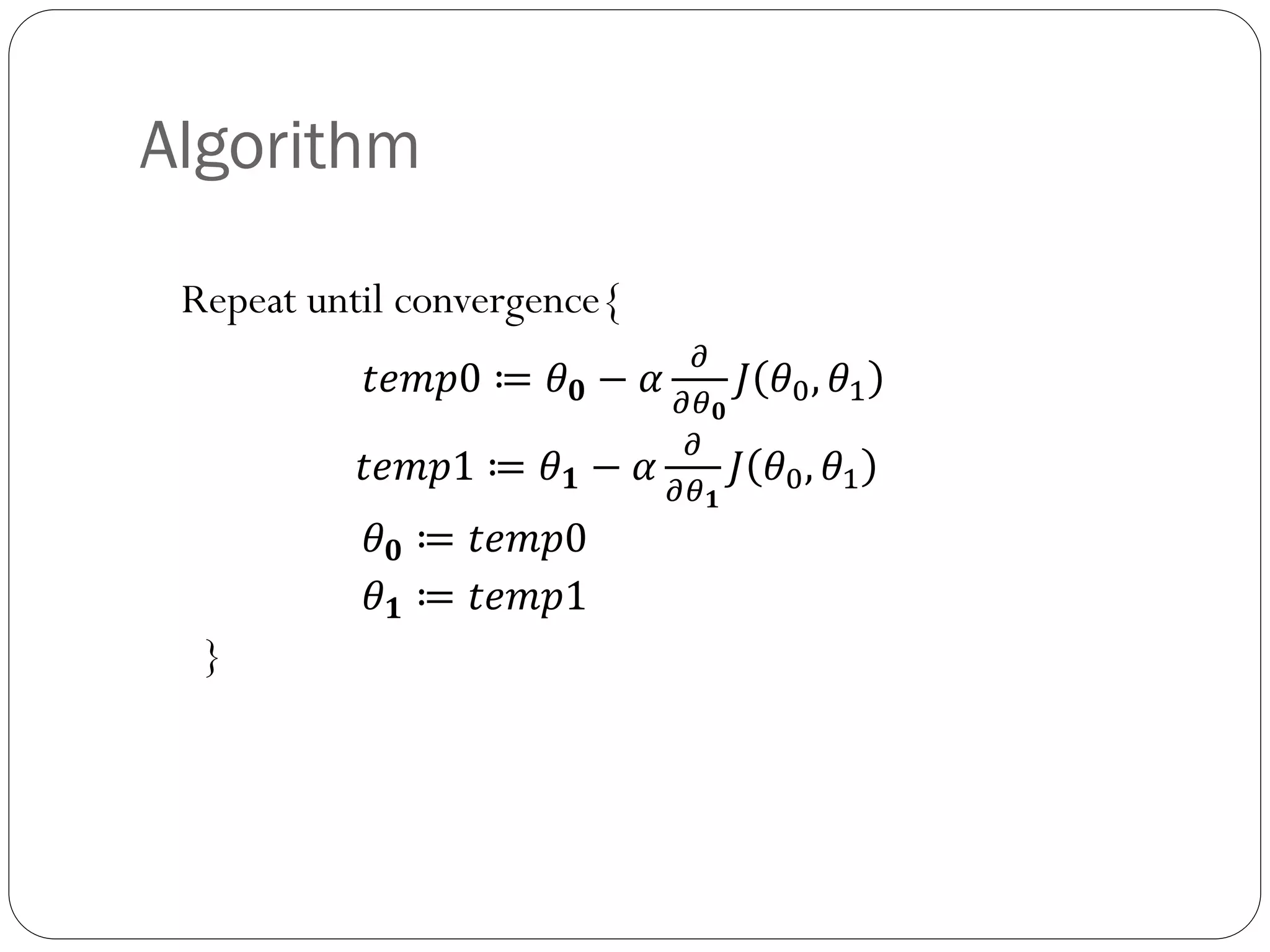

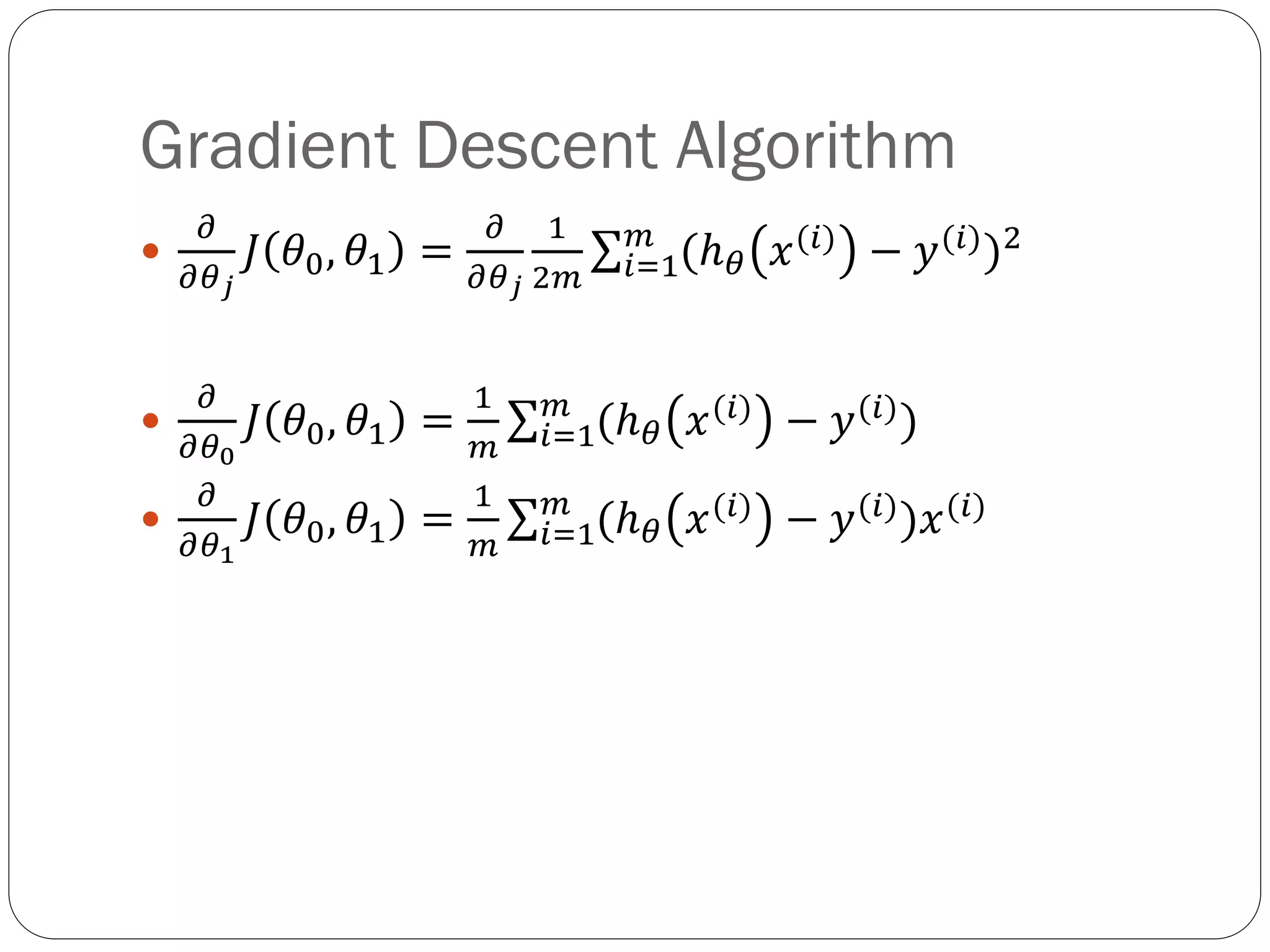

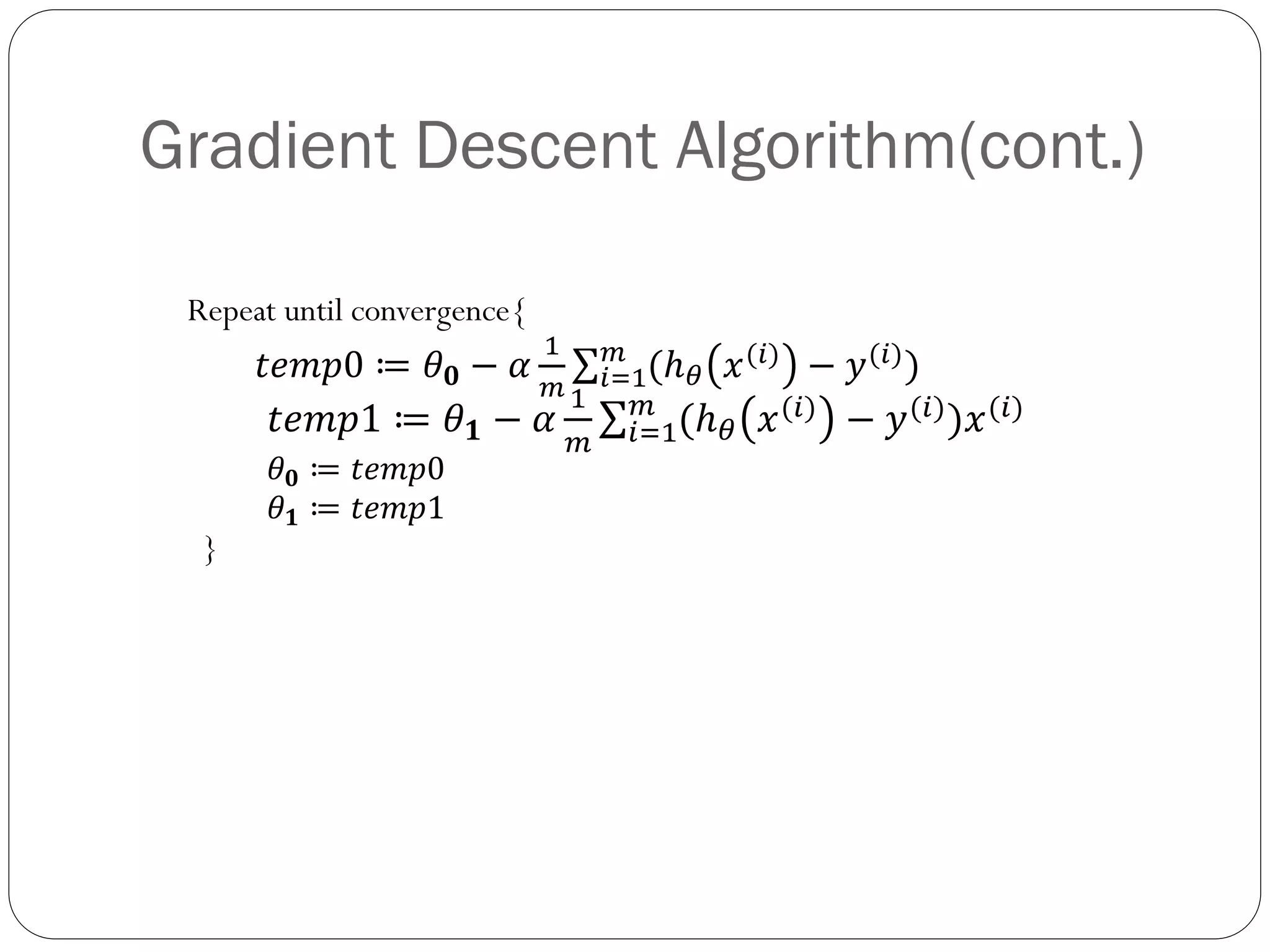

This document introduces linear regression with one variable, using the prediction of house prices from house size as its example. It describes gradient descent, an algorithm for finding the parameters theta-0 and theta-1 that minimize the cost function J, so that the linear hypothesis h best fits the training data. The algorithm repeatedly updates both parameters simultaneously: it computes the new values temp-0 and temp-1 by subtracting a fixed learning rate alpha times the partial derivative of J with respect to each parameter, assigns them to theta-0 and theta-1, and continues until convergence is reached.
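The update rule summarized above can be sketched in a few lines of Python. This is a minimal illustration, not the slides' own code. It assumes the usual squared-error cost J = (1/2m) Σ (h(x) − y)², which the summary does not spell out. The function names, the convergence tolerance `tol`, and the tiny data set (sizes in thousands of square feet, prices in arbitrary units) are all hypothetical and chosen only so the example is easy to follow.

```python
def hypothesis(theta0, theta1, x):
    """Linear hypothesis h(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x


def cost(theta0, theta1, xs, ys):
    """Squared-error cost J (assumed form: 1/(2m) * sum of squared errors)."""
    m = len(xs)
    return sum((hypothesis(theta0, theta1, x) - y) ** 2
               for x, y in zip(xs, ys)) / (2 * m)


def gradient_descent(xs, ys, alpha=0.1, max_iterations=10000, tol=1e-12):
    """Batch gradient descent for one-variable linear regression.

    alpha is the fixed learning rate. tol and max_iterations are
    assumptions of this sketch, used to decide when to stop.
    """
    theta0, theta1 = 0.0, 0.0
    m = len(xs)
    for _ in range(max_iterations):
        errors = [hypothesis(theta0, theta1, x) - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to theta0 and theta1.
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Compute both new values first (temp0, temp1), then assign,
        # so theta1's update does not see the already-updated theta0.
        temp0 = theta0 - alpha * grad0
        temp1 = theta1 - alpha * grad1
        converged = abs(temp0 - theta0) < tol and abs(temp1 - theta1) < tol
        theta0, theta1 = temp0, temp1
        if converged:
            break
    return theta0, theta1


# Hypothetical training data lying exactly on price = 1 + 2 * size.
sizes = [1.0, 2.0, 3.0, 4.0]
prices = [3.0, 5.0, 7.0, 9.0]

theta0, theta1 = gradient_descent(sizes, prices)
print(theta0, theta1, cost(theta0, theta1, sizes, prices))
```

Storing the new values in `temp0` and `temp1` before assigning them keeps the update simultaneous, which matches the role the summary gives to temp-0 and temp-1. If alpha is too large for the scale of the inputs, the iterates can diverge instead of converging, so real house sizes would typically be rescaled first.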