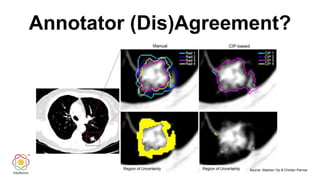

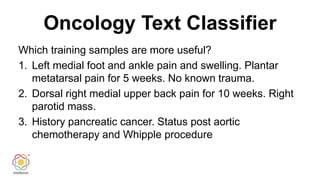

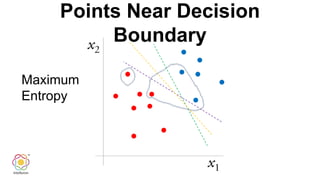

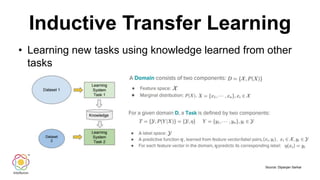

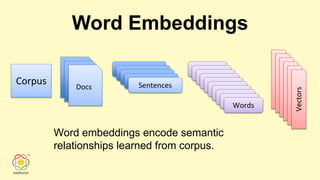

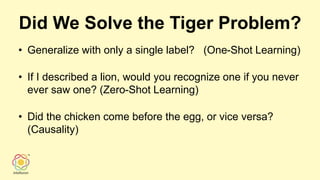

The presentation discusses the challenges of machine learning with small data and the methods for addressing them. It emphasizes the limitations of big-data approaches and the need for techniques such as data augmentation and adversarial data generation. Key topics include semantic representations, inductive transfer learning, and careful experimental design, all aimed at improving model performance when annotated data is limited. The author also stresses the importance of understanding the particular characteristics of small datasets in each application.