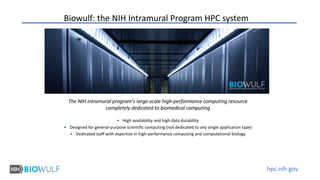

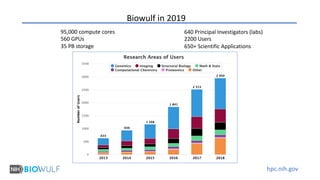

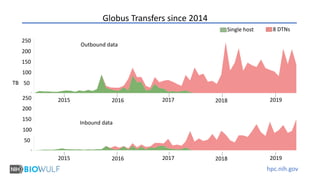

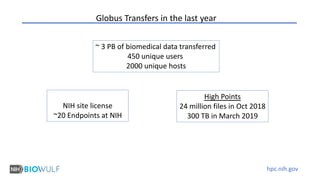

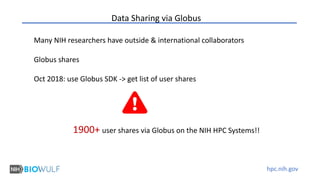

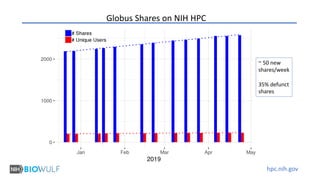

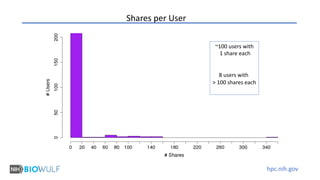

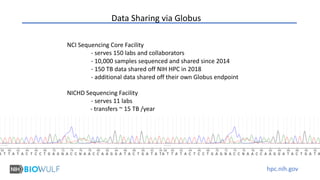

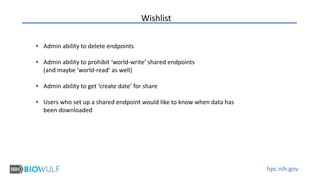

The document discusses Biowulf, the high-performance computing resource of the NIH intramural program, which supports biomedical computing with significant capacity and a dedicated staff. It highlights the use of Globus for data sharing, reporting over 1,900 user shares and 300 TB transferred in March 2019 alone. It also outlines wishlist items for improving administrative control over shared endpoints.