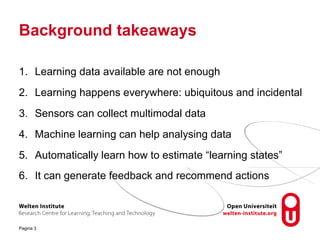

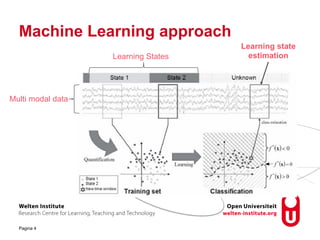

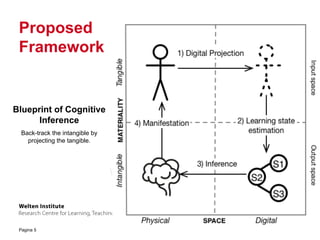

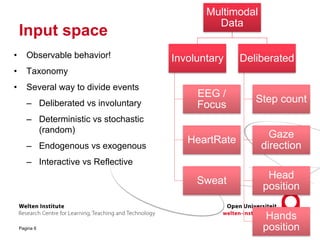

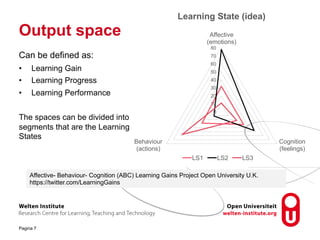

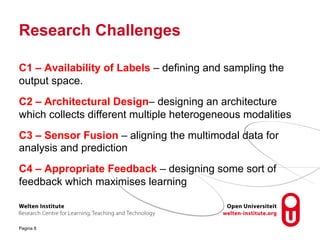

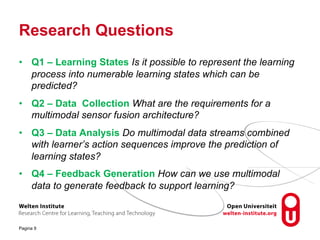

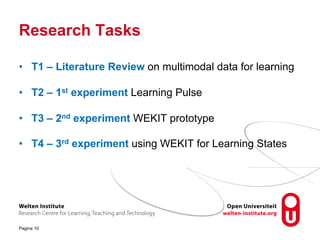

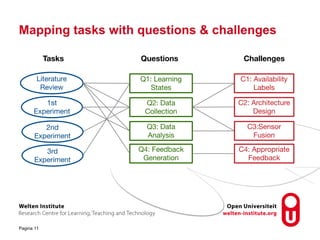

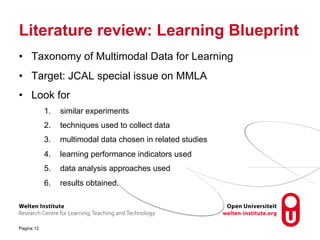

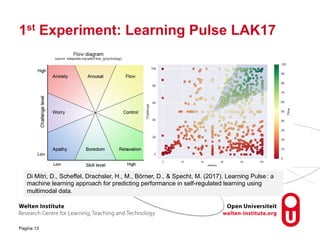

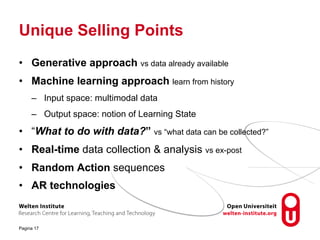

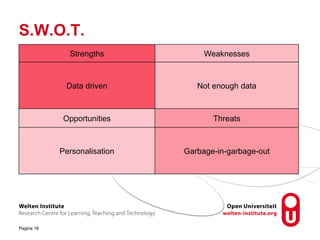

The document is a doctoral consortium presentation on learning state estimation from multimodal data. It stresses the importance of collecting and analyzing diverse data to understand learning processes. It outlines the research challenges, the research questions, and a set of proposed experiments aimed at building a framework that predicts learning states and provides effective feedback. Key aspects include data collection, sensor fusion, and the architectural design needed to achieve these goals.