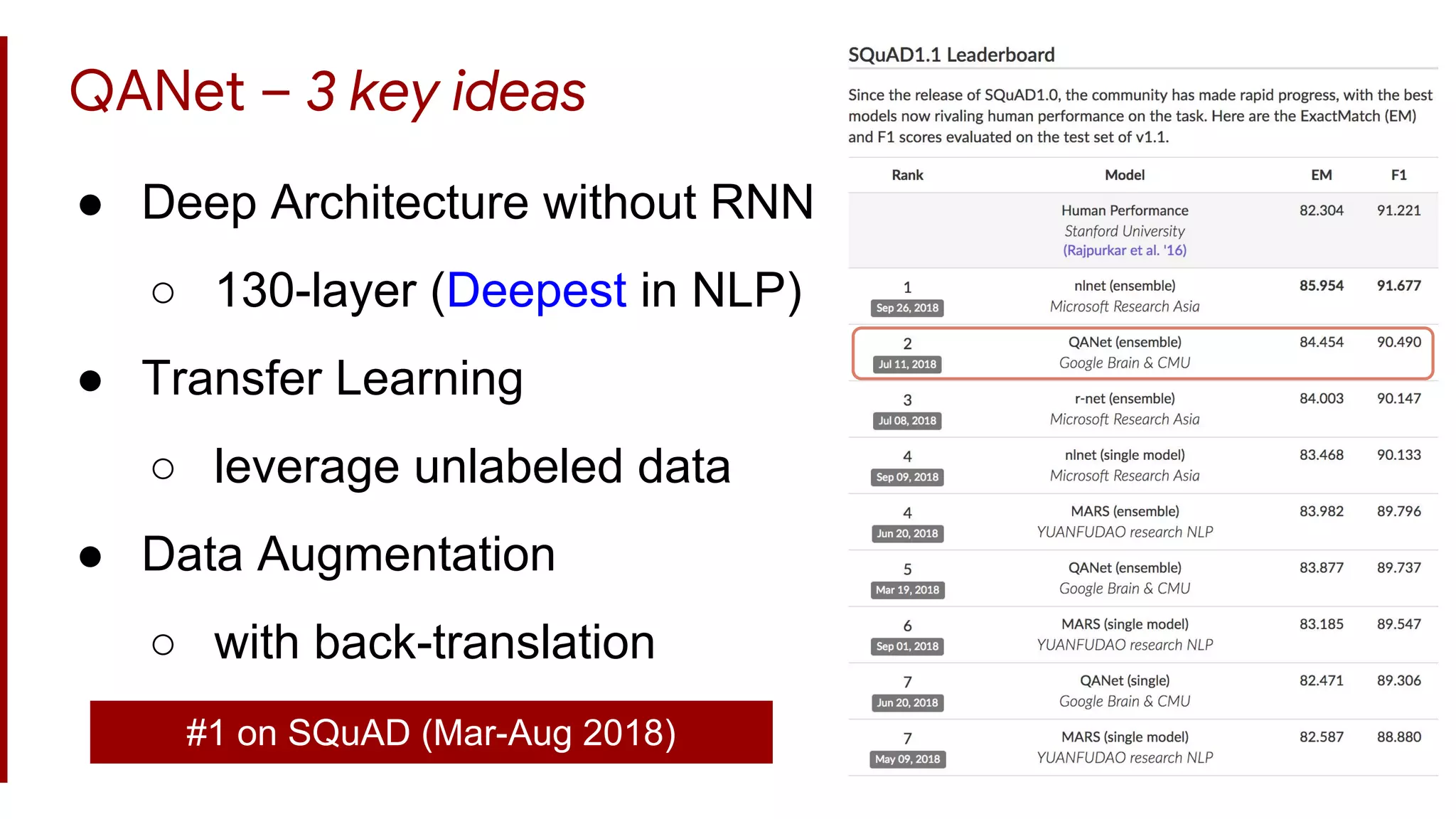

Adams Wei Yu is a PhD candidate at CMU working on machine reading comprehension and large-scale optimization, advised by Jaime Carbonell and Alex Smola. He has worked on question answering models and on datasets such as SQuAD. One of his contributions is QANet, a question answering model that replaces RNNs with self-attention and convolutional layers. It achieves state-of-the-art results on SQuAD while being much faster to train and run than previous RNN-based models.

![General framework neural QA Systems

Bi-directional Attention Flow (BiDAF)

[Seo et al., ICLR’17]](https://image.slidesharecdn.com/246qanetdeview2018-181012000849/75/246-QANet-Towards-Efficient-and-Human-Level-Reading-Comprehension-on-SQuAD-33-2048.jpg)

![Base Model (BiDAF)

Similar general architectures:

● R-Net [Wang et al., ACL’17]

● DCN [Xiong et al., ICLR’17]](https://image.slidesharecdn.com/246qanetdeview2018-181012000849/75/246-QANet-Towards-Efficient-and-Human-Level-Reading-Comprehension-on-SQuAD-34-2048.jpg)

![Base Model (BiDAF)

Similar general architectures:

● R-Net [Wang et al., ACL’17]

● DCN [Xiong et al., ICLR’17]](https://image.slidesharecdn.com/246qanetdeview2018-181012000849/75/246-QANet-Towards-Efficient-and-Human-Level-Reading-Comprehension-on-SQuAD-35-2048.jpg)

![Base Model (BiDAF)

Similar general architectures:

● R-Net [Wang et al., ACL’17]

● DCN [Xiong et al., ICLR’17]](https://image.slidesharecdn.com/246qanetdeview2018-181012000849/75/246-QANet-Towards-Efficient-and-Human-Level-Reading-Comprehension-on-SQuAD-36-2048.jpg)

![Base Model (BiDAF)

Similar general architectures:

● R-Net [Wang et al., ACL’17]

● DCN [Xiong et al., ICLR’17]](https://image.slidesharecdn.com/246qanetdeview2018-181012000849/75/246-QANet-Towards-Efficient-and-Human-Level-Reading-Comprehension-on-SQuAD-37-2048.jpg)

![Base Model (BiDAF)

Similar general architectures:

● R-Net [Wang et al., ACL’17]

● DCN [Xiong et al., ICLR’17]](https://image.slidesharecdn.com/246qanetdeview2018-181012000849/75/246-QANet-Towards-Efficient-and-Human-Level-Reading-Comprehension-on-SQuAD-38-2048.jpg)

![Self-attention example on "The weather is nice today": each word is a 3-dimensional vector, e.g. The = (0.6, 0.2, 0.8); the output for "The" is the weighted sum w1·x1 + w2·x2 + w3·x3 + w4·x4 + w5·x5, where (w1, w2, w3, w4, w5) = softmax(scores of "The" against each word) [Vaswani et al., NIPS’17]](https://image.slidesharecdn.com/246qanetdeview2018-181012000849/75/246-QANet-Towards-Efficient-and-Human-Level-Reading-Comprehension-on-SQuAD-50-2048.jpg)
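The self-attention slide above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation from the talk: it assumes plain dot-product scores and leaves out the learned query/key/value projections and the scaling used in [Vaswani et al., NIPS’17]. The function names are hypothetical, and the pairing of the toy vectors with particular words is an assumption.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def self_attention(vectors):
    """For each position, return the softmax-weighted sum of all input vectors.

    Assumption: weights come from raw dot-product similarity; no learned
    projections or scaling are applied.
    """
    outputs = []
    for query in vectors:
        weights = softmax([dot(query, key) for key in vectors])
        outputs.append([
            sum(w * vec[d] for w, vec in zip(weights, vectors))
            for d in range(len(query))
        ])
    return outputs

# Toy 3-dimensional word vectors; which vector belongs to which word is assumed.
sentence = ["The", "weather", "is", "nice", "today"]
embeddings = [
    [0.6, 0.2, 0.8],
    [0.4, 0.1, 0.6],
    [0.4, 0.1, 0.4],
    [0.9, 0.1, 0.8],
    [0.2, 0.3, 0.1],
]
attended = self_attention(embeddings)
```

Because the softmax weights are positive and sum to one, each output vector is a convex combination of the inputs, so every word's new representation mixes in information from every other word in a single step, regardless of distance.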

![QANet Encoder: Position Emb, then Convolution (repeated), Self Attention, and Feedforward sub-layers, each preceded by Layer Norm and followed by a residual addition (+); repeat the whole block if you want to go deeper [Yu et al., ICLR’18]](https://image.slidesharecdn.com/246qanetdeview2018-181012000849/75/246-QANet-Towards-Efficient-and-Human-Level-Reading-Comprehension-on-SQuAD-54-2048.jpg)
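The wiring of the encoder block on the slide above can be sketched as follows. This is a structural sketch only, under loud assumptions: the convolution and feedforward sub-layers are fixed, unlearned stand-ins (a window average and a ReLU), the attention has no learned projections, and `num_convs` is a hypothetical parameter. What it is meant to show is the order of the sub-layers and the "layer norm, sub-layer, add" pattern around each one.

```python
import math

def layer_norm(x, eps=1e-6):
    """Normalize each position's feature vector to zero mean and unit variance."""
    out = []
    for row in x:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / math.sqrt(var + eps) for v in row])
    return out

def residual(x, sublayer):
    """Compute x + sublayer(layer_norm(x)); this is the '+' node on the slide."""
    y = sublayer(layer_norm(x))
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]

def position_emb(x):
    """Add sinusoidal position signals (assumed form, following Vaswani et al.)."""
    dim = len(x[0])
    out = []
    for pos, row in enumerate(x):
        new_row = []
        for i, v in enumerate(row):
            angle = pos / (10000 ** (2 * (i // 2) / dim))
            new_row.append(v + (math.sin(angle) if i % 2 == 0 else math.cos(angle)))
        out.append(new_row)
    return out

def convolution(x, width=3):
    """Stand-in for a learned convolution: zero-padded average over neighbors."""
    n, half, dim = len(x), width // 2, len(x[0])
    out = []
    for t in range(n):
        window = [x[j] for j in range(t - half, t + half + 1) if 0 <= j < n]
        out.append([sum(r[d] for r in window) / width for d in range(dim)])
    return out

def self_attention(x):
    """Scaled dot-product self-attention without learned projections."""
    dim = len(x[0])
    out = []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in x]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        out.append([sum(e / z * k[d] for e, k in zip(exps, x)) for d in range(dim)])
    return out

def feedforward(x):
    """Stand-in for a learned position-wise feedforward layer: a plain ReLU."""
    return [[max(0.0, v) for v in row] for row in x]

def encoder_block(x, num_convs=2):
    """Position Emb -> repeated Convolution -> Self Attention -> Feedforward."""
    x = position_emb(x)
    for _ in range(num_convs):
        x = residual(x, convolution)
    x = residual(x, self_attention)
    x = residual(x, feedforward)
    return x

# Five positions with four features each (toy values).
tokens = [[0.1 * (t + d) for d in range(4)] for t in range(5)]
encoded = encoder_block(tokens)
deeper = encoder_block(encoded)  # stack another block "if you want to go deeper"
```

Every sub-layer maps a sequence to a sequence of the same shape, which is what makes the residual additions and the stacking of blocks possible. Nothing in the block is recurrent, so all positions can be processed in parallel.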

![Transfer learning for richer representation

● Pretrained language model

(ELMo, [Peters et al., NAACL’18])

○ + 4.0 F1](https://image.slidesharecdn.com/246qanetdeview2018-181012000849/75/246-QANet-Towards-Efficient-and-Human-Level-Reading-Comprehension-on-SQuAD-70-2048.jpg)

![Transfer learning for richer representation

● Pretrained language model

(ELMo, [Peters et al., NAACL’18])

○ + 4.0 F1

● Pretrained machine translation model (CoVe, [McCann et al., NIPS’17])

○ + 0.3 F1](https://image.slidesharecdn.com/246qanetdeview2018-181012000849/75/246-QANet-Towards-Efficient-and-Human-Level-Reading-Comprehension-on-SQuAD-71-2048.jpg)
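For readers unfamiliar with the metric behind the "+ 4.0 F1" and "+ 0.3 F1" figures, SQuAD-style F1 measures token overlap between a predicted answer and a reference answer. The sketch below is a simplified version, assuming whitespace tokenization and lowercasing only; the official evaluation also strips punctuation and articles and takes the best score over several reference answers. The function name `token_f1` is hypothetical.

```python
from collections import Counter

def token_f1(prediction, ground_truth):
    """Token-overlap F1 between a predicted answer and one reference answer."""
    pred_tokens = prediction.lower().split()
    gold_tokens = ground_truth.lower().split()
    # Multiset intersection counts each shared token at most as often as it
    # appears in both answers.
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)

exact = token_f1("Denver Broncos", "Denver Broncos")
partial = token_f1("in the city of Denver", "Denver")
```

Here `exact` is 1.0, while `partial` has precision 1/5 and recall 1, giving an F1 of 1/3. Reported scores are averaged over all questions and scaled to 0-100, so a gain of 4.0 F1 is four points on that scale.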