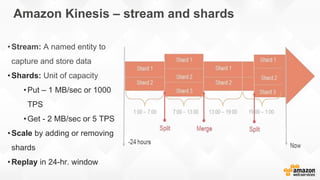

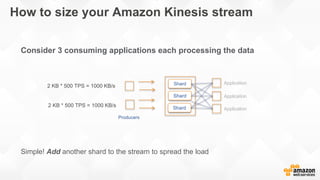

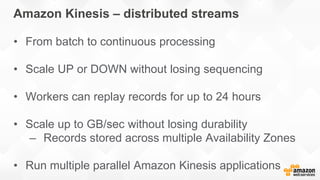

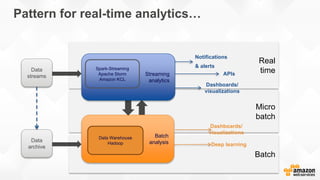

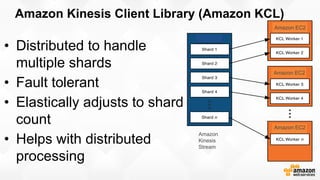

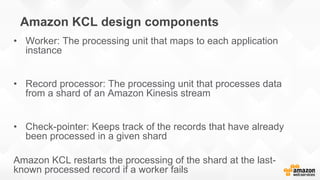

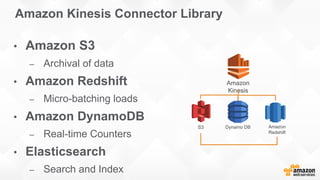

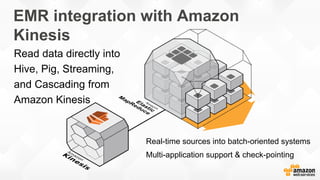

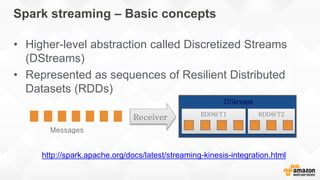

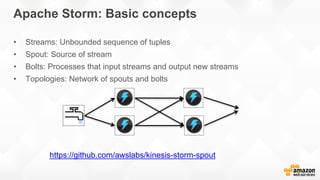

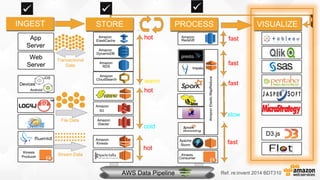

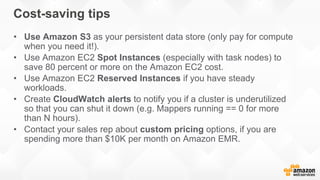

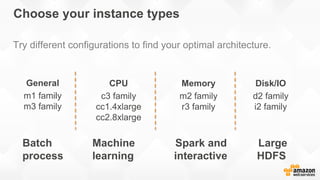

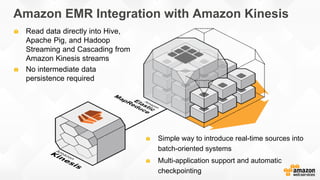

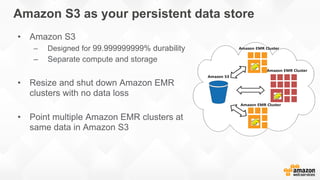

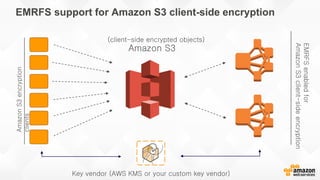

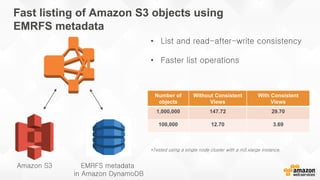

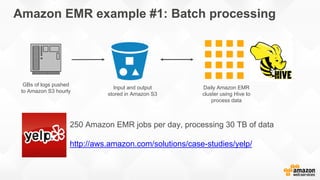

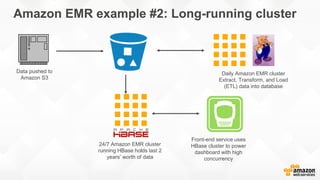

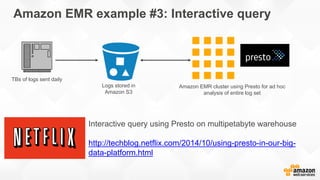

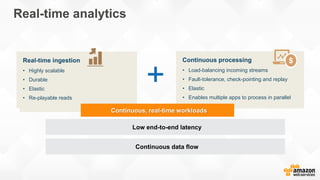

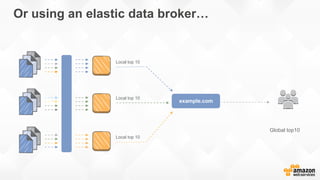

This document discusses real-time data processing on Amazon Web Services (AWS). It describes how to use Amazon Kinesis for real-time data ingestion and processing, and Amazon Elastic MapReduce (EMR) for batch processing. It gives examples of using EMR to batch-process large volumes of log data and to query data stored in Amazon S3 interactively. It also covers using Kinesis as a common data broker that distributes streaming data to multiple applications, and combining Kinesis with EMR, Apache Spark, and Apache Storm for real-time analytics.
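The data-broker idea can be illustrated with a toy, in-memory model. A stream is an append-only log per shard, and every consuming application keeps its own read position in each shard, so one application's progress never affects another's. The names `ToyStream` and `ToyConsumer` are hypothetical and this is not the Kinesis API; in a real deployment a consumer such as one built on the Kinesis Client Library tracks these positions instead.

```python
from collections import defaultdict


class ToyStream:
    """Hypothetical in-memory stand-in for a sharded stream (not the Kinesis API).

    Each shard is an append-only list; a record's index is its position.
    """

    def __init__(self, num_shards: int):
        self.shards = [[] for _ in range(num_shards)]

    def put(self, shard_id: int, record: str) -> int:
        """Append a record to one shard and return its position in that shard."""
        self.shards[shard_id].append(record)
        return len(self.shards[shard_id]) - 1


class ToyConsumer:
    """Hypothetical consuming application with its own per-shard checkpoints."""

    def __init__(self, name: str, stream: ToyStream):
        self.name = name
        self.stream = stream
        # Next unread position per shard; never shared with other consumers.
        self.checkpoints = defaultdict(int)

    def poll(self) -> list:
        """Return records this consumer has not seen yet, shard by shard."""
        new_records = []
        for shard_id, log in enumerate(self.stream.shards):
            start = self.checkpoints[shard_id]
            new_records.extend(log[start:])
            self.checkpoints[shard_id] = len(log)
        return new_records


if __name__ == "__main__":
    stream = ToyStream(num_shards=2)
    archive = ToyConsumer("data-archive", stream)
    metrics = ToyConsumer("metric-extraction", stream)

    stream.put(0, "click:a")
    stream.put(1, "click:b")
    print(archive.poll())  # ['click:a', 'click:b']
    stream.put(0, "click:c")
    print(archive.poll())  # ['click:c']
    print(metrics.poll())  # ['click:a', 'click:c', 'click:b']
```

Because read positions belong to each consumer, adding another application only means creating another consumer; the producers do not change.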

![Amazon Kinesis – common data broker. Data sources in several Availability Zones send records to an AWS endpoint; the Amazon Kinesis stream is divided into Shard 1 through Shard N; App. 1 (data archive), App. 2 (metric extraction), App. 3 (sliding-window analysis), and App. 4 (machine learning) read the stream, with Amazon S3, Amazon DynamoDB, Amazon Redshift, and Amazon EMR shown as downstream services.](https://image.slidesharecdn.com/3-150427052112-conversion-gate01/85/AWS-Summit-Seoul-2015-AWS-36-320.jpg)
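The shard layer in the diagram decides which shard receives each record. Kinesis hashes a record's partition key with MD5 to a 128-bit integer and routes the record to the shard whose hash key range contains that value. The sketch below is illustrative only: it assumes the shards split the hash key space evenly and that the digest is read as a big-endian unsigned integer, and the function names are hypothetical. A real producer should use the shard ranges reported by the stream, which change after resharding.

```python
import hashlib

# Hash keys span the 128-bit range [0, 2**128 - 1].
MAX_HASH_KEY = 2**128 - 1


def even_hash_key_ranges(num_shards: int) -> list[tuple[int, int]]:
    """Split [0, MAX_HASH_KEY] into num_shards contiguous, inclusive ranges.

    Assumption: shards own equal-sized ranges; the last shard absorbs any remainder.
    """
    size = (MAX_HASH_KEY + 1) // num_shards
    ranges = []
    for i in range(num_shards):
        start = i * size
        end = MAX_HASH_KEY if i == num_shards - 1 else (i + 1) * size - 1
        ranges.append((start, end))
    return ranges


def hash_key(partition_key: str) -> int:
    """MD5 of the UTF-8 partition key, read as a big-endian unsigned 128-bit integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def shard_for(partition_key: str, ranges: list[tuple[int, int]]) -> int:
    """Return the index of the shard whose hash key range contains the key's hash."""
    h = hash_key(partition_key)
    for index, (start, end) in enumerate(ranges):
        if start <= h <= end:
            return index
    raise ValueError("hash key not covered by any shard range")


if __name__ == "__main__":
    ranges = even_hash_key_ranges(3)
    for key in ["sensor-17", "sensor-42", "sensor-17"]:
        # The repeated key maps to the same shard both times.
        print(key, "-> shard", shard_for(key, ranges))
```

Records that share a partition key always land on the same shard, which keeps them in order for consumers such as the sliding-window application.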