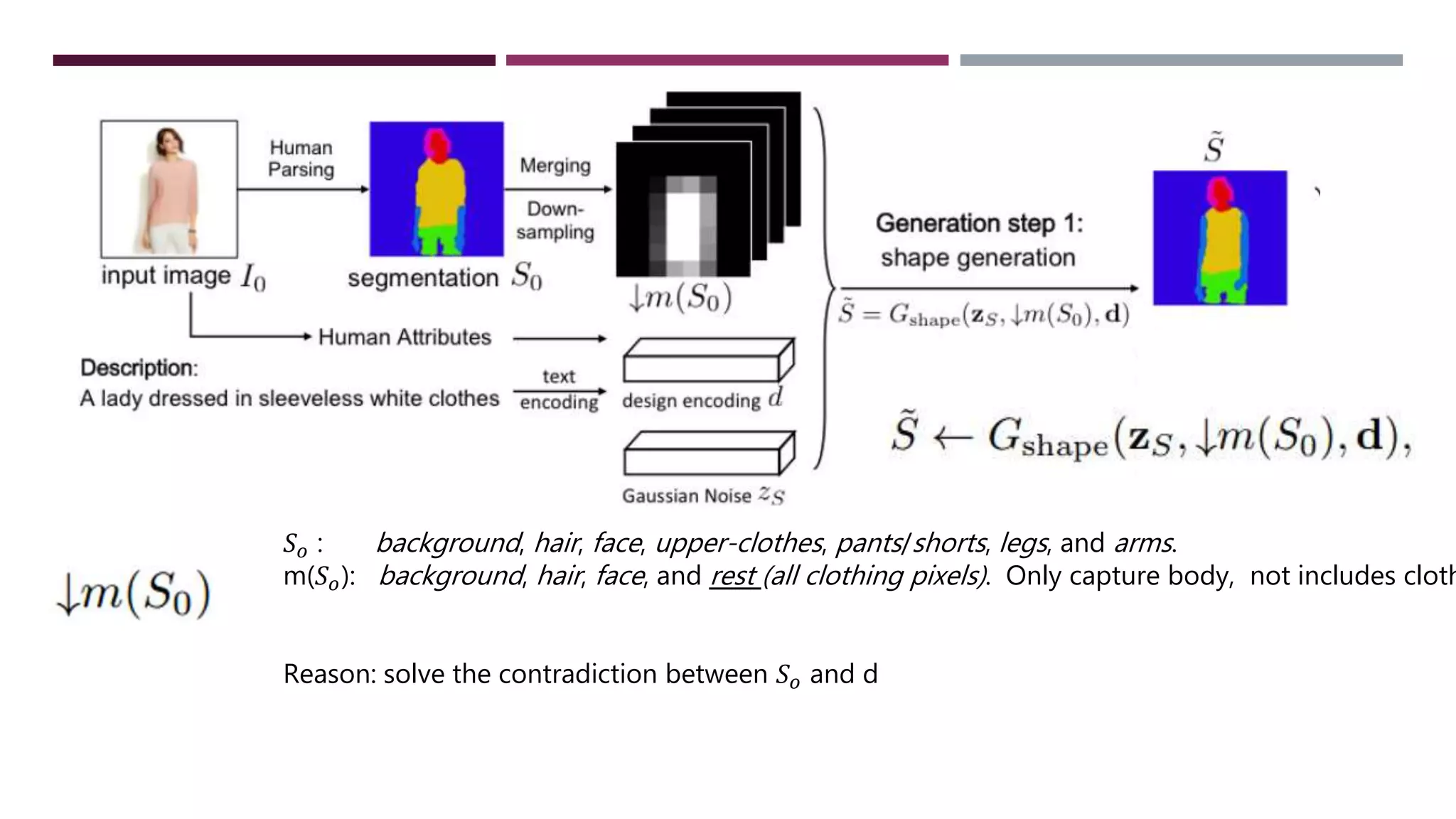

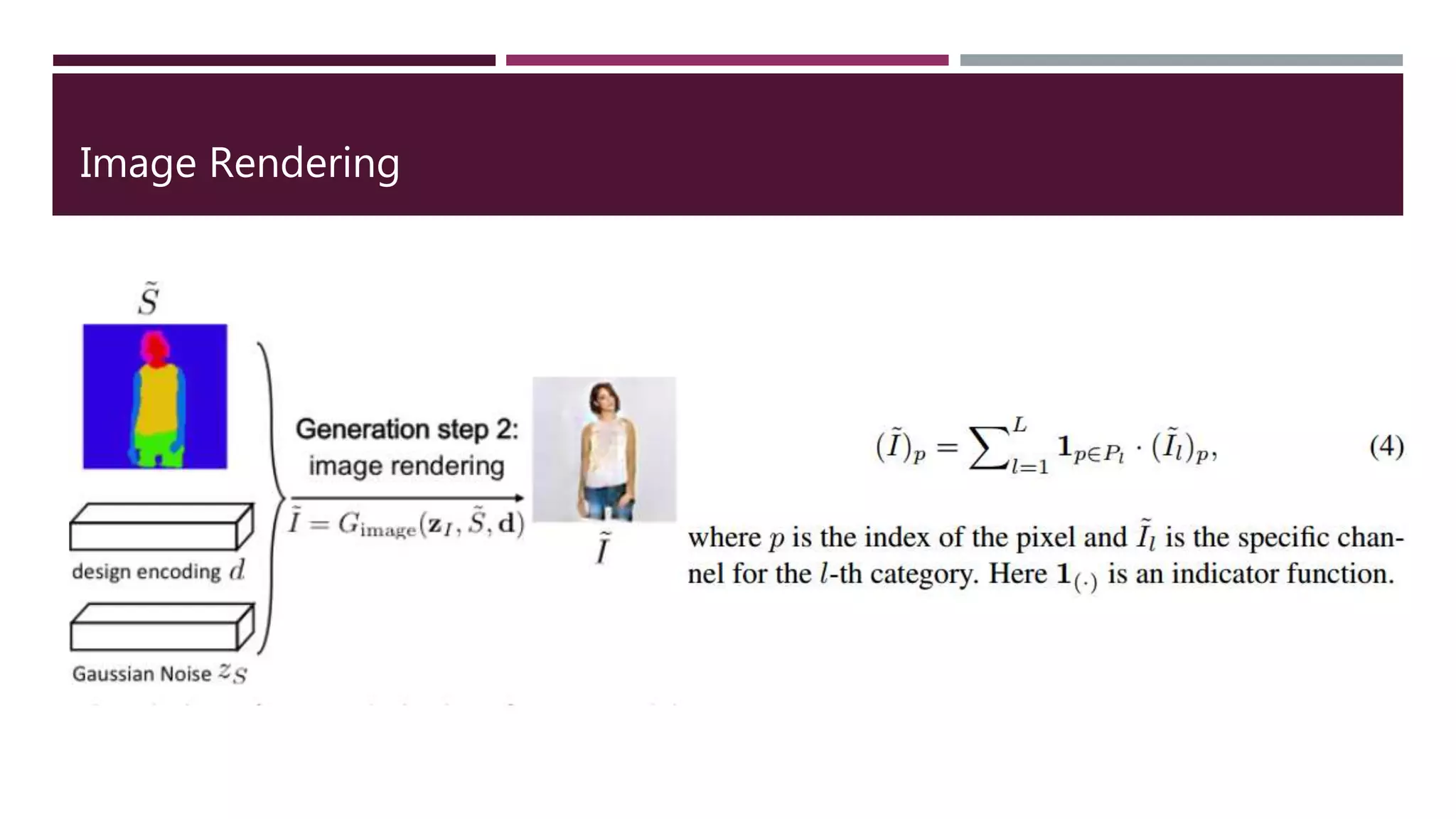

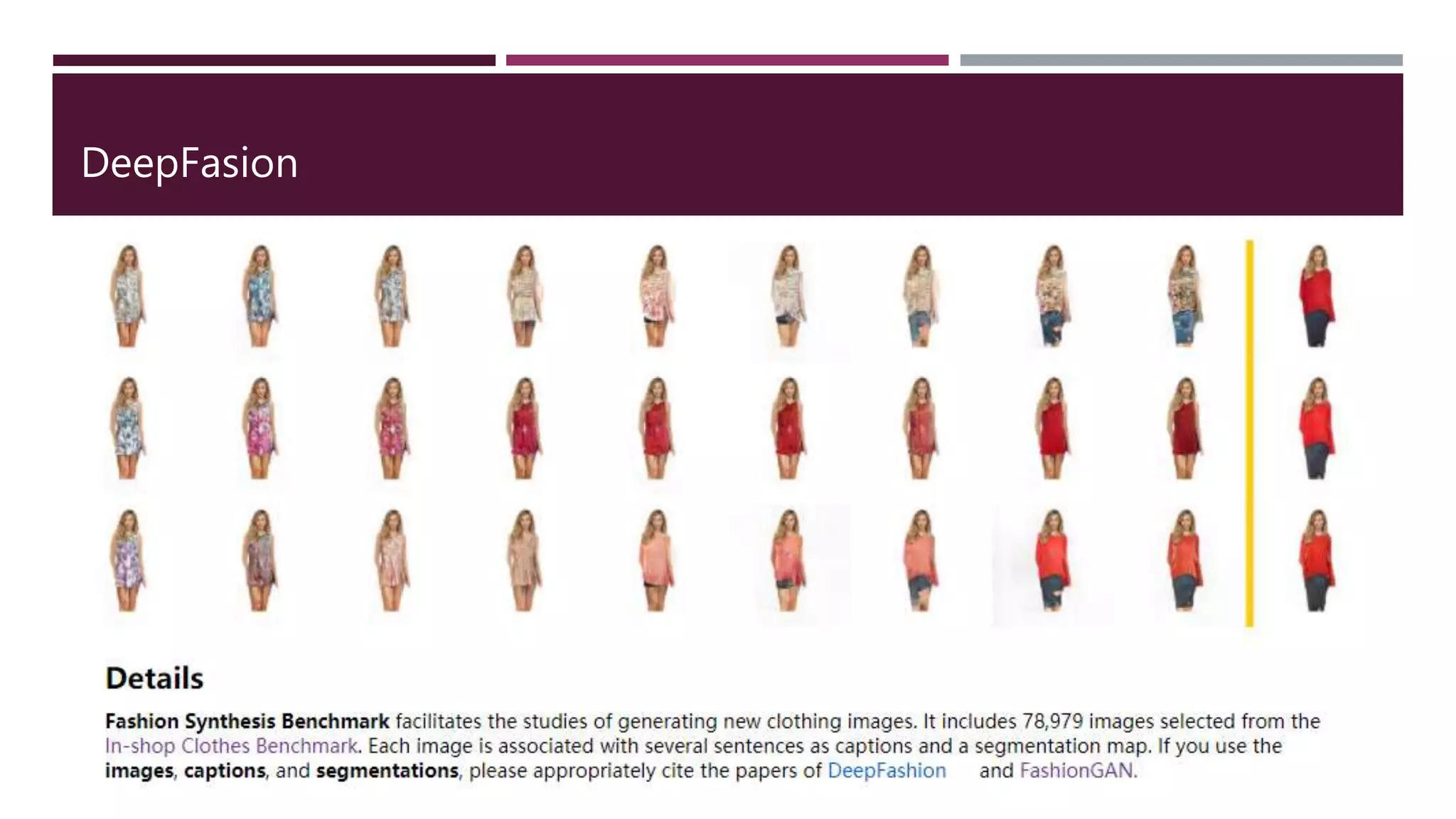

FashionGAN is a model that generates a new outfit for the person in an input image while keeping their pose unchanged. It takes three inputs: the image, a segmentation map of the clothing items, and a text description of the new outfit. Generation proceeds in two steps. First, FashionGAN produces a new segmentation map that matches the description while staying consistent with the person's pose and with attributes such as gender, hair, and skin color. Second, it renders the new outfit onto the person, guided by the new segmentation map, so that the person is redrawn realistically in the described clothes. FashionGAN is trained on the DeepFashion dataset, which contains over 800,000 diverse, labeled fashion images.
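
The data flow described above (a new segmentation map first, then rendering guided by that map) can be illustrated with a toy sketch. This is not FashionGAN's implementation: both stages are trained networks in the real model, while here they are replaced by hand-written rules so the example runs on its own. The label ids, the `COLOURS` table, and the function names `generate_segmentation`, `render`, and `fashion_pipeline` are all hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

# Hypothetical label ids; the real label set is not specified in the text.
BACKGROUND, HAIR, SKIN, TOP, BOTTOM = 0, 1, 2, 3, 4
CLOTHING = {TOP, BOTTOM}

SegMap = List[List[int]]
Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]

# Toy stand-in for a text encoder: maps a colour word to an RGB value.
COLOURS = {"red": (200, 30, 30), "blue": (30, 30, 200)}
DEFAULT_COLOUR: Pixel = (128, 128, 128)


def generate_segmentation(seg: SegMap, description: str) -> SegMap:
    """Stage 1 stand-in: derive a new segmentation map from the description.

    Assumption for this sketch: a "dress" merges the TOP and BOTTOM regions
    into one garment. Hair, skin, and background labels are never changed,
    mirroring the requirement that the person's attributes stay consistent.
    """
    wants_dress = "dress" in description.lower()
    return [
        [TOP if (wants_dress and label == BOTTOM) else label for label in row]
        for row in seg
    ]


def render(image: Image, new_seg: SegMap, description: str) -> Image:
    """Stage 2 stand-in: repaint clothing regions, copy all other pixels."""
    text = description.lower()
    colour = next(
        (rgb for word, rgb in COLOURS.items() if word in text), DEFAULT_COLOUR
    )
    return [
        [colour if label in CLOTHING else pixel
         for pixel, label in zip(img_row, seg_row)]
        for img_row, seg_row in zip(image, new_seg)
    ]


def fashion_pipeline(
    image: Image, seg: SegMap, description: str
) -> Tuple[SegMap, Image]:
    """Run both stages in order: segmentation first, then rendering."""
    new_seg = generate_segmentation(seg, description)
    return new_seg, render(image, new_seg, description)


# A 4x1 "image": hair, skin, top, bottom, from head to legs.
seg = [[HAIR], [SKIN], [TOP], [BOTTOM]]
image = [[(1, 1, 1)], [(2, 2, 2)], [(3, 3, 3)], [(4, 4, 4)]]
new_seg, out = fashion_pipeline(image, seg, "a red dress")
```

In this sketch the hair and skin pixels pass through untouched and only the clothing regions are repainted, which is the property the two-step design is meant to preserve. A real renderer would synthesize texture and shading rather than fill a flat colour.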