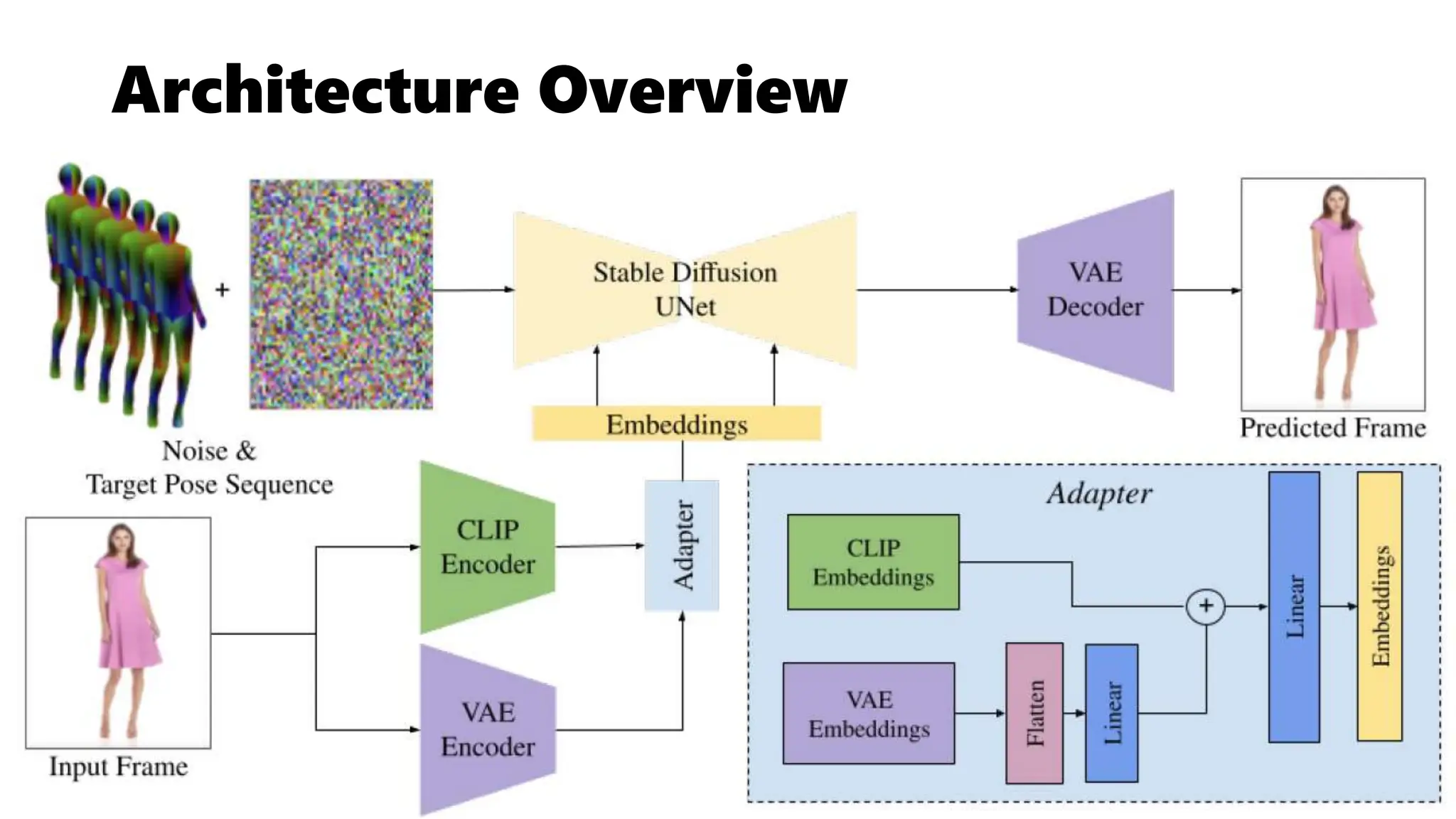

This document describes DreamPose, a method for generating animated fashion videos from a still image and a sequence of poses using a modified Stable Diffusion model. A dual CLIP-VAE encoder and an adapter module condition the model on both the input image and the target poses. The model is fine-tuned on a fashion video dataset so that the output is temporally consistent while staying faithful to both conditioning signals. The goal is to synthesize videos in which both the person and the fabric move naturally.
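To make the role of the adapter concrete, here is a minimal sketch of one way two image embeddings could be merged into a single conditioning vector. The text above does not say how the adapter combines the CLIP and VAE embeddings, so everything here is an assumption for illustration: the linear mixing, the zero-initialized VAE weights, and the names `matvec`, `adapter`, `w_clip`, and `w_vae` are all hypothetical. It uses plain Python lists to stay dependency-free; a real model would use a tensor library.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def adapter(clip_emb: Vector, vae_emb: Vector,
            w_clip: Matrix, w_vae: Matrix) -> Vector:
    """Hypothetical adapter: project each embedding linearly, then add.

    Assumption (not stated in the source): the two encoders' outputs are
    mixed by a learned linear map into one conditioning vector.
    """
    projected_clip = matvec(w_clip, clip_emb)
    projected_vae = matvec(w_vae, vae_emb)
    return [c + v for c, v in zip(projected_clip, projected_vae)]


# Toy 2-dimensional embeddings standing in for the real encoder outputs.
clip_emb = [0.5, -1.0]
vae_emb = [2.0, 3.0]

# Assumed initialization: identity for the CLIP branch, zeros for the VAE
# branch, so the adapter initially passes the CLIP embedding through unchanged.
w_clip = [[1.0, 0.0], [0.0, 1.0]]
w_vae = [[0.0, 0.0], [0.0, 0.0]]

conditioning = adapter(clip_emb, vae_emb, w_clip, w_vae)
```

With this assumed initialization the VAE branch contributes nothing at first, so fine-tuning can introduce the extra image detail gradually rather than disrupting the pretrained model's conditioning from the first step.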