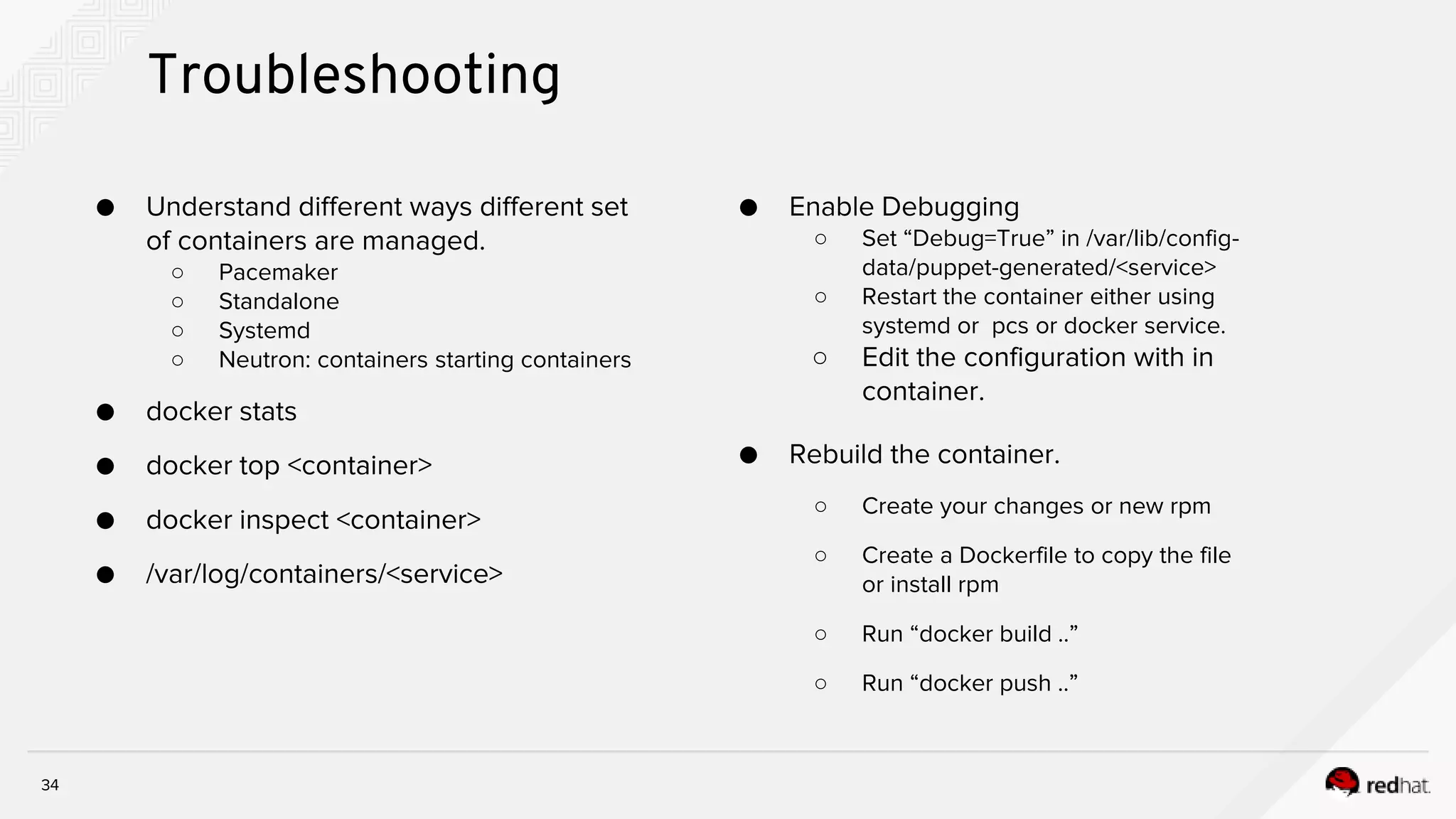

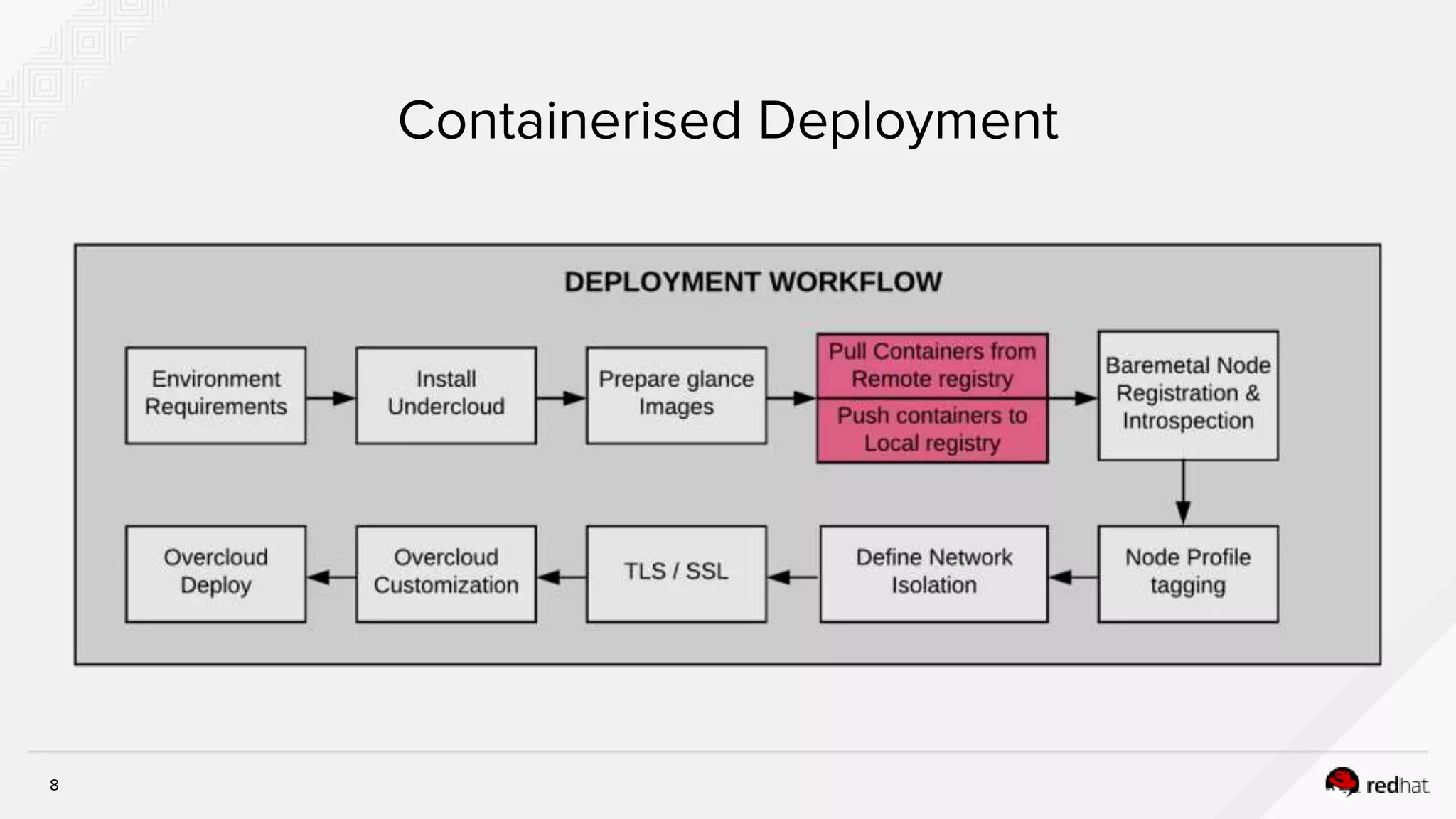

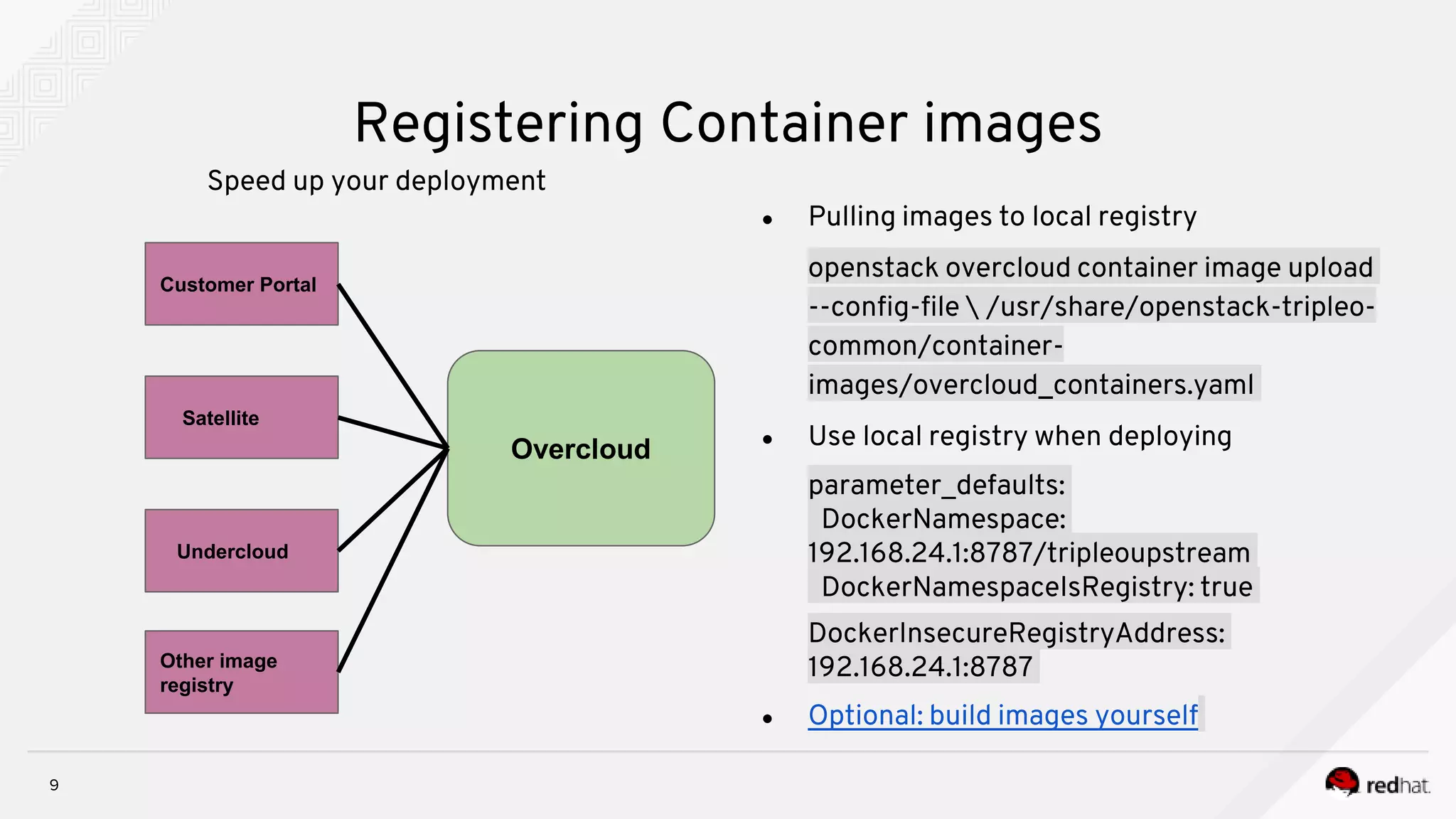

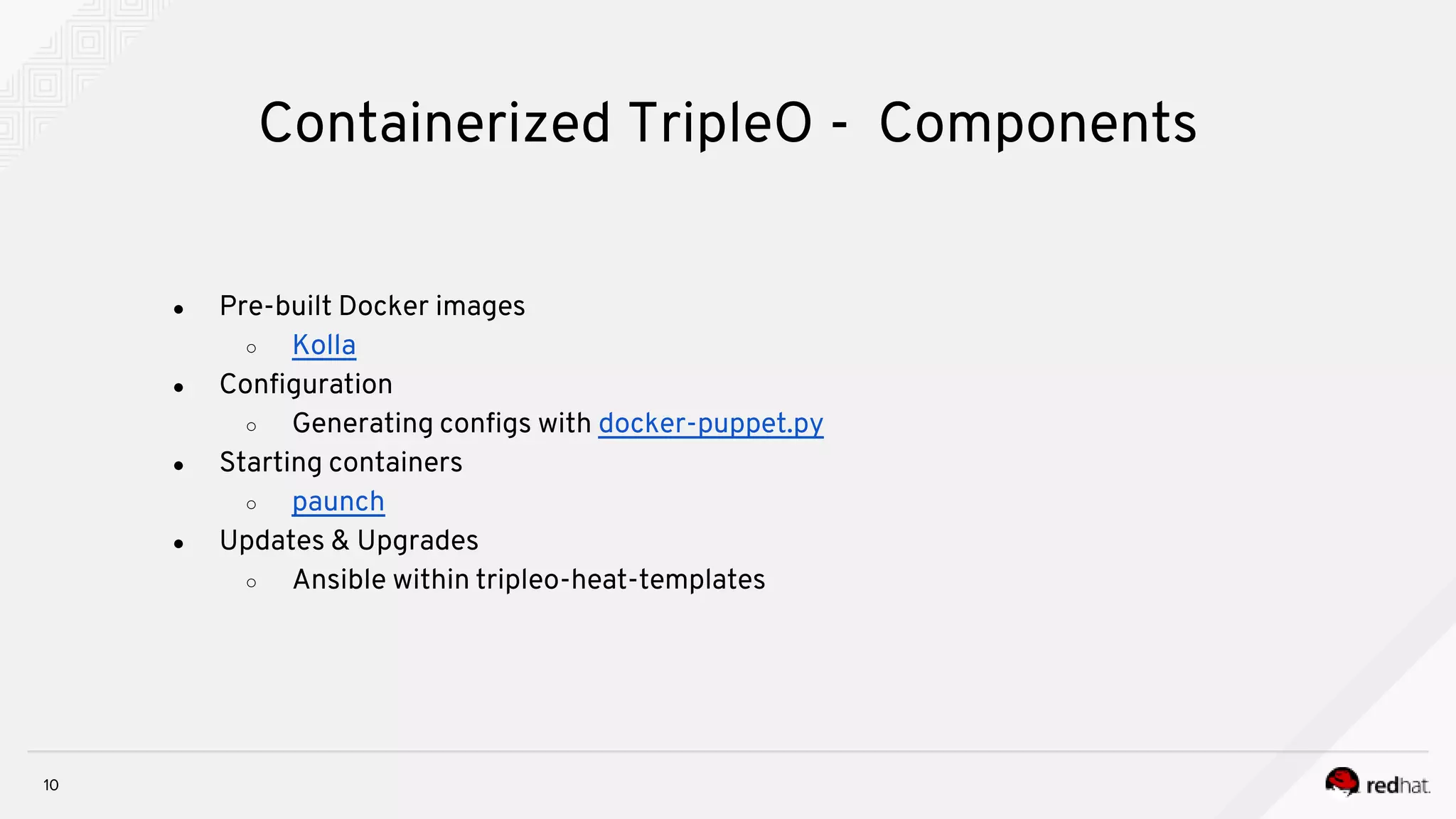

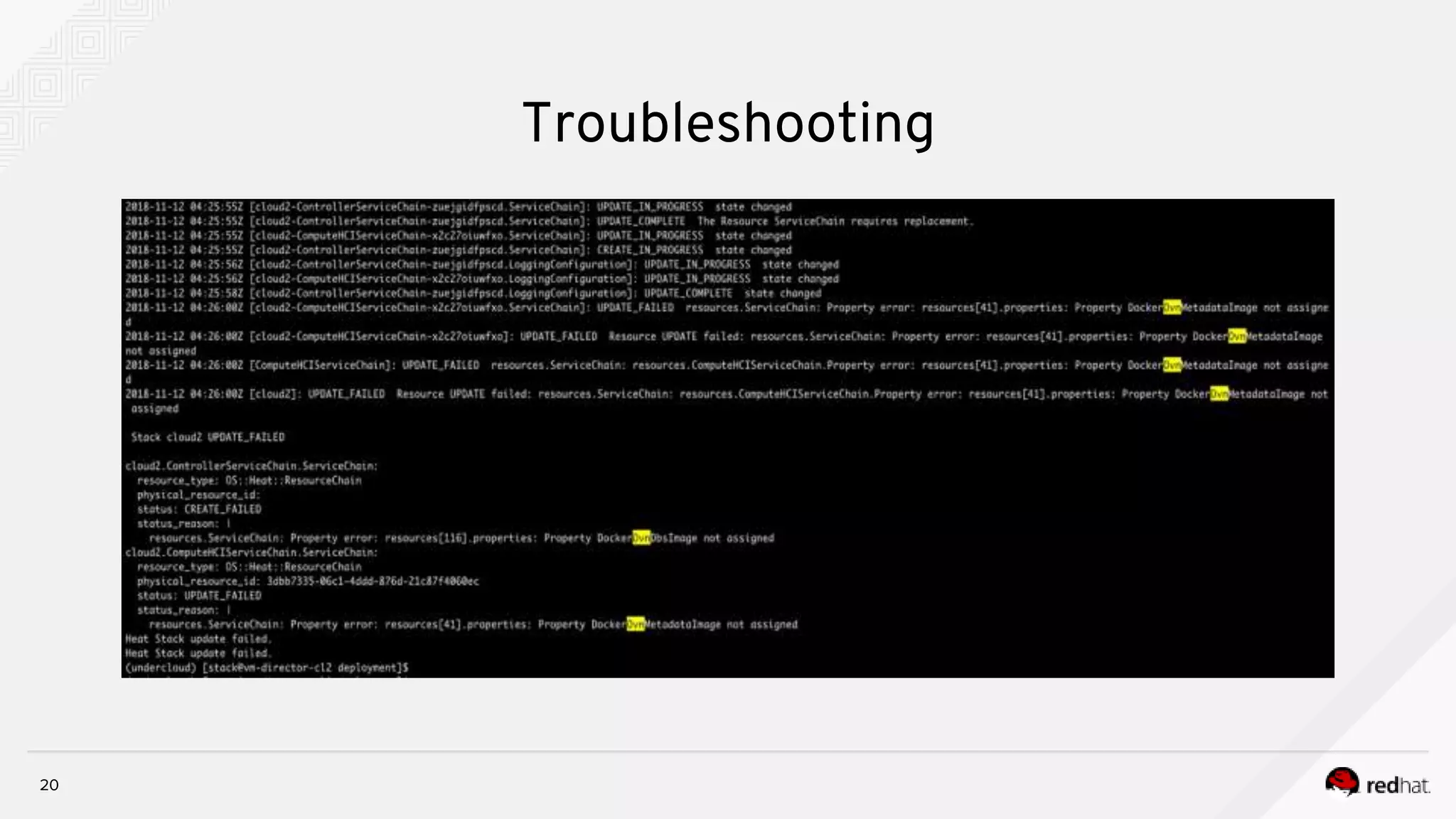

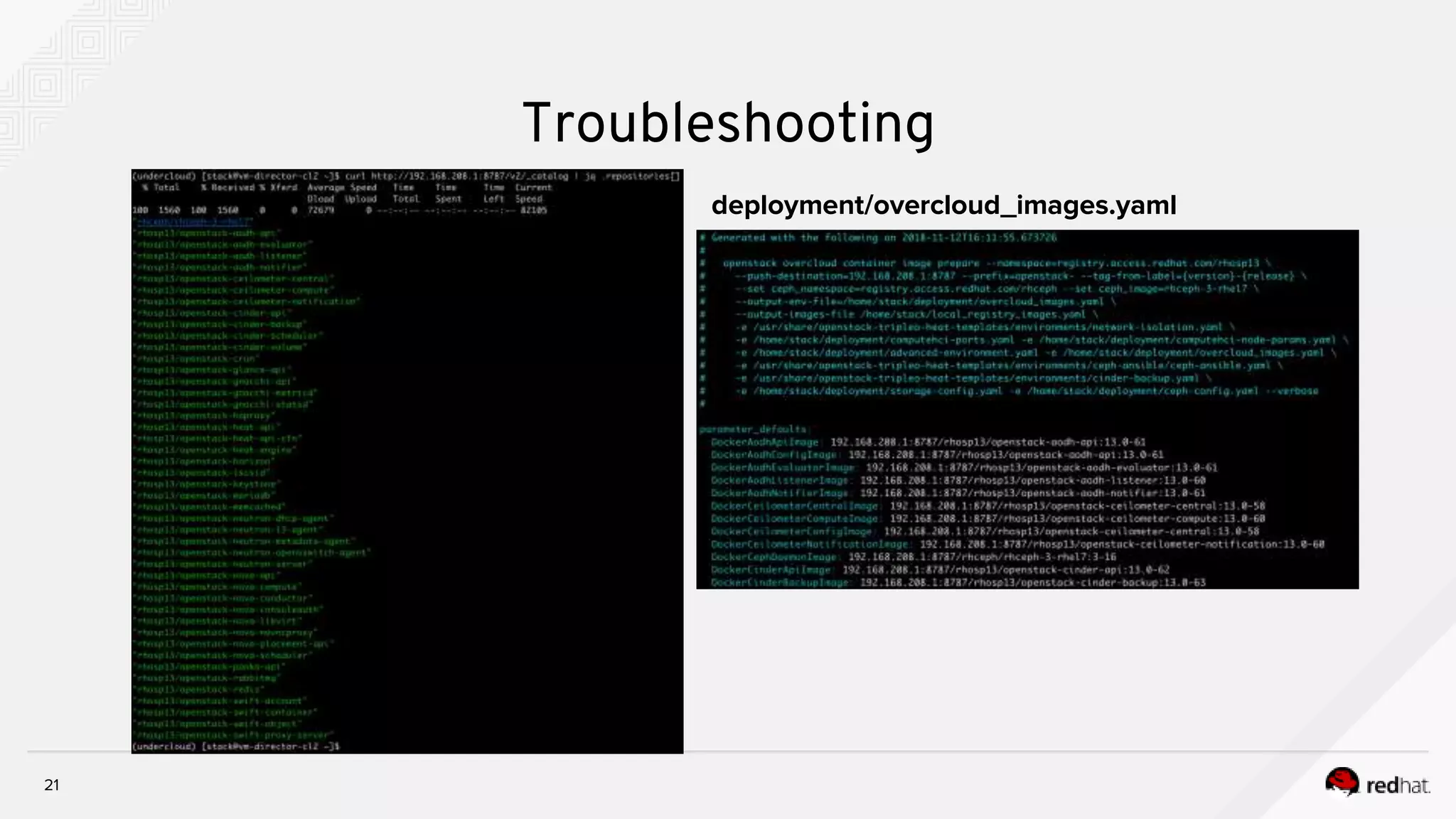

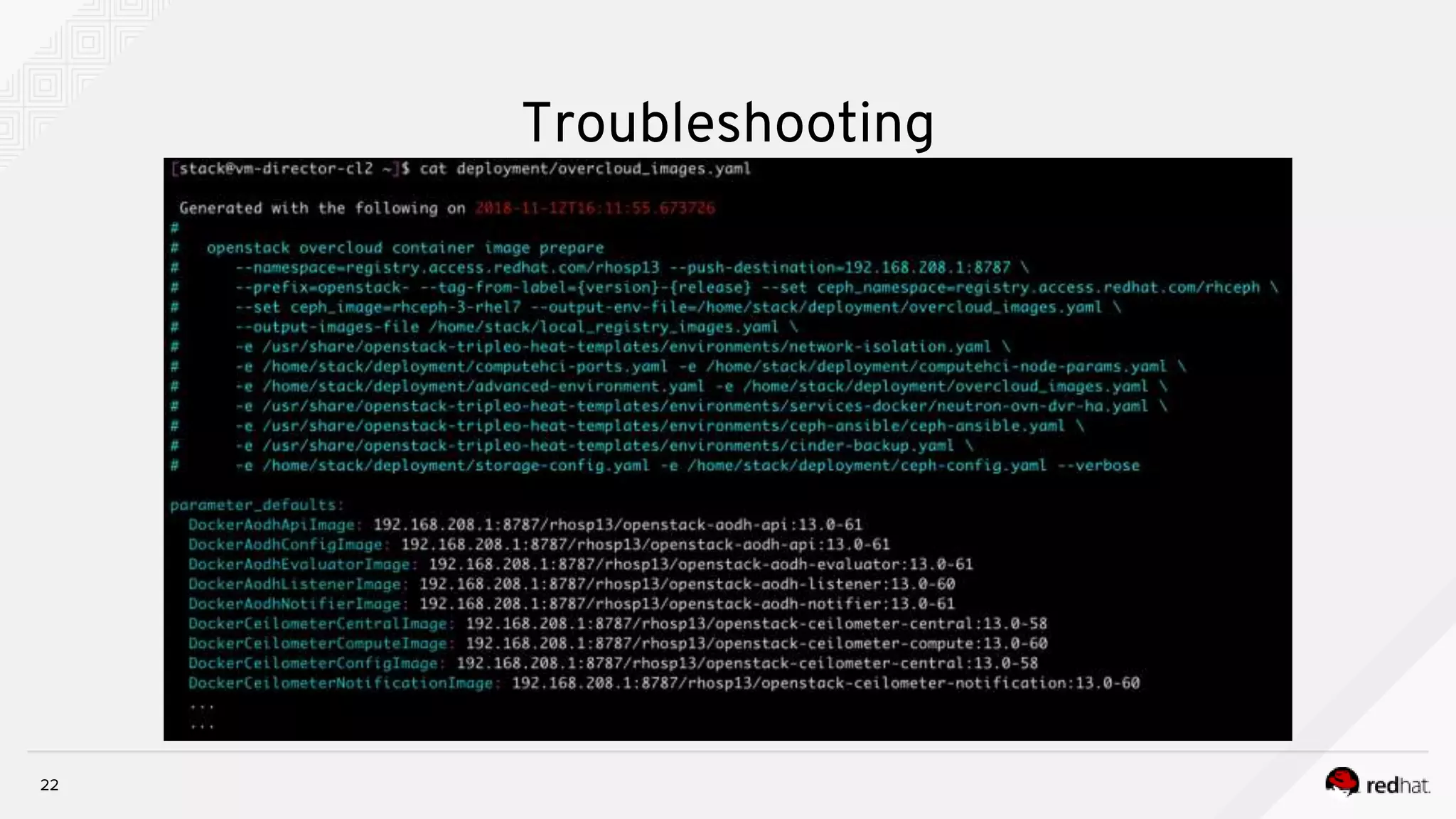

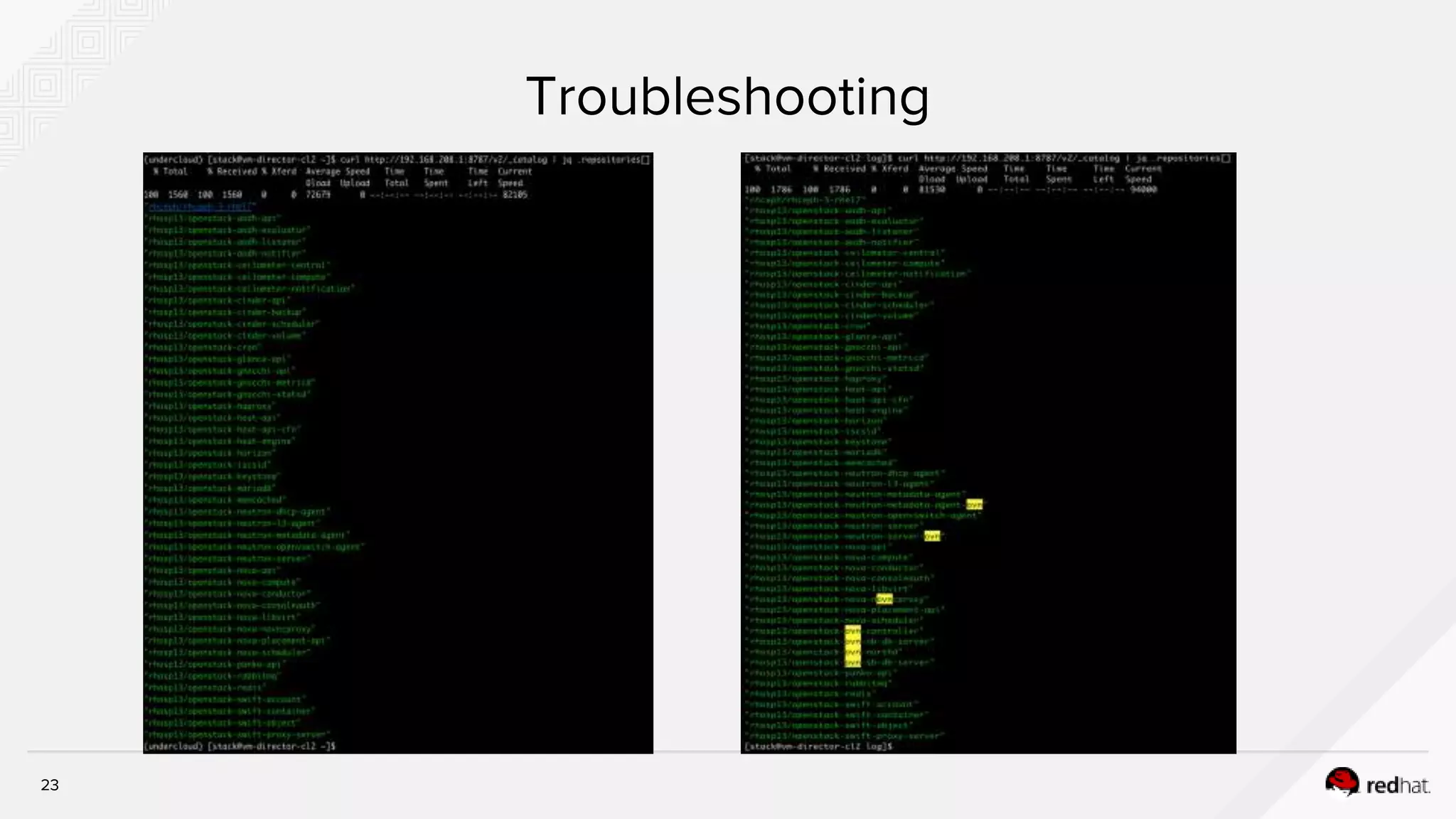

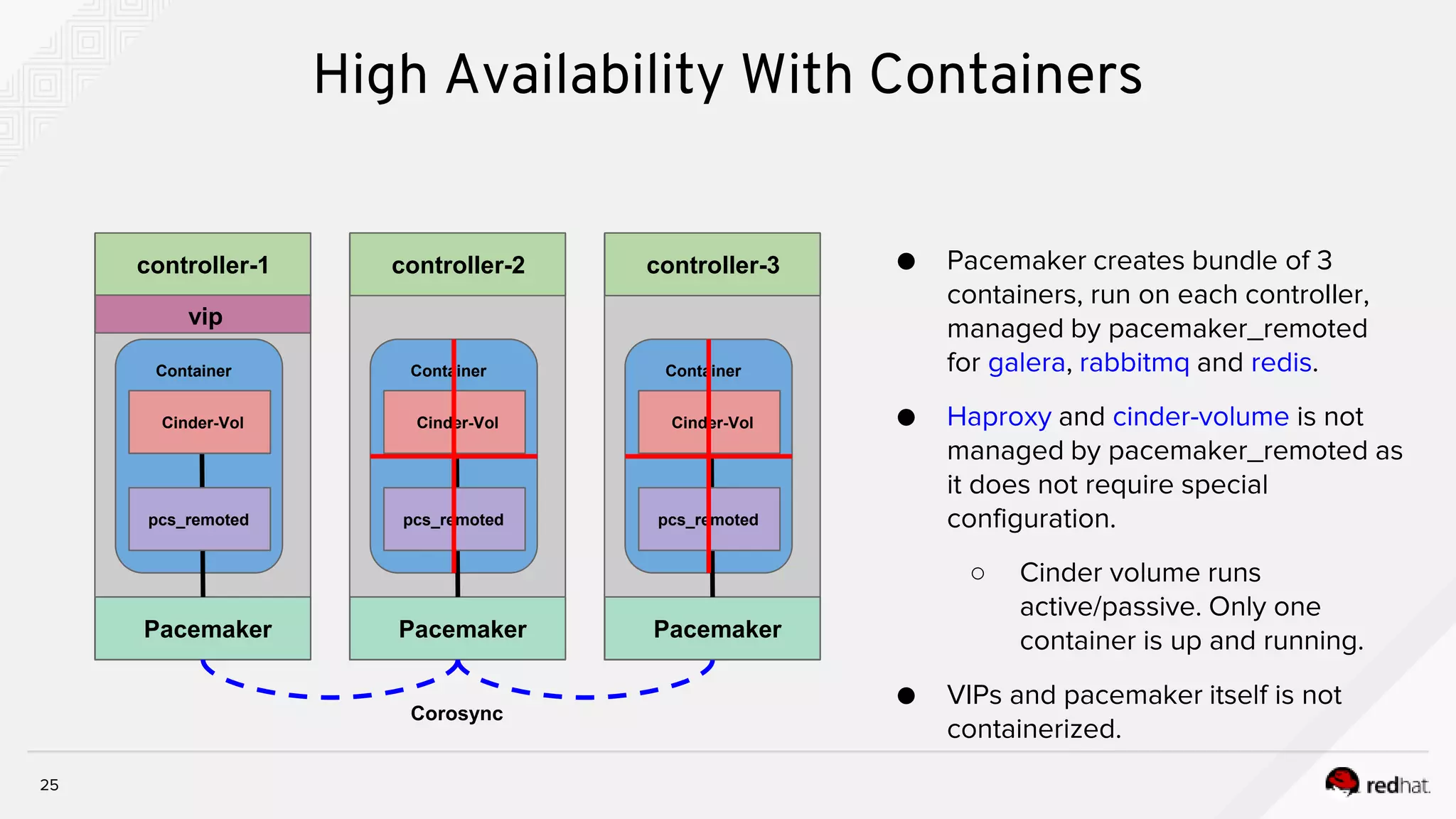

This document covers troubleshooting containerized TripleO deployments. It compares traditional and containerized deployments, then walks through building container images, registering images, the deployment flow, and troubleshooting. It also describes the containerized components of the overcloud: Pacemaker-managed HA containers, standalone containers, containerized Compute and Ceph nodes, and Neutron containers. Specific troubleshooting steps and files are outlined.

![14

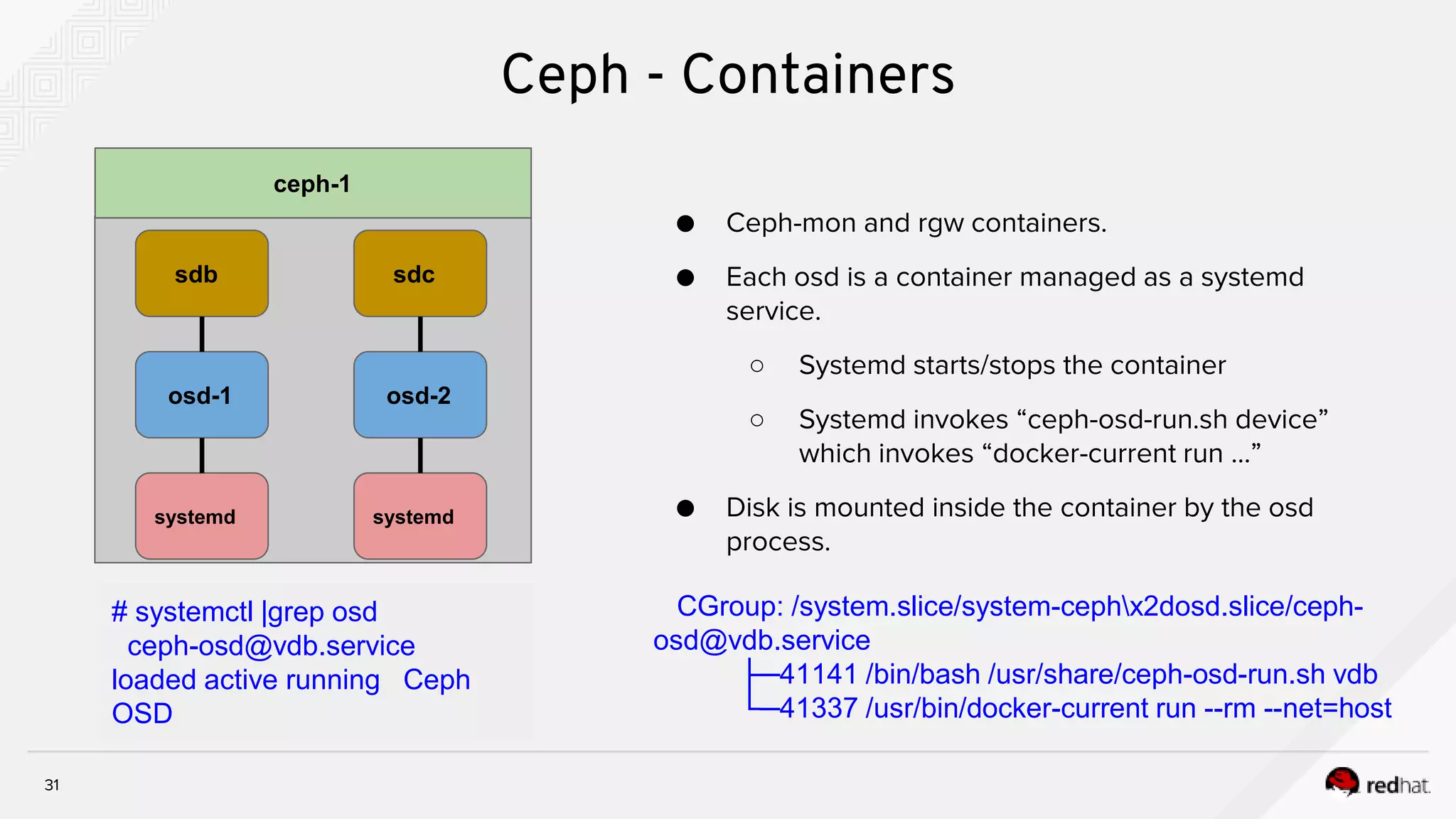

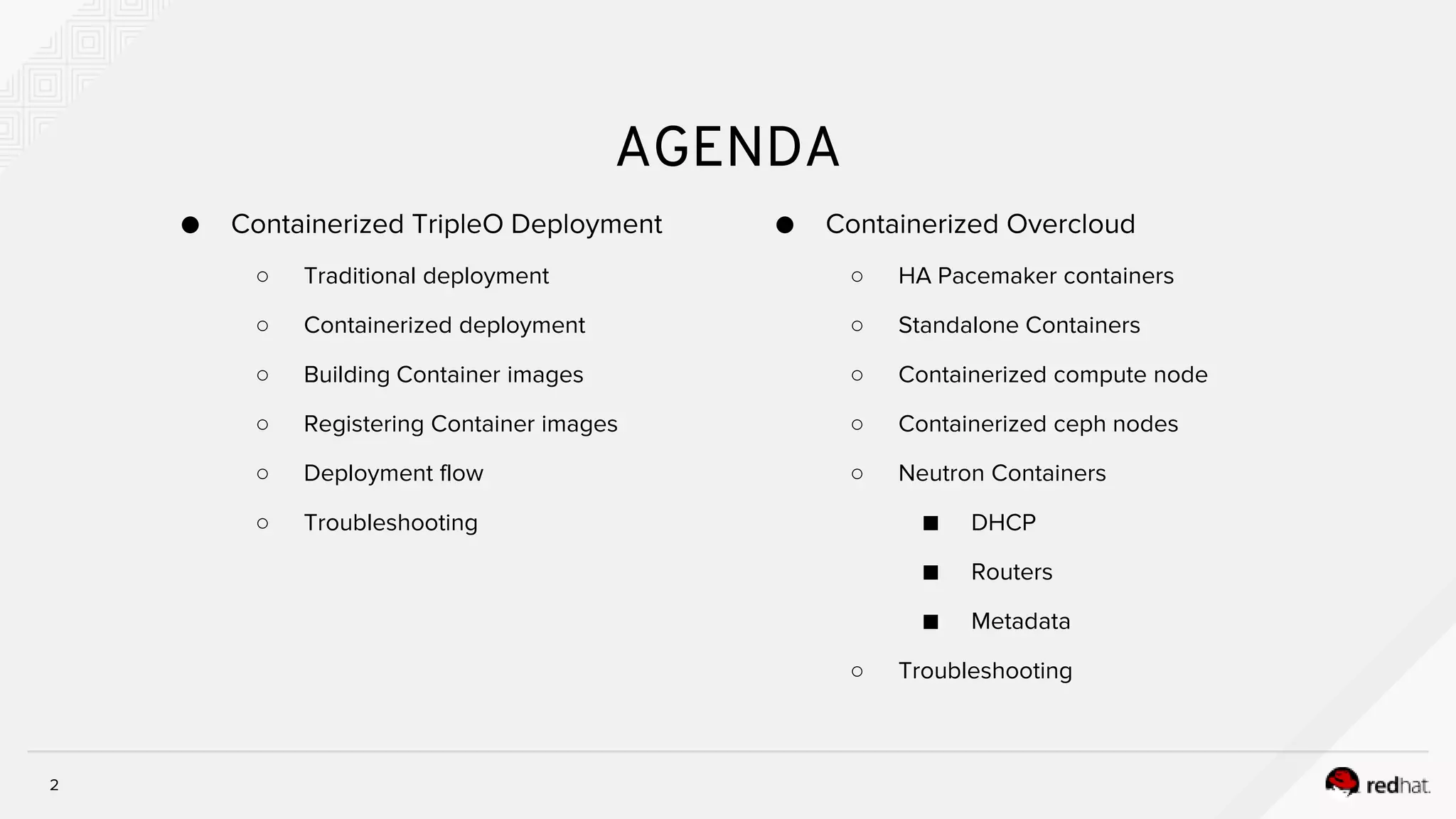

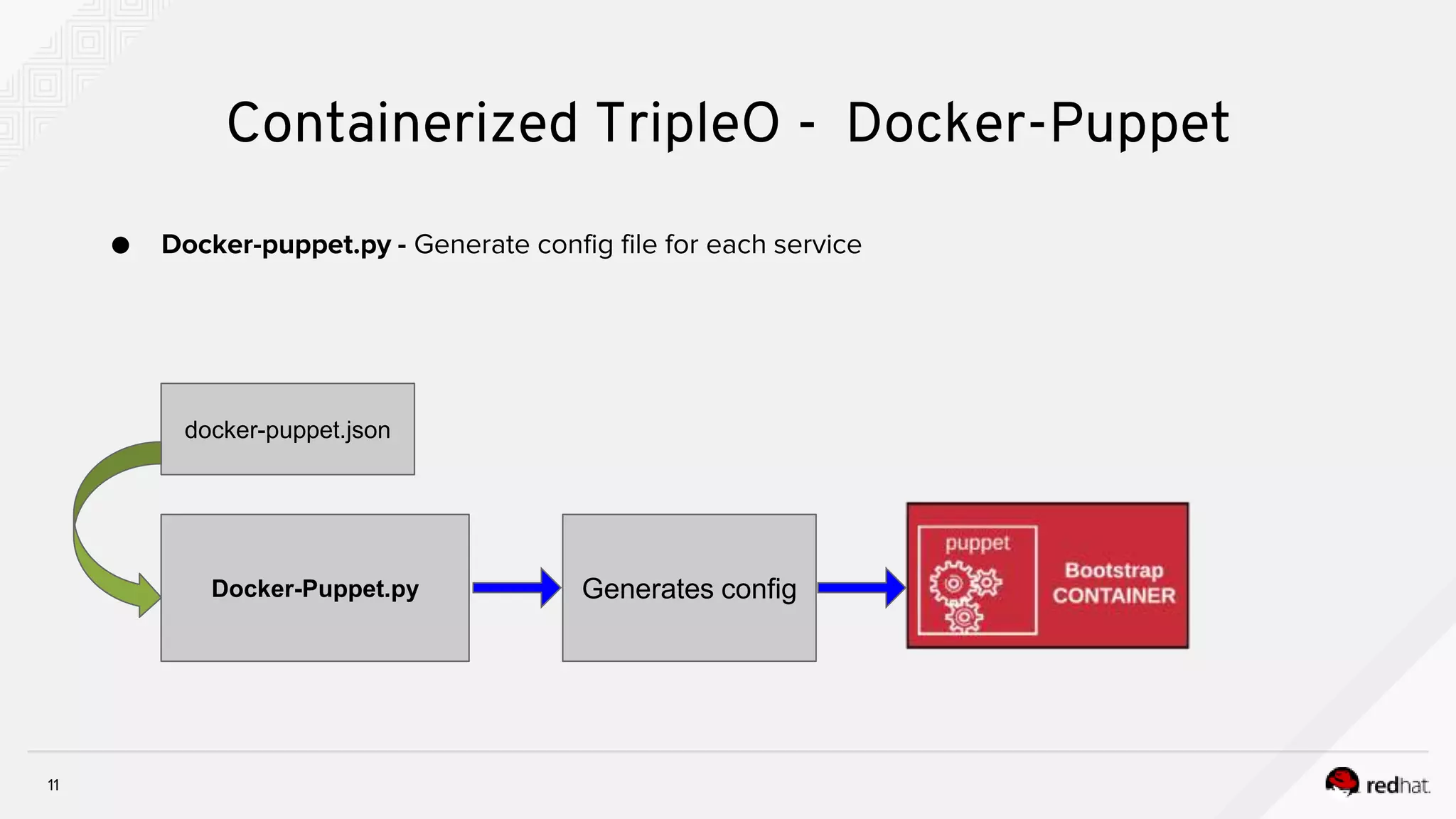

Containerized TripleO - Kolla_start

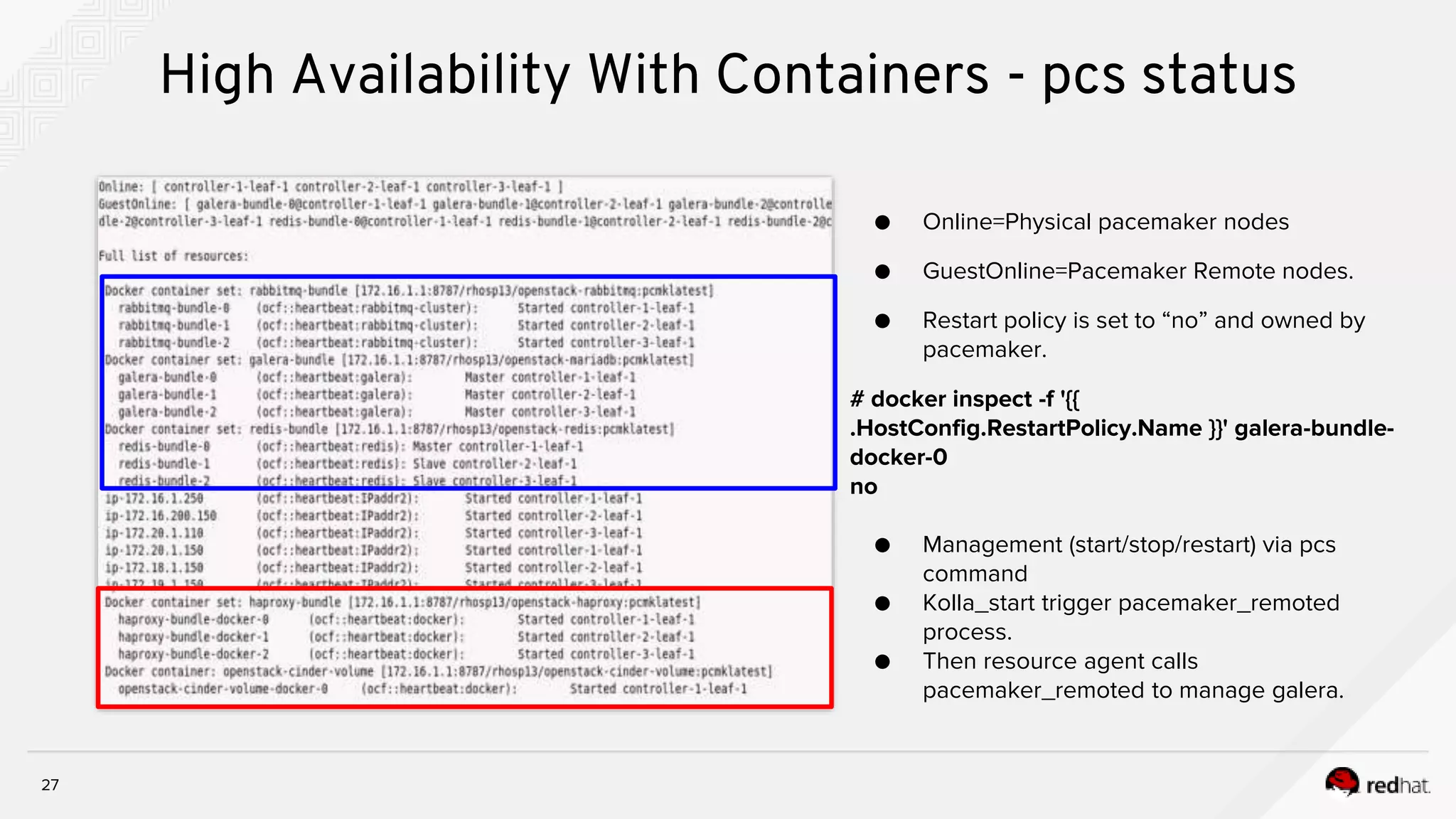

# jq . neutron_api.json

{

"permissions": [

{

"recurse": true,

"path": "/var/log/neutron",

"owner": "neutron:neutron"

}

],

"command": "/usr/bin/neutron-server --config-file /usr/share/neutron/neutron-dist.conf --config-dir

/usr/share/neutron/server --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugin.ini --config-dir

/etc/neutron/conf.d/common --config-dir /etc/neutron/conf.d/neutron-server --log-file=/var/log/neutron/server.log",

"config_files": [

{

"preserve_properties": true,

"merge": true,

"source": "/var/lib/kolla/config_files/src/*",

"dest": "/"

}

]

}](https://image.slidesharecdn.com/troubleshootingcontainerizedtripleodeployment-181121094436/75/Troubleshooting-containerized-triple-o-deployment-14-2048.jpg)
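The `config.json` above drives what `kolla_start` does when the container starts. As a rough illustration only (this is not Kolla's actual implementation), the Python sketch below reads a config of that shape and lists the steps it implies: copy each `config_files` entry, apply each `permissions` entry, then run `command`. The helper name `plan_kolla_start` is hypothetical, and the command string is shortened for readability.

```python
import json

# Trimmed copy of the neutron_api.json structure shown above.
# Assumption: the command is shortened here to keep the example readable.
EXAMPLE_CONFIG = """
{
  "permissions": [
    {"recurse": true, "path": "/var/log/neutron", "owner": "neutron:neutron"}
  ],
  "command": "/usr/bin/neutron-server --config-file /etc/neutron/neutron.conf",
  "config_files": [
    {"preserve_properties": true, "merge": true,
     "source": "/var/lib/kolla/config_files/src/*", "dest": "/"}
  ]
}
"""


def plan_kolla_start(config_text):
    """Return human-readable steps implied by a Kolla-style config.json.

    Hypothetical helper: it only describes the steps. It does not copy
    files, change ownership, or start any process.
    """
    config = json.loads(config_text)
    steps = []
    # 1. Configuration files are copied from source to dest.
    for entry in config.get("config_files", []):
        steps.append("copy {} -> {}".format(entry["source"], entry["dest"]))
    # 2. Ownership is applied to the listed paths.
    for entry in config.get("permissions", []):
        suffix = " (recursive)" if entry.get("recurse") else ""
        steps.append("chown {} {}{}".format(entry["owner"], entry["path"], suffix))
    # 3. The service command is started last.
    steps.append("exec {}".format(config["command"]))
    return steps


if __name__ == "__main__":
    for step in plan_kolla_start(EXAMPLE_CONFIG):
        print(step)
```

When a container fails to start, reading its `config.json` this way gives three things to check in order: whether the source files exist under `/var/lib/kolla/config_files/src`, whether the log directory has the expected owner, and whether the command line itself is valid.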

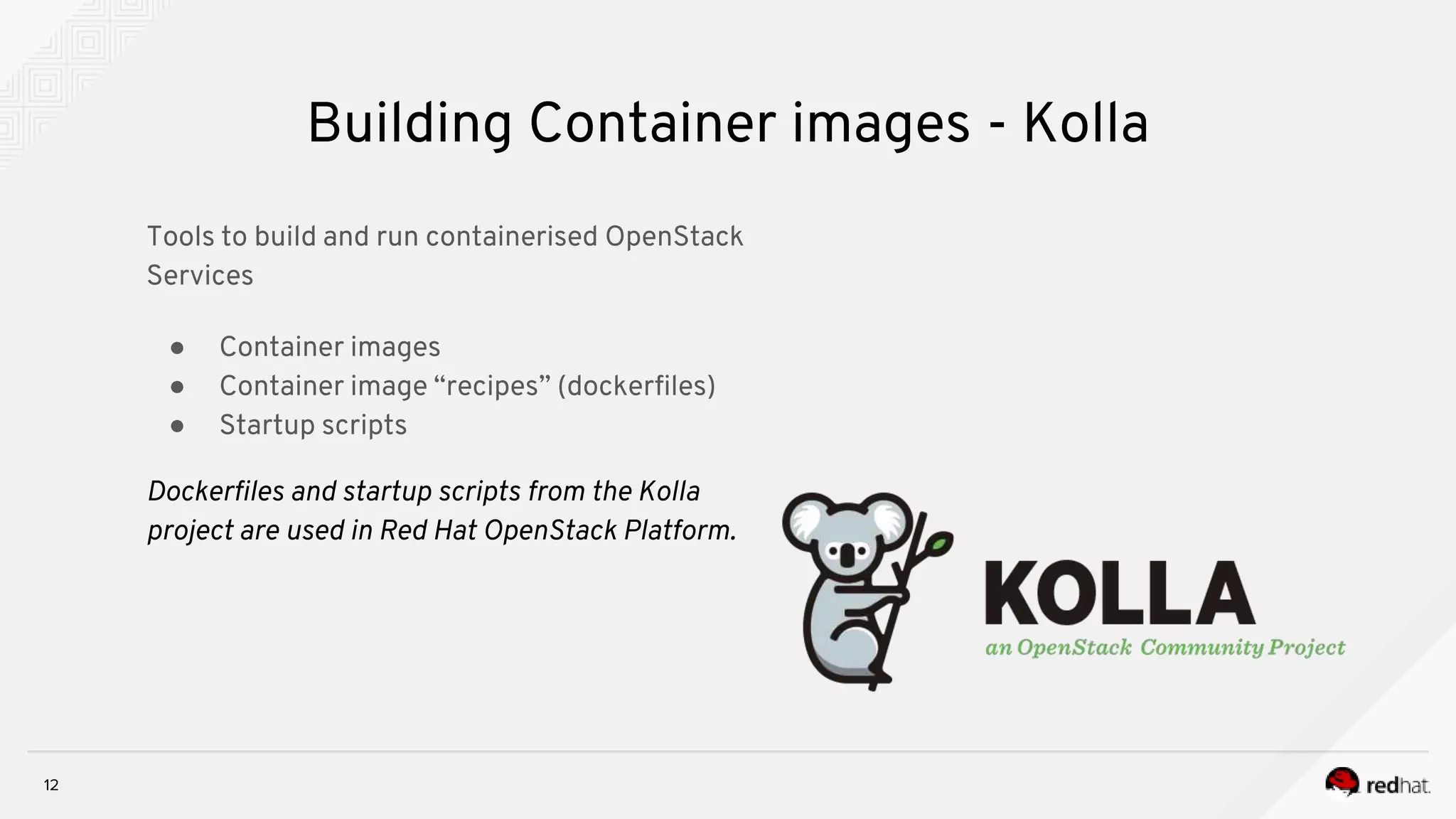

![19

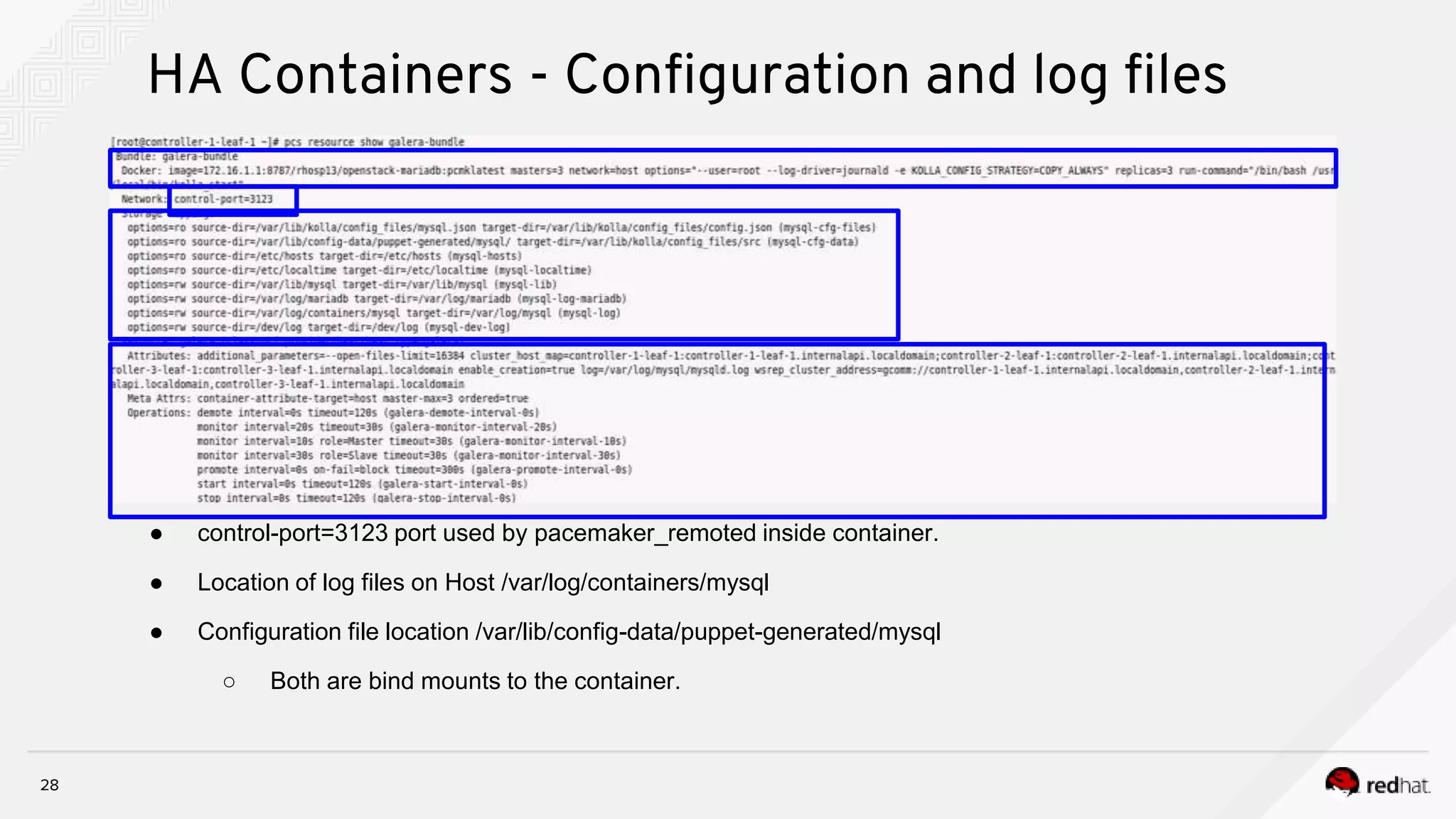

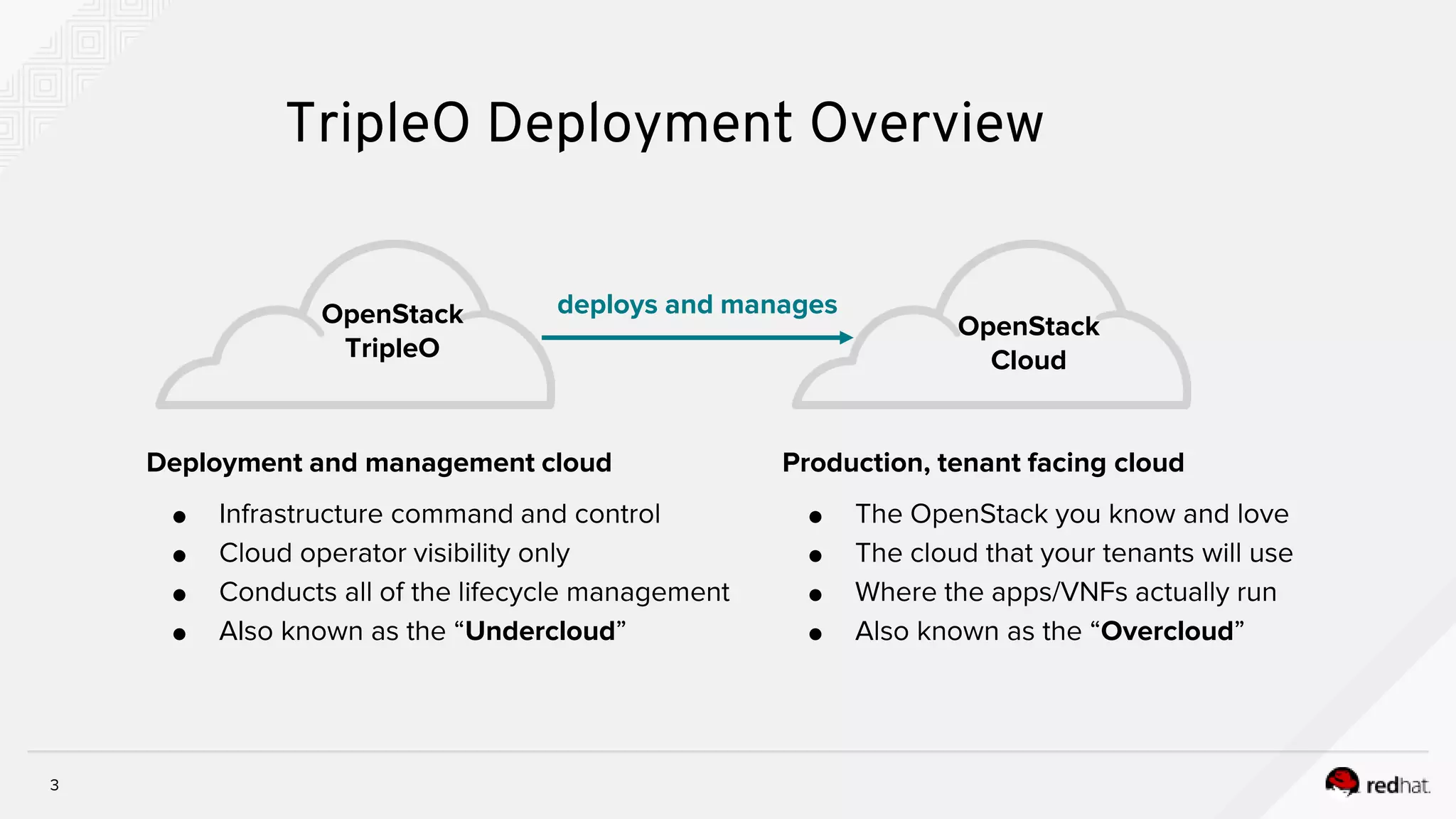

[stack@vm-director-cl2 ]$ cat /home/stack/deployment/deploy.sh

source ~/stackrc

openstack overcloud deploy --templates /usr/share/openstack-tripleo-heat-templates

--stack cloud2

-r /home/stack/deployment/roles_data.yaml

-e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml

-e /home/stack/deployment/computehci-node-params.yaml

-e /home/stack/deployment/advanced-environment.yaml

-e /usr/share/openstack-tripleo-heat-templates/environments/services-docker/neutron-ovn-dvr-ha.yaml

-e /home/stack/deployment/overcloud_images.yaml

-n /home/stack/deployment/network_data.yaml

--timeout 120

--control-scale 3

--control-flavor control

--compute-scale 0

--compute-flavor compute

--ceph-storage-scale 0

--ceph-storage-flavor ceph-storage

--ntp-server clock.redhat.com

Troubleshooting](https://image.slidesharecdn.com/troubleshootingcontainerizedtripleodeployment-181121094436/75/Troubleshooting-containerized-triple-o-deployment-19-2048.jpg)
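A deploy script like the one above references several template and environment files, and a missing or mistyped path is an easy thing to rule out before a long deployment run. The Python sketch below is one possible pre-flight check, not part of TripleO: it extracts the paths passed to `--templates`, `-r`, `-e` and `-n` from the script text so they can be tested for existence. The helper name `referenced_paths` and the shortened sample script are assumptions made for the example.

```python
import os
import shlex

# Options in the deploy command above that are followed by a path.
PATH_OPTIONS = {"--templates", "-r", "-e", "-n"}

# Shortened stand-in for deploy.sh (assumption: fewer -e files than the original).
SAMPLE_SCRIPT = """source ~/stackrc
openstack overcloud deploy --templates /usr/share/openstack-tripleo-heat-templates \\
  --stack cloud2 \\
  -r /home/stack/deployment/roles_data.yaml \\
  -e /home/stack/deployment/overcloud_images.yaml \\
  -n /home/stack/deployment/network_data.yaml \\
  --timeout 120
"""


def referenced_paths(script_text):
    """Return the paths given to path-taking options of the deploy command."""
    # Join backslash-continued lines so each command sits on a single line.
    joined = script_text.replace("\\\n", " ")
    paths = []
    for line in joined.splitlines():
        tokens = shlex.split(line)
        if tokens[:3] != ["openstack", "overcloud", "deploy"]:
            continue
        # Pair every token with its successor and keep option/value pairs.
        for option, value in zip(tokens, tokens[1:]):
            if option in PATH_OPTIONS and not value.startswith("-"):
                paths.append(value)
    return paths


if __name__ == "__main__":
    for path in referenced_paths(SAMPLE_SCRIPT):
        status = "ok" if os.path.exists(path) else "MISSING"
        print("{:8} {}".format(status, path))
```

Run on the director node against the real `deploy.sh`, this would list each referenced file with an `ok` or `MISSING` marker.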

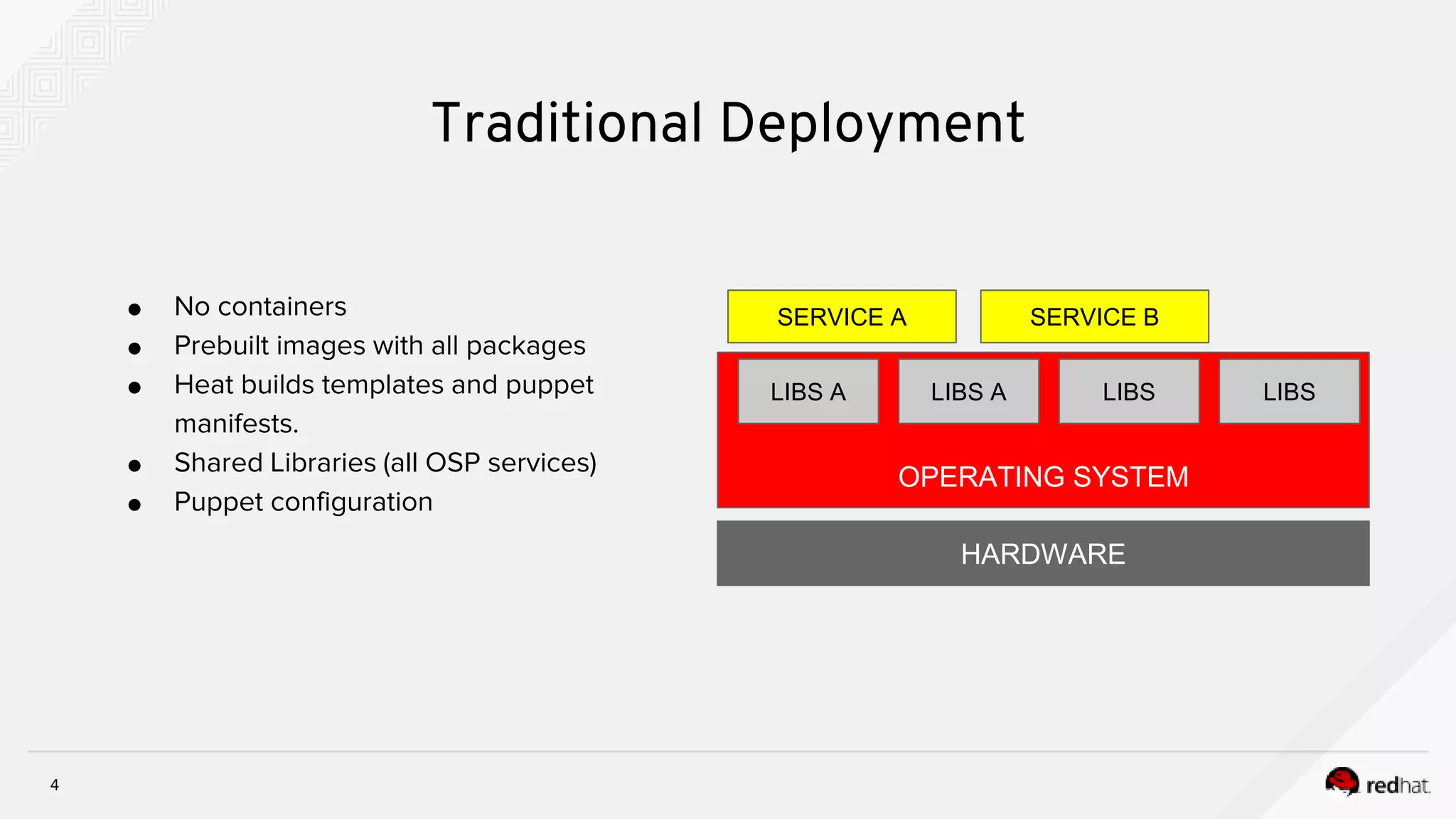

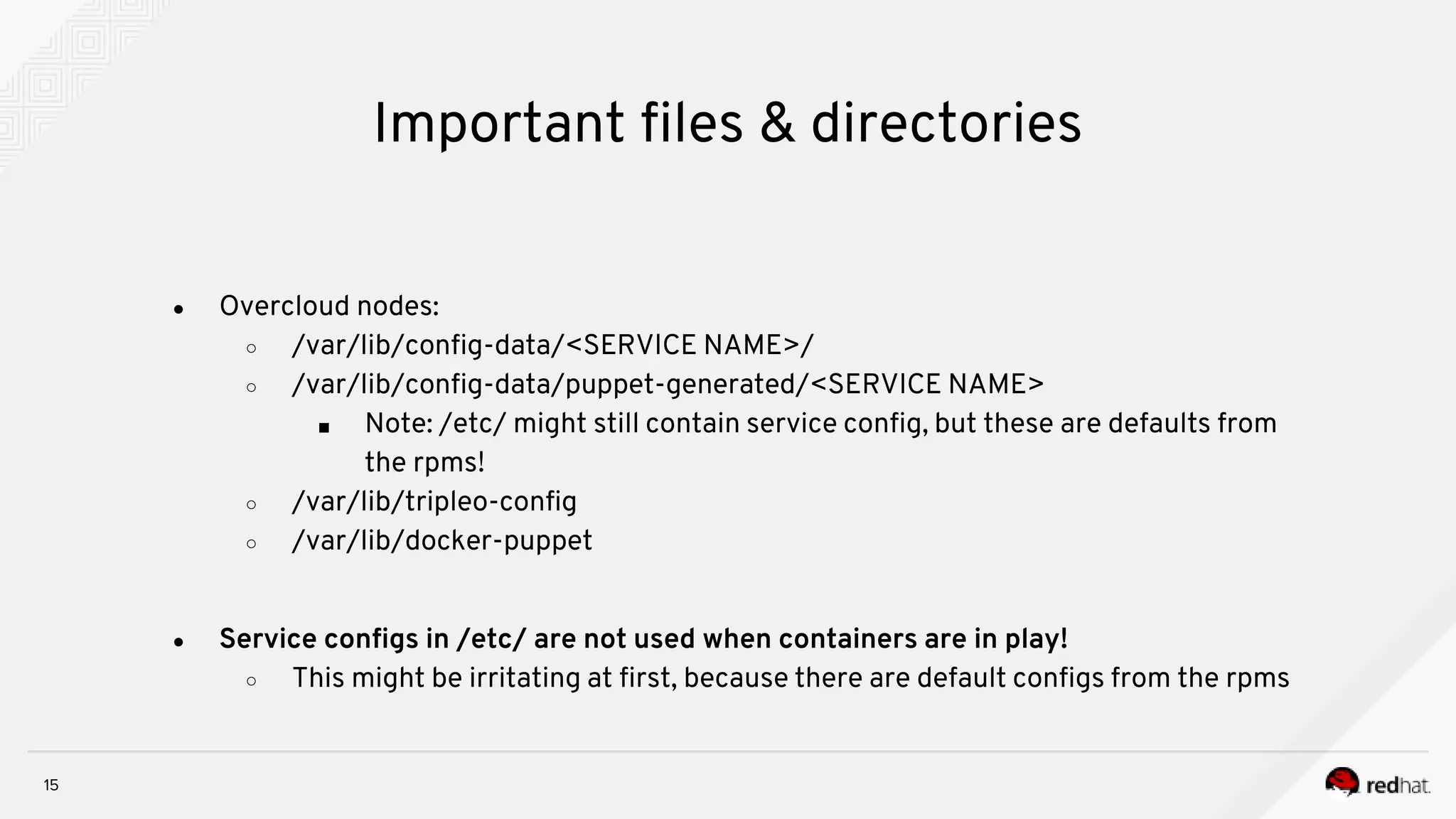

![26

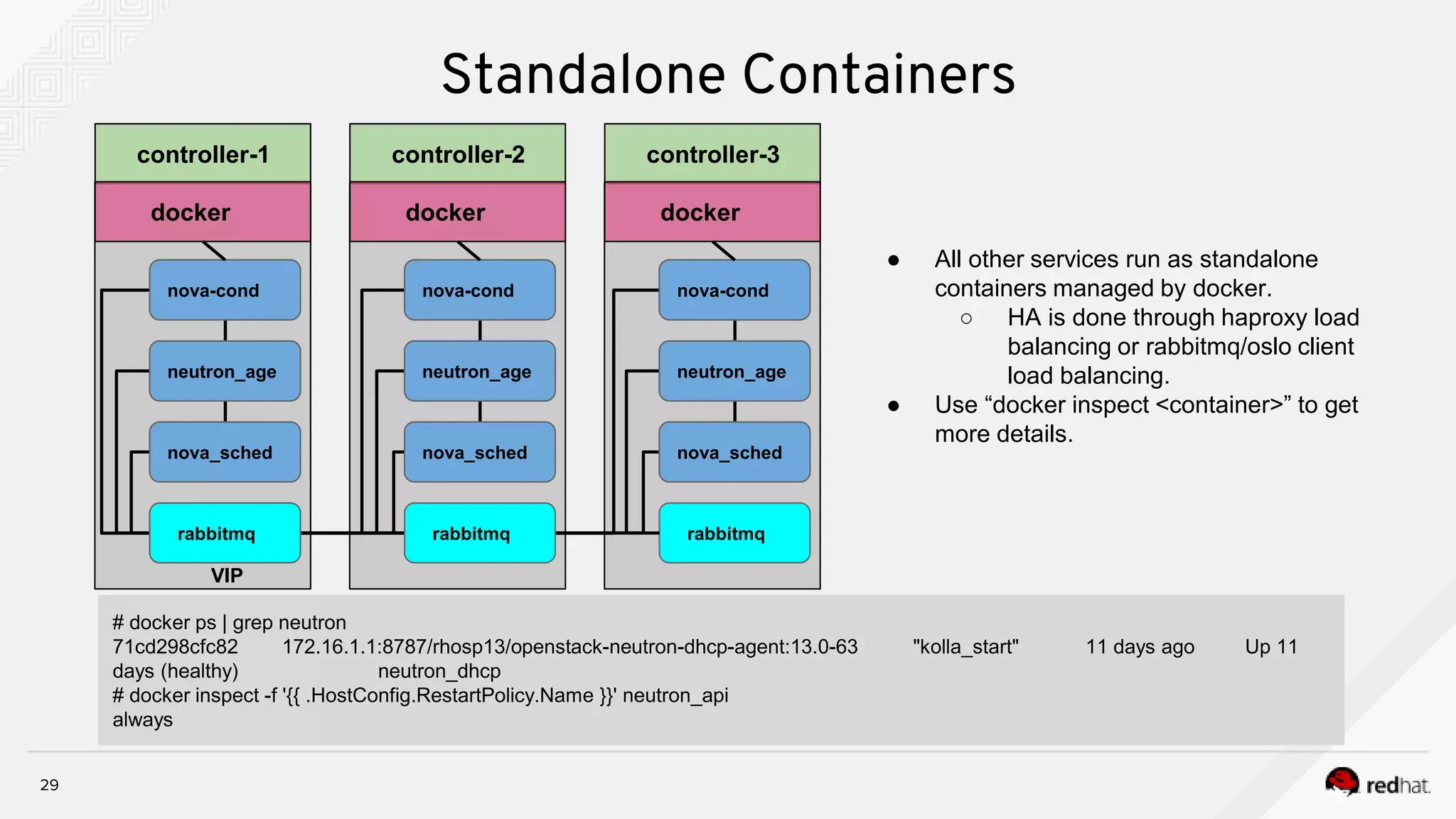

High Availability With Containers

Container

config.json

Config Dir

kolla_start copy config Set perm process

"permissions": [

{

"recurse": true,

"path": "/var/log/mysql",

"owner": "mysql:mysql"

},

"command": "/usr/sbin/pacemaker_remoted",

"config_files": [

{

"perm": "0644",

"owner": "root",

"source": "/dev/null",

"dest": "/etc/libqb/force-filesystem-sockets"

},

pacemaker remoted Galera

{

"preserve_properties": true,

"merge": true,

"source": "/var/lib/kolla/config_files/src/*",

"dest": "/"

},

[root@controller-2-leaf-1 ~]# docker ps --no-trunc | grep galera

0843f6314b3770380927542bad92411d3edb3d2d4de0bb064c00e2fdae041b18 172.16.1.1:8787/rhosp13/openstack-

mariadb:pcmklatest "/bin/bash /usr/local/bin/kolla_start”

options=ro source-dir=/var/lib/kolla/config_files/mysql.json target-dir=/var/lib/kolla/config_files/config.json (mysql-cfg-files)

options=ro source-dir=/var/lib/config-data/puppet-generated/mysql/ target-dir=/var/lib/kolla/config_files/src (mysql-cfg-data)

options=ro source-dir=/etc/hosts target-dir=/etc/hosts (mysql-hosts)

options=ro source-dir=/etc/localtime target-dir=/etc/localtime (mysql-localtime)

options=rw source-dir=/var/lib/mysql target-dir=/var/lib/mysql (mysql-lib)

options=rw source-dir=/var/log/containers/mysql target-dir=/var/log/mysql (mysql-log)

….

….](https://image.slidesharecdn.com/troubleshootingcontainerizedtripleodeployment-181121094436/75/Troubleshooting-containerized-triple-o-deployment-26-2048.jpg)
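The storage mappings listed above tell you where a path inside the Galera container lives on the host, which is usually the first thing needed when looking for logs or generated configuration. As a small sketch (the regular expression and the helper names `parse_mappings` and `host_path_for` are assumptions for illustration, not an existing tool), the Python below parses lines in that `options=... source-dir=... target-dir=... (name)` form and looks up the host path for a given container path.

```python
import re

# Matches one mapping line in the form shown above.
MAPPING_RE = re.compile(
    r"options=(?P<options>\S+)\s+"
    r"source-dir=(?P<source>\S+)\s+"
    r"target-dir=(?P<target>\S+)\s+"
    r"\((?P<name>[^)]+)\)"
)

# Two of the mapping lines from the listing above.
SAMPLE_MAPPINGS = """\
options=ro source-dir=/var/lib/kolla/config_files/mysql.json target-dir=/var/lib/kolla/config_files/config.json (mysql-cfg-files)
options=rw source-dir=/var/log/containers/mysql target-dir=/var/log/mysql (mysql-log)
"""


def parse_mappings(text):
    """Return one dict per mapping line: options, source, target, name."""
    return [match.groupdict() for match in MAPPING_RE.finditer(text)]


def host_path_for(text, container_path):
    """Return the host path mounted at container_path, or None if unmapped."""
    for mapping in parse_mappings(text):
        if mapping["target"] == container_path:
            return mapping["source"]
    return None


if __name__ == "__main__":
    # Where do the MySQL logs written inside the container end up on the host?
    print(host_path_for(SAMPLE_MAPPINGS, "/var/log/mysql"))
```

With the sample lines, the lookup for `/var/log/mysql` resolves to `/var/log/containers/mysql` on the host, and the container's `config.json` resolves to `/var/lib/kolla/config_files/mysql.json`.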