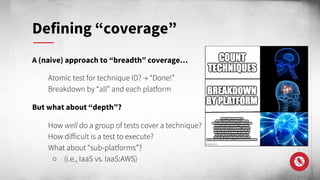

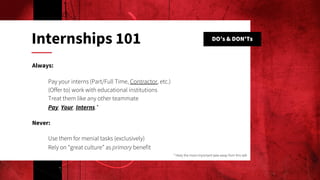

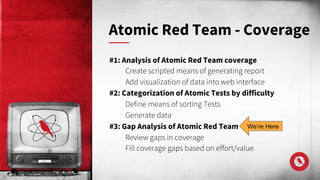

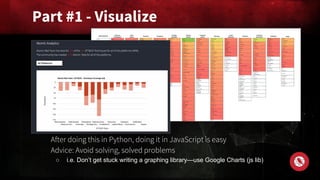

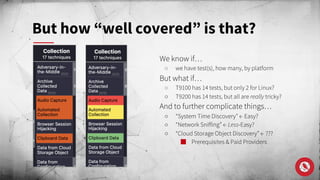

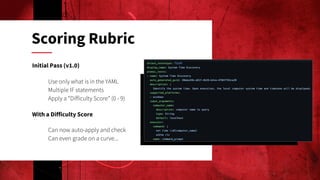

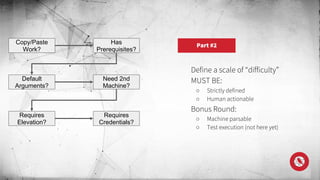

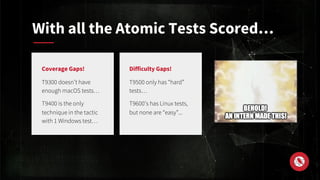

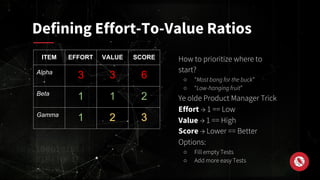

The document outlines a presentation on Atomic Red Team and the MITRE ATT&CK framework. It focuses on improving ATT&CK coverage analysis with a breadth and depth approach. Key topics include the community-driven development of atomic tests, the categorization of tests by difficulty, and strategies for identifying coverage gaps. The presenters also emphasize the importance of paying interns and of contributing to open-source efforts within the cybersecurity community.

![Preface Slide](https://image.slidesharecdn.com/whatisattckcoverageanyway-220406003027/85/What-is-ATT-CK-coverage-anyway-Breadth-and-depth-analysis-with-Atomic-Red-Team-4-320.jpg)

MITRE ATT&CK: A “common language” for (cyber)security practitioners, executives, and stakeholders. It is the classification system for the library of Atomic Red Team examples.

Atomic Red Team: An open source library of simple, focused tests mapped to the MITRE ATT&CK® matrix. Each test runs in five minutes or less, and many tests come with easy-to-use configuration and cleanup commands.

[Audience Interactive Slide: If you know what the “Dewey Decimal System” is, please feel old now!!!]
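The slide frames ATT&CK as the shelving scheme for Atomic Red Team tests, much like call numbers in a library, and the talk builds breadth and depth coverage analysis on top of that mapping. The sketch below illustrates the idea under stated assumptions. "Breadth" is taken to mean the fraction of techniques of interest with at least one test, "depth" the number of tests per technique, and "gaps" the techniques with no tests. These definitions are assumptions, not the presenters' stated method. The technique IDs are real ATT&CK IDs (T1059.001 PowerShell, T1003.001 LSASS Memory, T1053.005 Scheduled Task). The `AtomicTest` class, the helper functions, and the test names are hypothetical placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AtomicTest:
    technique_id: str  # ATT&CK technique ID, e.g. "T1059.001"
    name: str          # hypothetical placeholder test name


def index_by_technique(tests):
    """Shelve each test under its ATT&CK technique ID (its 'call number')."""
    index = defaultdict(list)
    for test in tests:
        index[test.technique_id].append(test.name)
    return dict(index)


def coverage(index, techniques):
    """Assumed metrics for illustration, not the presenters' definitions.

    breadth: fraction of the (deduplicated) techniques with at least one test
    depth:   number of tests per technique
    gaps:    techniques with no tests at all
    """
    depth = {t: len(index.get(t, [])) for t in techniques}
    gaps = [t for t, n in depth.items() if n == 0]
    breadth = (len(depth) - len(gaps)) / len(depth) if depth else 0.0
    return breadth, depth, gaps


if __name__ == "__main__":
    tests = [
        AtomicTest("T1059.001", "placeholder PowerShell test A"),
        AtomicTest("T1059.001", "placeholder PowerShell test B"),
        AtomicTest("T1003.001", "placeholder LSASS test"),
    ]
    techniques = ["T1059.001", "T1003.001", "T1053.005"]
    breadth, depth, gaps = coverage(index_by_technique(tests), techniques)
    print(f"breadth={breadth:.2f}", depth, gaps)
    # → breadth=0.67 {'T1059.001': 2, 'T1003.001': 1, 'T1053.005': 0} ['T1053.005']
```

Keying everything on the technique ID is what lets breadth and depth be read off the same index. Any technique with a depth of 0 lands in `gaps`, which is one simple way to surface coverage gaps. A fuller analysis might weight techniques by relevance or roll sub-techniques up to their parent technique.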