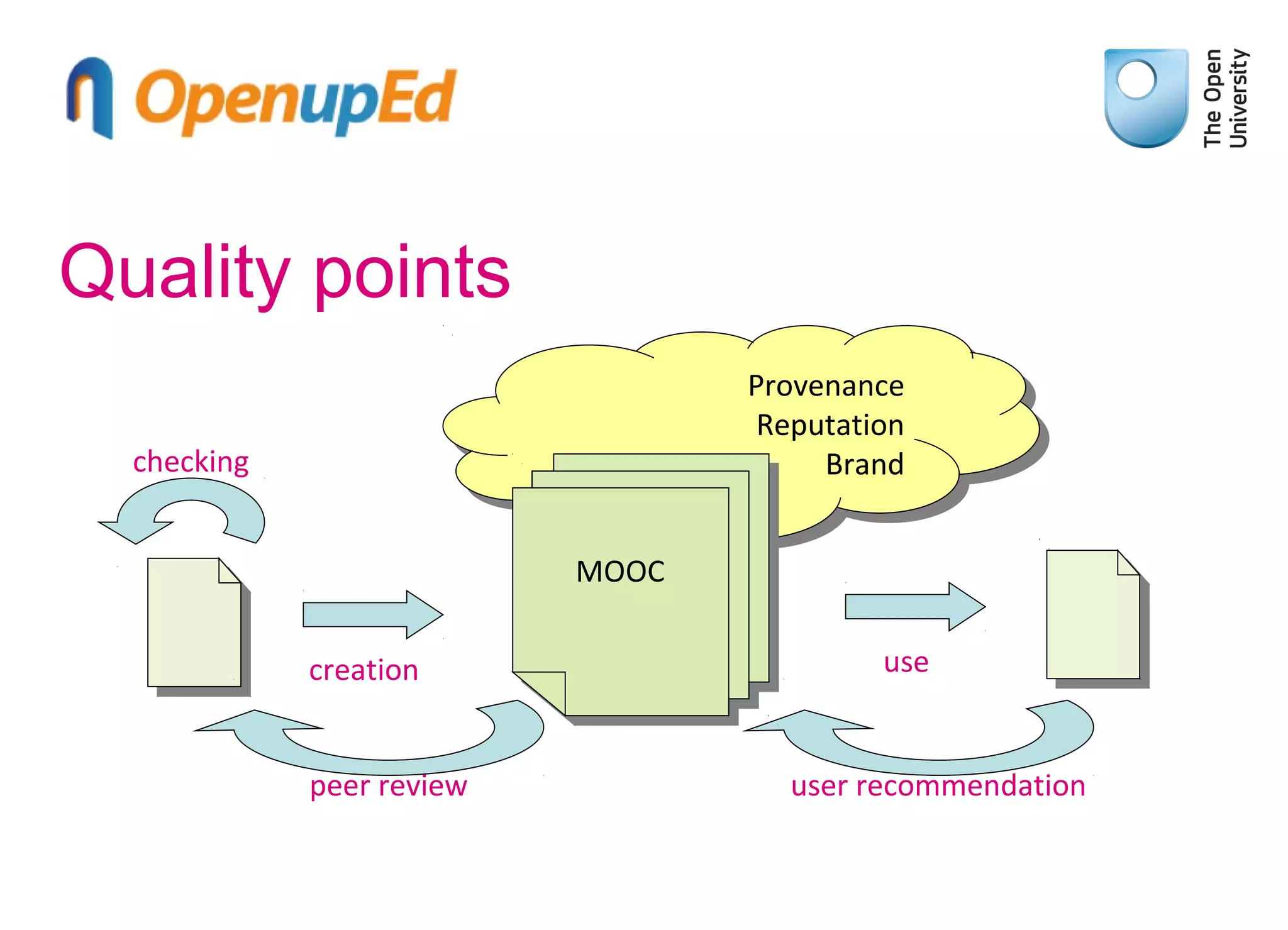

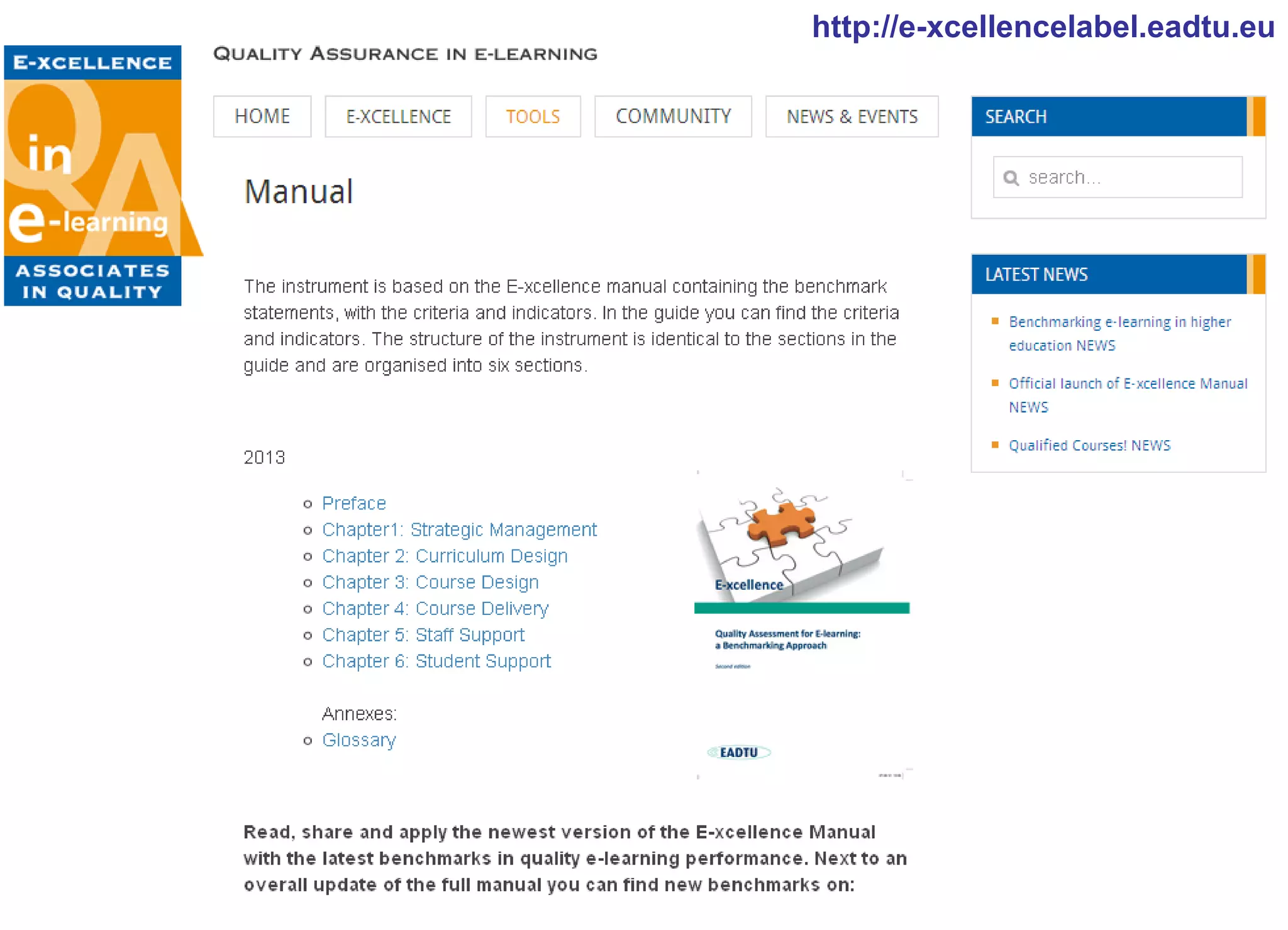

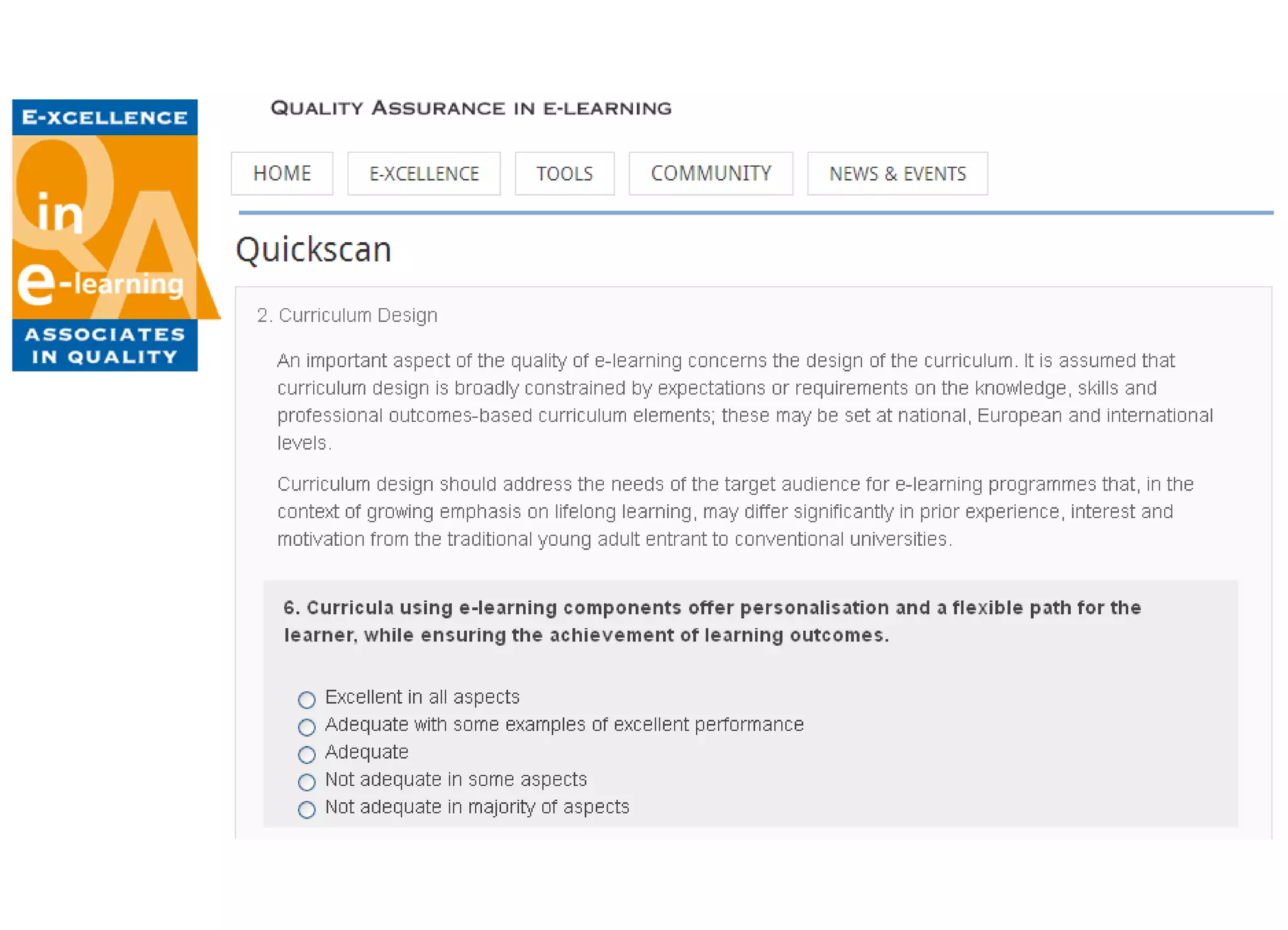

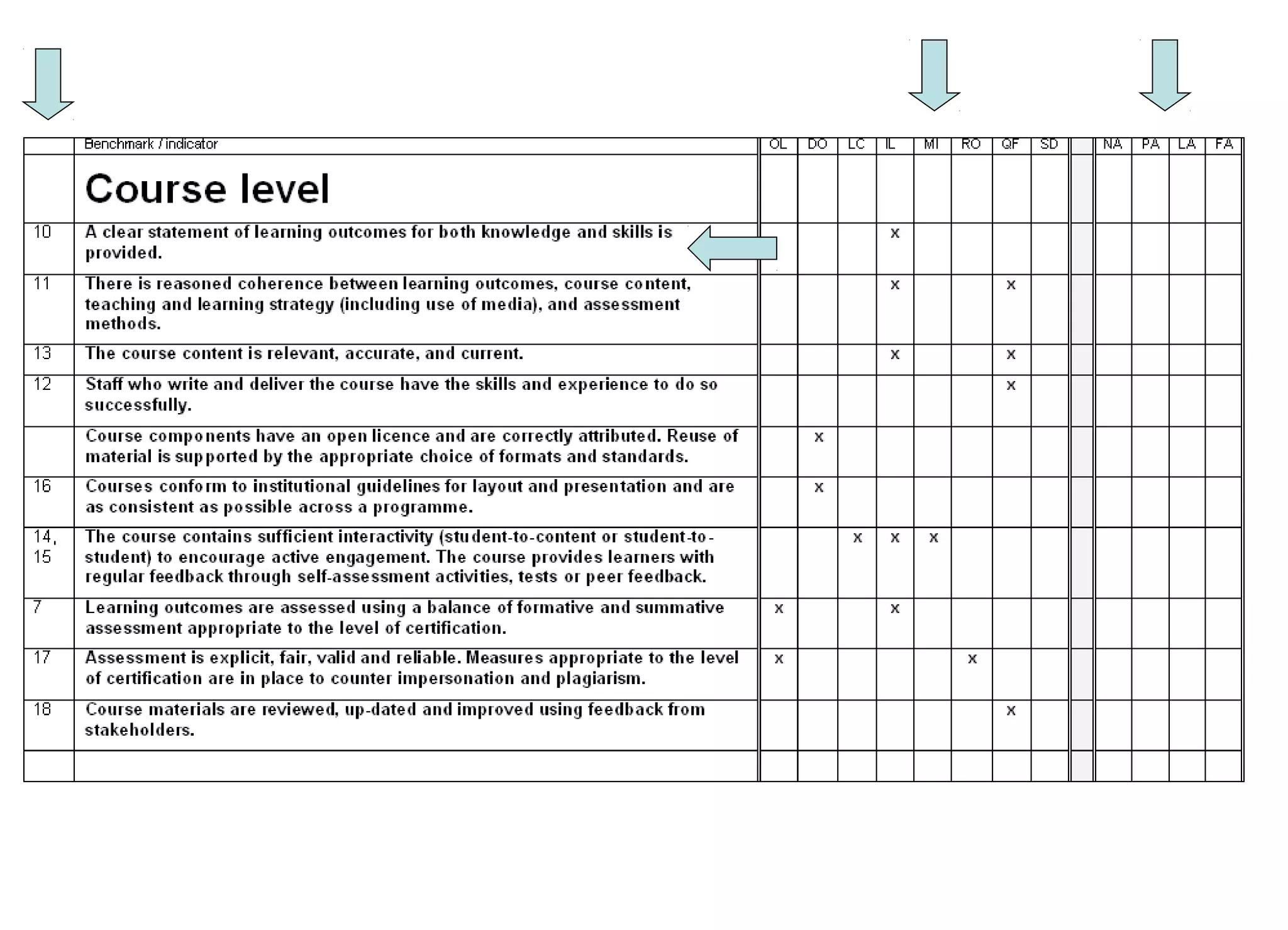

The document discusses the importance of quality benchmarking in MOOCs, emphasizing the perspectives of stakeholders such as students, employers, and institutions. It introduces the OpenupEd label, which combines self-assessment and peer review for MOOC quality assurance, and outlines the E-xcellence project, which develops criteria and resources for evaluating MOOC quality. It also addresses challenges such as completion rates and the terminology used to describe participants.