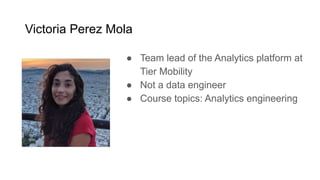

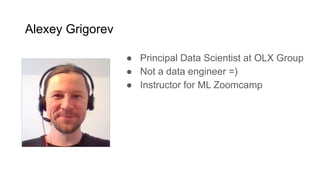

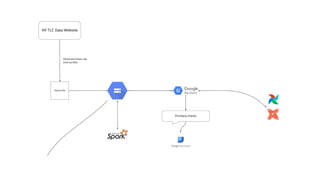

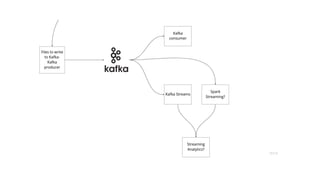

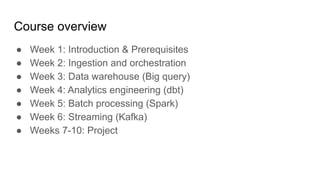

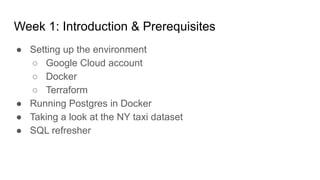

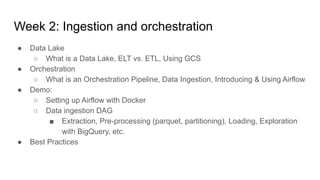

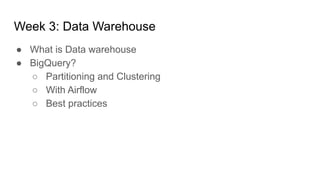

The document outlines the plan and syllabus for the Data Engineering Zoomcamp hosted by DataTalks.Club. It introduces the course's four instructors: Ankush Khanna, Sejal Vaidya, Victoria Perez Mola, and Alexey Grigorev. The 10-week course covers data ingestion, data warehousing with BigQuery, analytics engineering with dbt, batch processing with Spark, and streaming with Kafka, and it culminates in a 3-week student project. Prerequisites are experience with Python, SQL, and the command line. Course materials are delivered as pre-recorded videos, with weekly live office hours for support. Students can earn a certificate and compete on a