The document discusses consumer offset management in Kafka, focusing on the challenges of storing offsets and on the trade-offs between keeping them in ZooKeeper and keeping them in Kafka itself. It argues that an offset store must be durable, sustain a high write load, serve consistent reads, and commit atomically. It also covers the handling of dead consumers, recommended topic settings, strategies for migrating between ZooKeeper and Kafka, and the key metrics to monitor during migration.

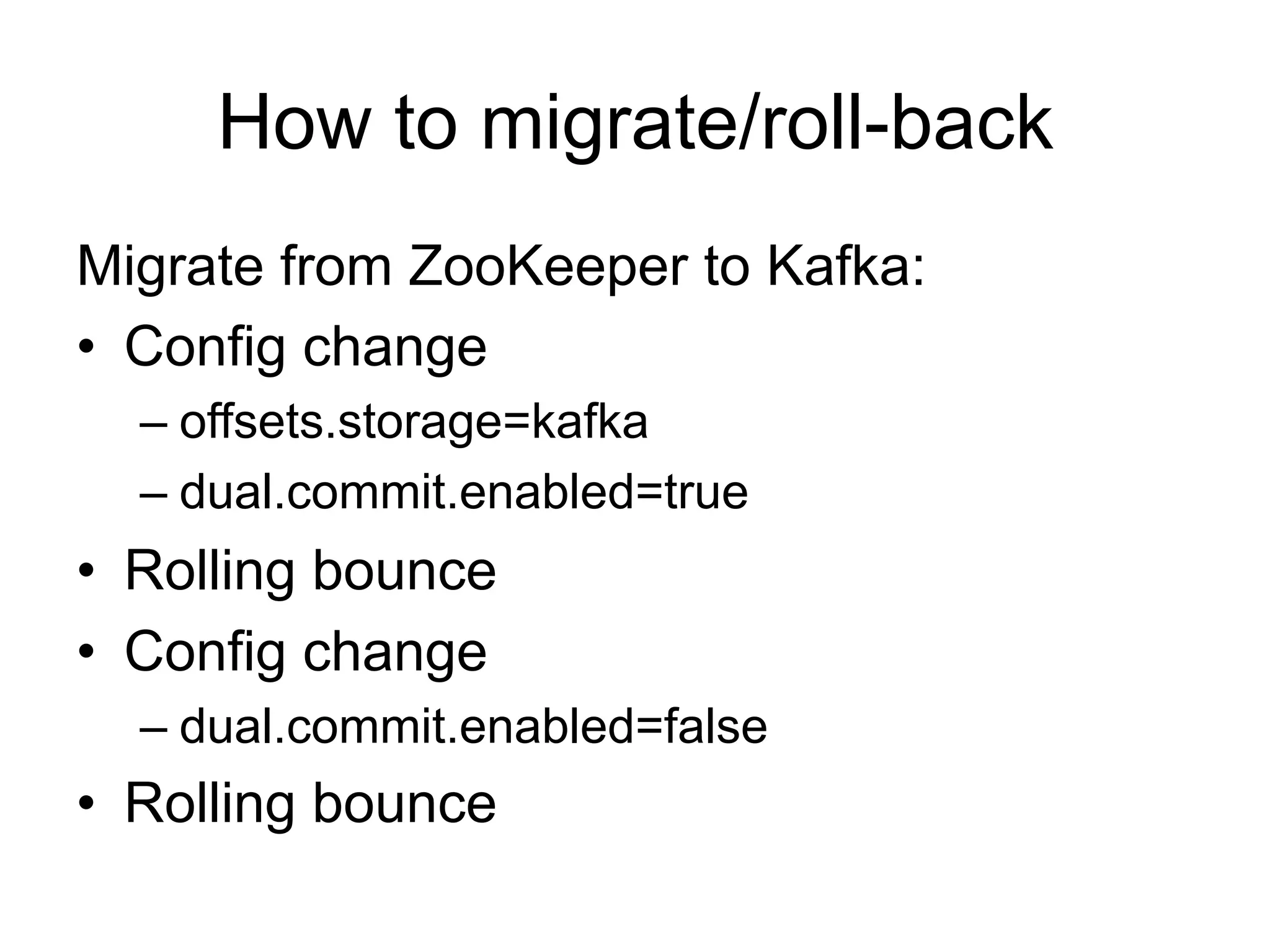

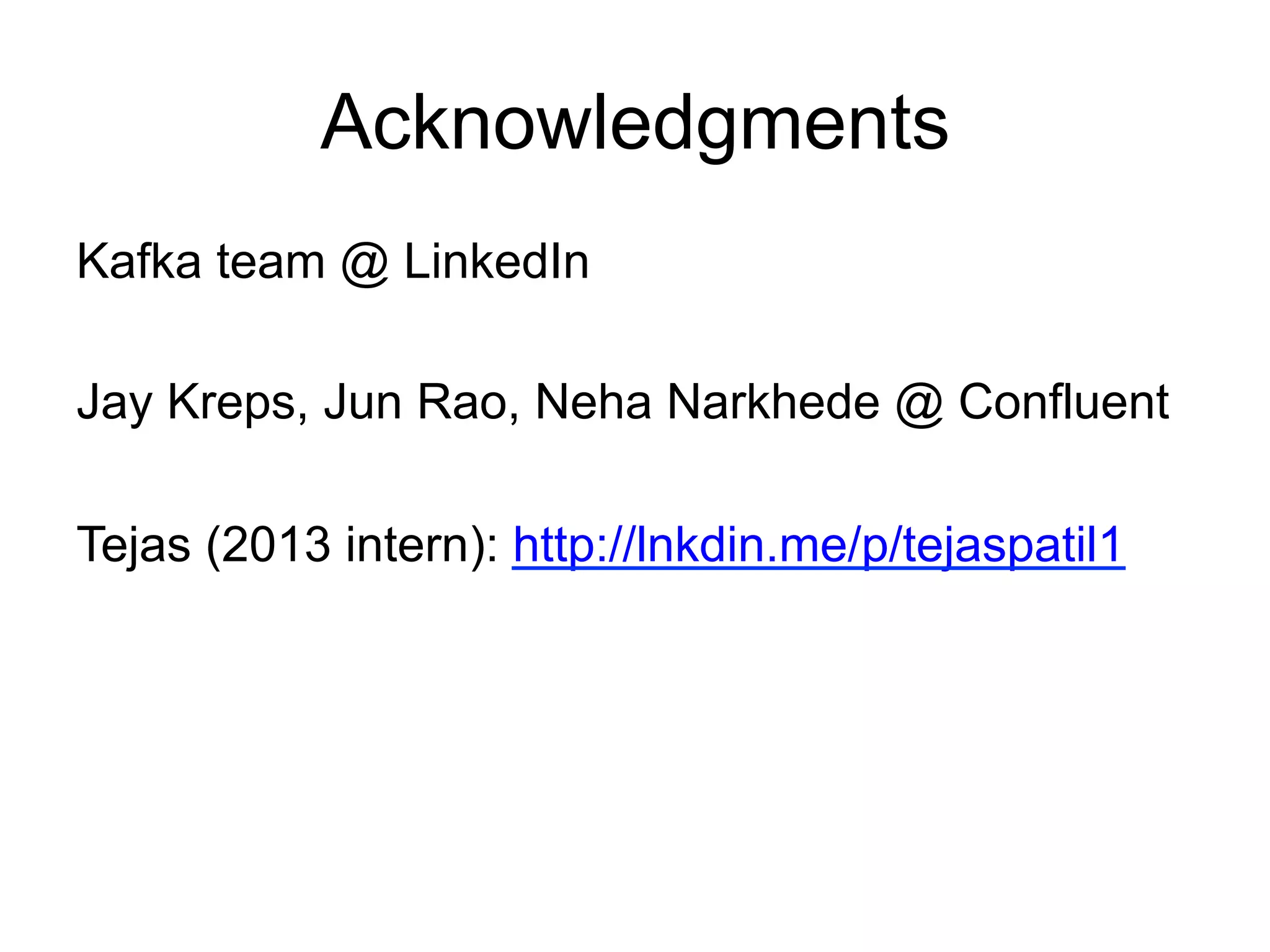

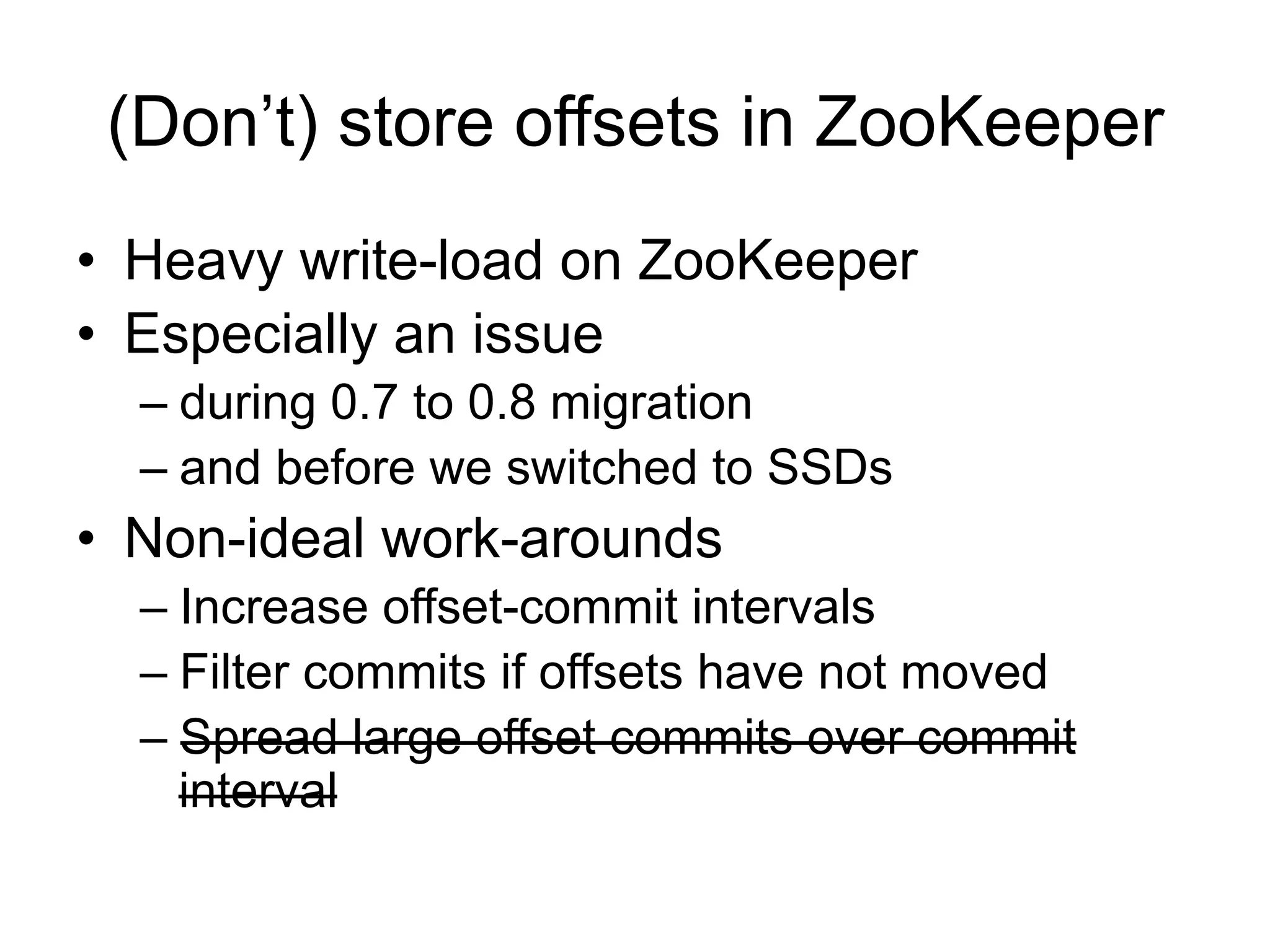

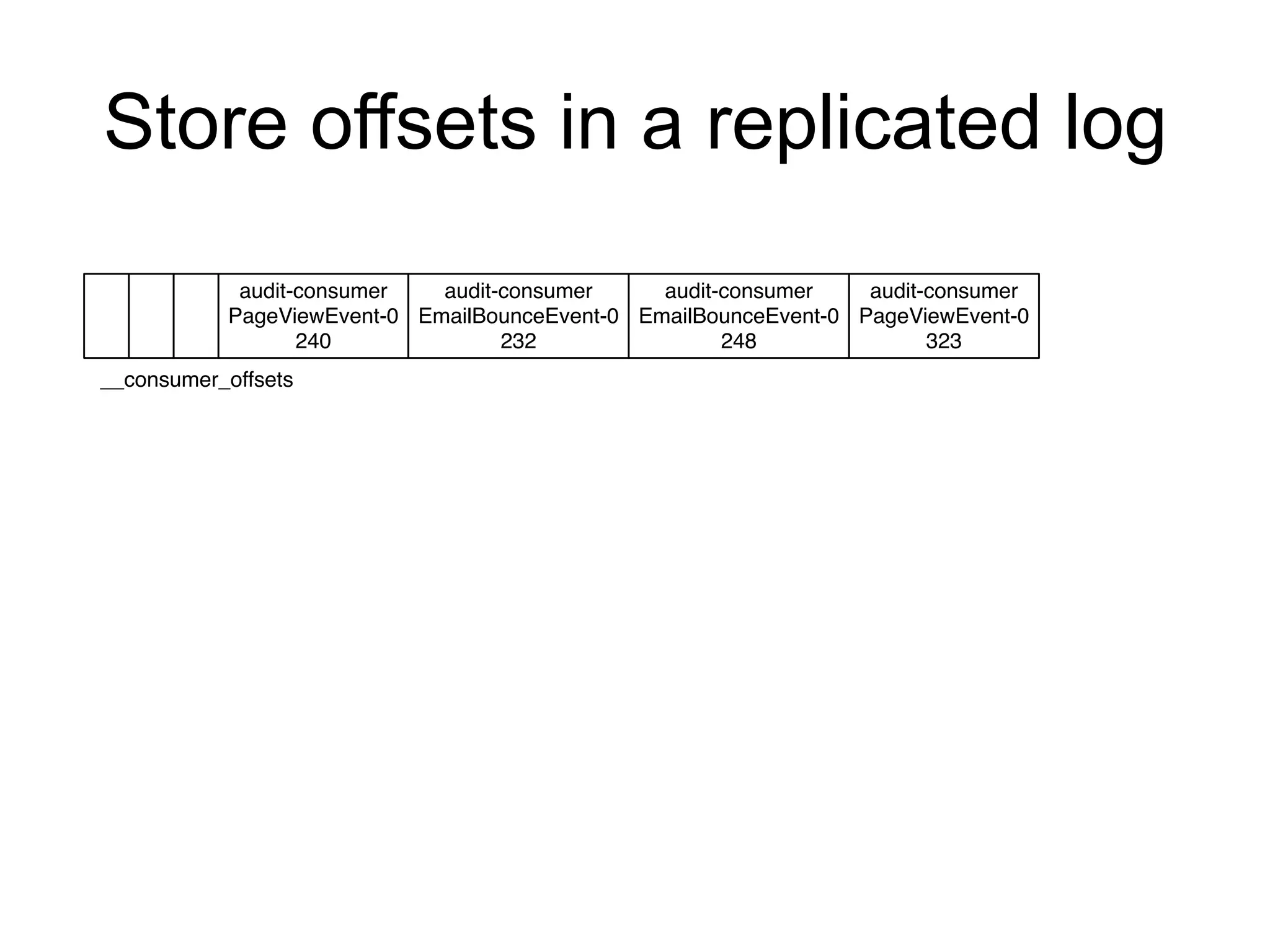

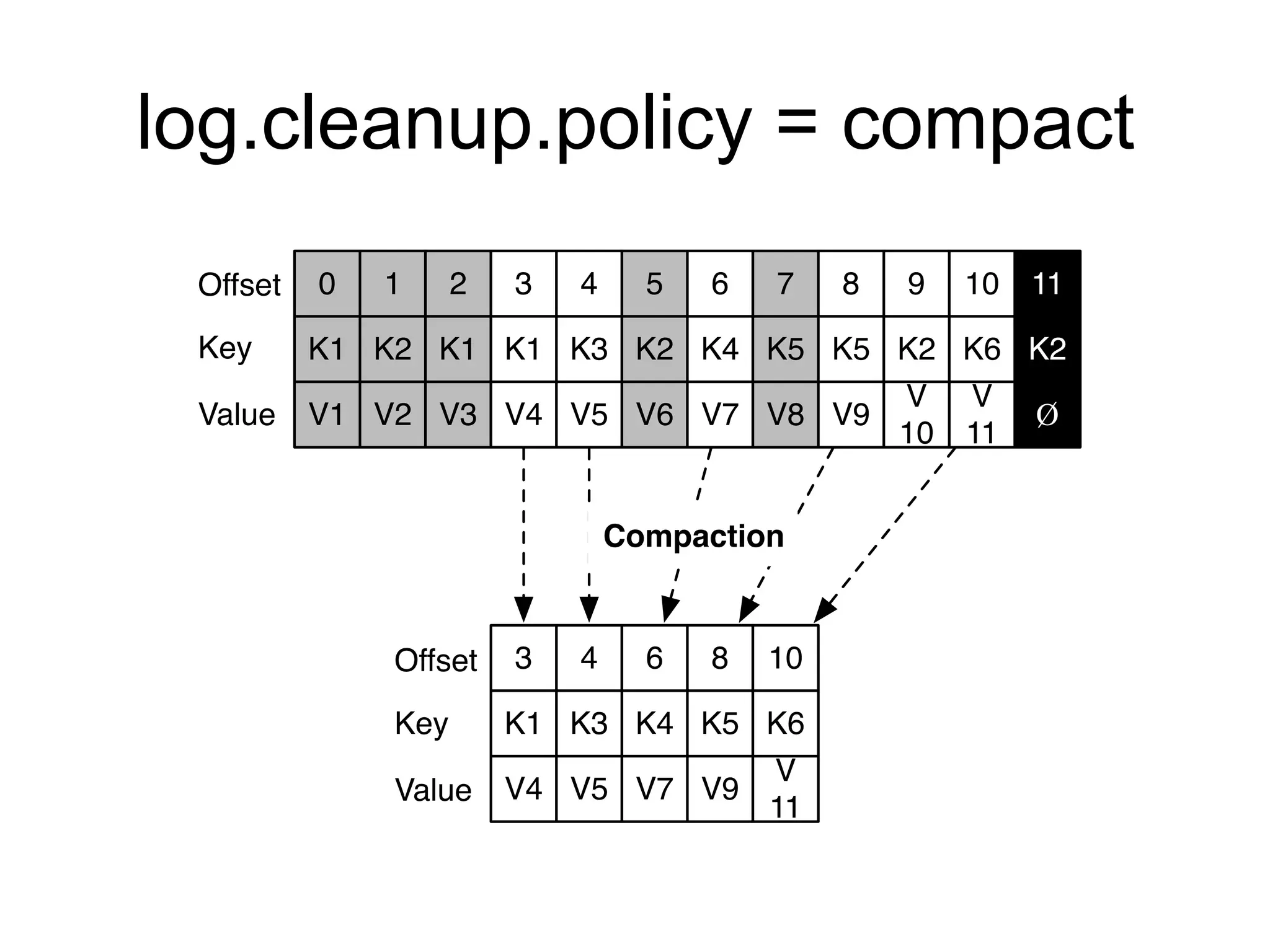

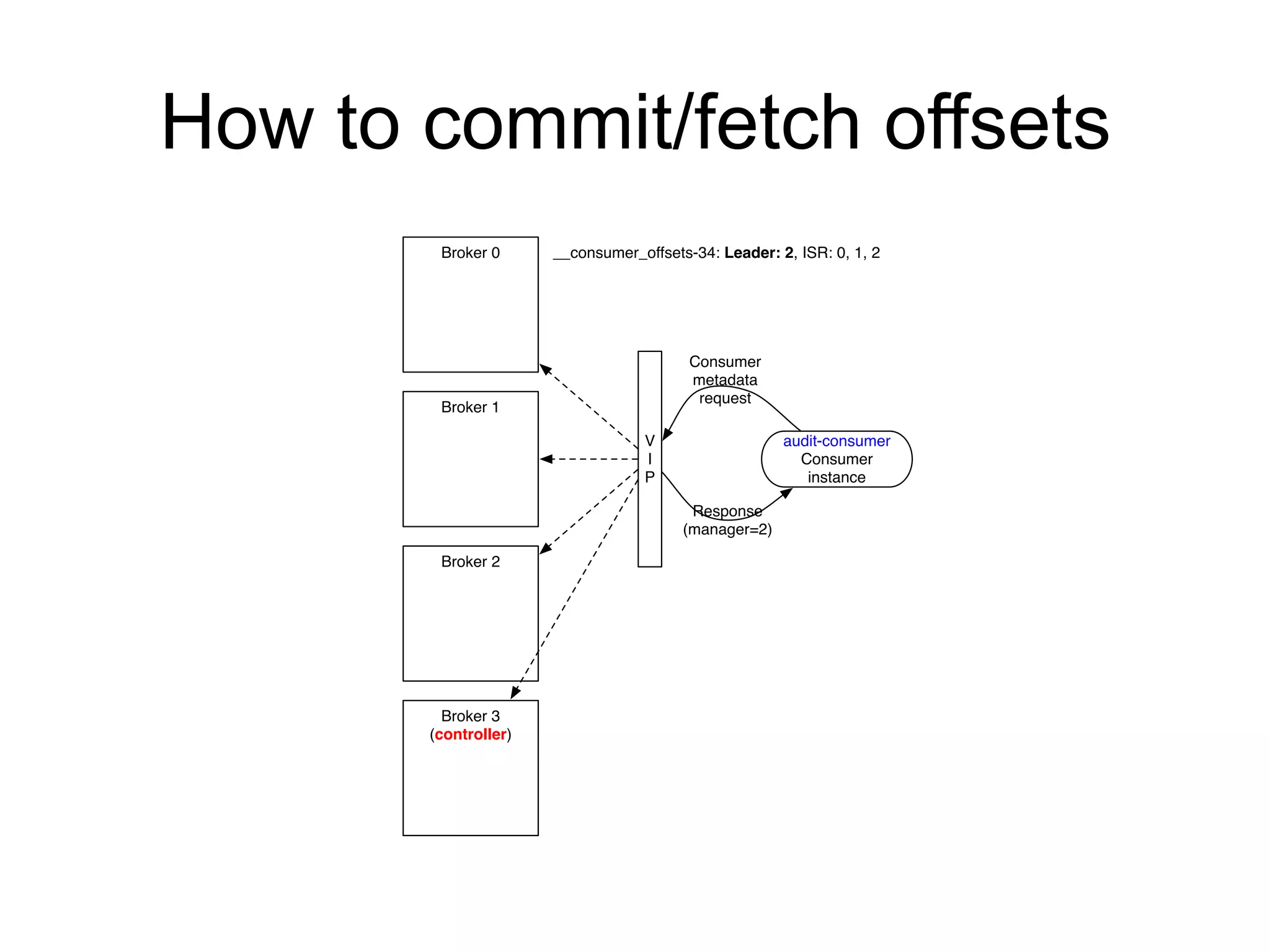

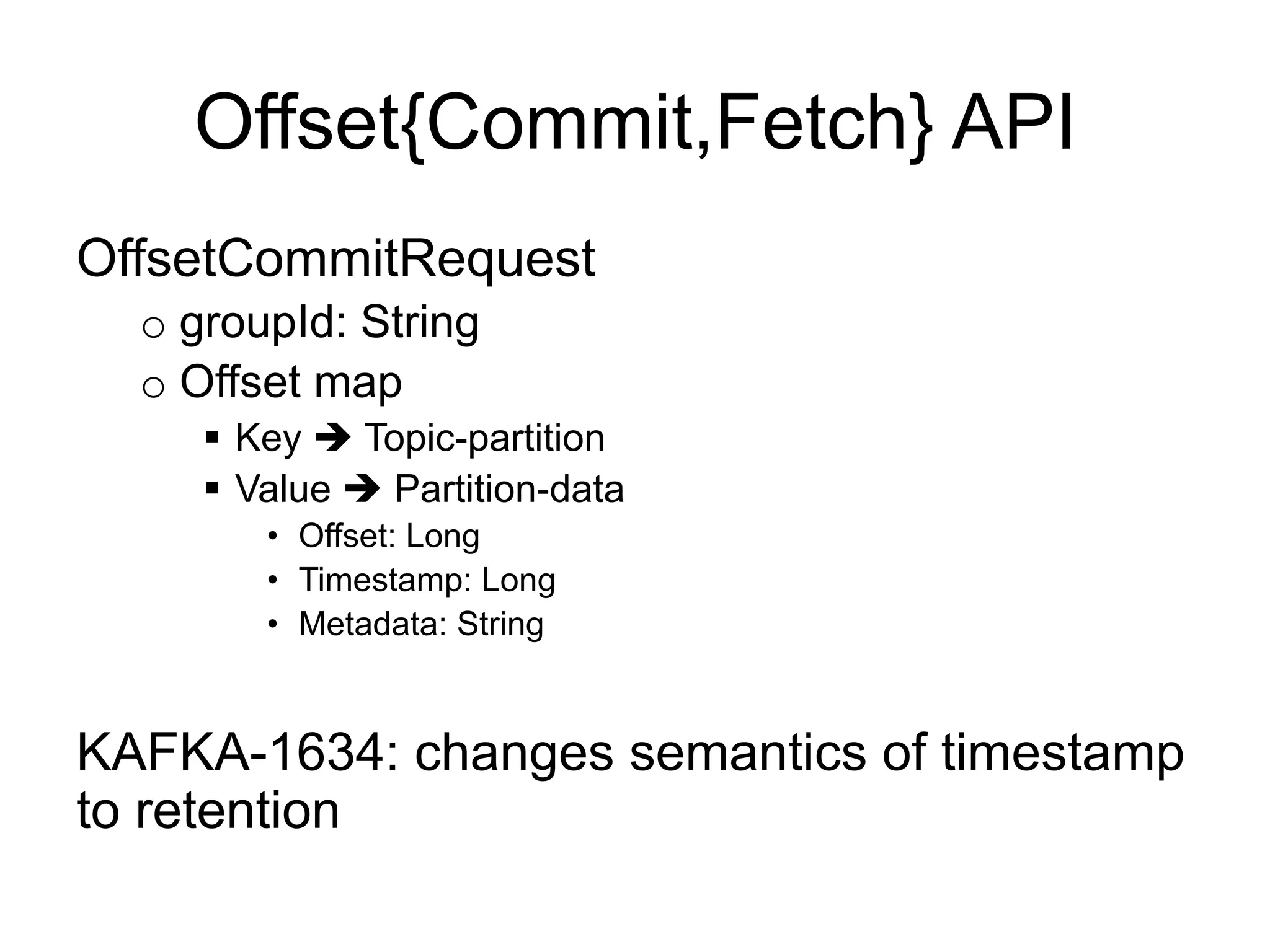

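![Store offsets in a replicated, partitioned log](https://image.slidesharecdn.com/offsetmanagement-150324213320-conversion-gate01/75/Consumer-offset-management-in-Kafka-12-2048.jpg)

Log entries, in append order:

| Offsets topic partition | Group | Topic-partition | Offset |
|---|---|---|---|
| `__consumer_offsets`, partition 3 | audit-consumer | PageViewEvent-0 | 240 |
| `__consumer_offsets`, partition 3 | audit-consumer | EmailBounceEvent-0 | 232 |
| `__consumer_offsets`, partition 3 | audit-consumer | EmailBounceEvent-0 | 248 |
| `__consumer_offsets`, partition 3 | audit-consumer | PageViewEvent-0 | 323 |
| `__consumer_offsets`, partition 8 | mirrormaker | ClickEvent-0 | 54543 |
| `__consumer_offsets`, partition 8 | mirrormaker | ClickEvent-1 | 54444 |
| `__consumer_offsets`, partition 8 | mirrormaker | ClickEvent-1 | 54674 |

Offsets cache:

| Key | Latest offset |
|---|---|
| [audit-consumer, PageViewEvent-0] | 323 |
| [audit-consumer, EmailBounceEvent-0] | 248 |
| [mirrormaker, ClickEvent-0] | 54543 |
| [mirrormaker, ClickEvent-1] | 54674 |

Offset commits append to the offsets topic partition and update the cache.

Offset fetches read from the offsets topic partition cache.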
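The commit and fetch paths on this slide can be sketched as a toy model. This is only an illustration under stated assumptions: `OffsetsPartition`, `commit`, and `fetch` are hypothetical names, not Kafka APIs, and replication (which the slide title mentions) is left out entirely.

```python
from typing import Dict, List, Optional, Tuple

# (group, "topic-partition"), mirroring the keys drawn on the slide.
Key = Tuple[str, str]


class OffsetsPartition:
    """Toy model of one offsets topic partition plus its in-memory cache.

    Hypothetical class for illustration; not part of any Kafka client or broker API.
    """

    def __init__(self) -> None:
        self.log: List[Tuple[Key, int]] = []  # append-only record of every commit
        self.cache: Dict[Key, int] = {}       # latest committed offset per key

    def commit(self, group: str, topic_partition: str, offset: int) -> None:
        key = (group, topic_partition)
        self.log.append((key, offset))  # append to the offsets topic partition
        self.cache[key] = offset        # then update the cache

    def fetch(self, group: str, topic_partition: str) -> Optional[int]:
        # Fetches are answered from the cache; the log is never scanned here.
        return self.cache.get((group, topic_partition))


partition = OffsetsPartition()
partition.commit("mirrormaker", "ClickEvent-0", 54543)
partition.commit("mirrormaker", "ClickEvent-1", 54444)
partition.commit("mirrormaker", "ClickEvent-1", 54674)

print(partition.fetch("mirrormaker", "ClickEvent-1"))  # 54674
print(len(partition.log))                              # 3
```

The log keeps all three commits while the cache holds only the newest value per key, which is why the log grows without bound unless older entries are cleaned up.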

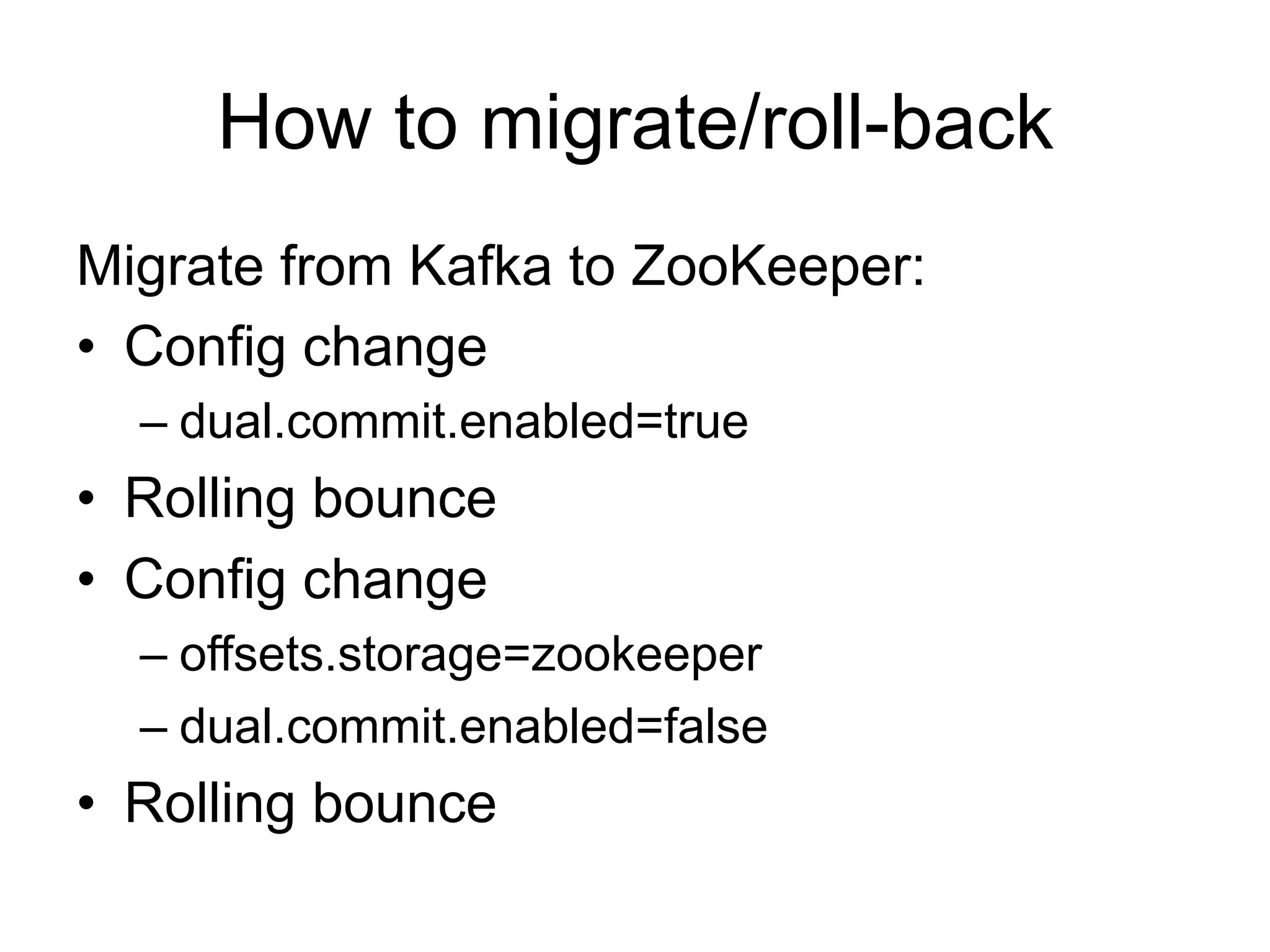

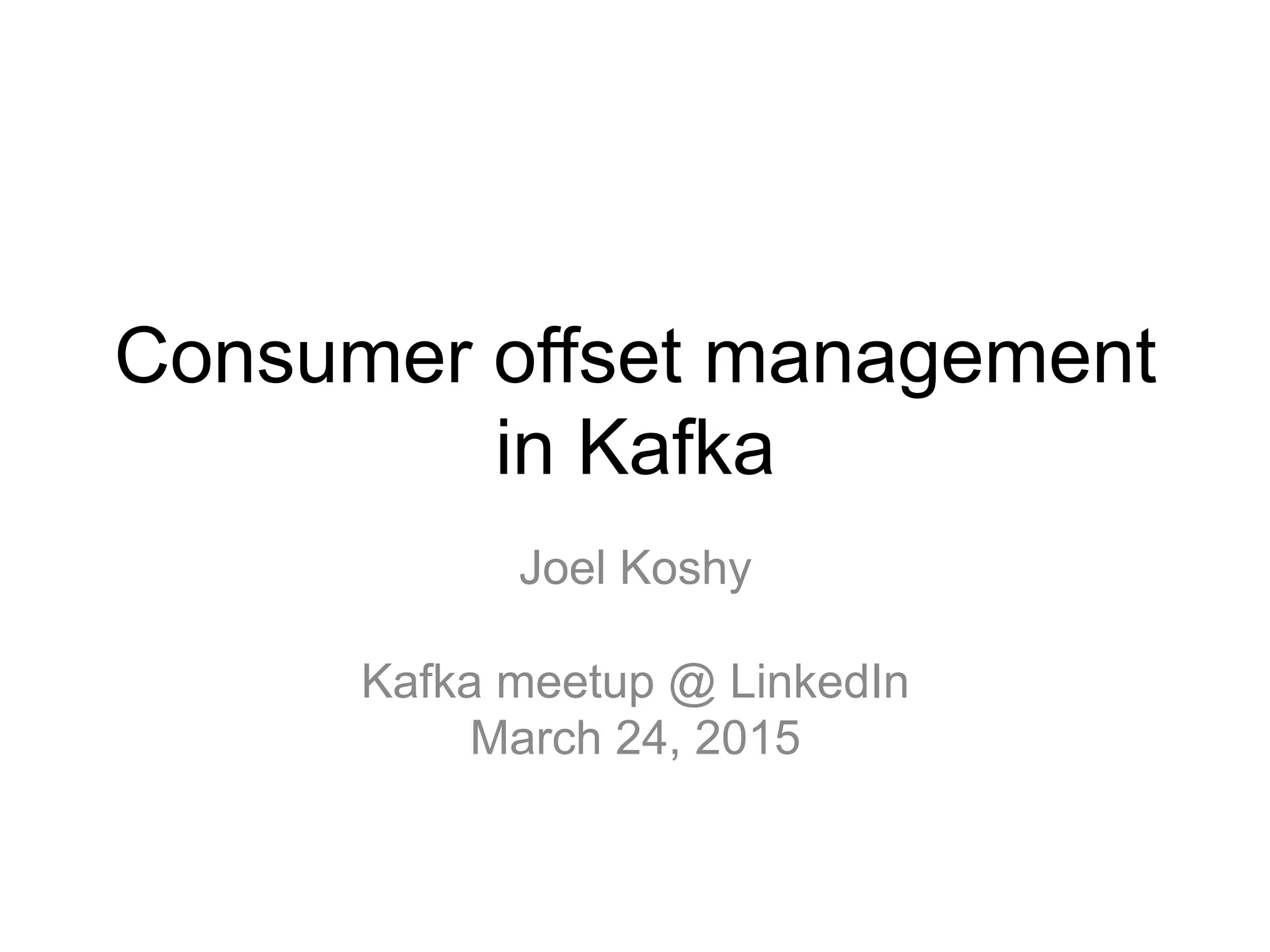

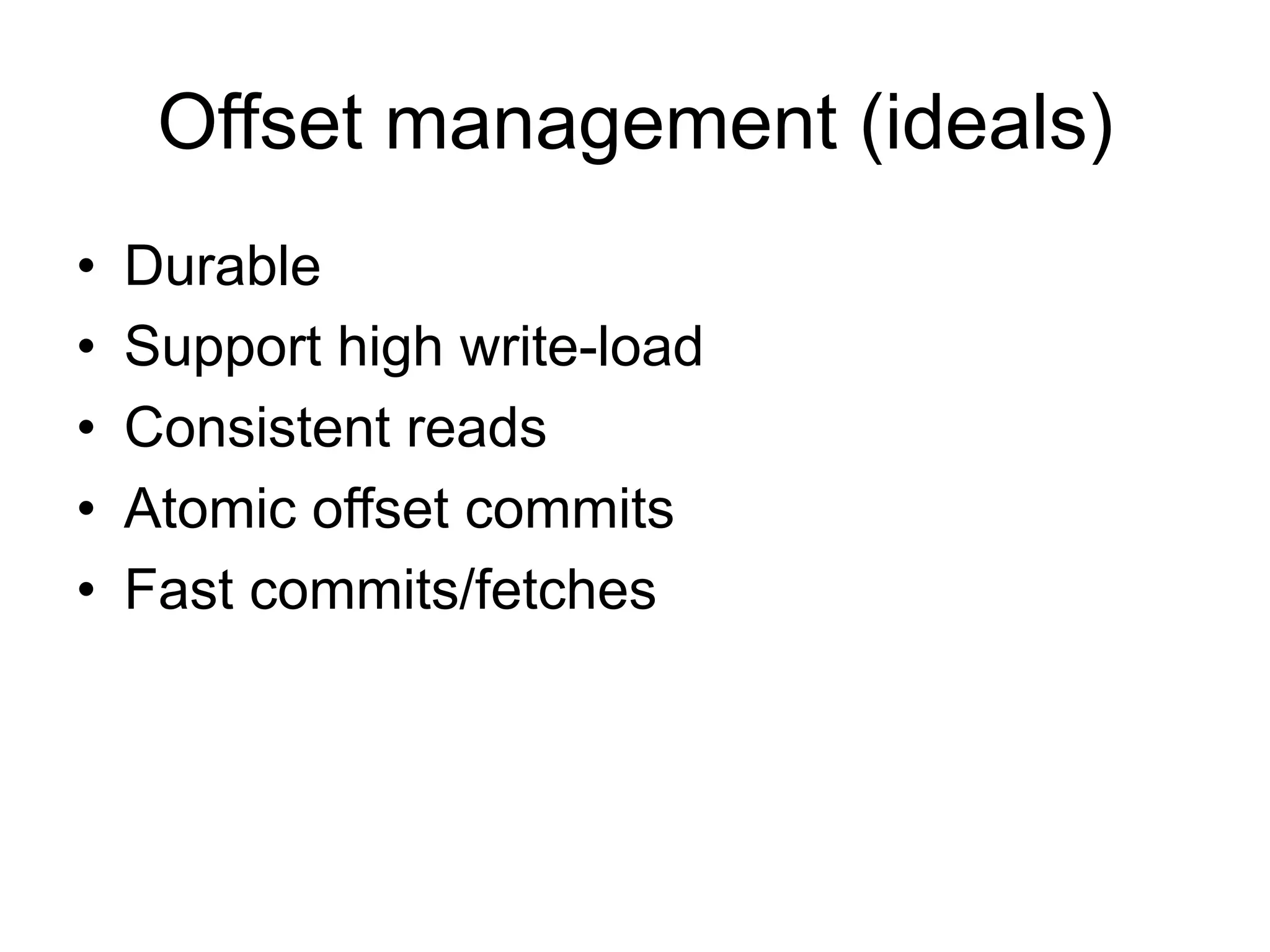

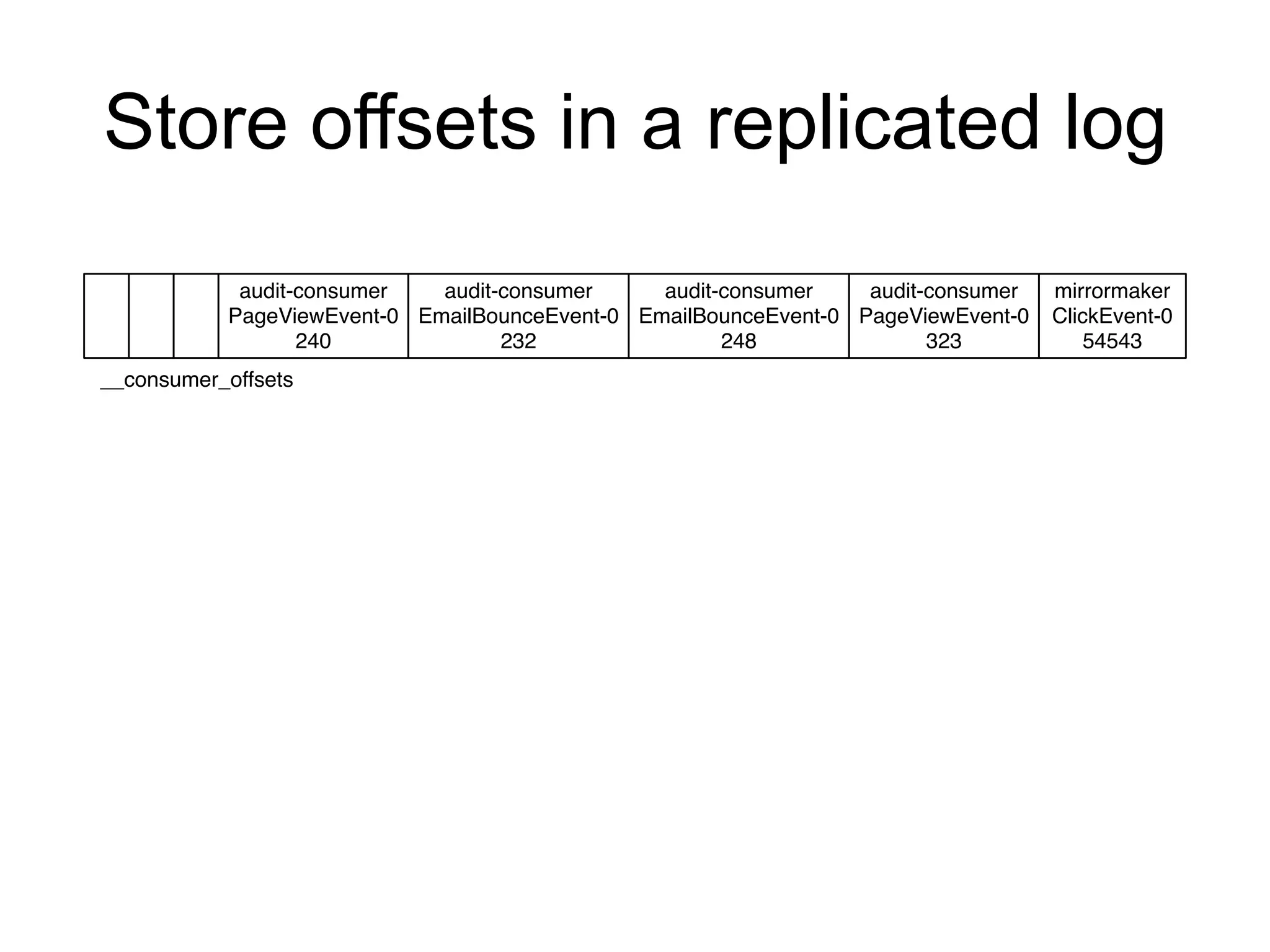

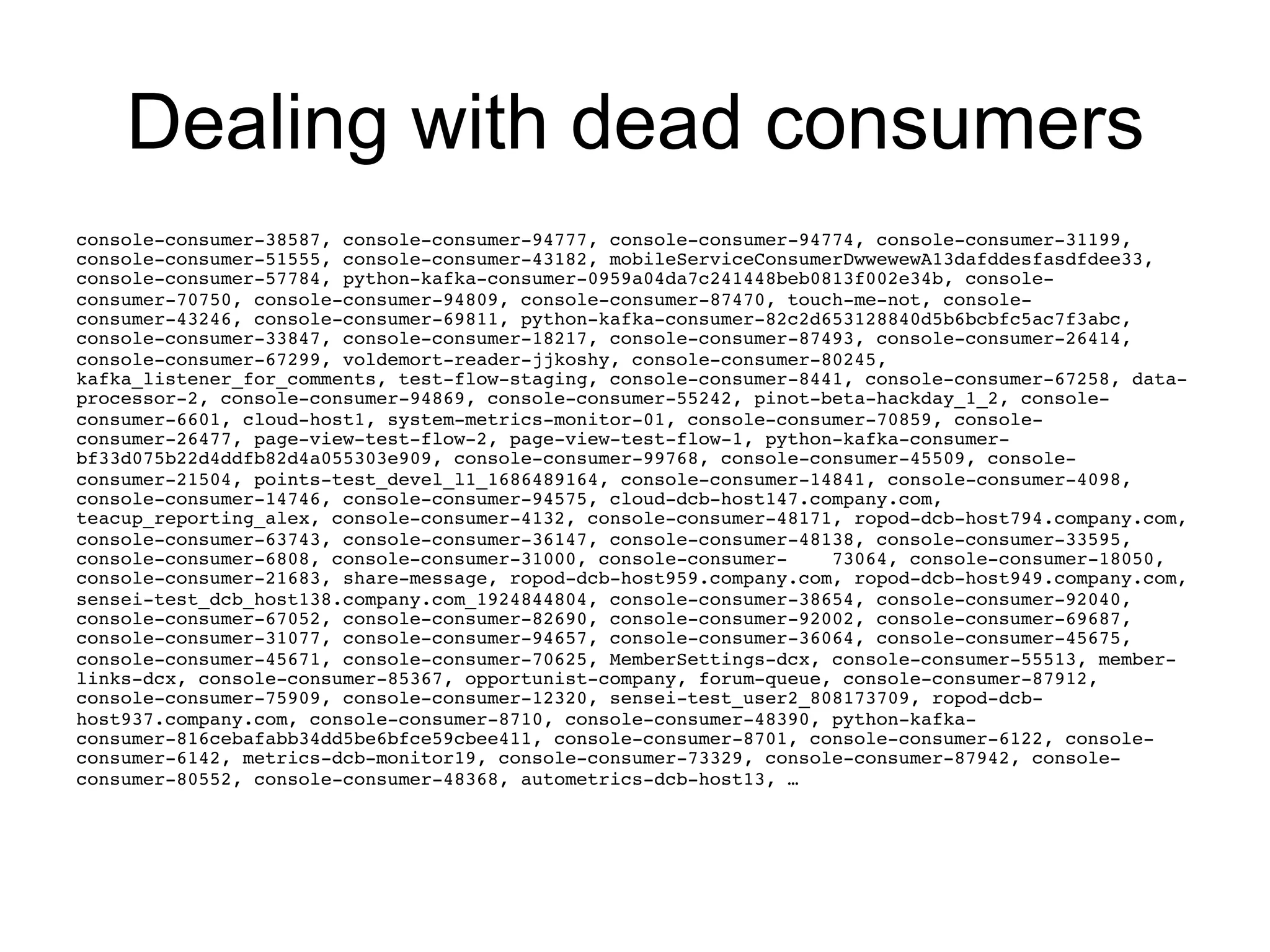

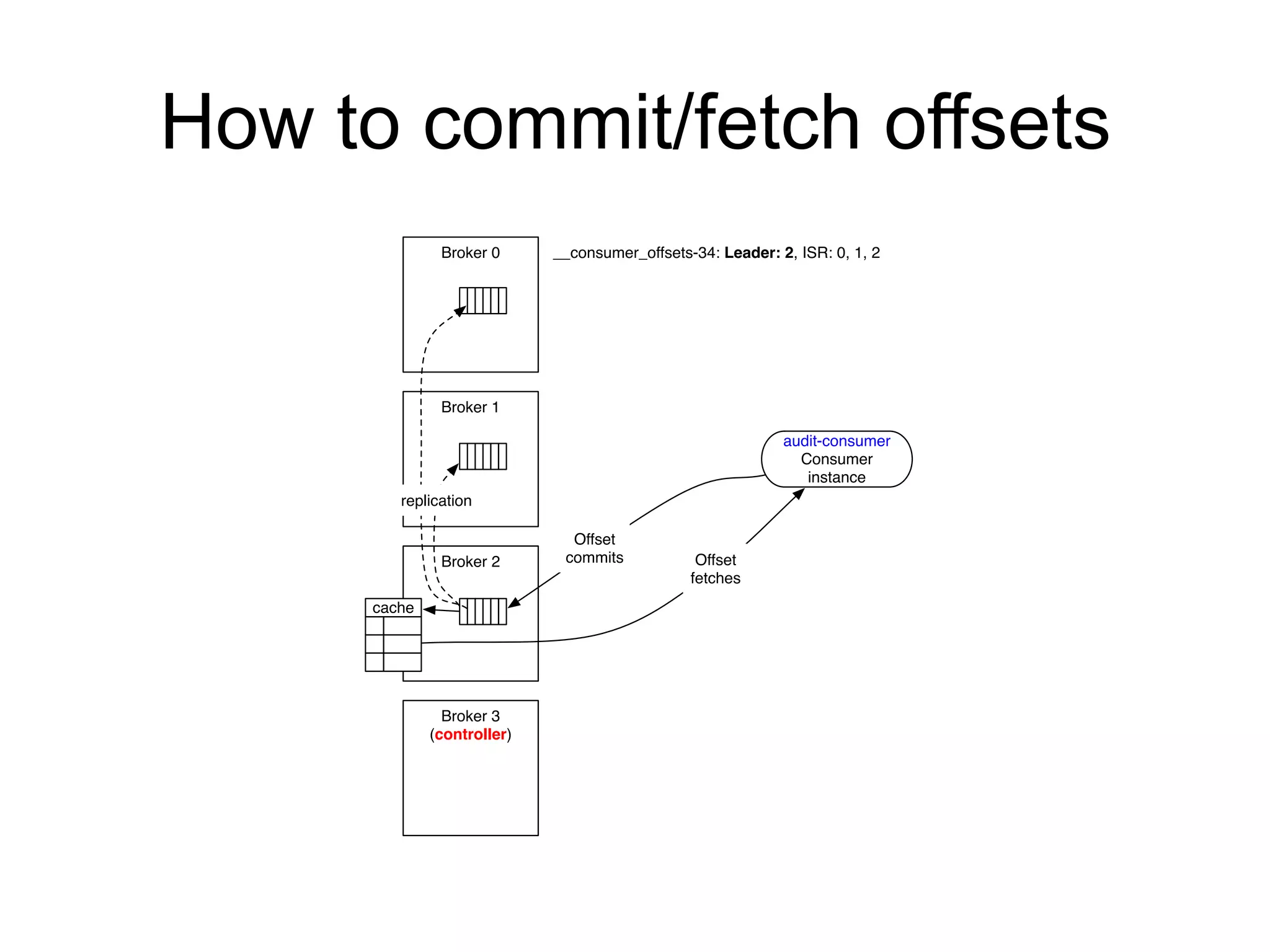

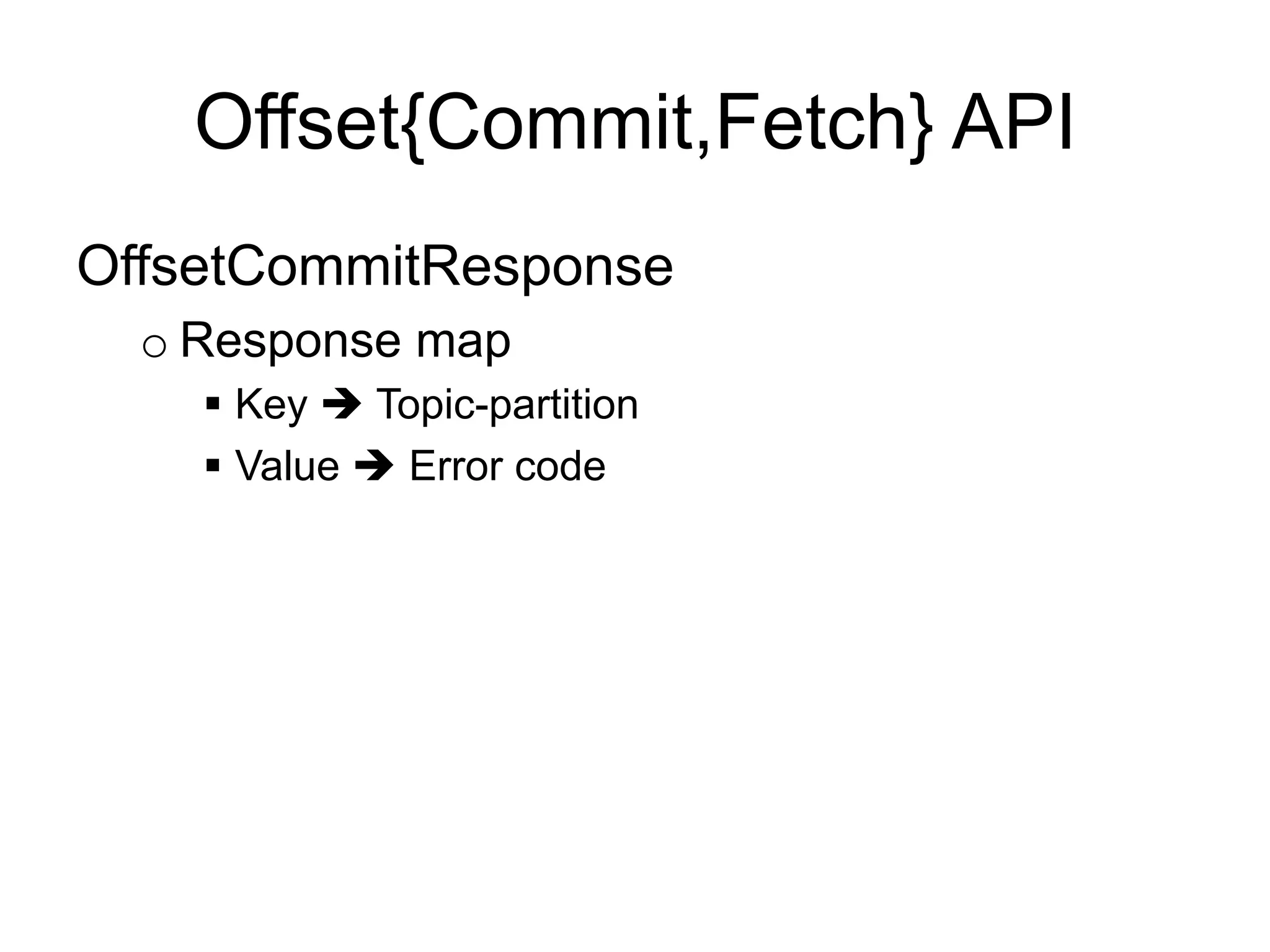

![Store offsets in a replicated, partitioned log: how do we GC older offset entries?](https://image.slidesharecdn.com/offsetmanagement-150324213320-conversion-gate01/75/Consumer-offset-management-in-Kafka-13-2048.jpg)

The same log and offsets cache as on the previous slide, with one added question: how do we GC older offset entries?

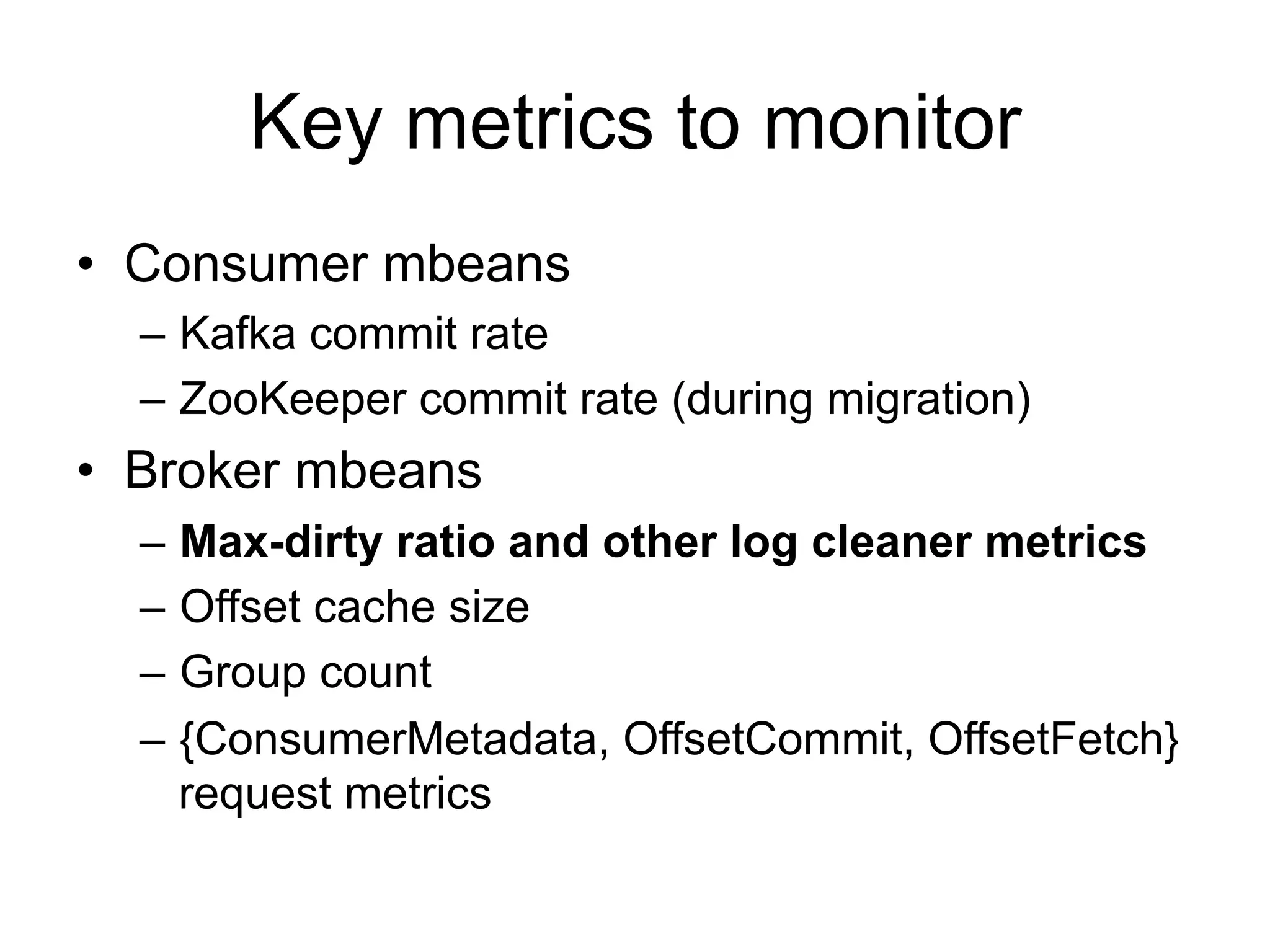

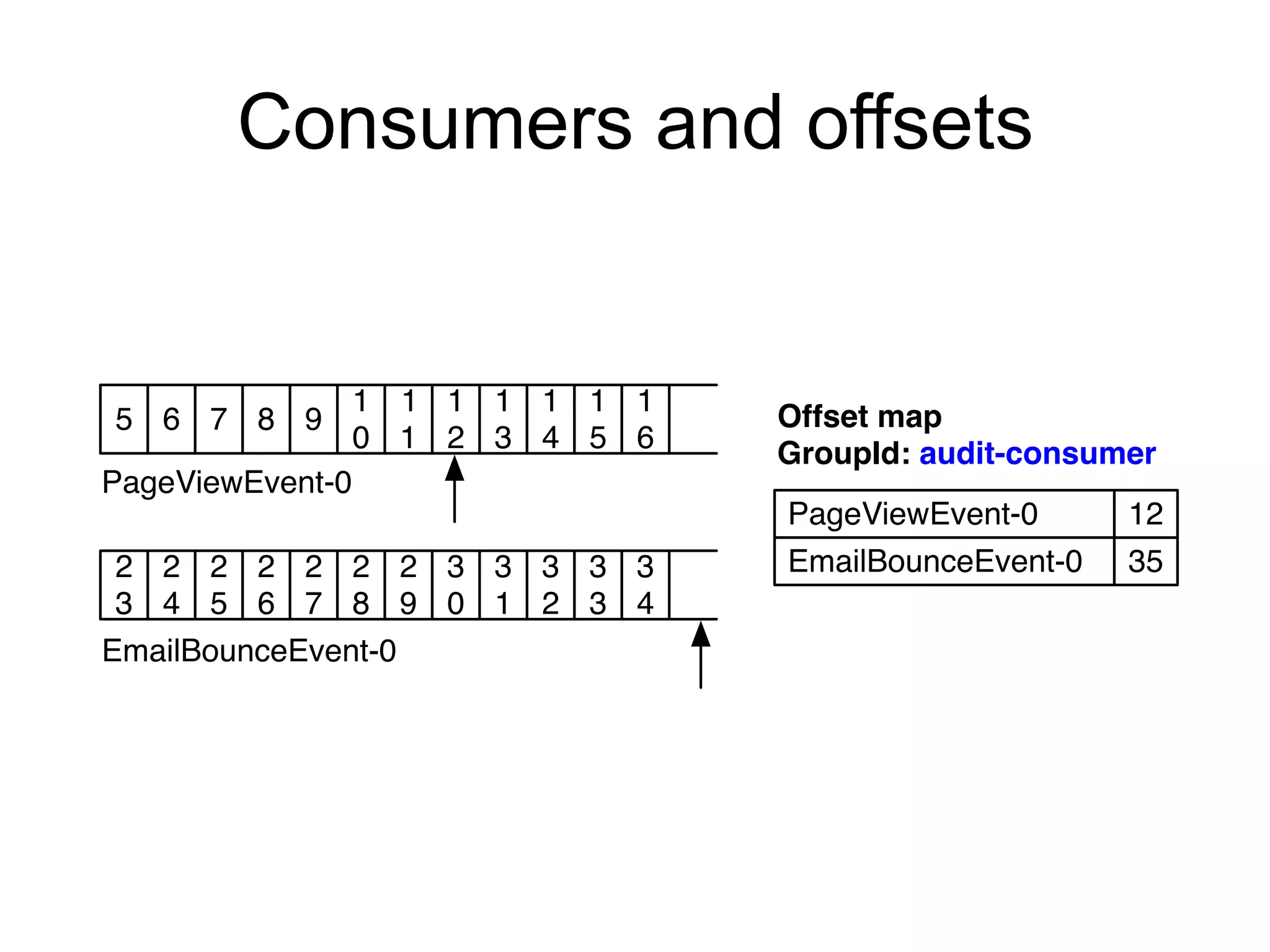

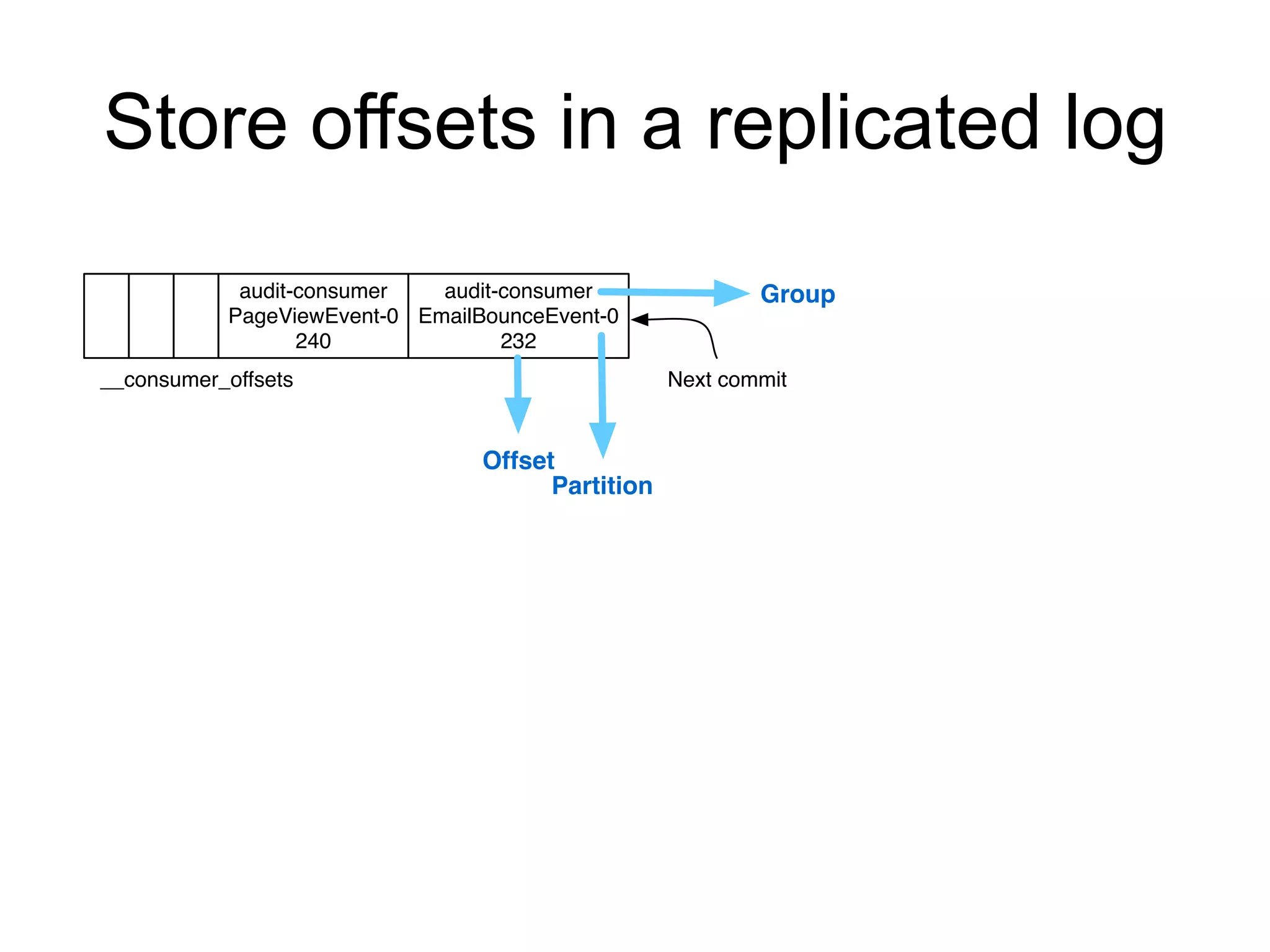

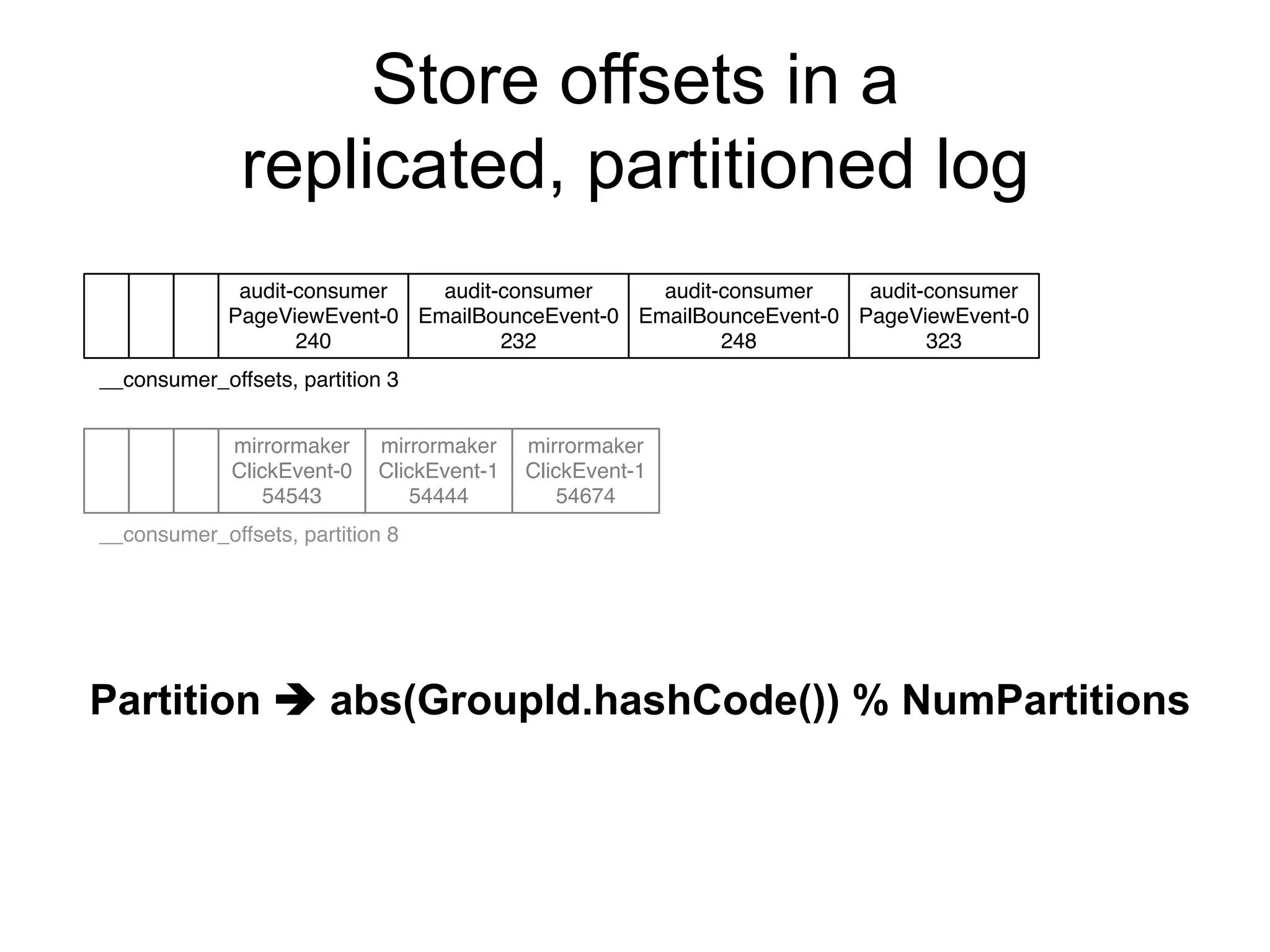

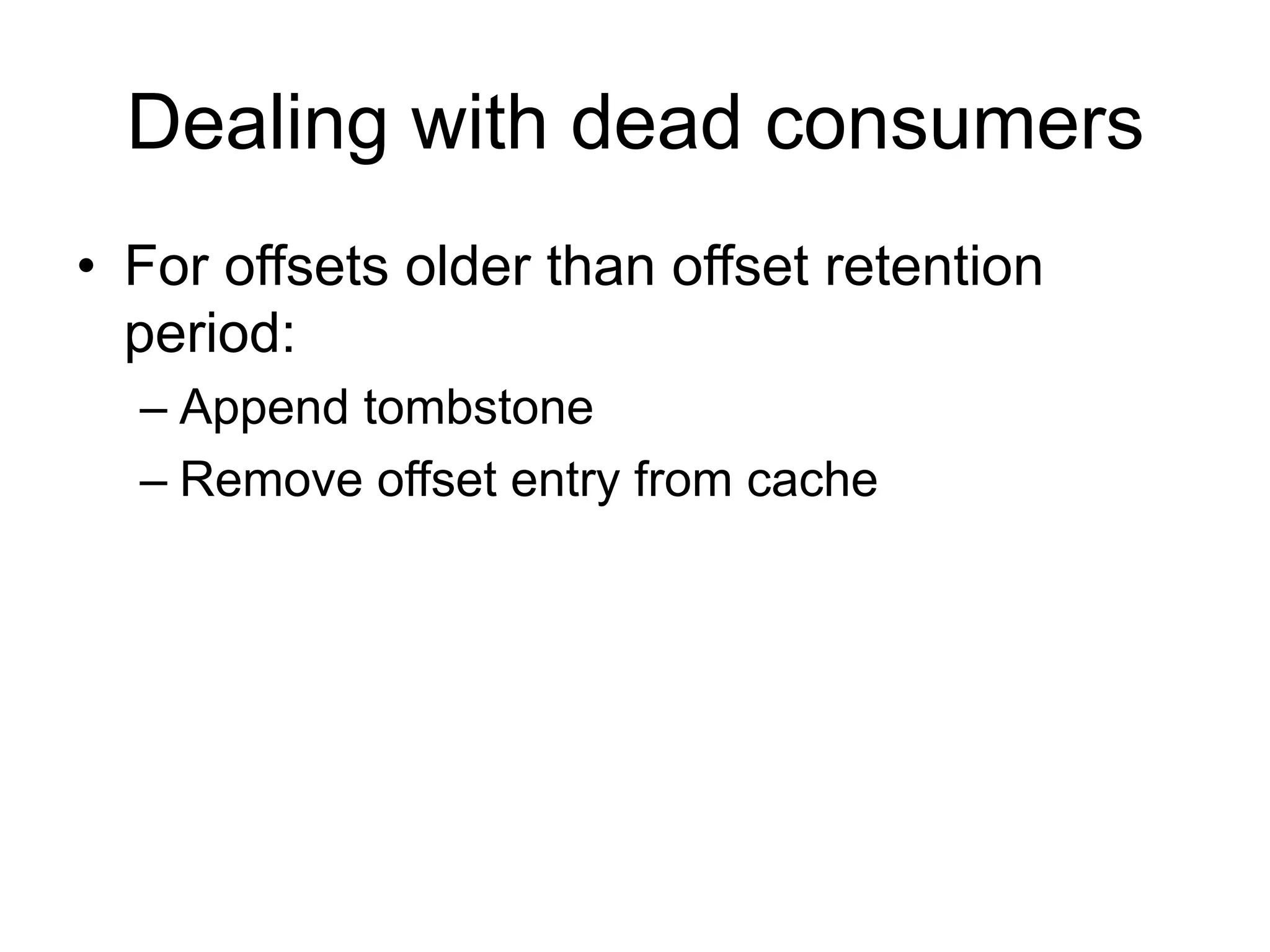

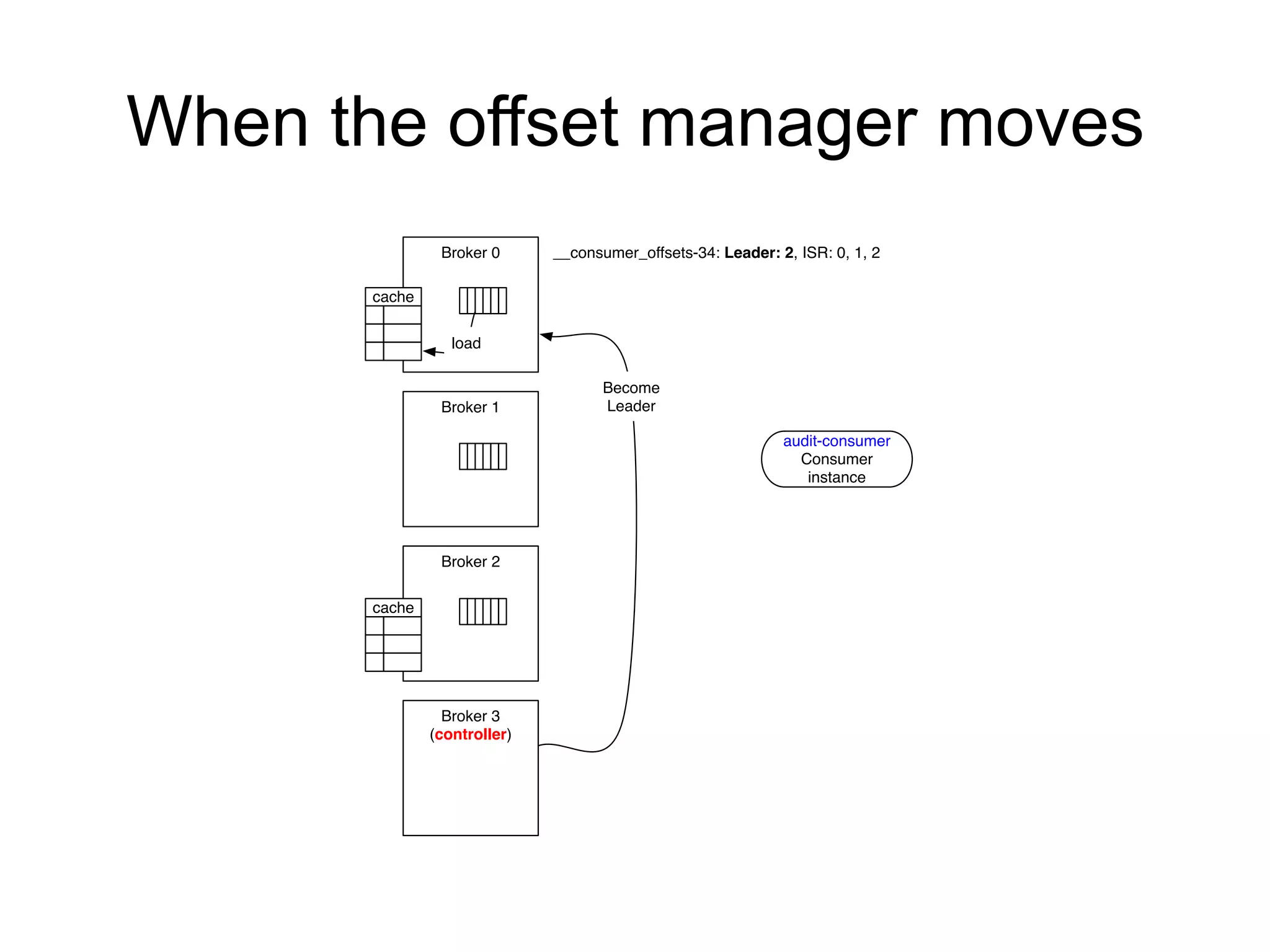

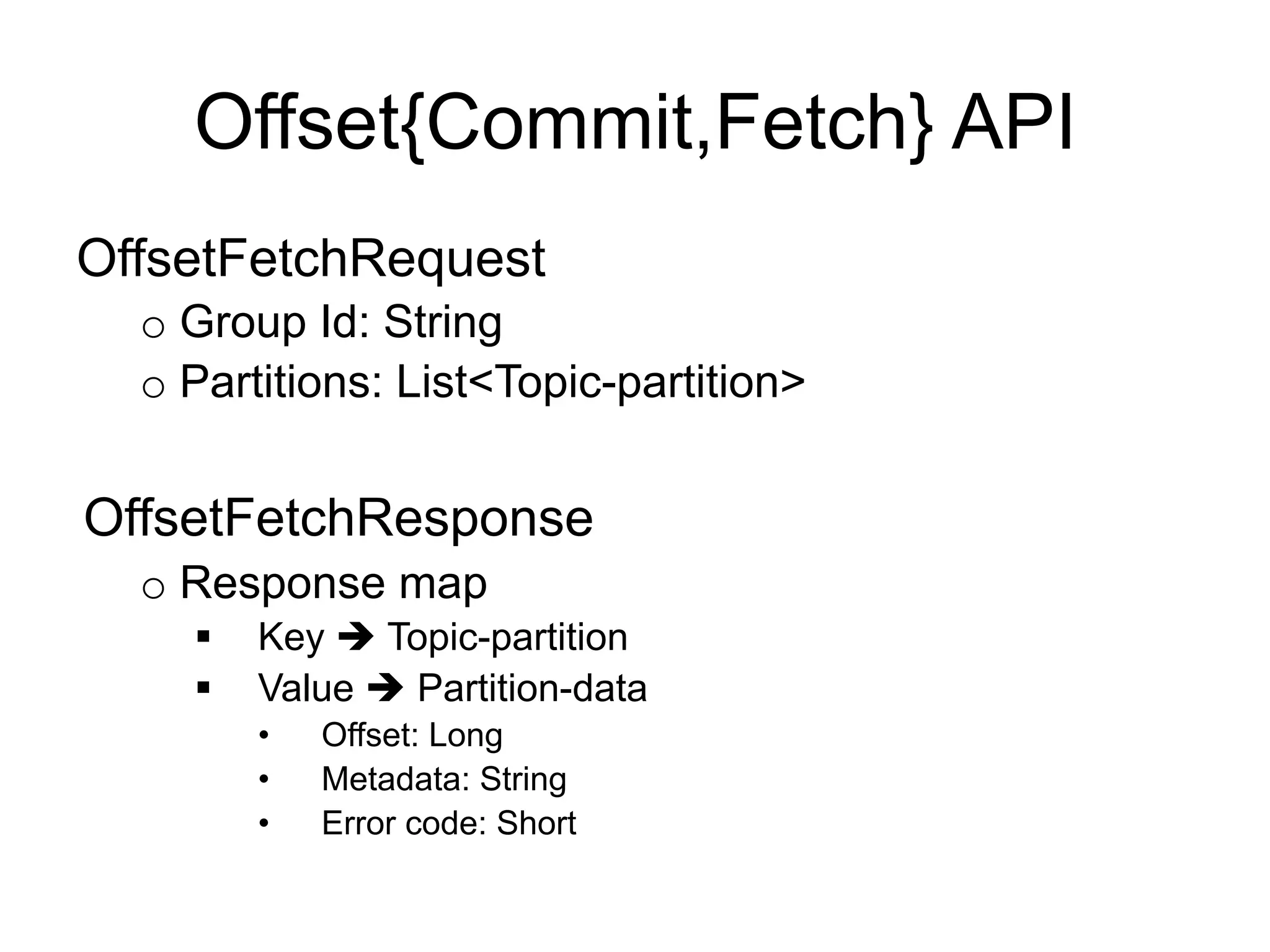

![Store offsets in a replicated, partitioned, compacted log](https://image.slidesharecdn.com/offsetmanagement-150324213320-conversion-gate01/75/Consumer-offset-management-in-Kafka-15-2048.jpg)

Log before compaction:

| Key | Value |
|---|---|
| audit-consumer, PageViewEvent-0 | 126312342 |
| audit-consumer, EmailBounceEvent-0 | 59843 |
| audit-consumer, PageViewEvent-0 | 126319628 |
| audit-consumer, EmailBounceEvent-0 | 86243 |
| audit-consumer, PageViewEvent-0 | 126398102 |

Log after compaction:

| Key | Value |
|---|---|
| audit-consumer, EmailBounceEvent-0 | 86243 |
| audit-consumer, PageViewEvent-0 | 126398102 |

Key → [Group, Topic, Partition]

Value → Offset
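The effect of compaction on this slide can be reproduced with a short sketch that keeps only the newest record per key. It is a simplification: `compact` is a hypothetical helper that rewrites the whole log in one pass, whereas Kafka's log cleaner runs in the background on log segments.

```python
from typing import Dict, List, Tuple

Key = Tuple[str, str, int]   # (group, topic, partition)
Record = Tuple[Key, int]     # (key, committed offset)


def compact(log: List[Record]) -> List[Record]:
    """Keep only the most recent record for each key, preserving log order."""
    last_index: Dict[Key, int] = {}
    for i, (key, _) in enumerate(log):
        last_index[key] = i  # later records overwrite earlier positions
    return [rec for i, rec in enumerate(log) if last_index[rec[0]] == i]


# The five entries shown on the slide, in append order.
log = [
    (("audit-consumer", "PageViewEvent", 0), 126312342),
    (("audit-consumer", "EmailBounceEvent", 0), 59843),
    (("audit-consumer", "PageViewEvent", 0), 126319628),
    (("audit-consumer", "EmailBounceEvent", 0), 86243),
    (("audit-consumer", "PageViewEvent", 0), 126398102),
]

compacted = compact(log)
for key, offset in compacted:
    print(key, offset)
```

After compaction two records remain, one per key, carrying offsets 86243 and 126398102 as on the slide. Because the key is [Group, Topic, Partition], a consumer's latest committed position always survives.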

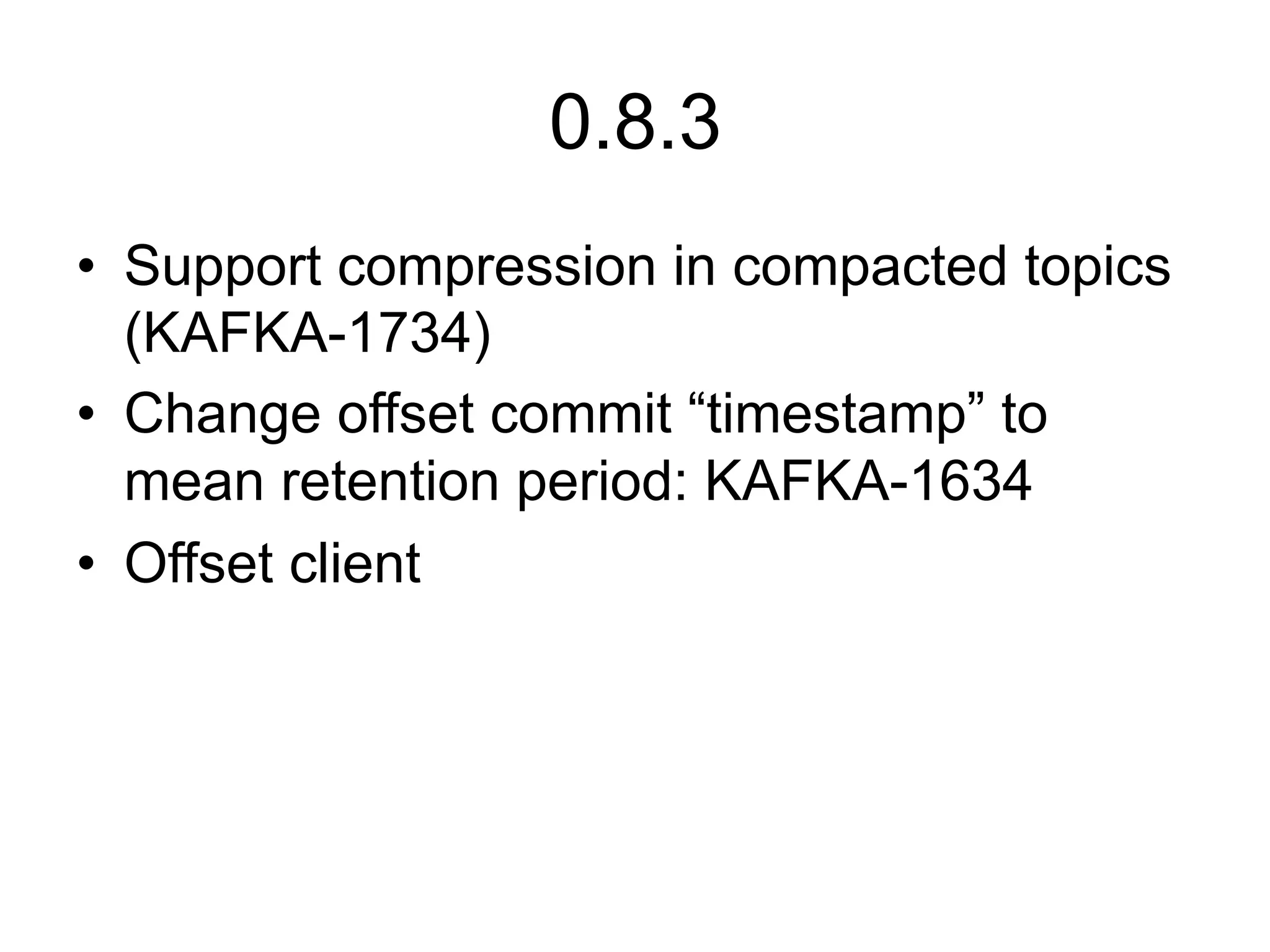

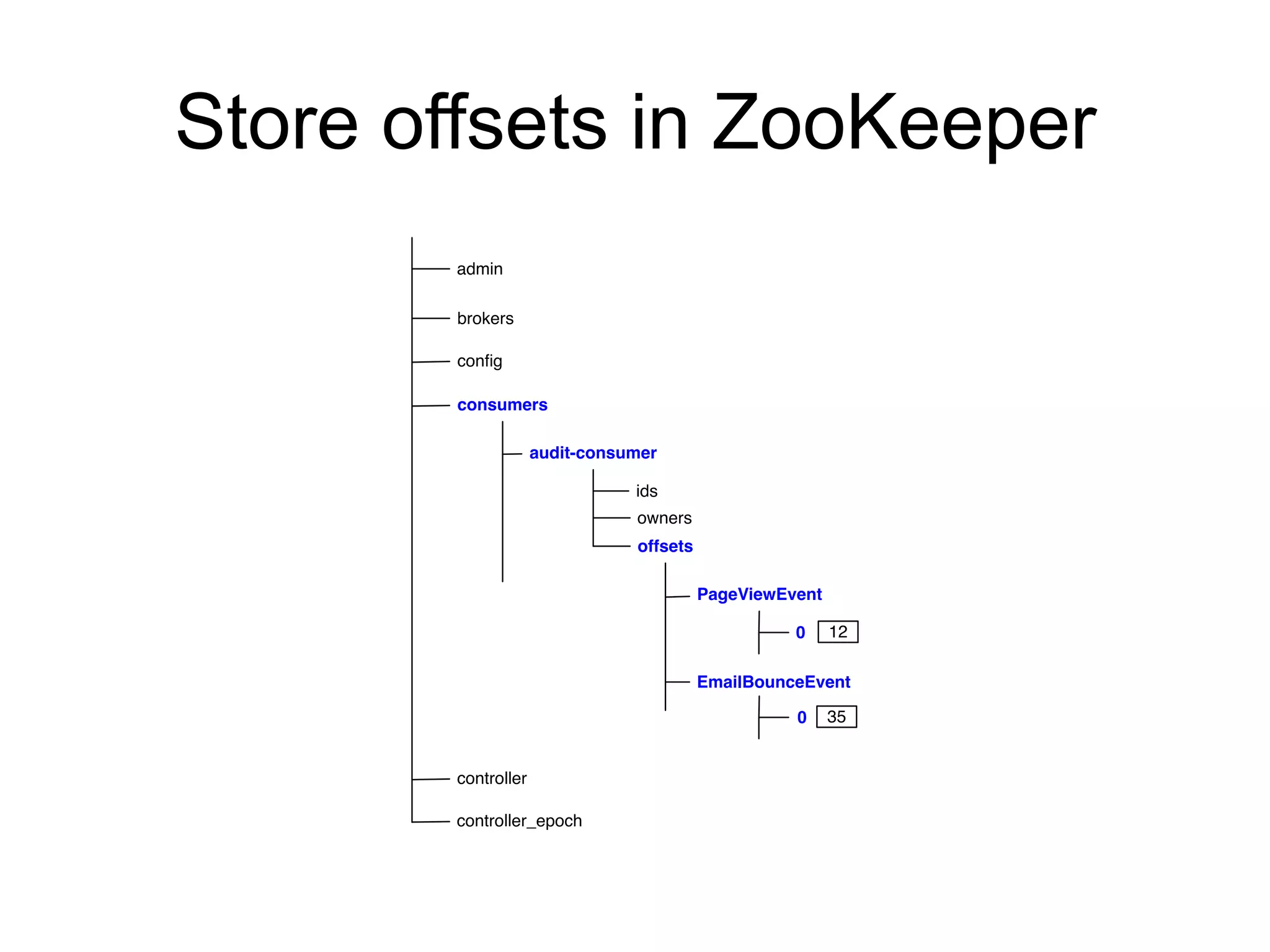

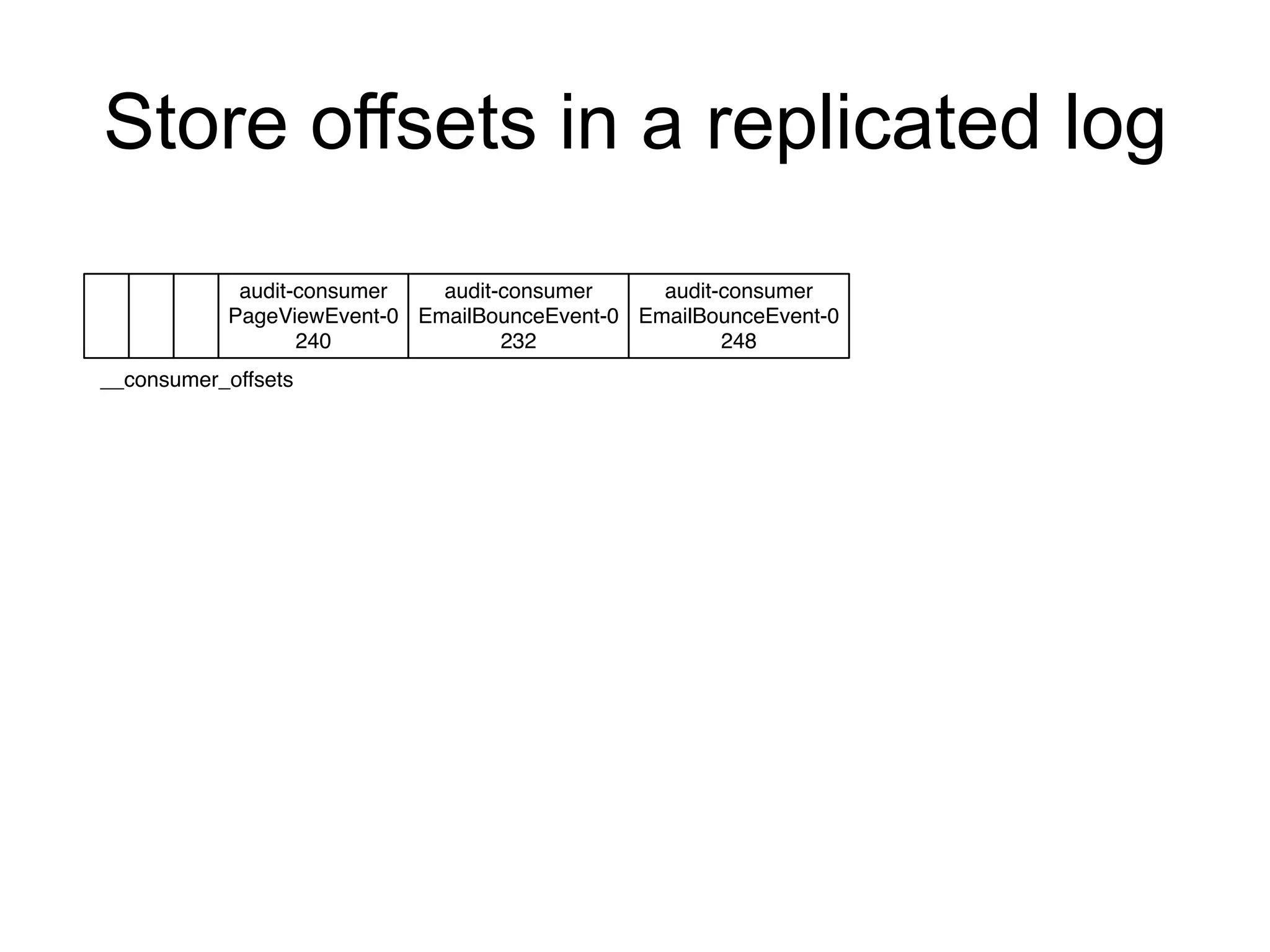

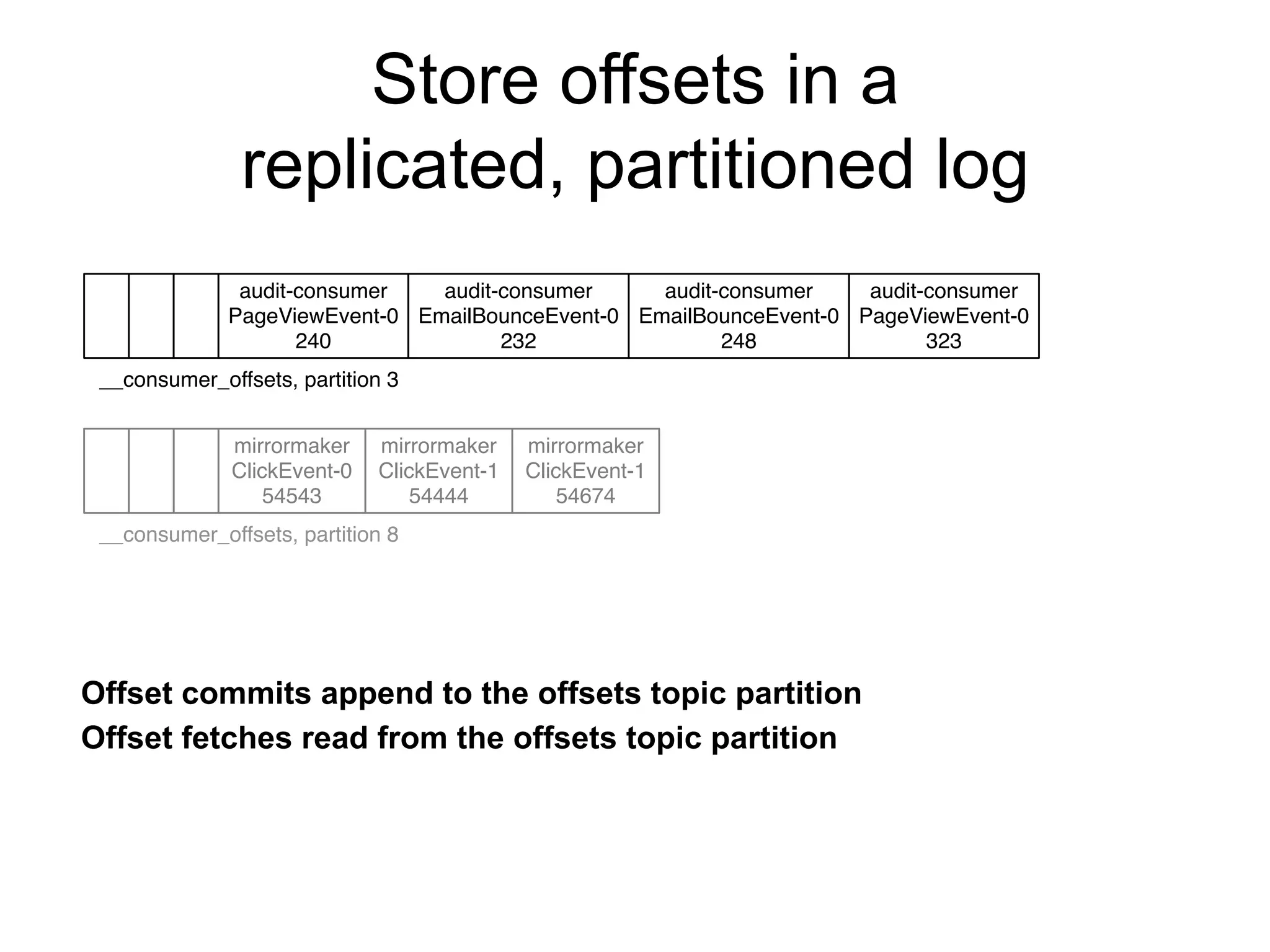

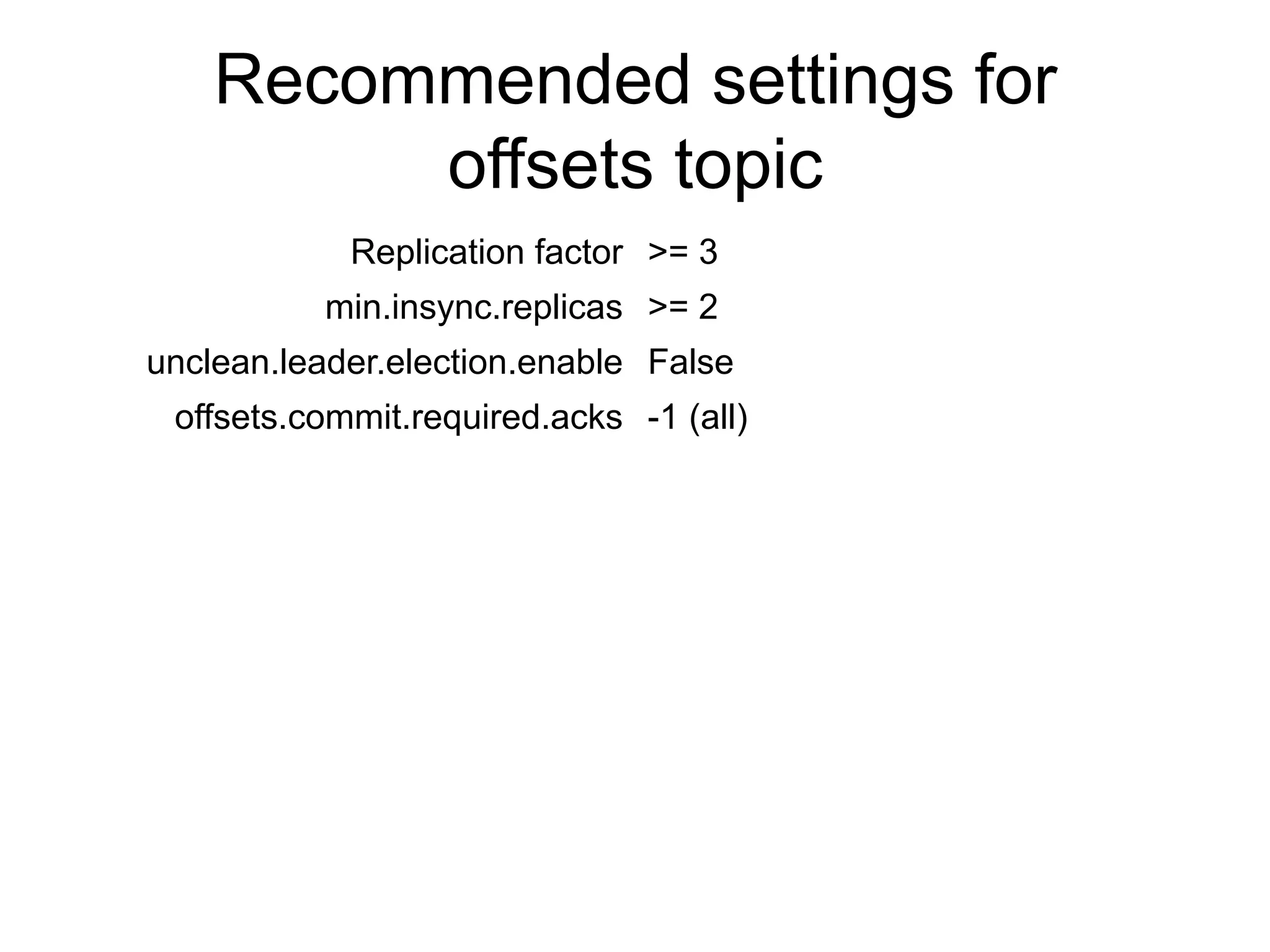

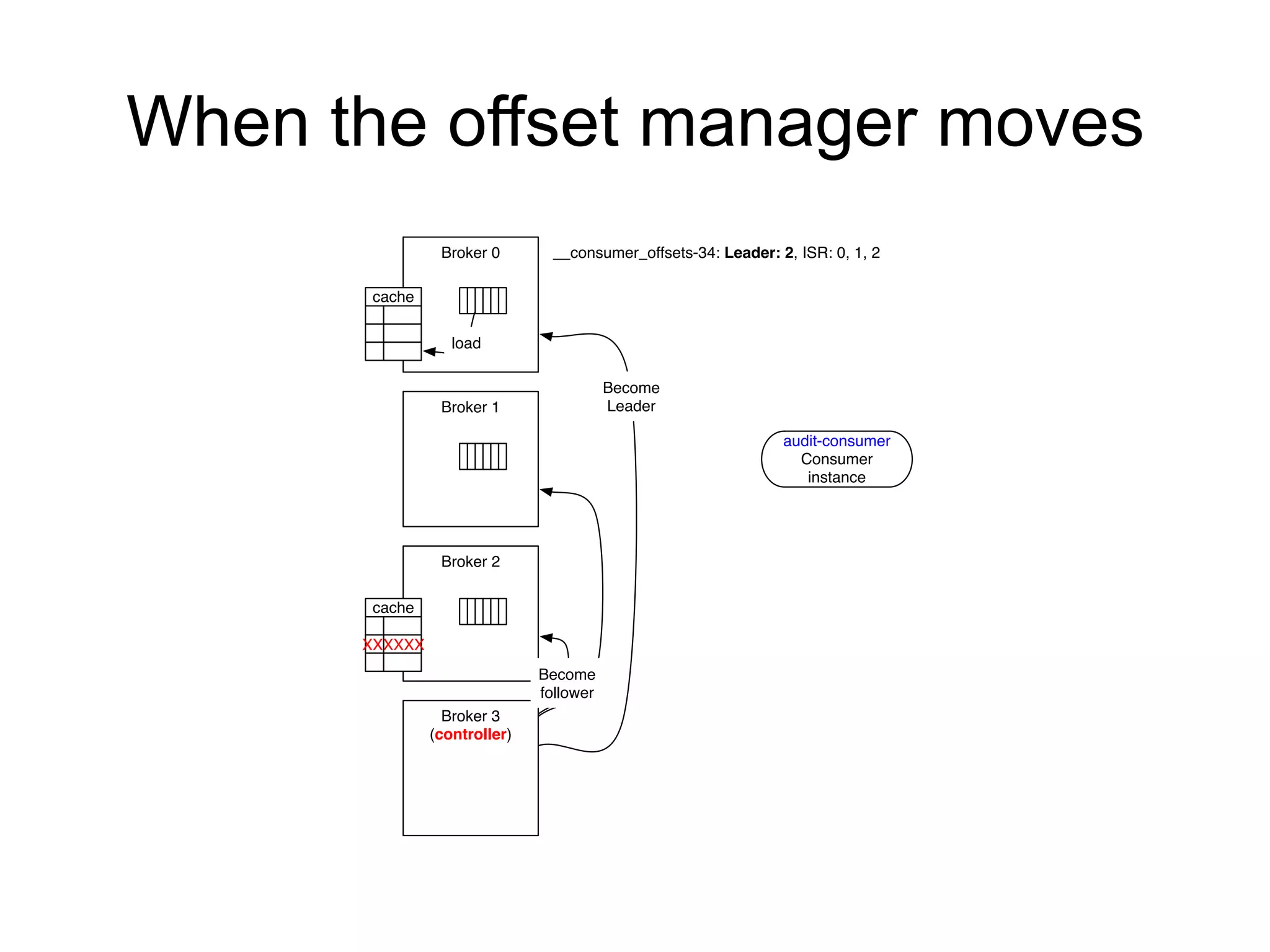

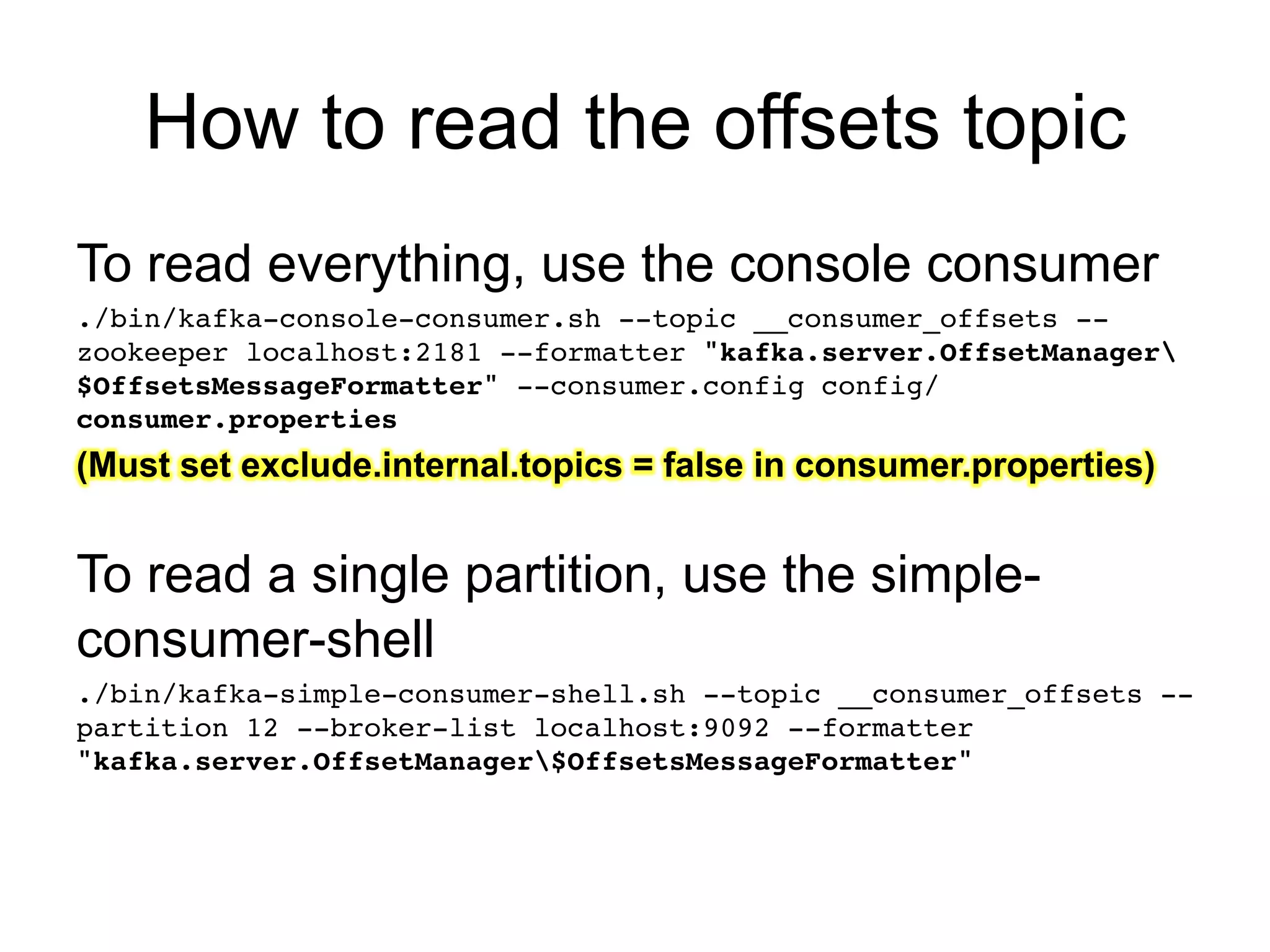

![Inside the offsets topic](https://image.slidesharecdn.com/offsetmanagement-150324213320-conversion-gate01/75/Consumer-offset-management-in-Kafka-33-2048.jpg)

Entry format: `[Group, Topic, Partition]::[Offset, Metadata, Timestamp]`

| Group | Topic | Partition | Offset | Metadata | Timestamp |
|---|---|---|---|---|---|
| audit-consumer | PageViewEvent | 7 | 53568 | NO_METADATA | 1416363620711 |
| audit-consumer | service-log-event | 5 | 168012 | NO_METADATA | 1416363620711 |
| audit-consumer | EmailBounceEvent | 4 | 8524676 | NO_METADATA | 1416363620711 |
| audit-consumer | ClickEvent | 0 | 8132292 | NO_METADATA | 1416363620711 |
| audit-consumer | metrics-event | 1 | 1835900 | NO_METADATA | 1416363620711 |
| audit-consumer | CompanyEvent | 0 | 109337 | NO_METADATA | 1416363620711 |
| audit-consumer | test-topic | 1 | 352989 | NO_METADATA | 1416363620711 |
| audit-consumer | meetup-event | 2 | 39961 | NO_METADATA | 1416363620711 |
| audit-consumer | push-topic | 6 | 4210366 | NO_METADATA | 1416363620711 |
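For working with dumps in this printed form, a small parser can split each entry into its key and value fields. This is a sketch under assumptions: `OffsetEntry` and `parse_entry` are hypothetical names, the regular expression targets only the text layout shown on the slide (not the binary format stored in the topic), the metadata field is assumed to contain no commas or brackets, and the timestamp is assumed to be milliseconds since the Unix epoch.

```python
import re
from datetime import datetime, timezone
from typing import NamedTuple


class OffsetEntry(NamedTuple):
    group: str
    topic: str
    partition: int
    offset: int
    metadata: str
    timestamp_ms: int


# Matches "[group,topic,partition]::OffsetAndMetadata[offset,metadata,timestamp]".
_ENTRY = re.compile(
    r"\[(?P<group>[^,\]]+),(?P<topic>[^,\]]+),(?P<partition>\d+)\]"
    r"::OffsetAndMetadata\[(?P<offset>\d+),(?P<metadata>[^,\]]*),(?P<ts>\d+)\]"
)


def parse_entry(line: str) -> OffsetEntry:
    """Parse one printed offsets-topic entry; raise ValueError if it does not match."""
    m = _ENTRY.fullmatch(line.strip())
    if m is None:
        raise ValueError(f"unrecognized entry: {line!r}")
    return OffsetEntry(
        group=m["group"],
        topic=m["topic"],
        partition=int(m["partition"]),
        offset=int(m["offset"]),
        metadata=m["metadata"],
        timestamp_ms=int(m["ts"]),
    )


entry = parse_entry(
    "[audit-consumer,PageViewEvent,7]::OffsetAndMetadata[53568,NO_METADATA,1416363620711]"
)
# Assumes the timestamp is epoch milliseconds.
committed_at = datetime.fromtimestamp(entry.timestamp_ms / 1000, tz=timezone.utc)
print(entry.group, entry.topic, entry.partition, entry.offset)
print(committed_at.isoformat())
```

The first three fields form the message key and the last three form the value, matching the `[Group, Topic, Partition]::[Offset, Metadata, Timestamp]` layout.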