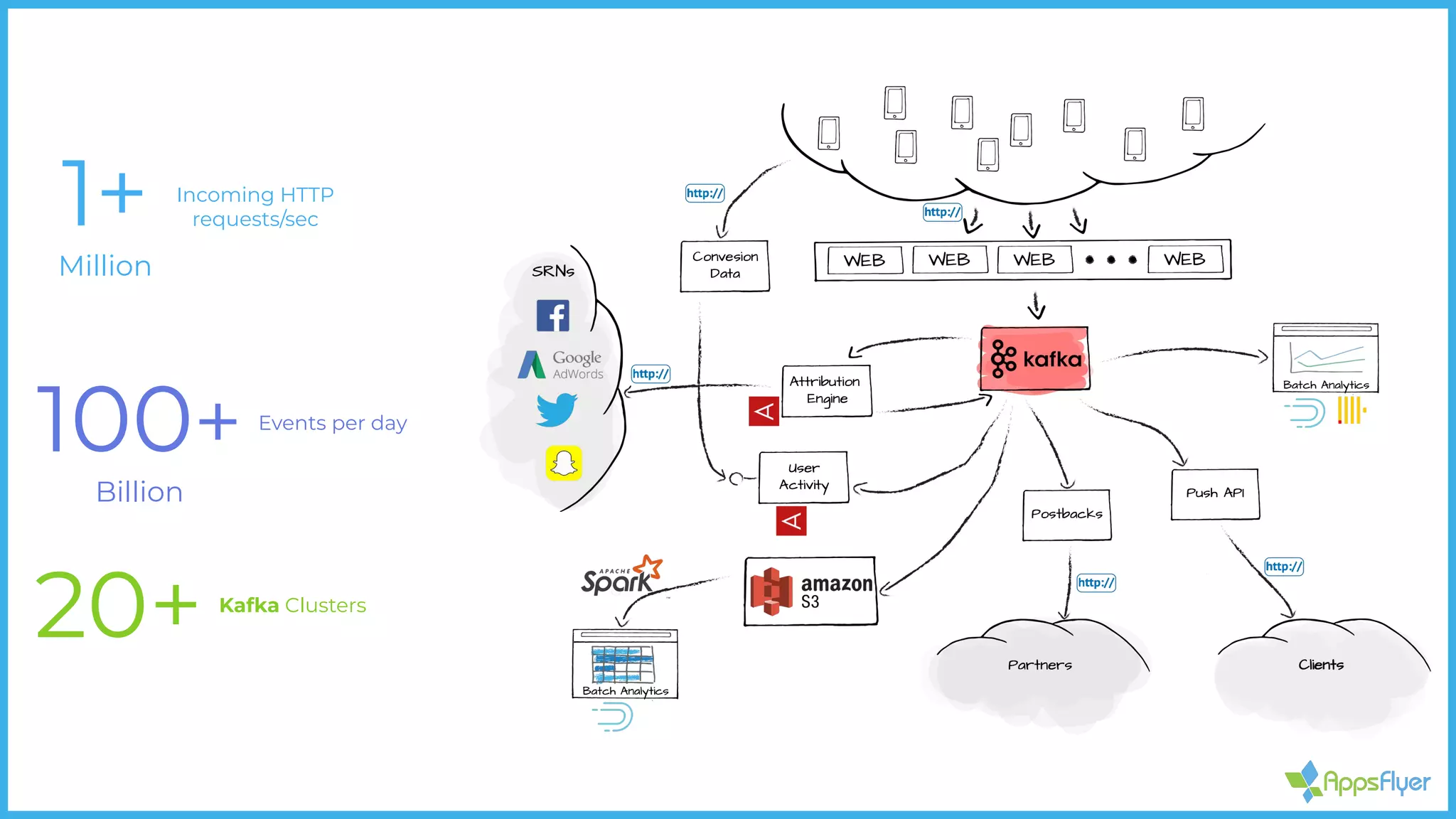

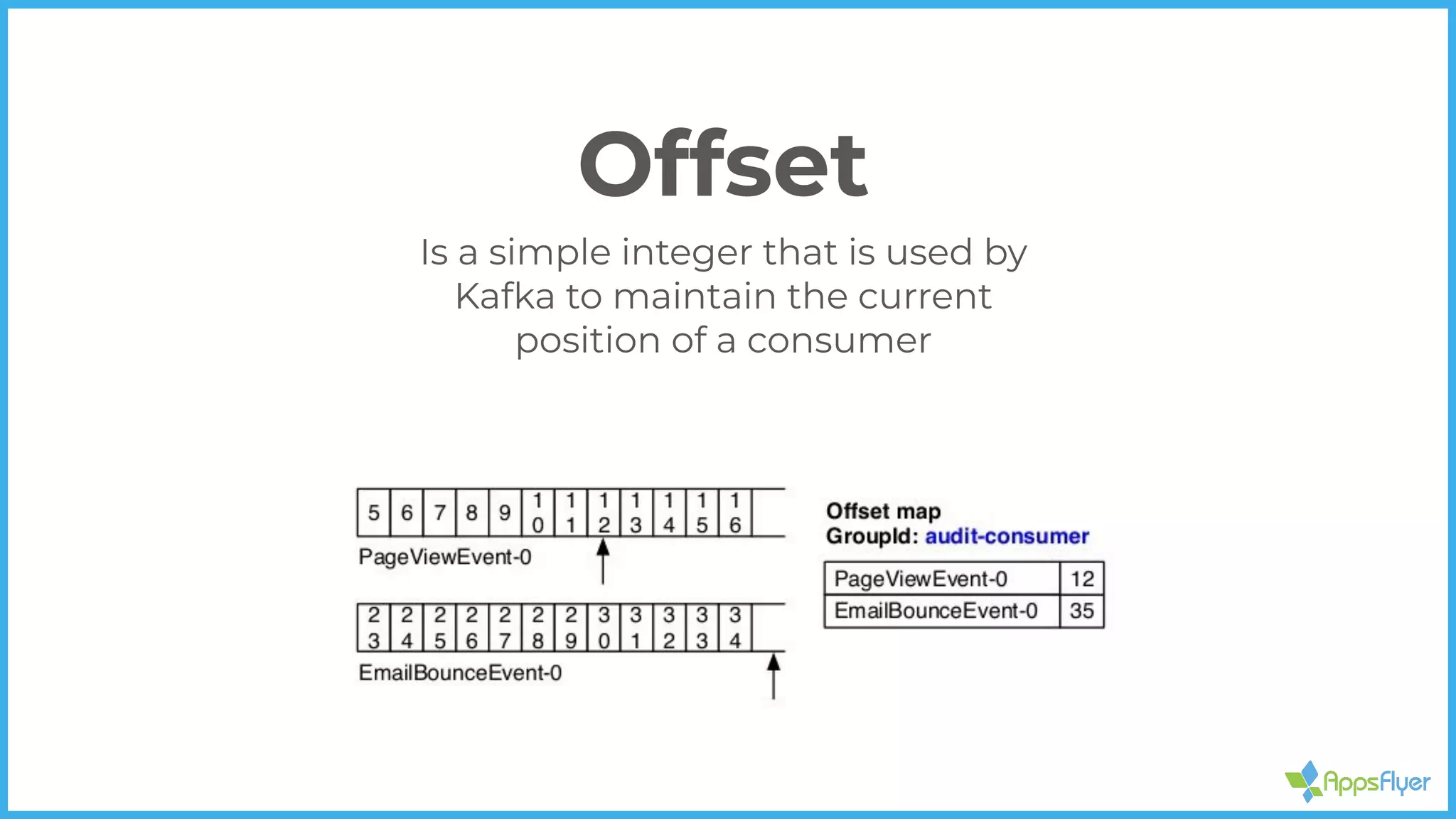

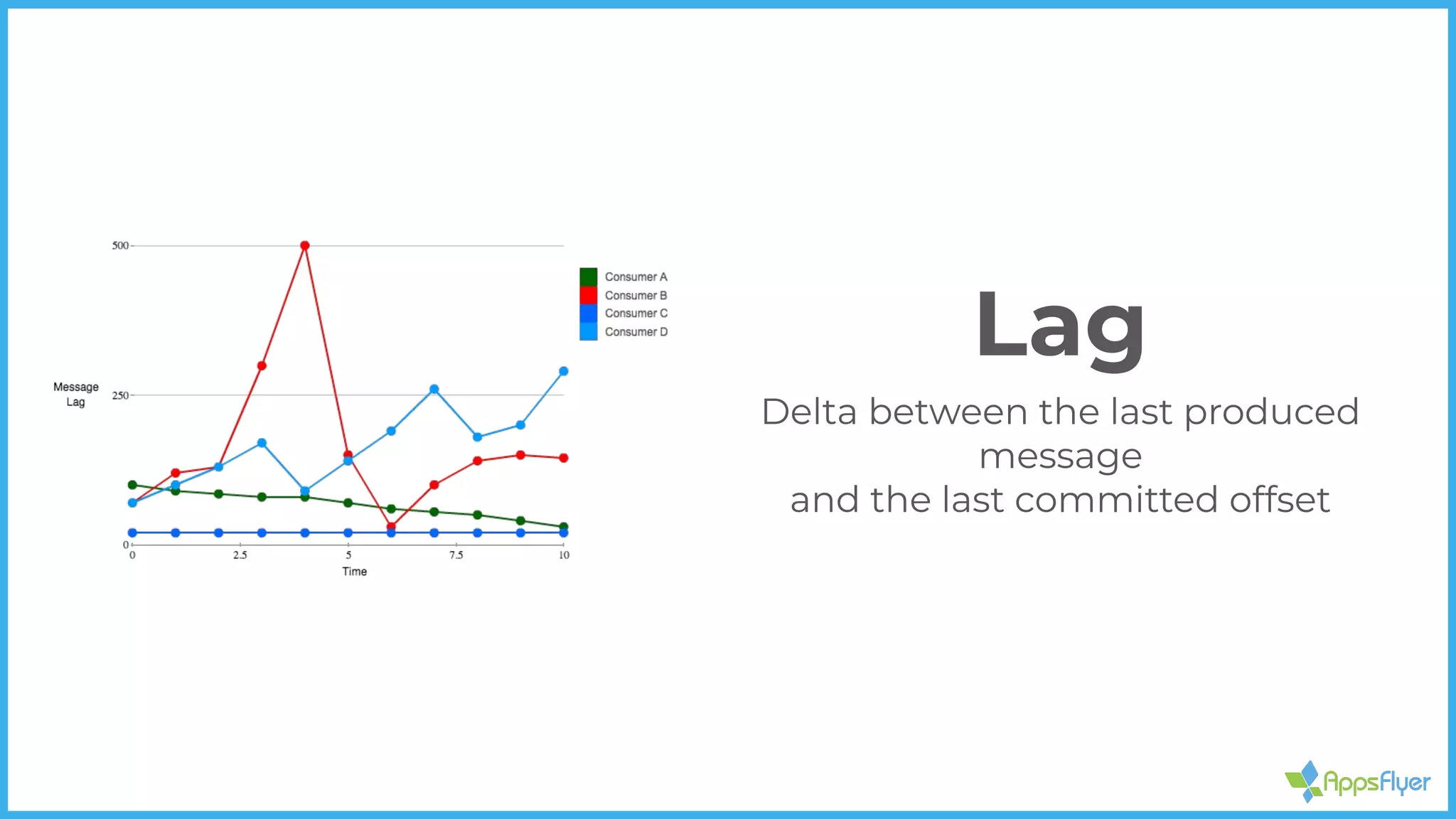

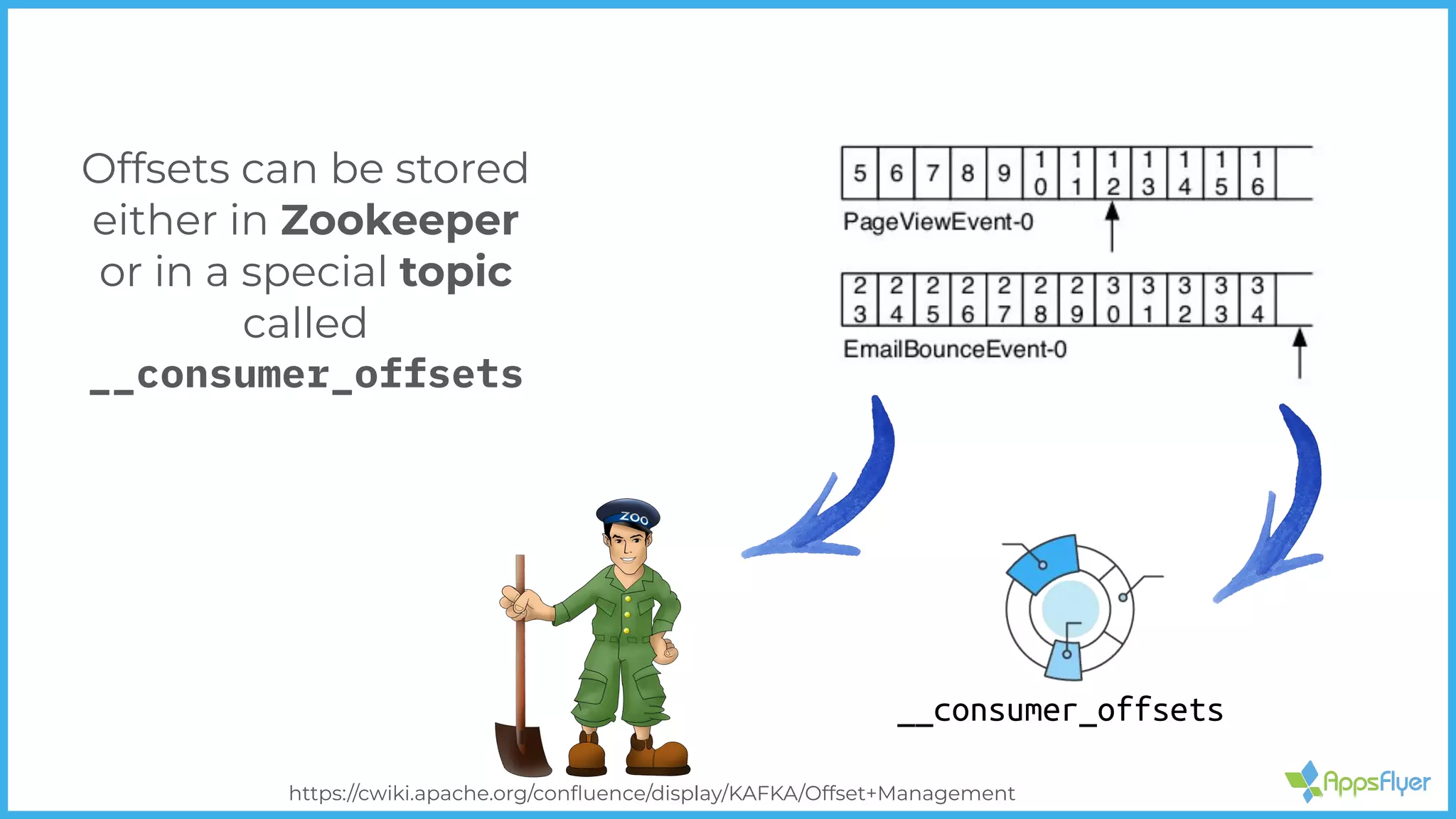

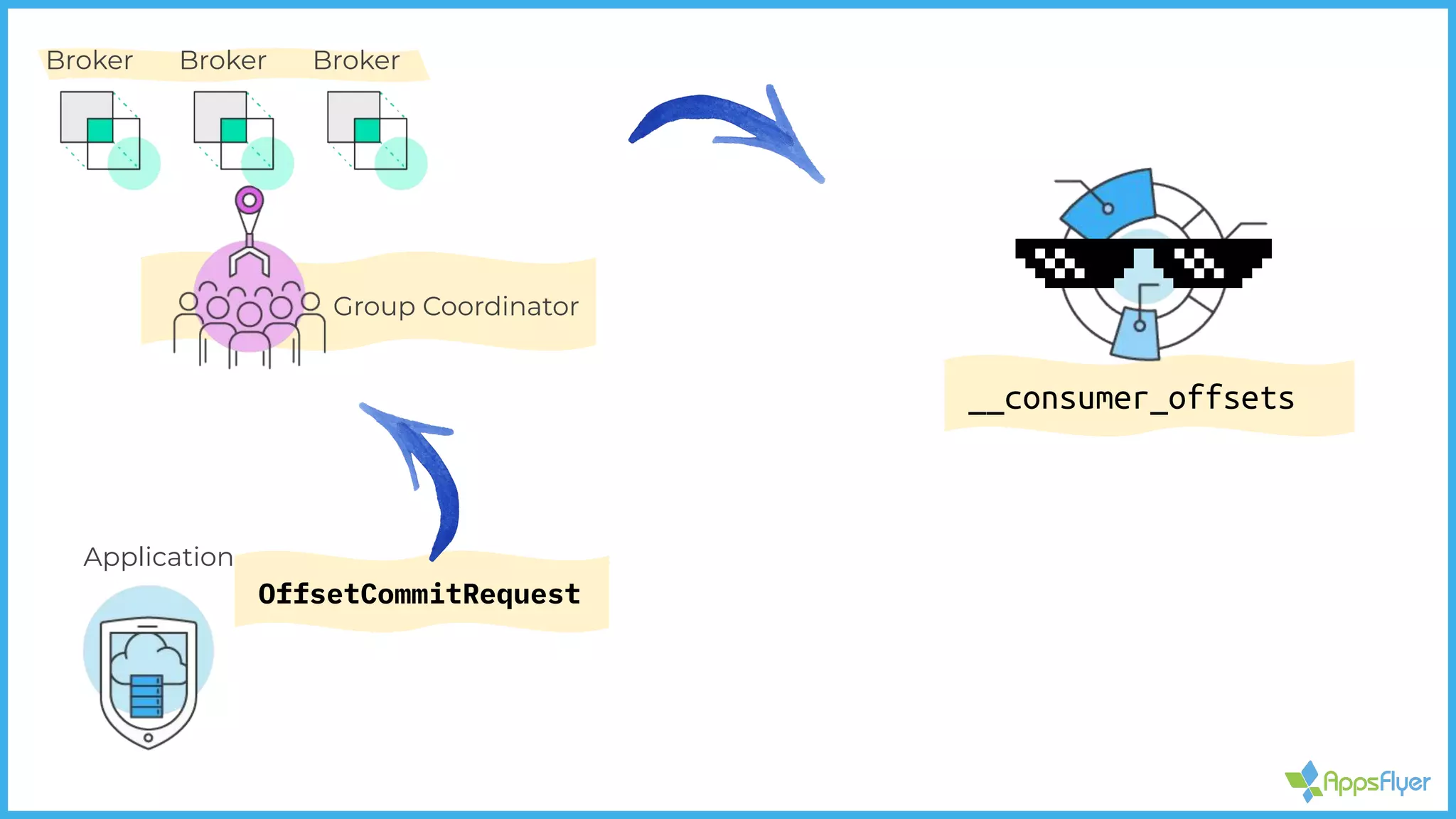

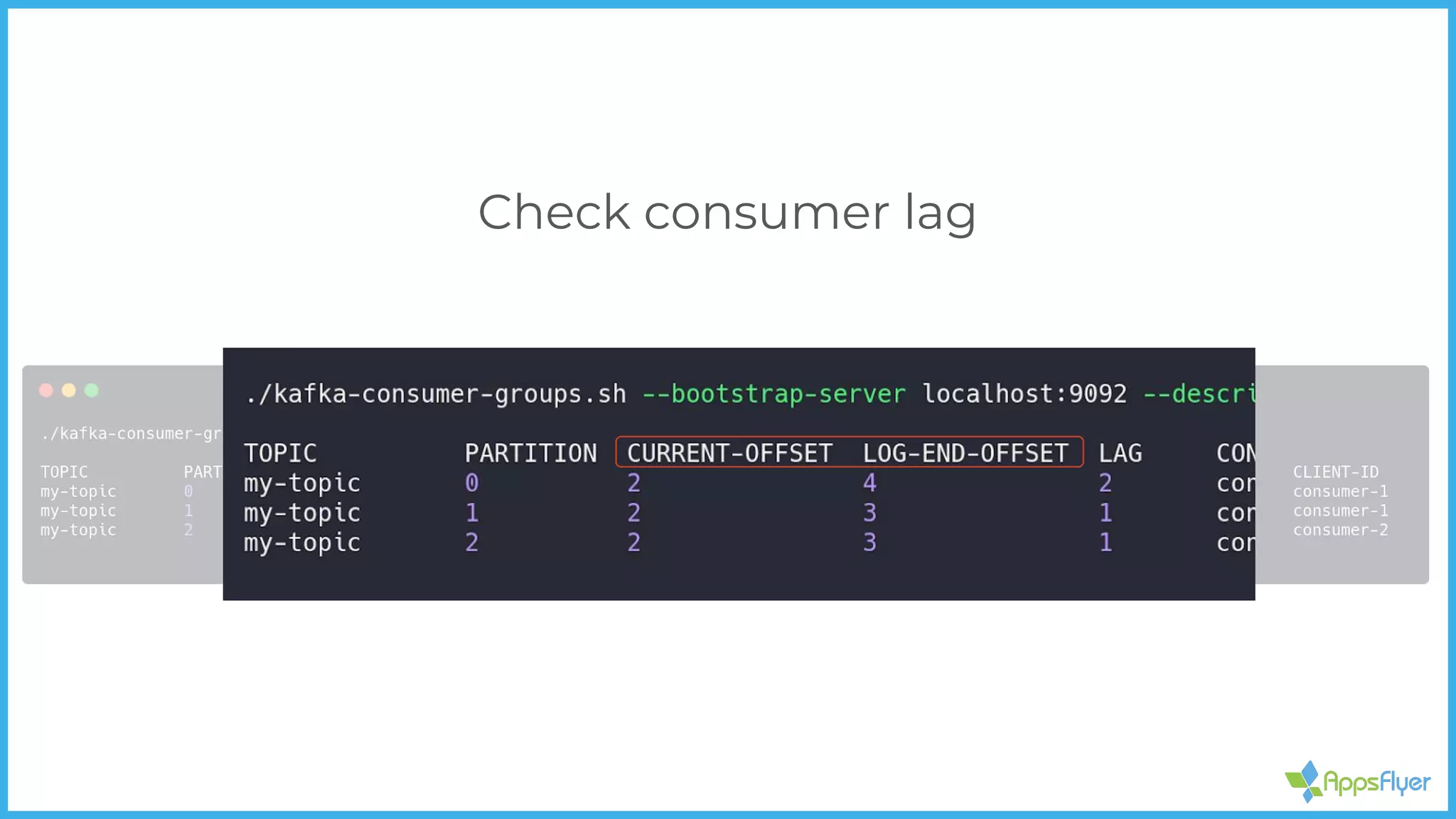

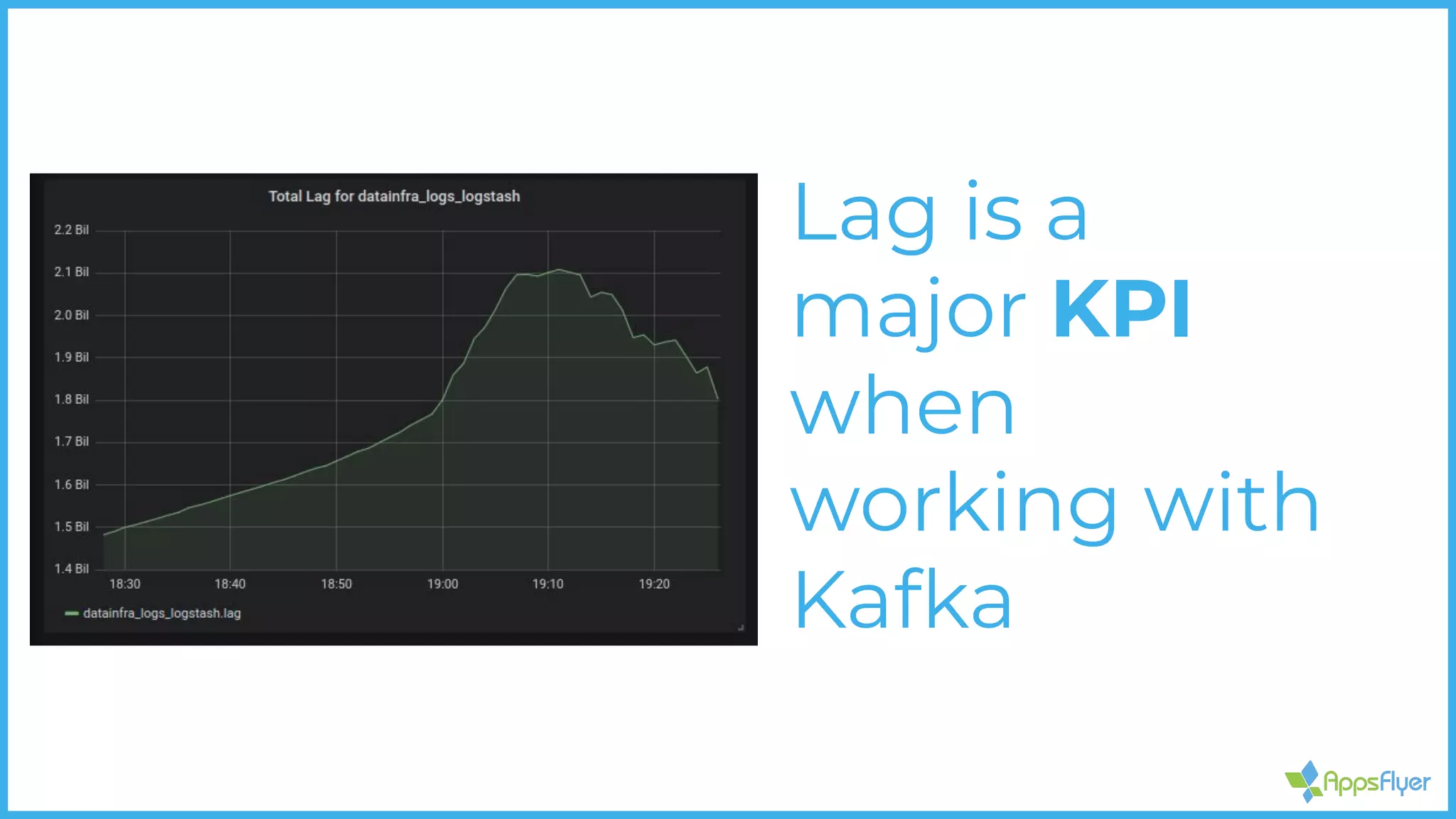

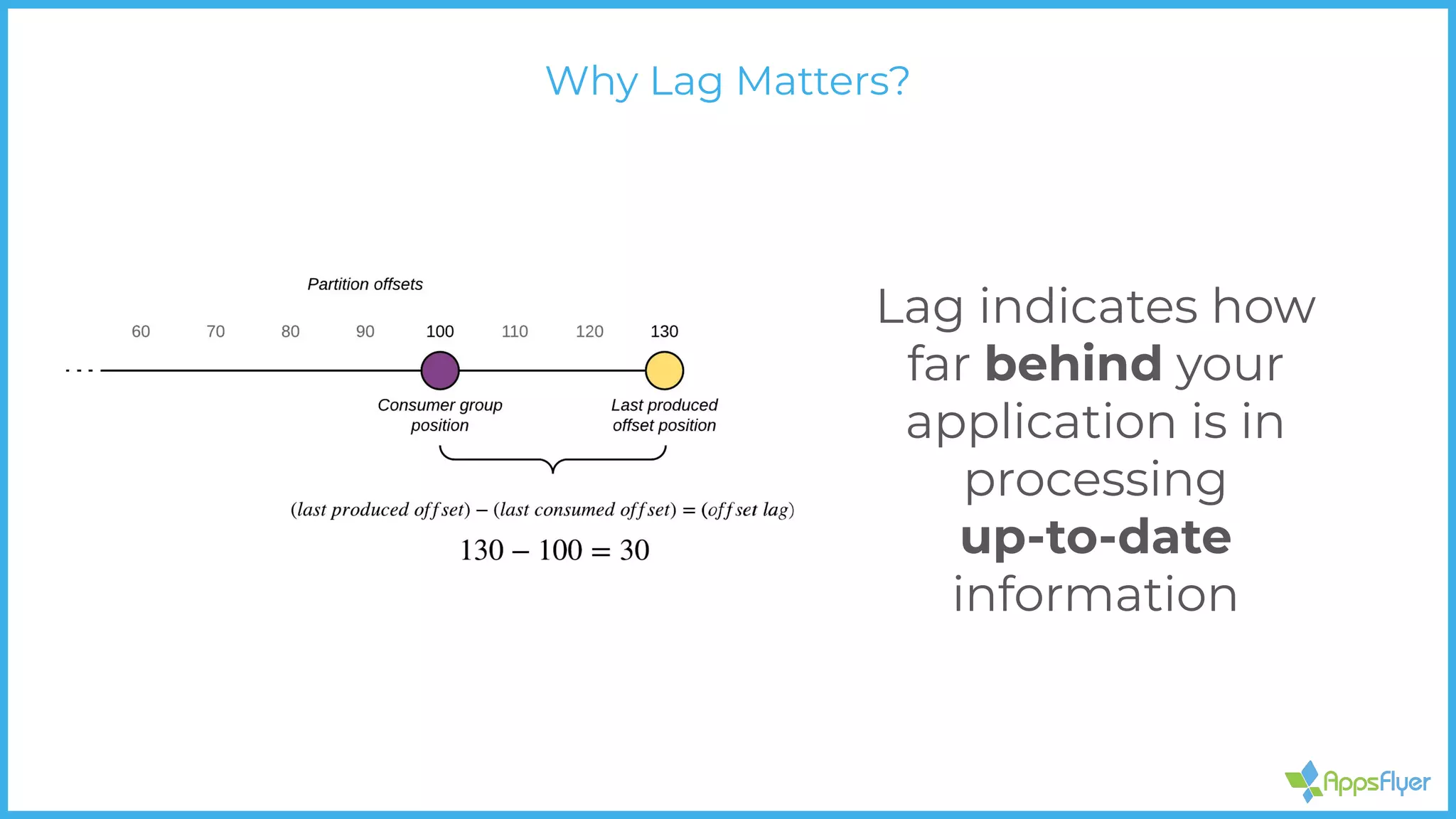

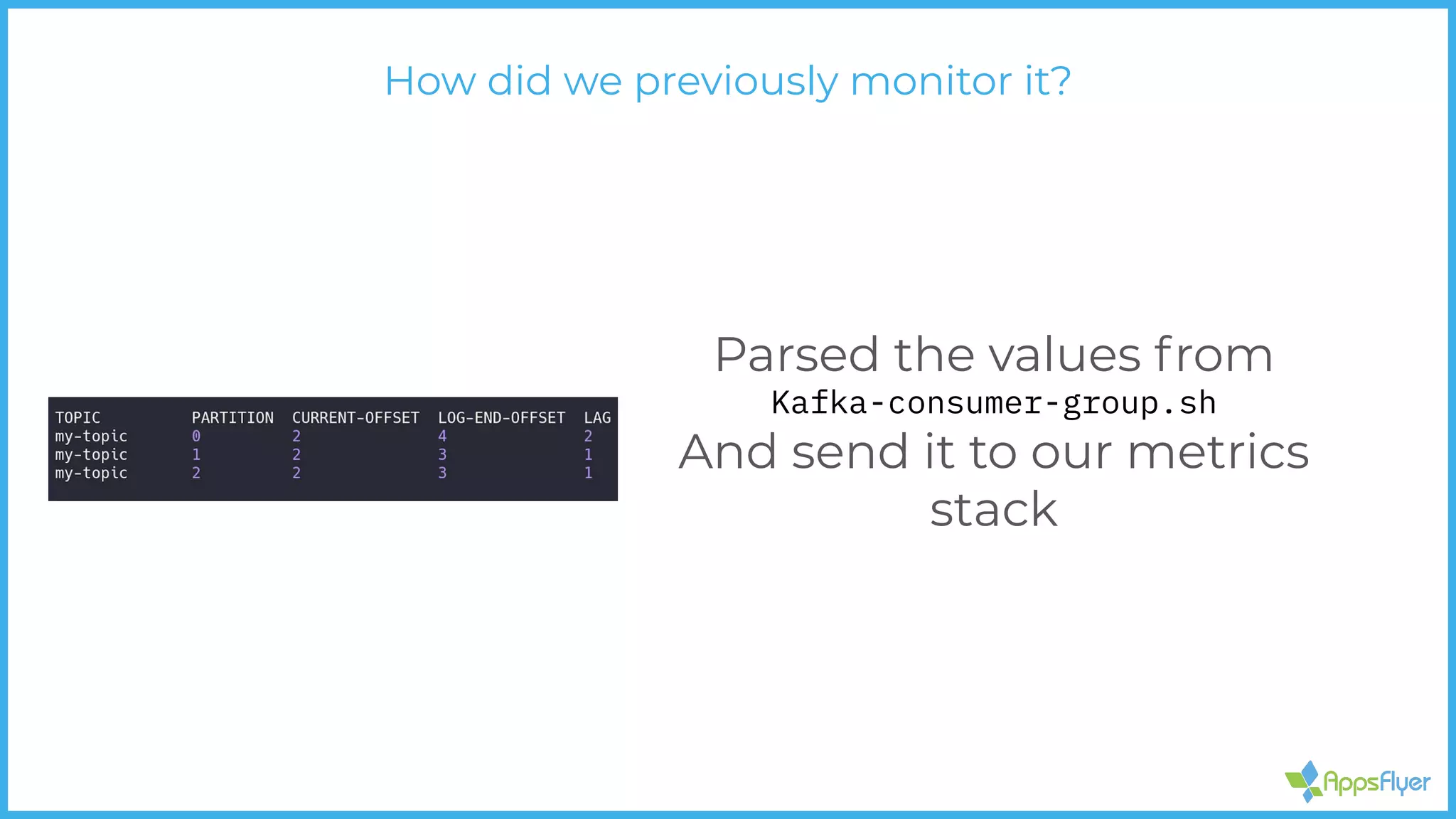

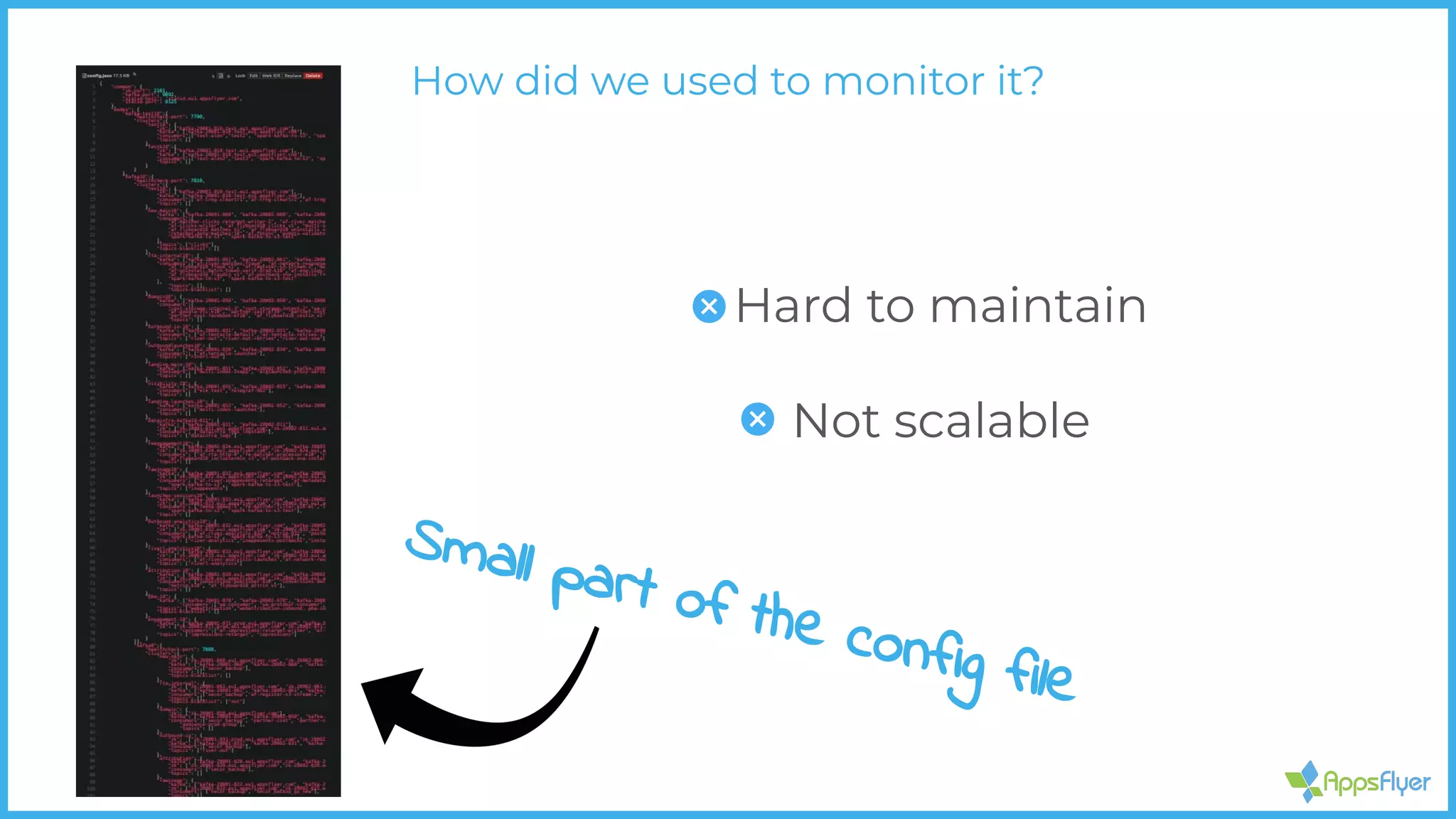

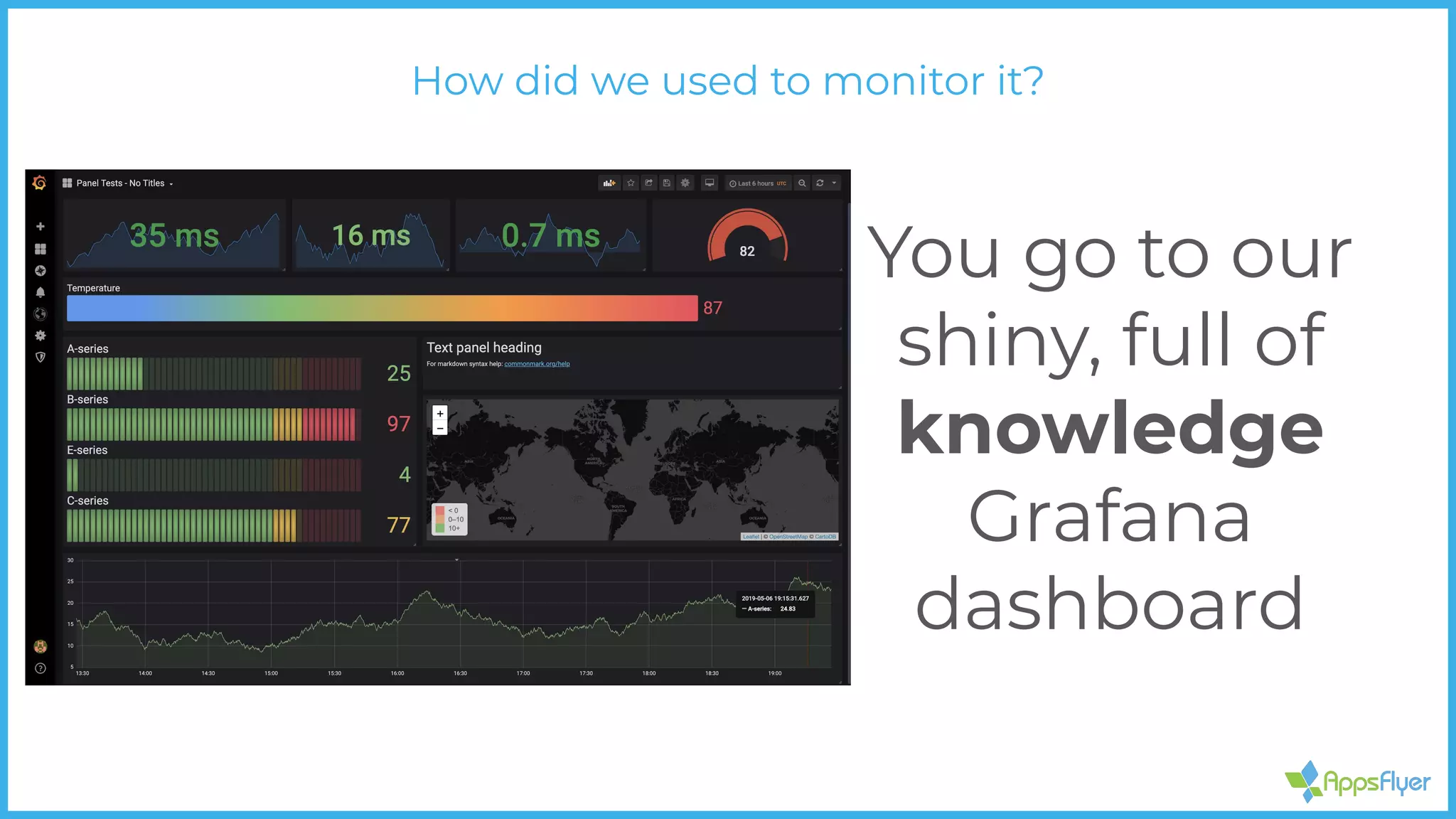

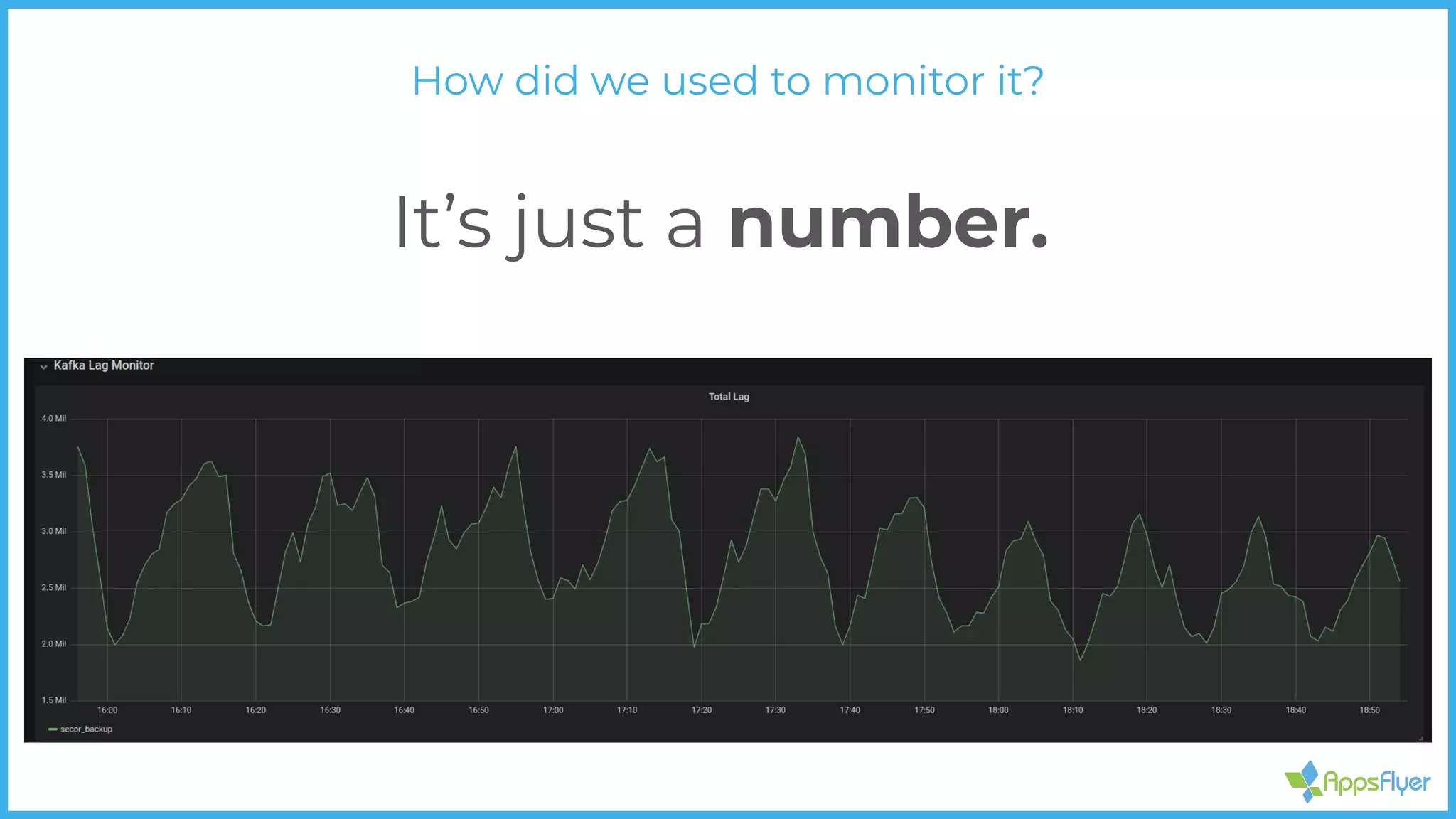

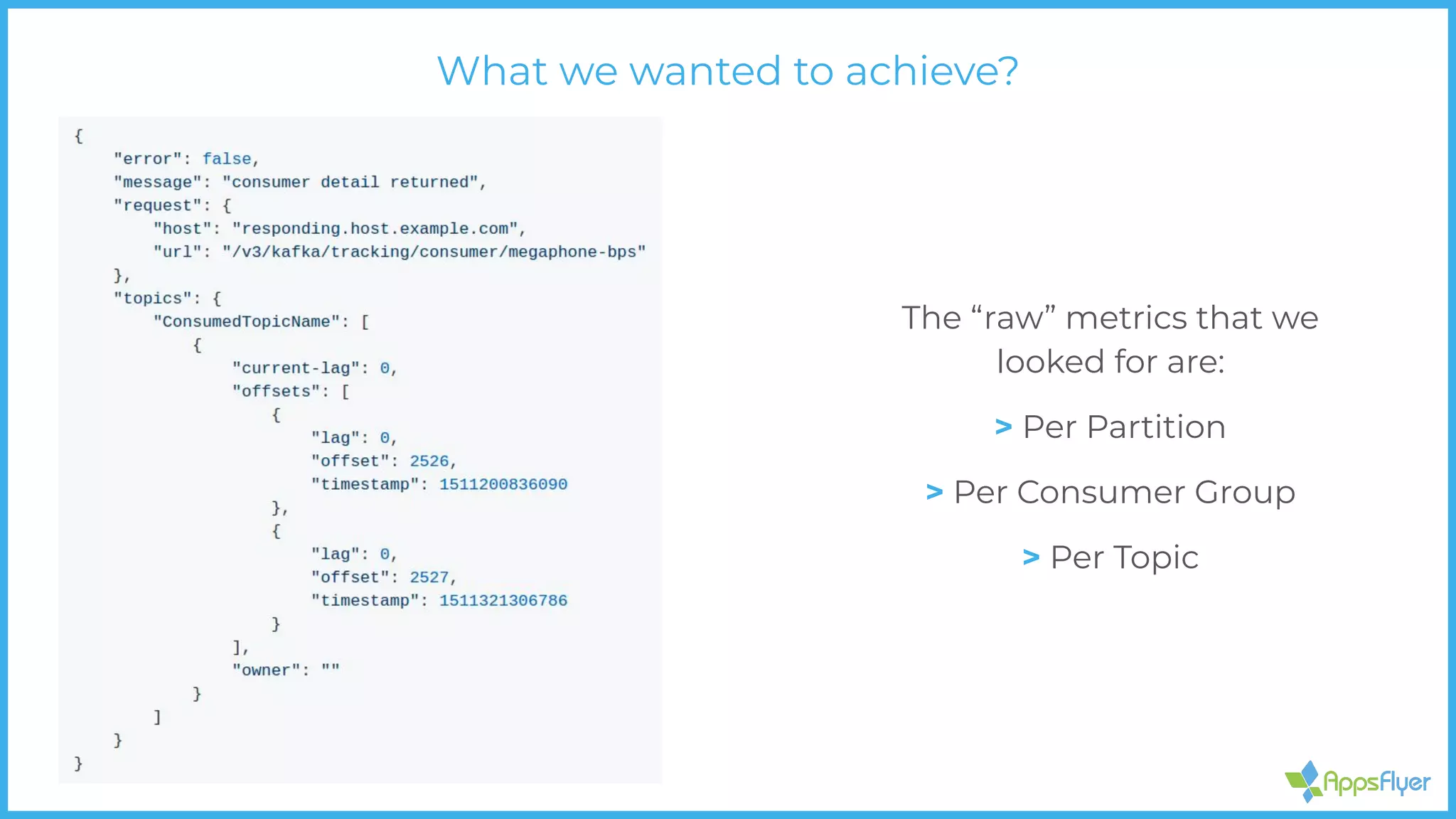

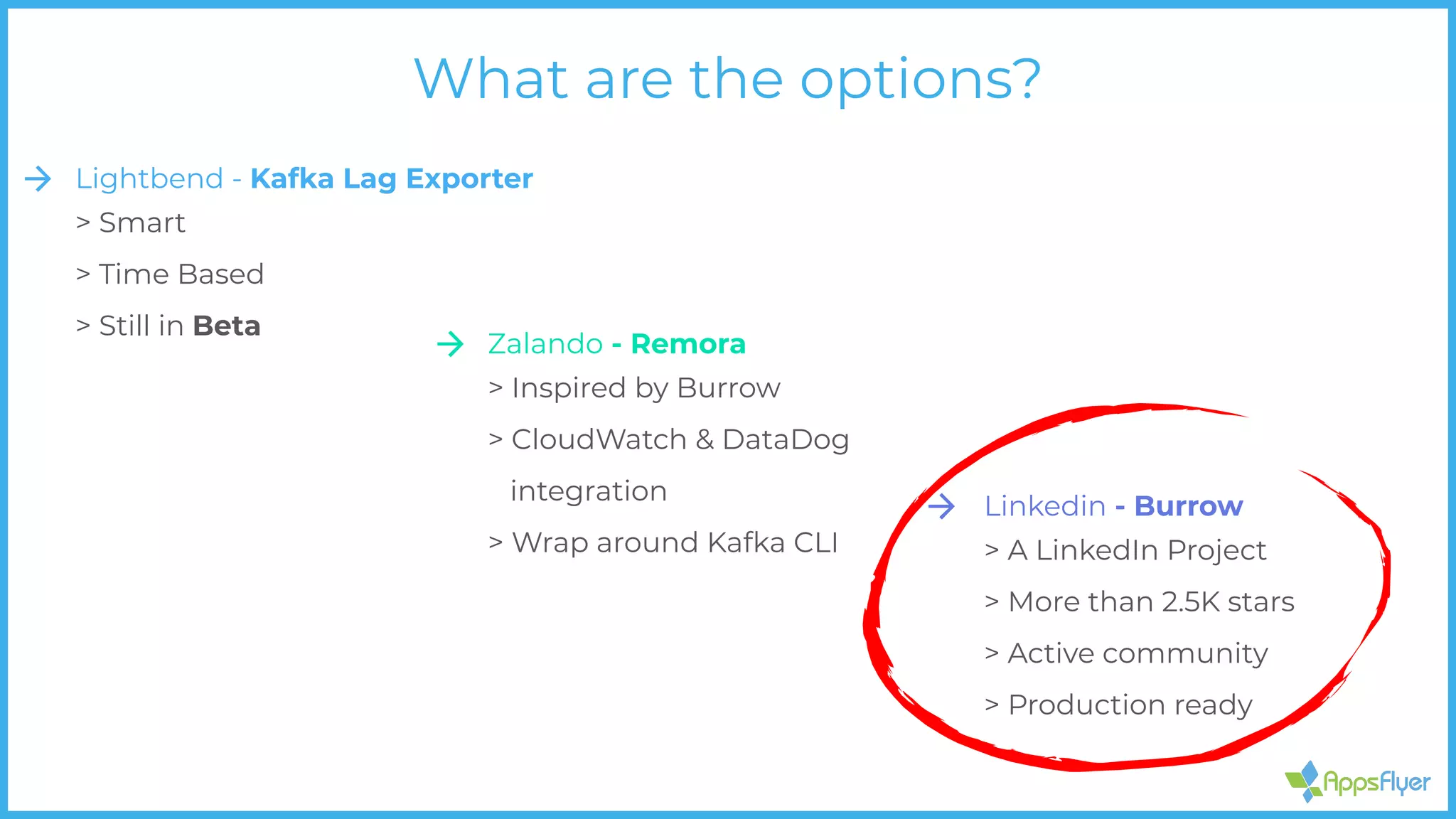

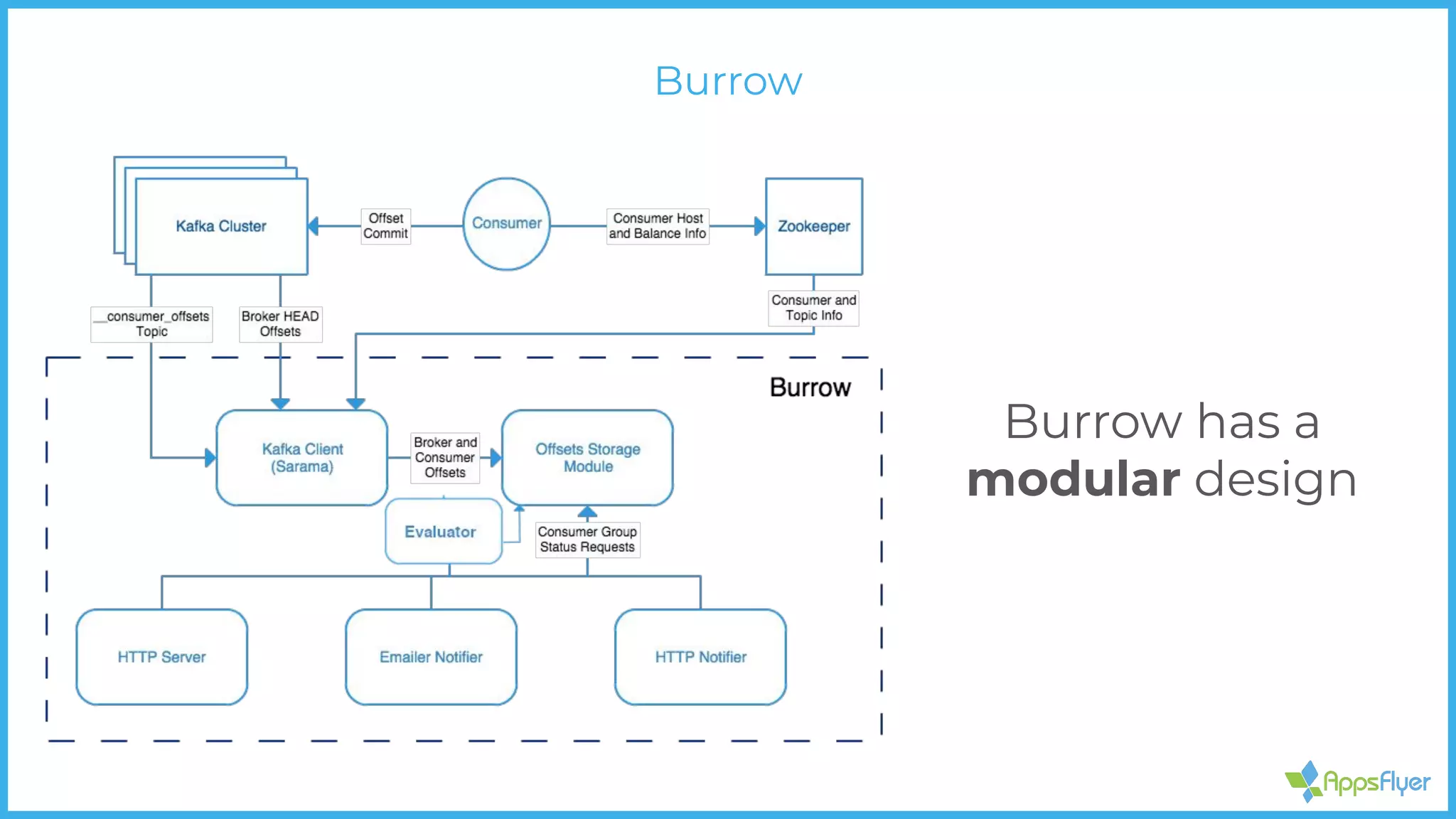

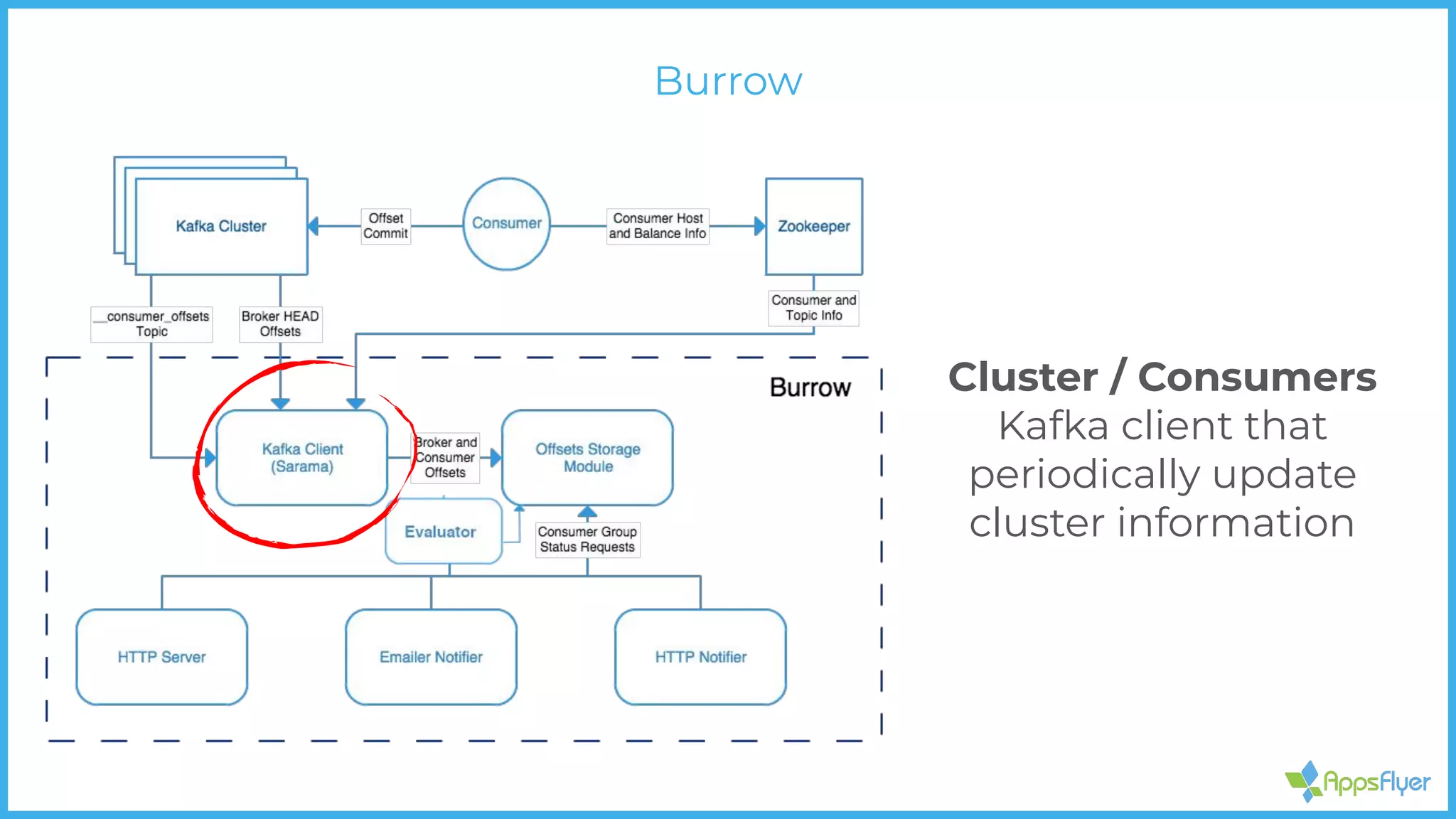

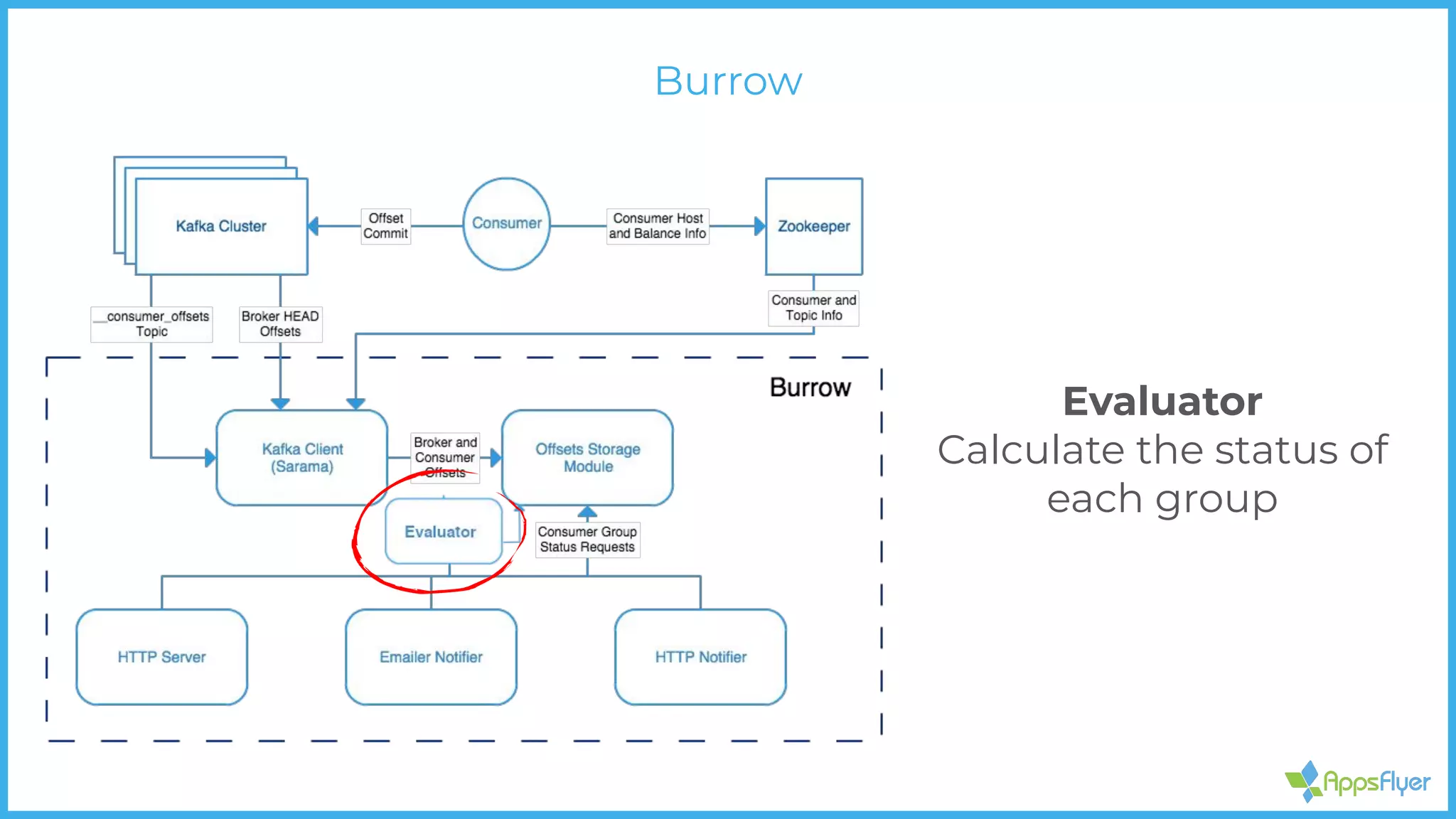

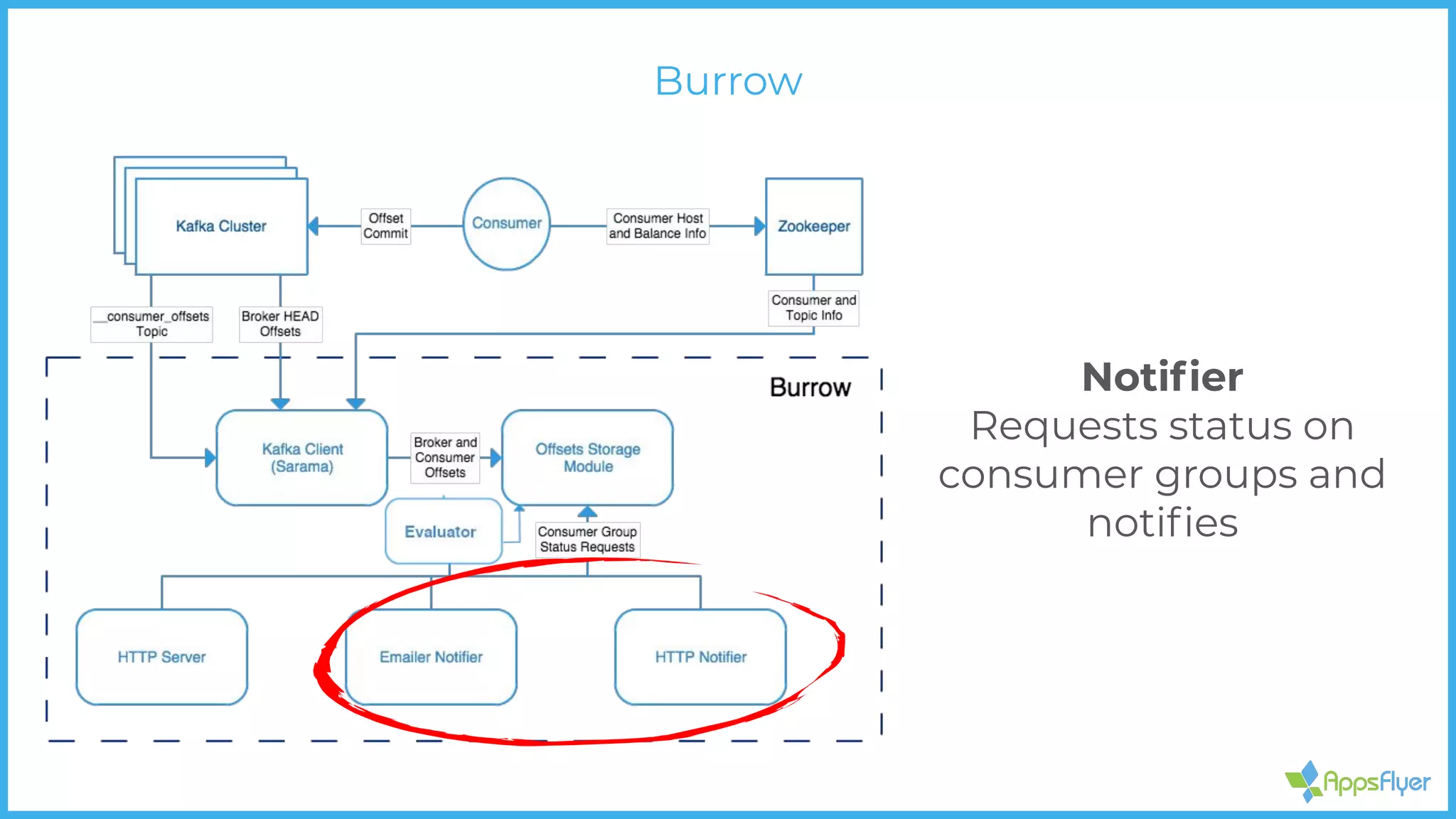

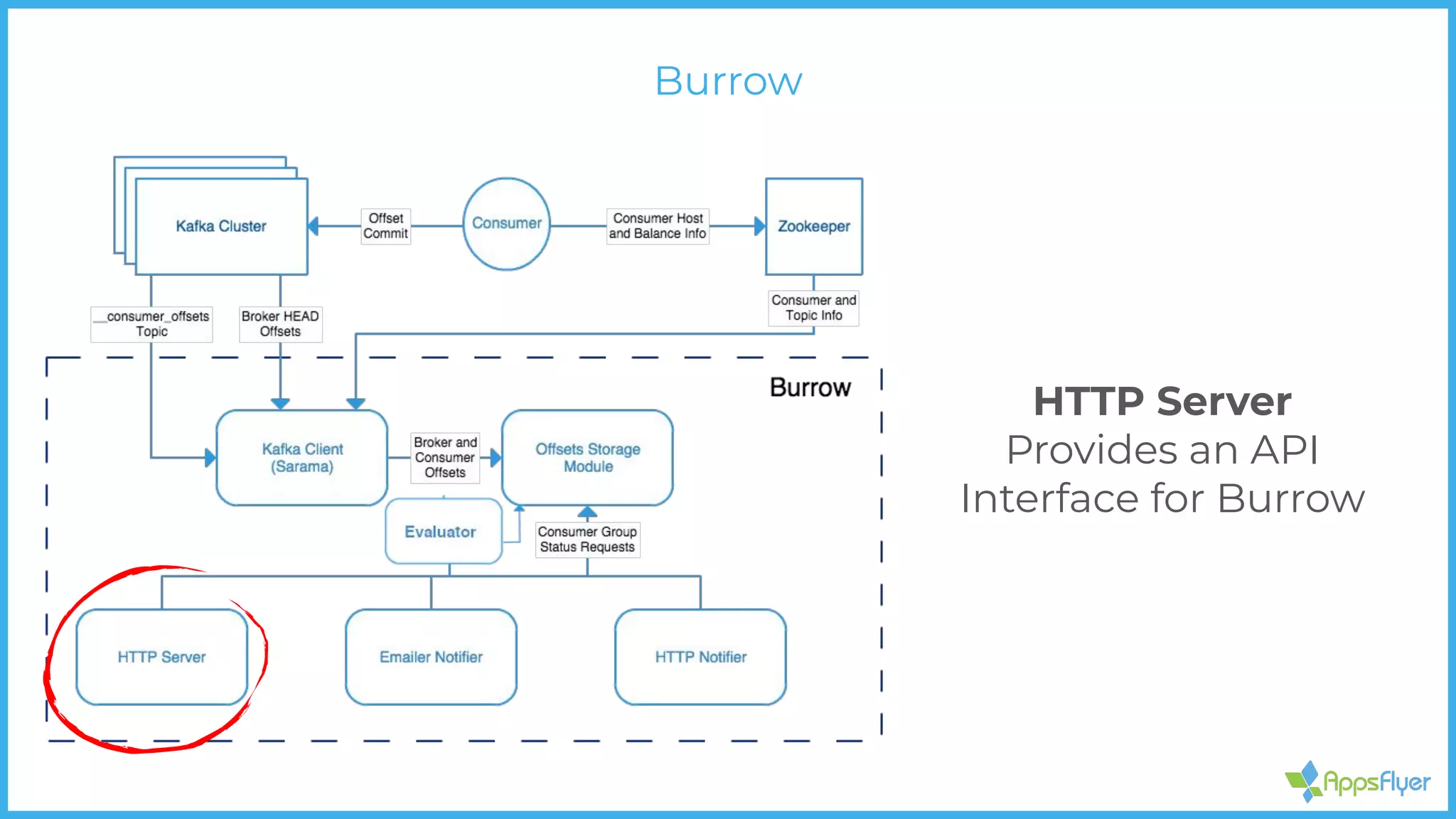

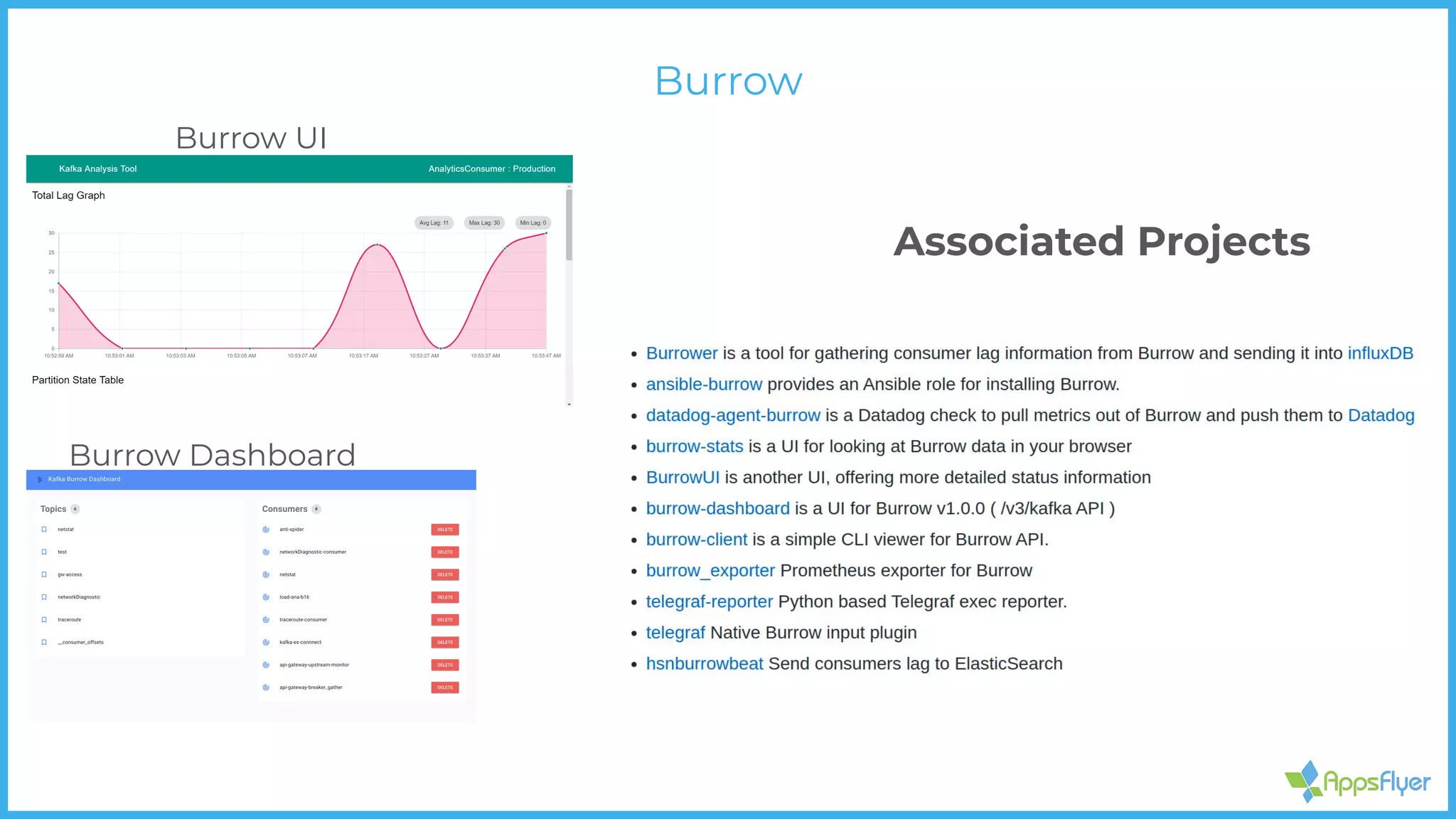

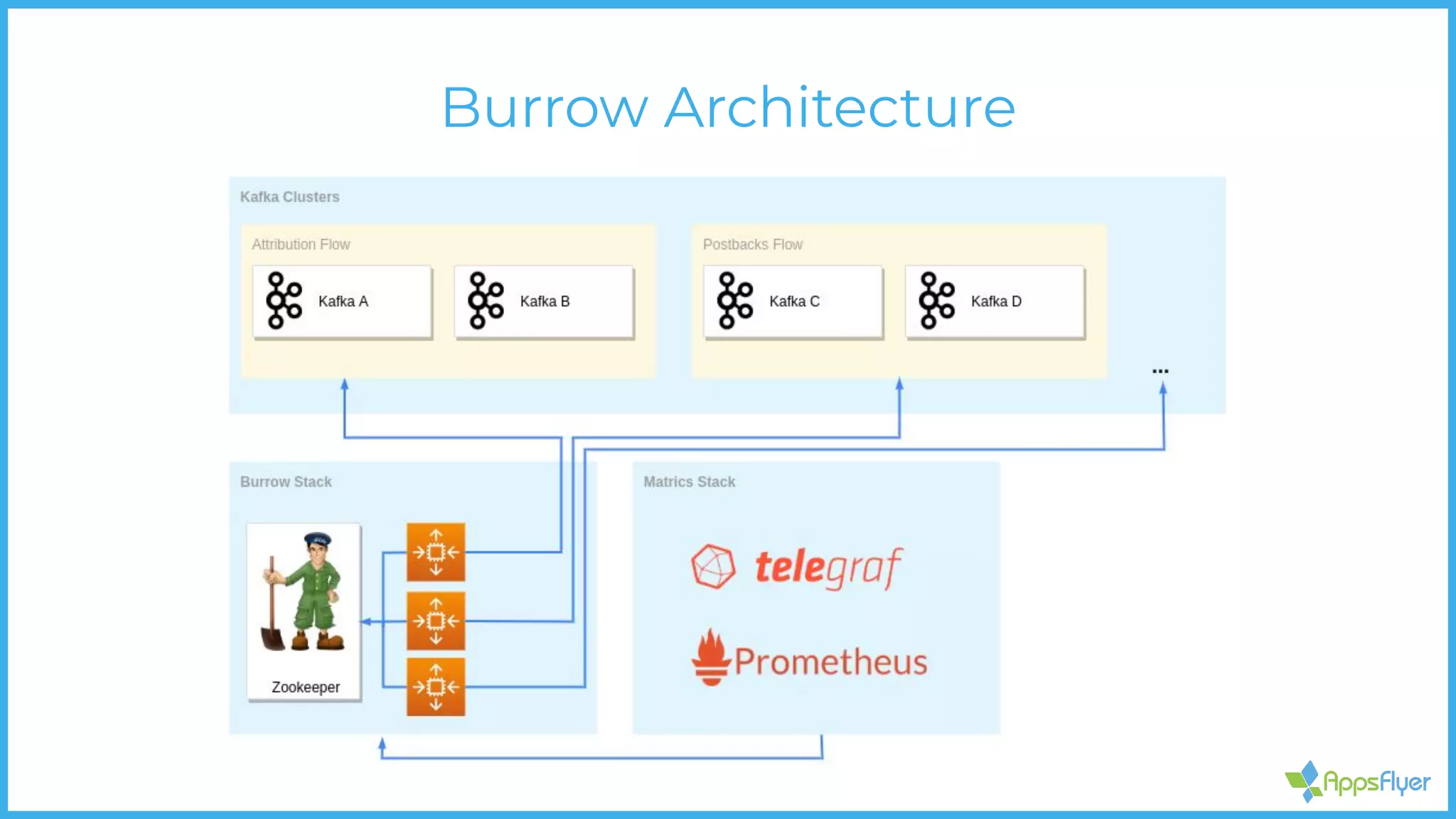

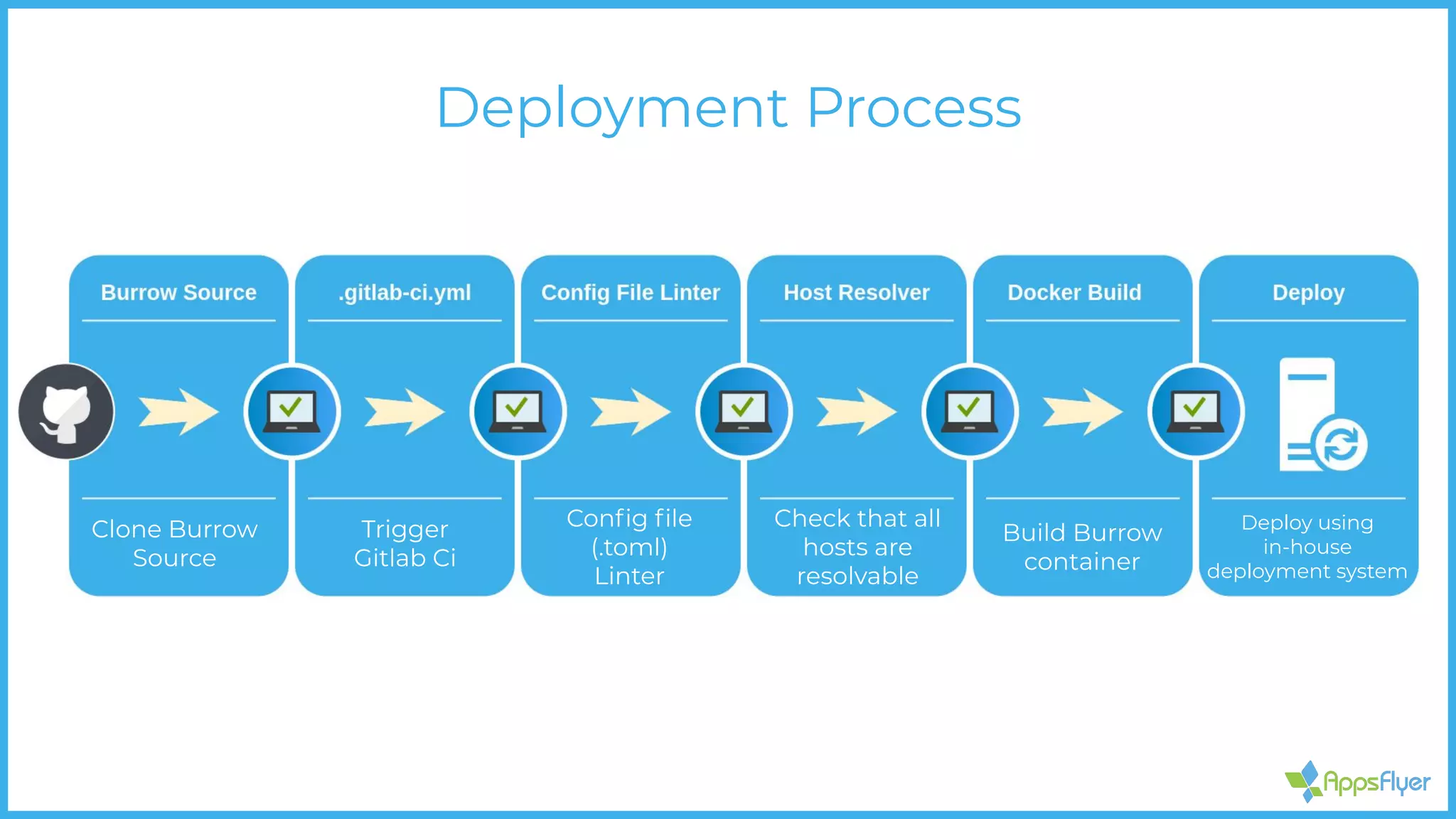

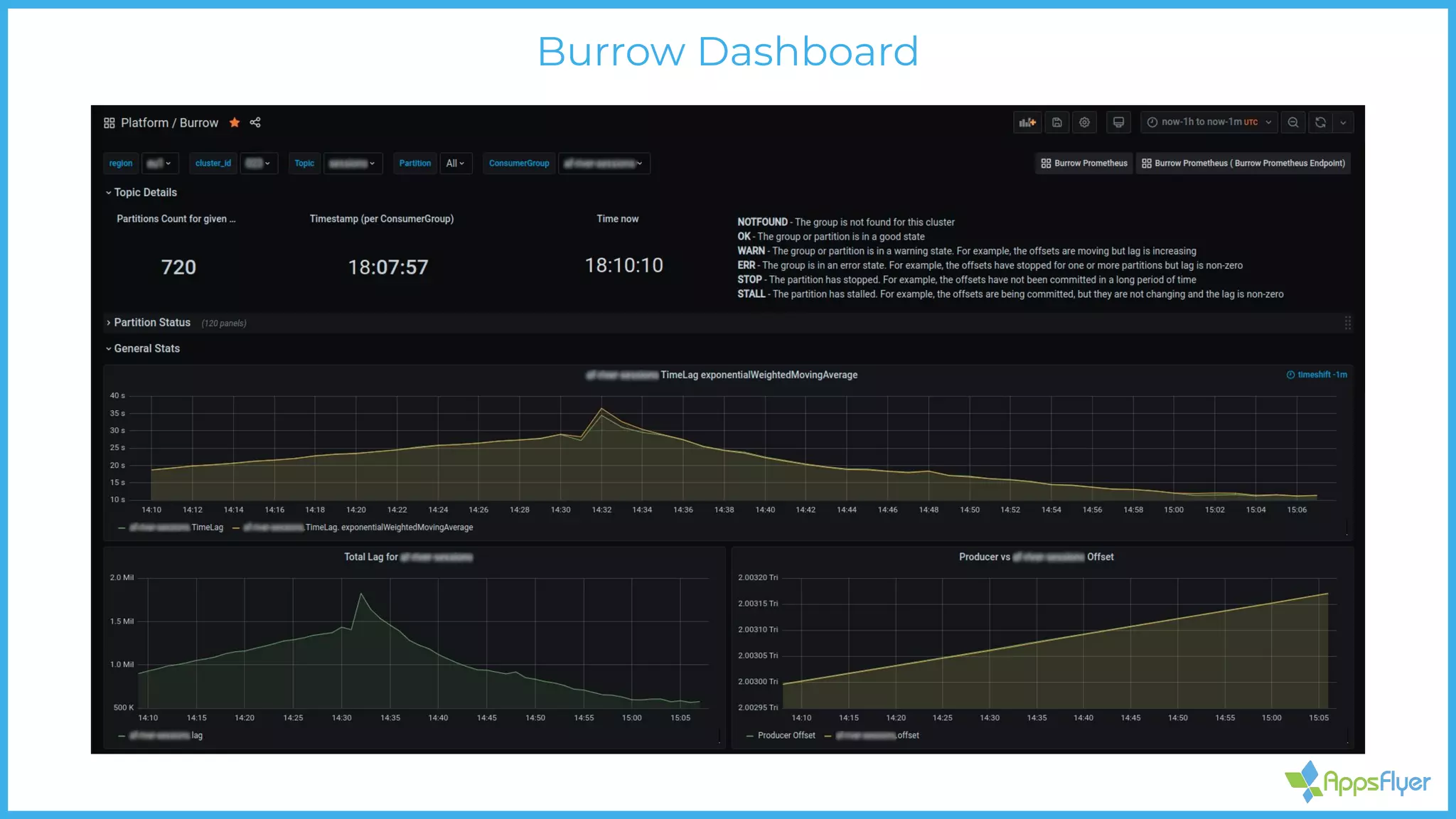

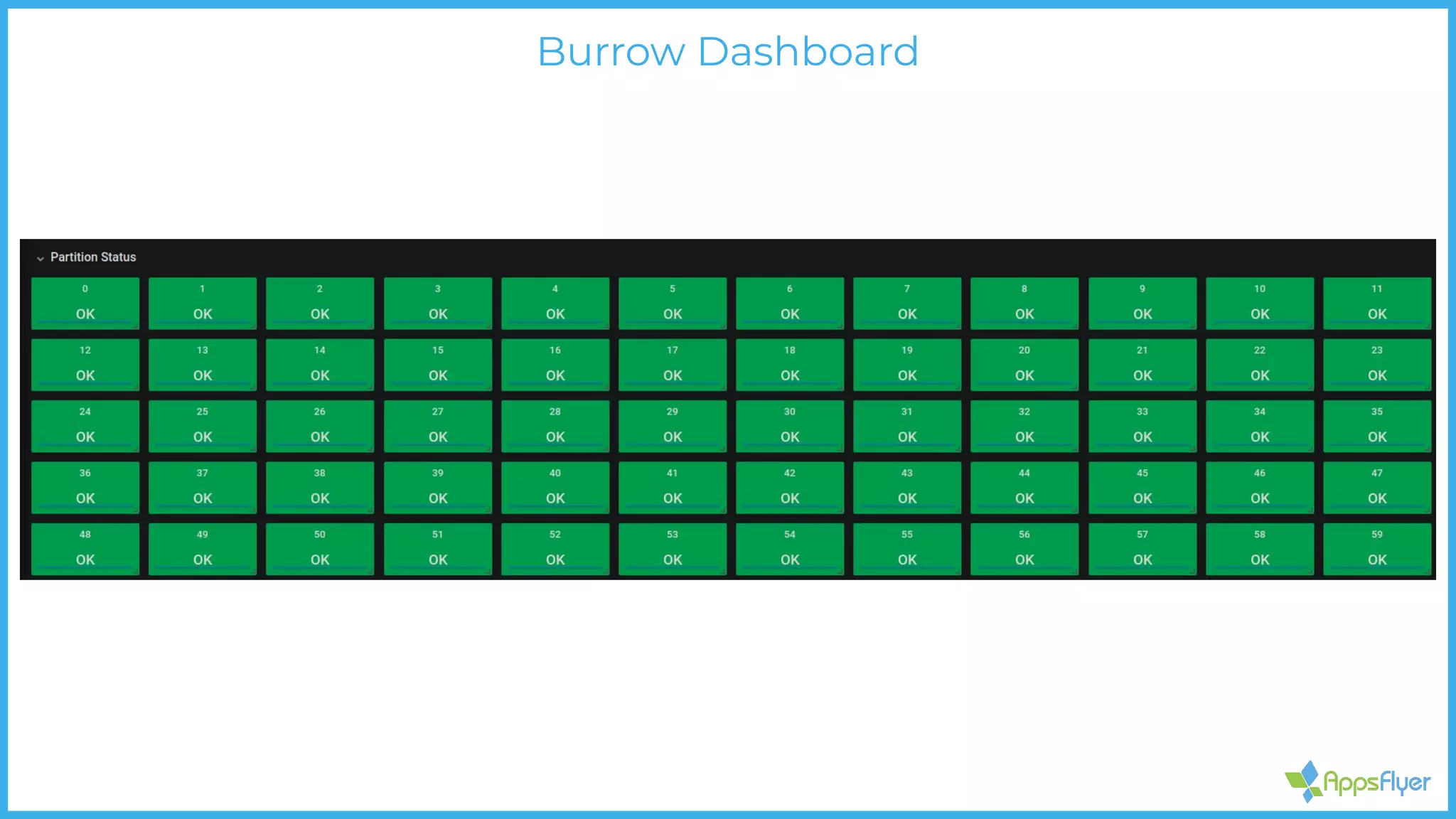

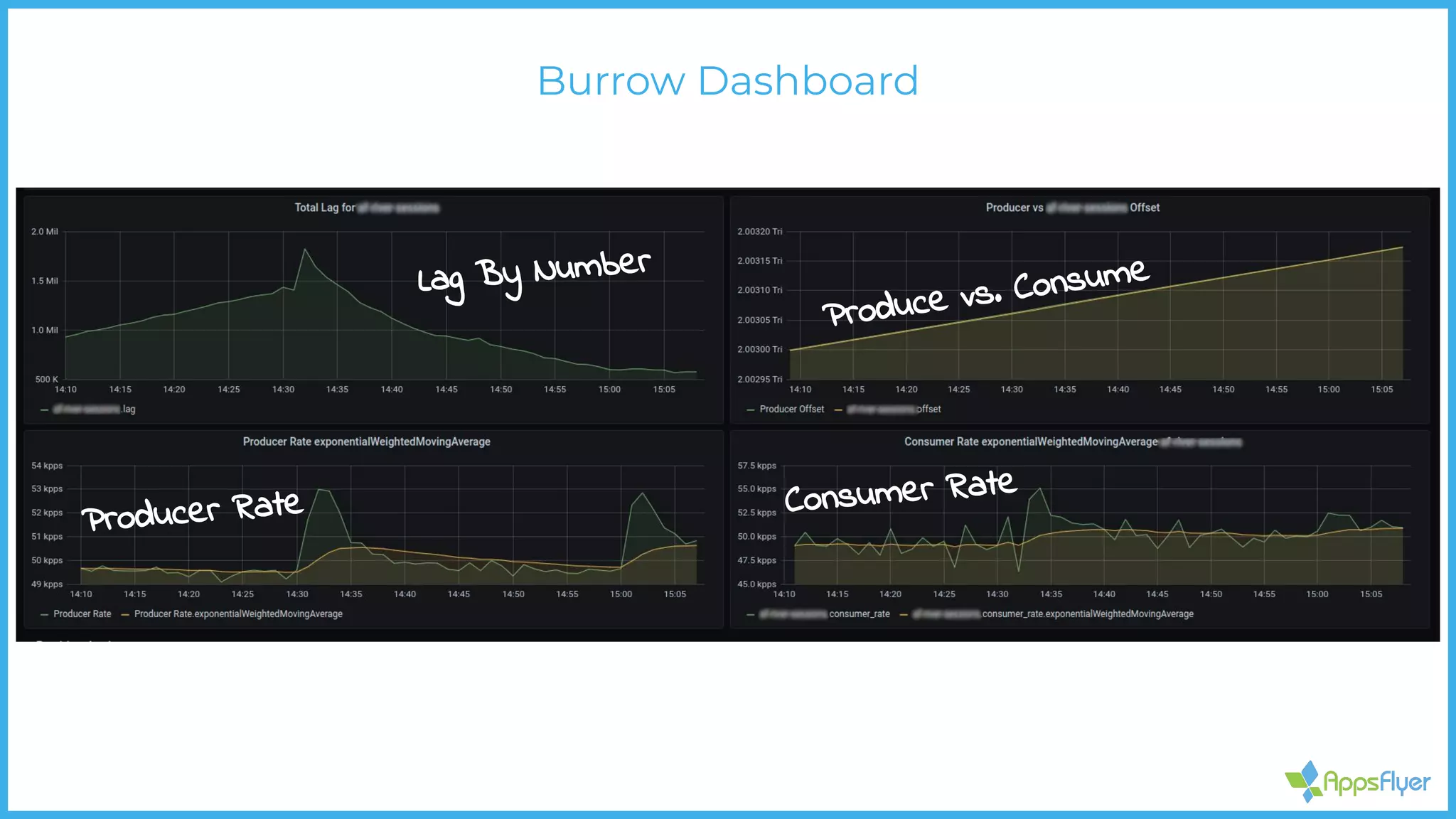

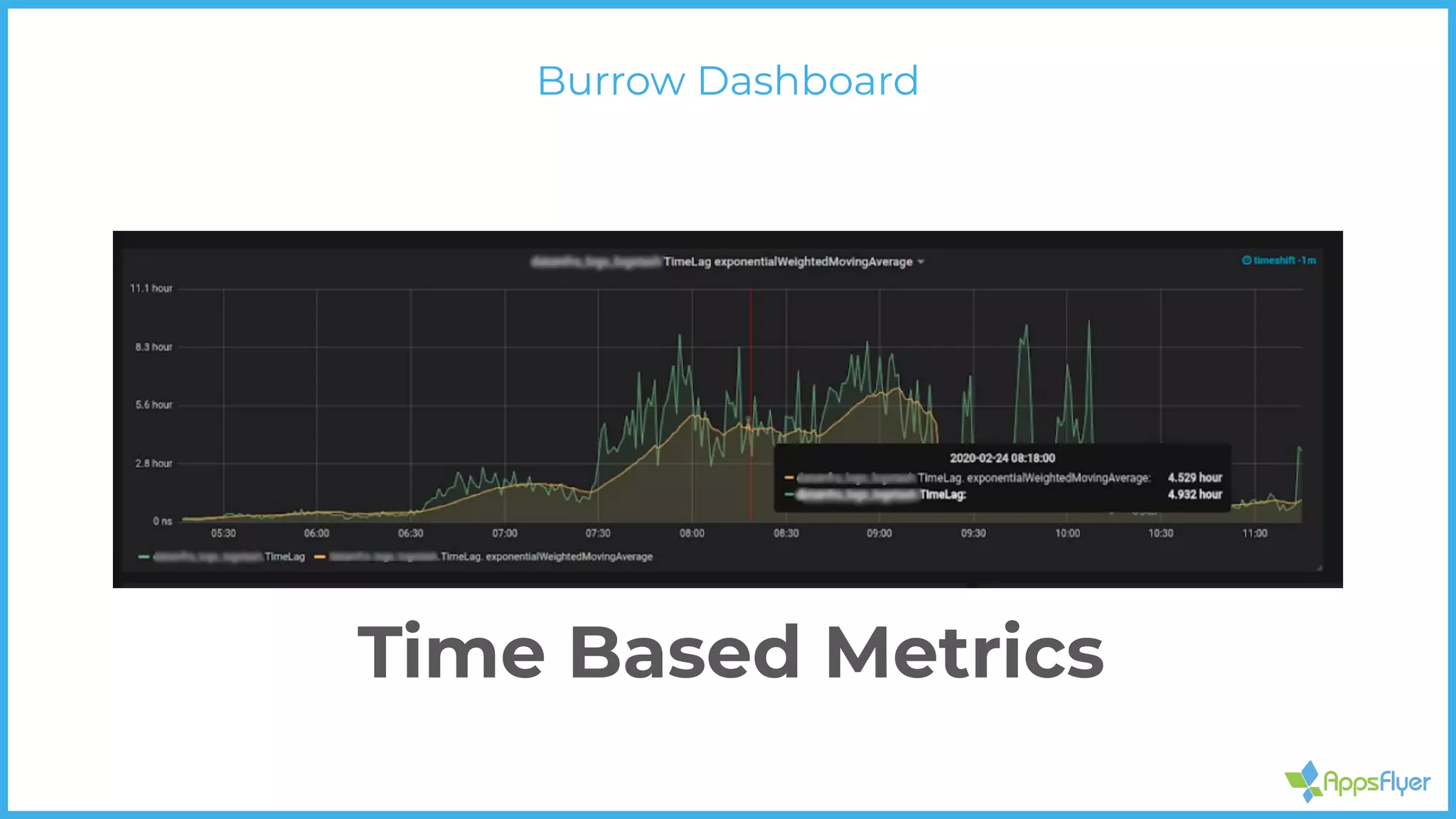

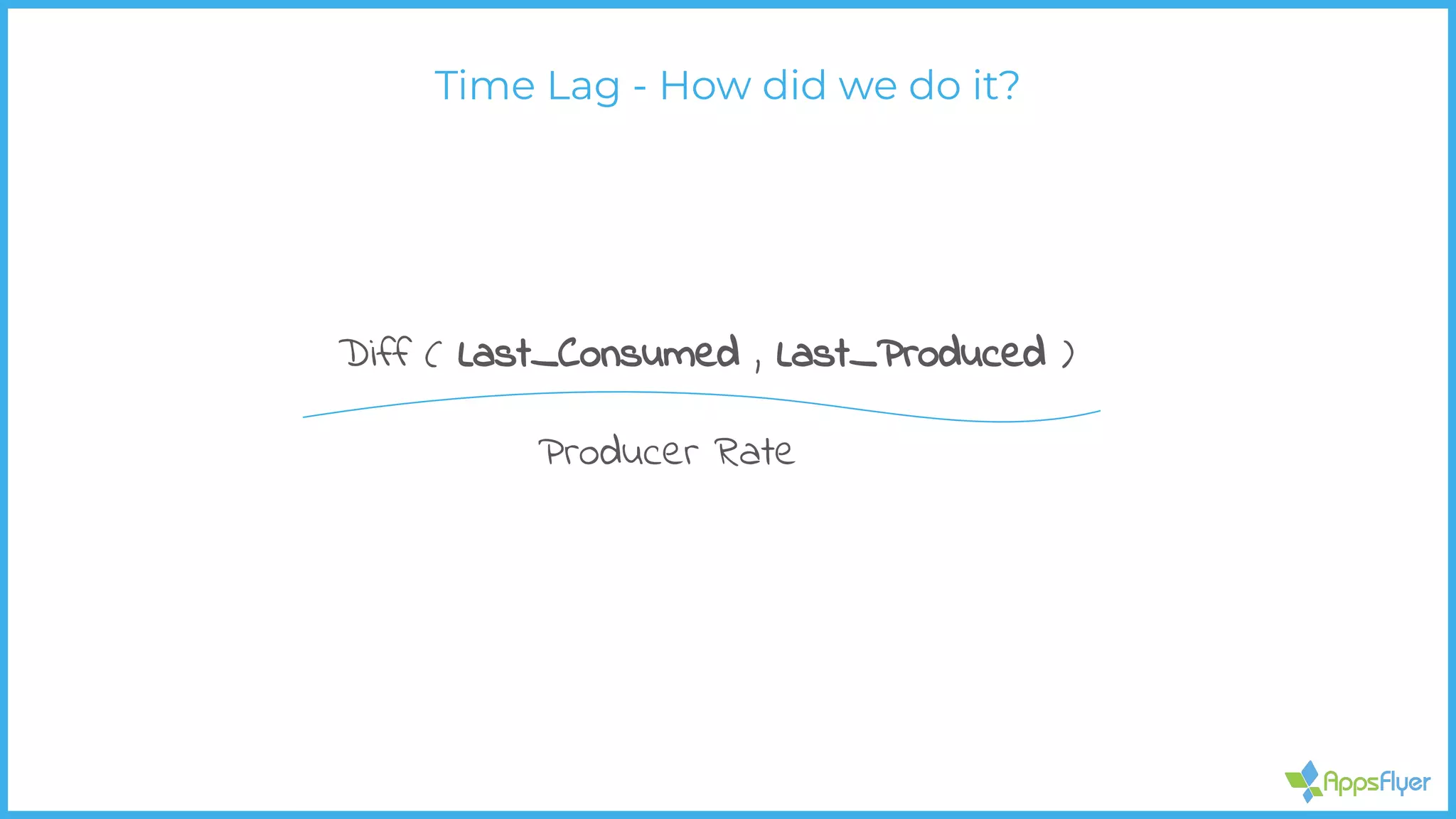

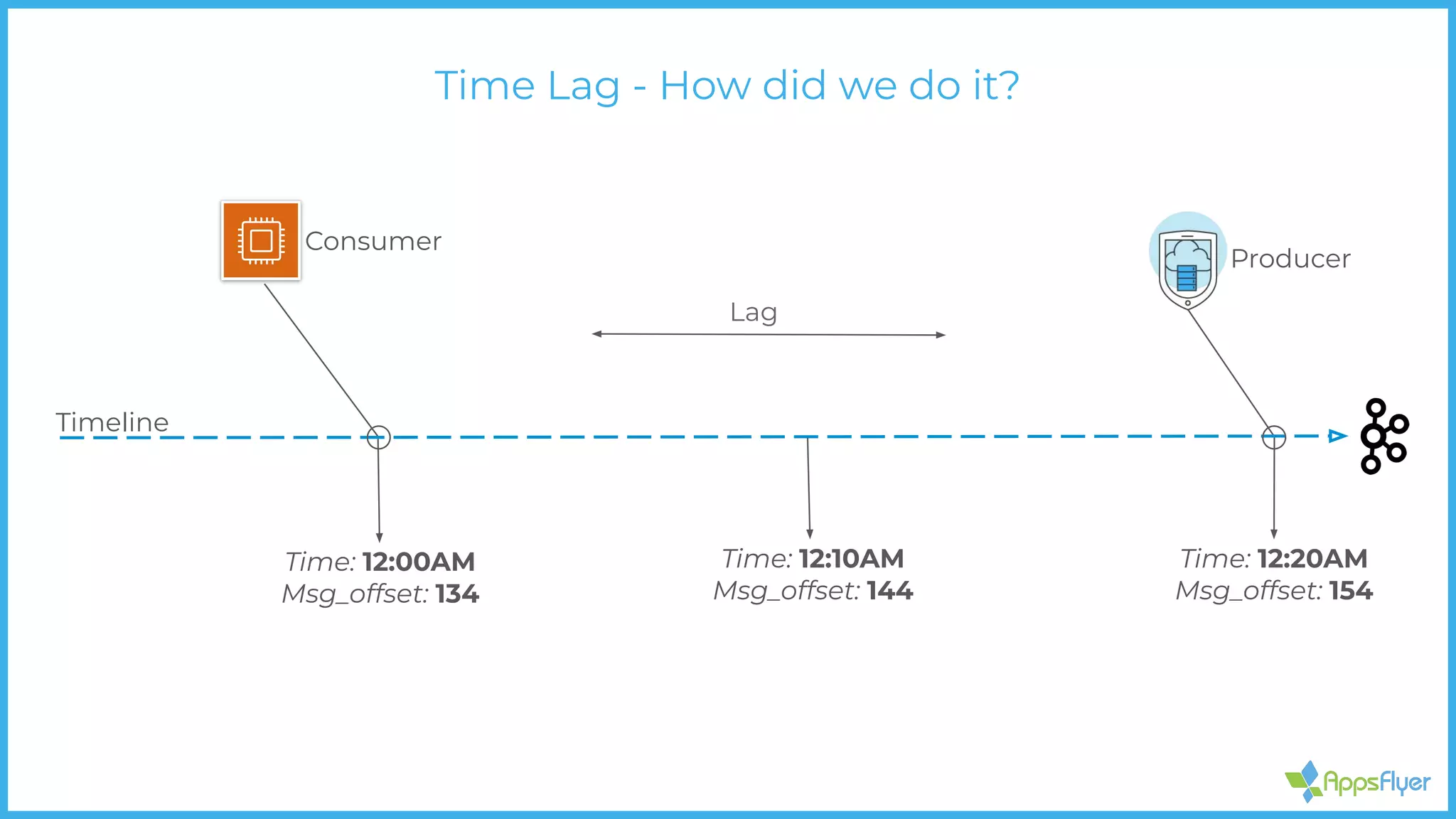

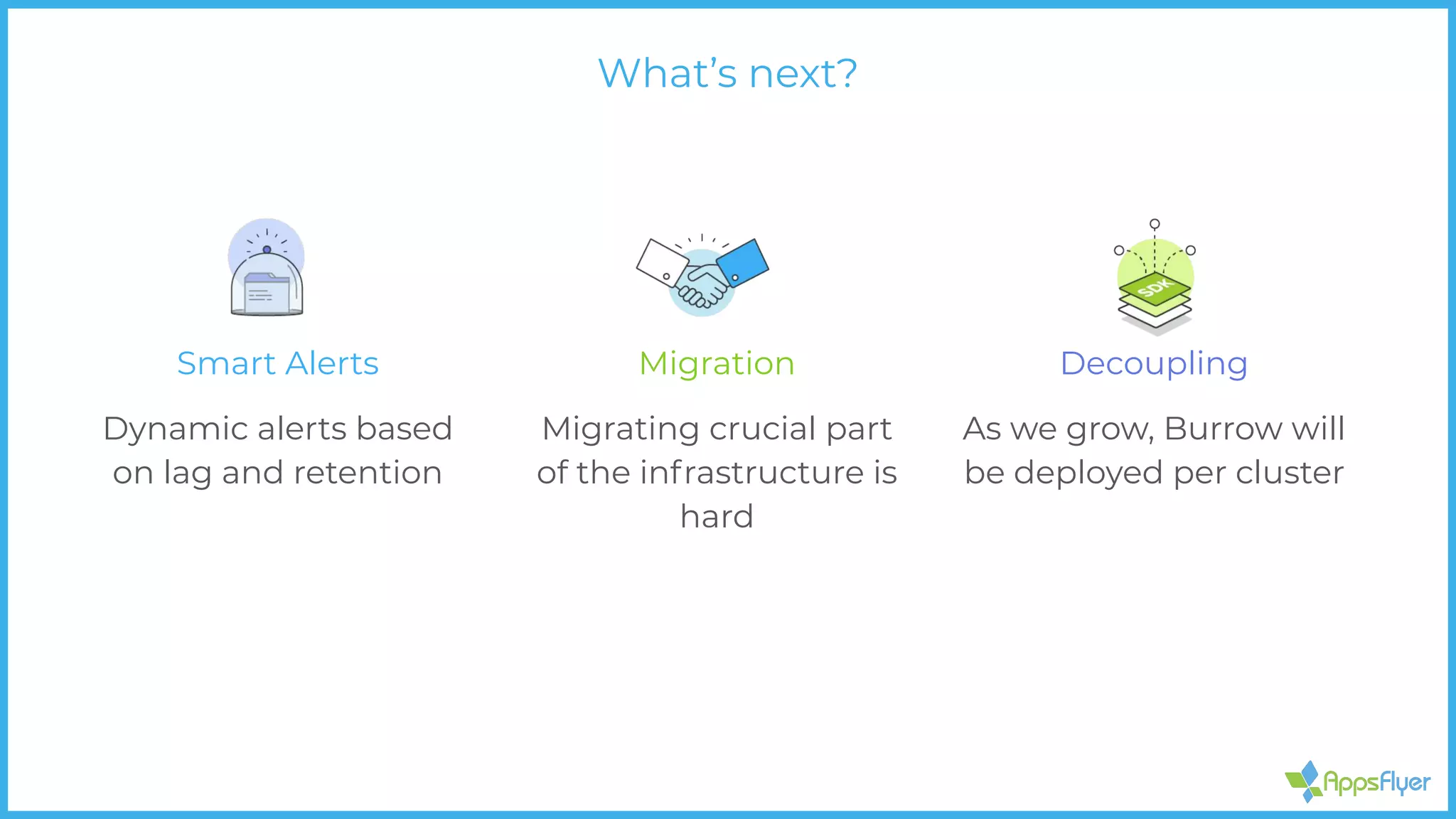

The document discusses Kafka consumer lag monitoring and argues that effective monitoring is needed because handling high volumes of data is complex. It introduces Burrow, a monitoring tool for Apache Kafka that provides consumer lag checking and has a modular design. The document describes the challenges encountered with previous monitoring methods and outlines next steps, including smart alerts and dynamic monitoring as the systems scale.
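To make "consumer lag" concrete, here is a minimal sketch of how lag is commonly computed: the difference between a partition's latest (log end) offset and the consumer group's committed offset. This is an illustration only, not Burrow's implementation. The function names, the dictionary inputs, and the treatment of a missing commit as offset 0 are all assumptions made for the example. The `lag_is_growing` helper is a simplified, hypothetical stand-in for alerting on a trend rather than on a single fixed threshold.

```python
from typing import Dict, List


def partition_lag(log_end_offset: int, committed_offset: int) -> int:
    """Lag for one partition: messages written but not yet committed as consumed."""
    # Clamp at zero: offsets sampled at slightly different times can briefly
    # make the committed offset appear ahead of the log end offset.
    return max(0, log_end_offset - committed_offset)


def total_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> int:
    """Sum of per-partition lag for one consumer group on one topic.

    Assumption: a partition with no committed offset is treated as offset 0.
    """
    return sum(
        partition_lag(end, committed.get(partition, 0))
        for partition, end in end_offsets.items()
    )


def lag_is_growing(lag_samples: List[int]) -> bool:
    """Hypothetical trend check over a window of lag samples (oldest first).

    Returns True when lag never decreased within the window and ended
    higher than it started.
    """
    if len(lag_samples) < 2:
        return False
    non_decreasing = all(b >= a for a, b in zip(lag_samples, lag_samples[1:]))
    return non_decreasing and lag_samples[-1] > lag_samples[0]


if __name__ == "__main__":
    end_offsets = {0: 100, 1: 250}   # partition -> log end offset
    committed = {0: 90, 1: 250}      # partition -> committed offset
    print(total_lag(end_offsets, committed))
    print(lag_is_growing([5, 8, 8, 12]))
```

A trend check like this tolerates a consumer that is behind but catching up, which a single fixed threshold would flag.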