This document gives an overview of AV Foundation, the framework for working with time-based media on iOS and Mac OS X. It covers key components such as AVAsset, AVPlayer, and the AVCapture classes, with practical examples of working with sample buffers, video tracks, and subtitle data. It also includes demos of creating an AVAssetWriter and manipulating pixel buffers for video processing.

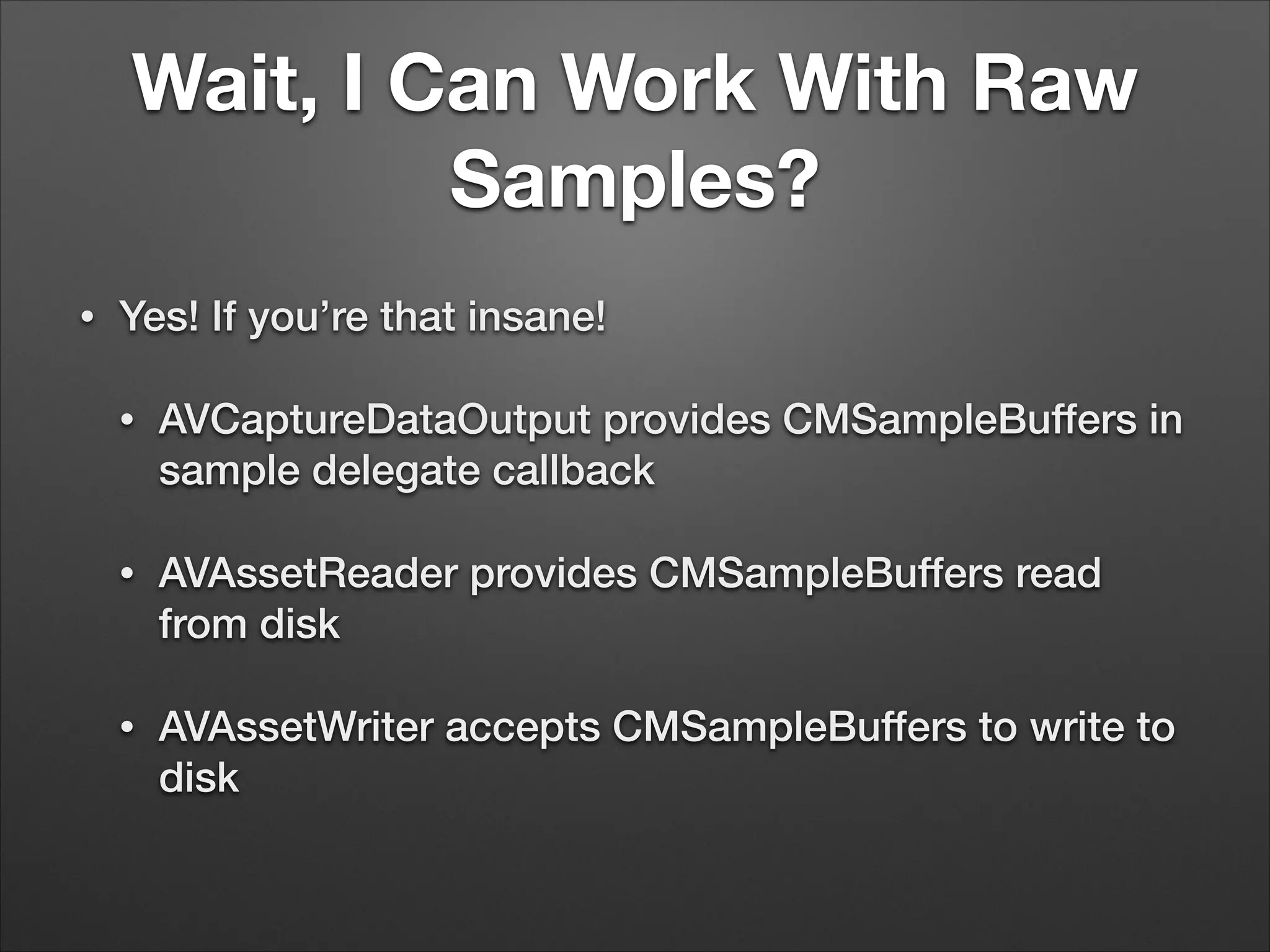

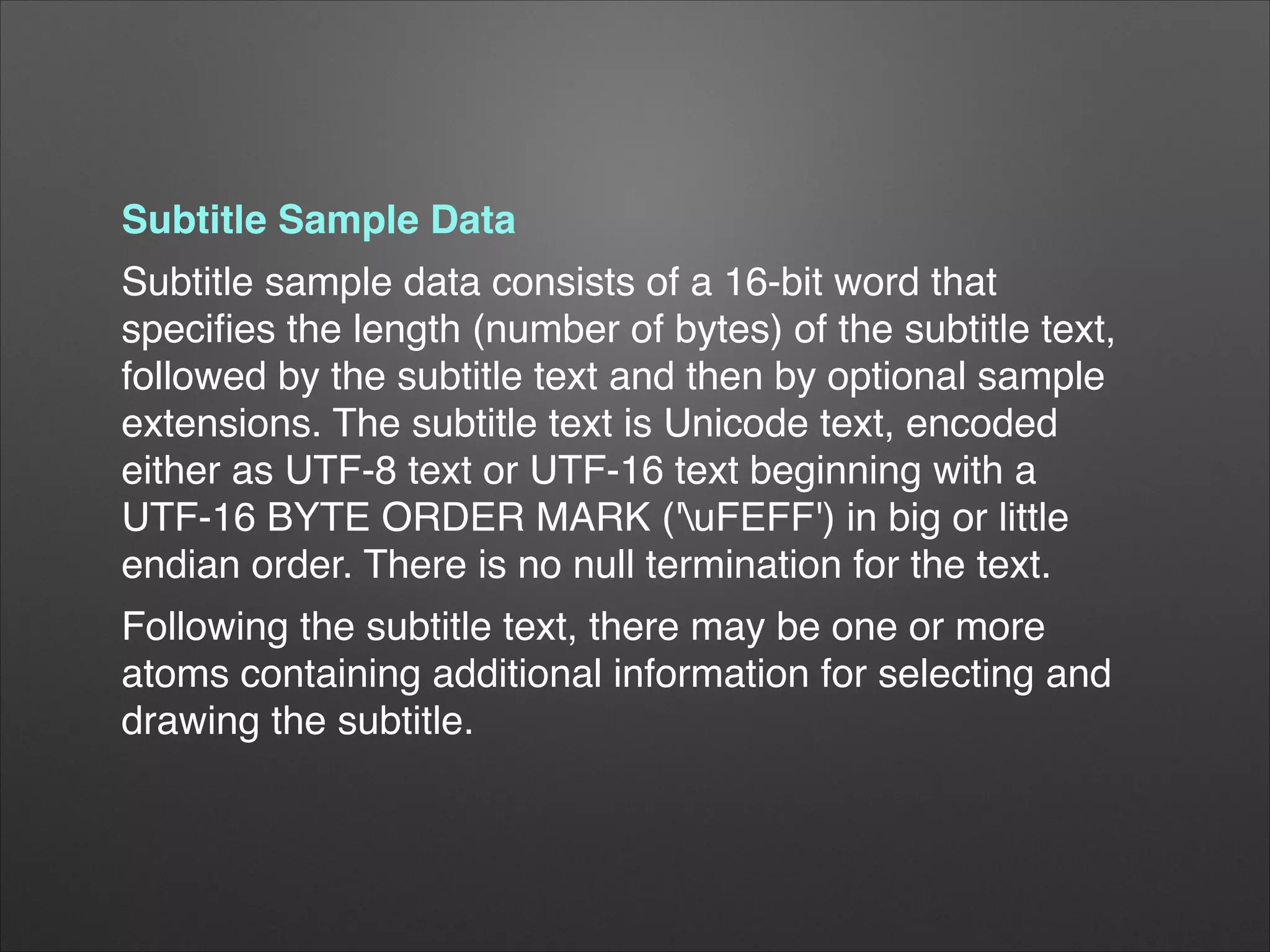

![Creating the AVAssetWriter

self.assetWriter = [[AVAssetWriter alloc] initWithURL:movieURL

fileType: AVFileTypeQuickTimeMovie

error: &movieError];

NSDictionary *assetWriterInputSettings = [NSDictionary dictionaryWithObjectsAndKeys:

AVVideoCodecH264, AVVideoCodecKey,

[NSNumber numberWithInt:FRAME_WIDTH], AVVideoWidthKey,

[NSNumber numberWithInt:FRAME_HEIGHT], AVVideoHeightKey,

nil];

self.assetWriterInput = [AVAssetWriterInput assetWriterInputWithMediaType: AVMediaTypeVideo

outputSettings:assetWriterInputSettings];

self.assetWriterInput.expectsMediaDataInRealTime = YES;

[self.assetWriter addInput:self.assetWriterInput];

self.assetWriterPixelBufferAdaptor = [[AVAssetWriterInputPixelBufferAdaptor alloc]

initWithAssetWriterInput:self.assetWriterInput

sourcePixelBufferAttributes:nil];

[self.assetWriter startWriting];


self.firstFrameWallClockTime = CFAbsoluteTimeGetCurrent();

[self.assetWriter startSessionAtSourceTime: CMTimeMake(0, TIME_SCALE)];](https://image.slidesharecdn.com/stupid-video-tricks-140310154442-phpapp01/75/Stupid-Video-Tricks-19-2048.jpg)

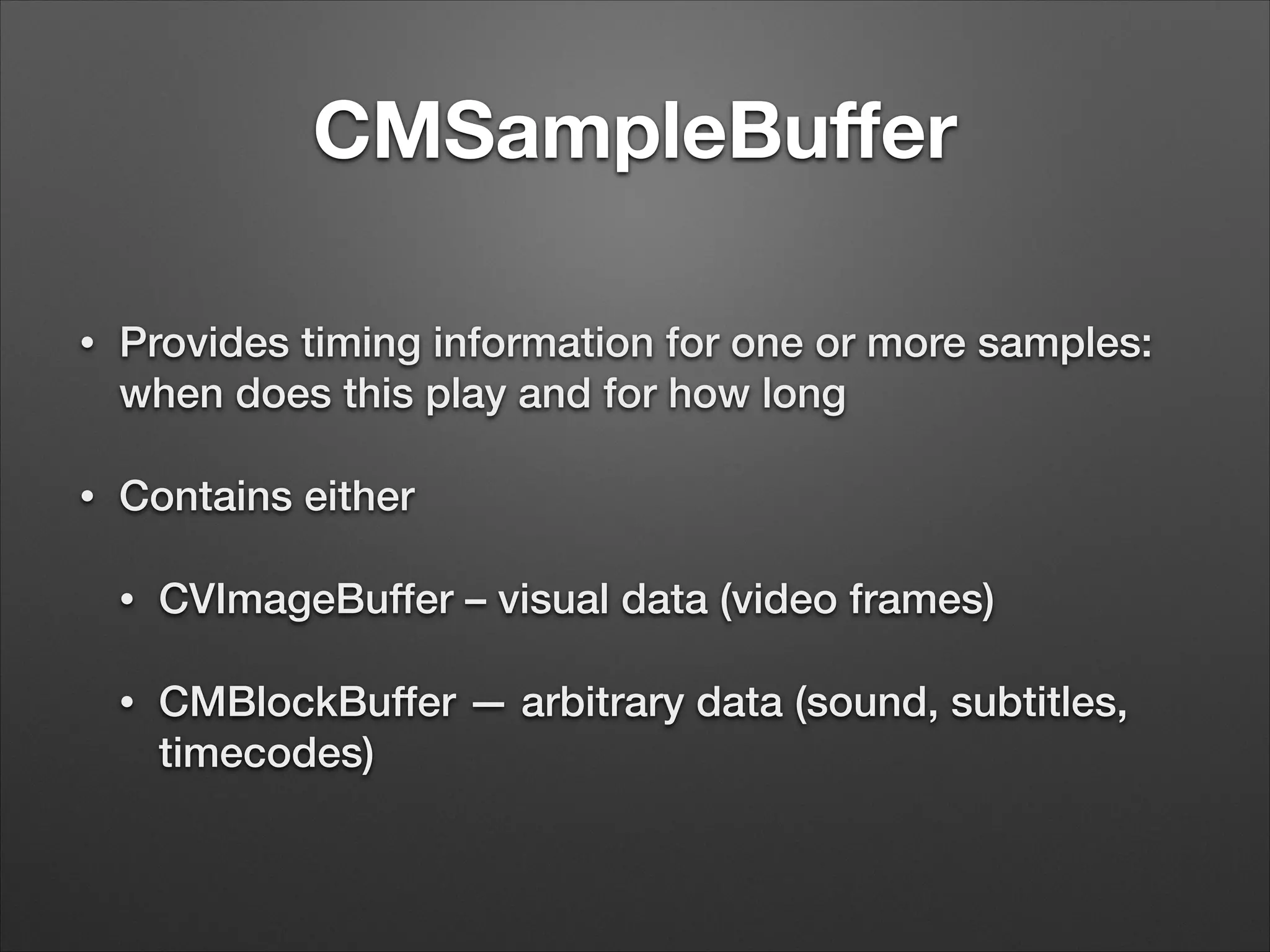

![Write CMSampleBuffer w/ time

// calculate the time

CFAbsoluteTime thisFrameWallClockTime = CFAbsoluteTimeGetCurrent();

CFTimeInterval elapsedTime = thisFrameWallClockTime - self.firstFrameWallClockTime;

CMTime presentationTime = CMTimeMake (elapsedTime * TIME_SCALE, TIME_SCALE);

// write the sample

BOOL appended = [self.assetWriterPixelBufferAdaptor appendPixelBuffer:pixelBuffer

withPresentationTime:presentationTime];](https://image.slidesharecdn.com/stupid-video-tricks-140310154442-phpapp01/75/Stupid-Video-Tricks-21-2048.jpg)
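
The timestamp arithmetic on this slide can be sketched in plain C. This is only an illustration under stated assumptions: `SketchTime`, `sketch_time_from_seconds`, and `sketch_time_seconds` are hypothetical stand-ins that mirror the value/timescale pair of a `CMTime`, not Core Media API, and elapsed time is assumed to be non-negative. The sketch rounds to the nearest tick, whereas passing `elapsedTime * TIME_SCALE` straight to `CMTimeMake` relies on an implicit floating-point-to-integer conversion that truncates.

```c
#include <stdint.h>

/* Hypothetical stand-in for a CMTime-like rational time (not Core Media API). */
typedef struct {
    int64_t value;      /* number of ticks */
    int32_t timescale;  /* ticks per second */
} SketchTime;

/* Convert elapsed seconds to ticks, rounding to the nearest tick.
   Assumes seconds >= 0, which holds for wall-clock time elapsed
   since the first frame. */
static SketchTime sketch_time_from_seconds(double seconds, int32_t timescale) {
    SketchTime t;
    t.value = (int64_t)(seconds * (double)timescale + 0.5);
    t.timescale = timescale;
    return t;
}

/* Convert back to seconds, for example when logging a timestamp. */
static double sketch_time_seconds(SketchTime t) {
    return (double)t.value / (double)t.timescale;
}
```

With a timescale of 600, a frame captured 1.5 seconds after the first one would get a value of 900 ticks.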

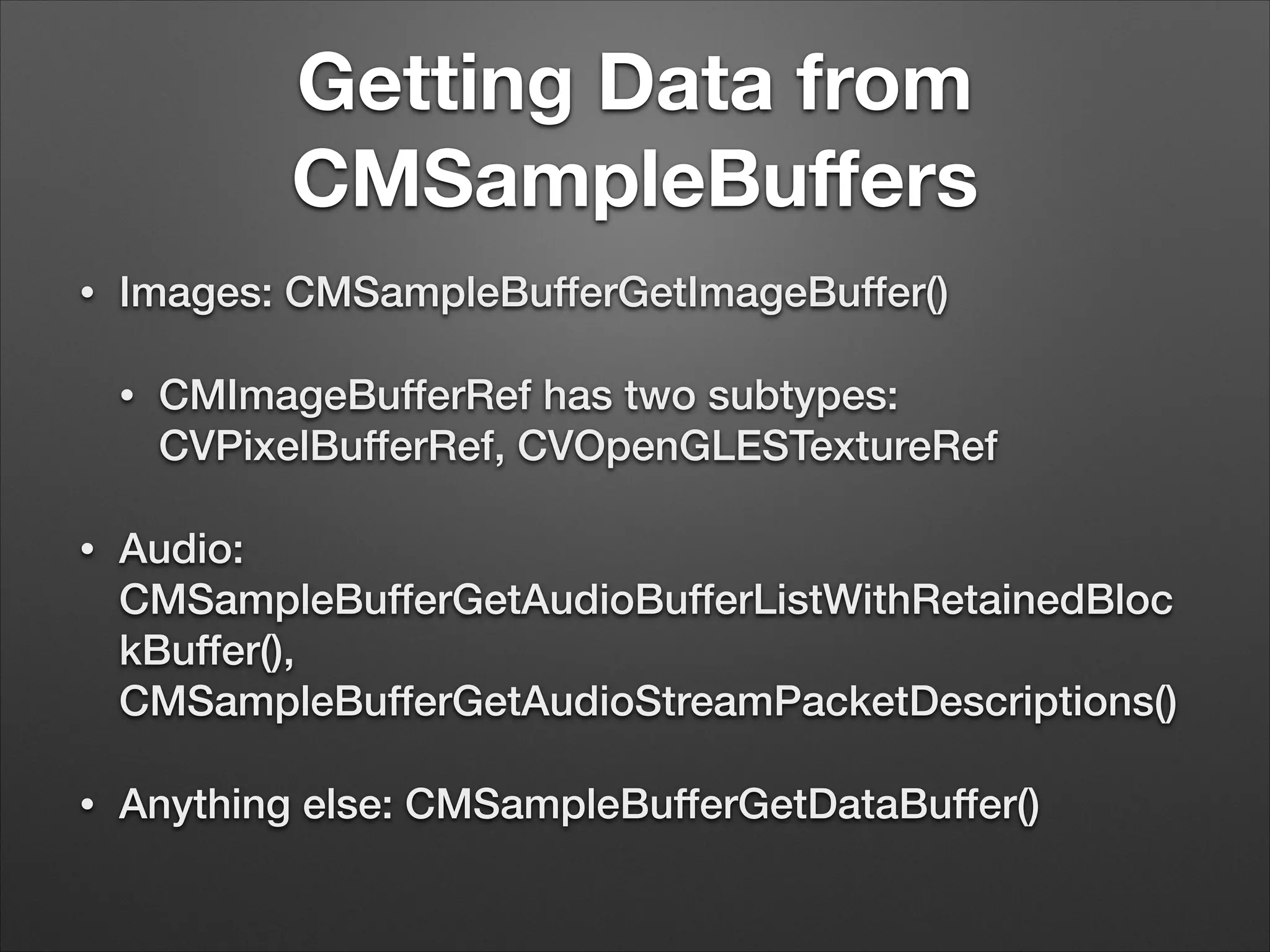

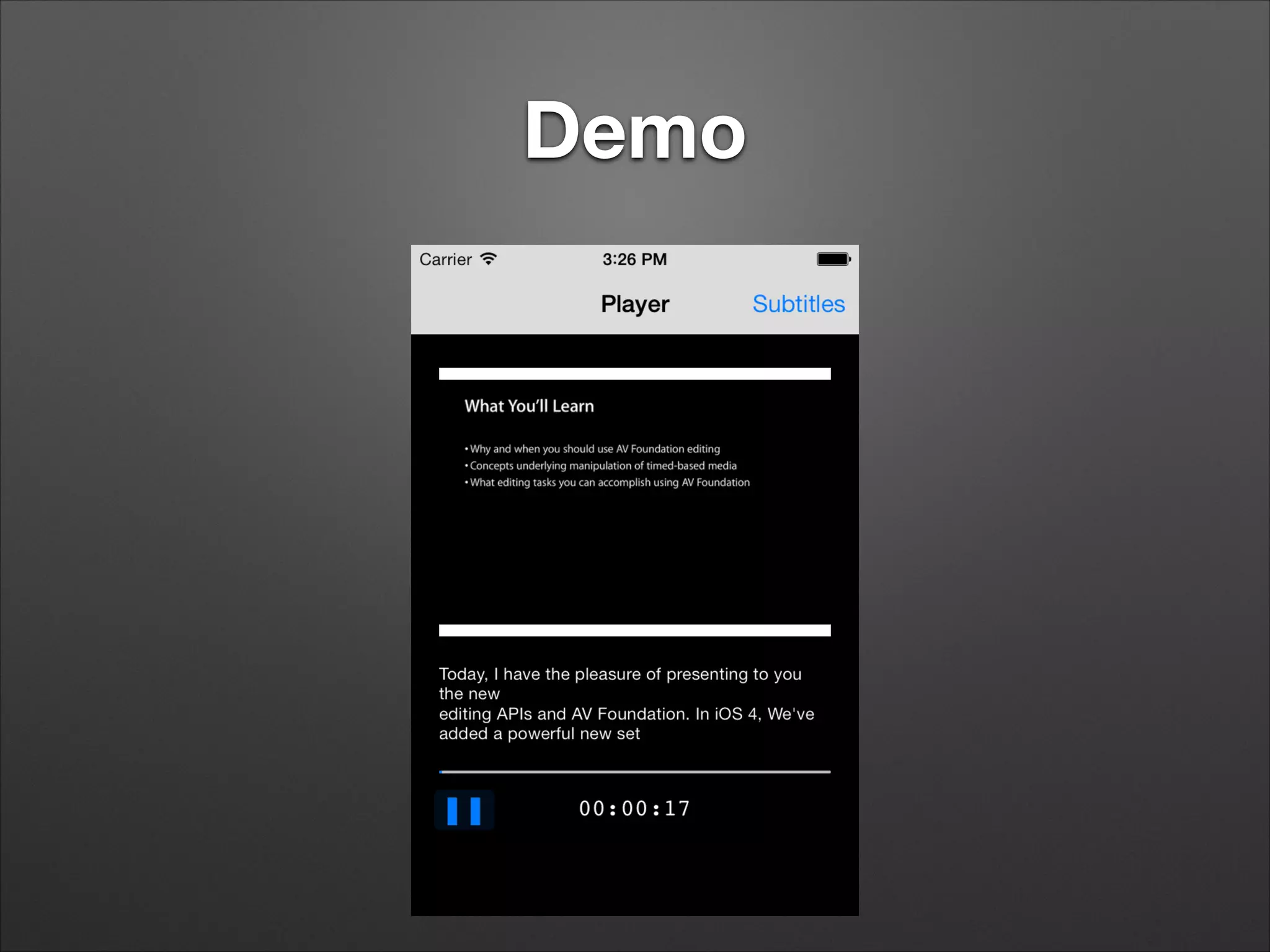

![I Iz In Ur Subtitle Track…

AVAssetReaderTrackOutput *subtitleTrackOutput =

[AVAssetReaderTrackOutput assetReaderTrackOutputWithTrack:subtitleTracks[0]

outputSettings:nil];


// ...

while (reading) {

CMSampleBufferRef sampleBuffer = [subtitleTrackOutput copyNextSampleBuffer];

if (sampleBuffer == NULL) {

AVAssetReaderStatus status = subtitleReader.status;

if ((status == AVAssetReaderStatusCompleted) ||

(status == AVAssetReaderStatusFailed) ||

(status == AVAssetReaderStatusCancelled)) {

reading = NO;

NSLog (@"ending with reader status %ld", (long)status);

}

} else {

CMTime presentationTime = CMSampleBufferGetPresentationTimeStamp(sampleBuffer) ;

CMTime duration = CMSampleBufferGetDuration(sampleBuffer);](https://image.slidesharecdn.com/stupid-video-tricks-140310154442-phpapp01/75/Stupid-Video-Tricks-28-2048.jpg)

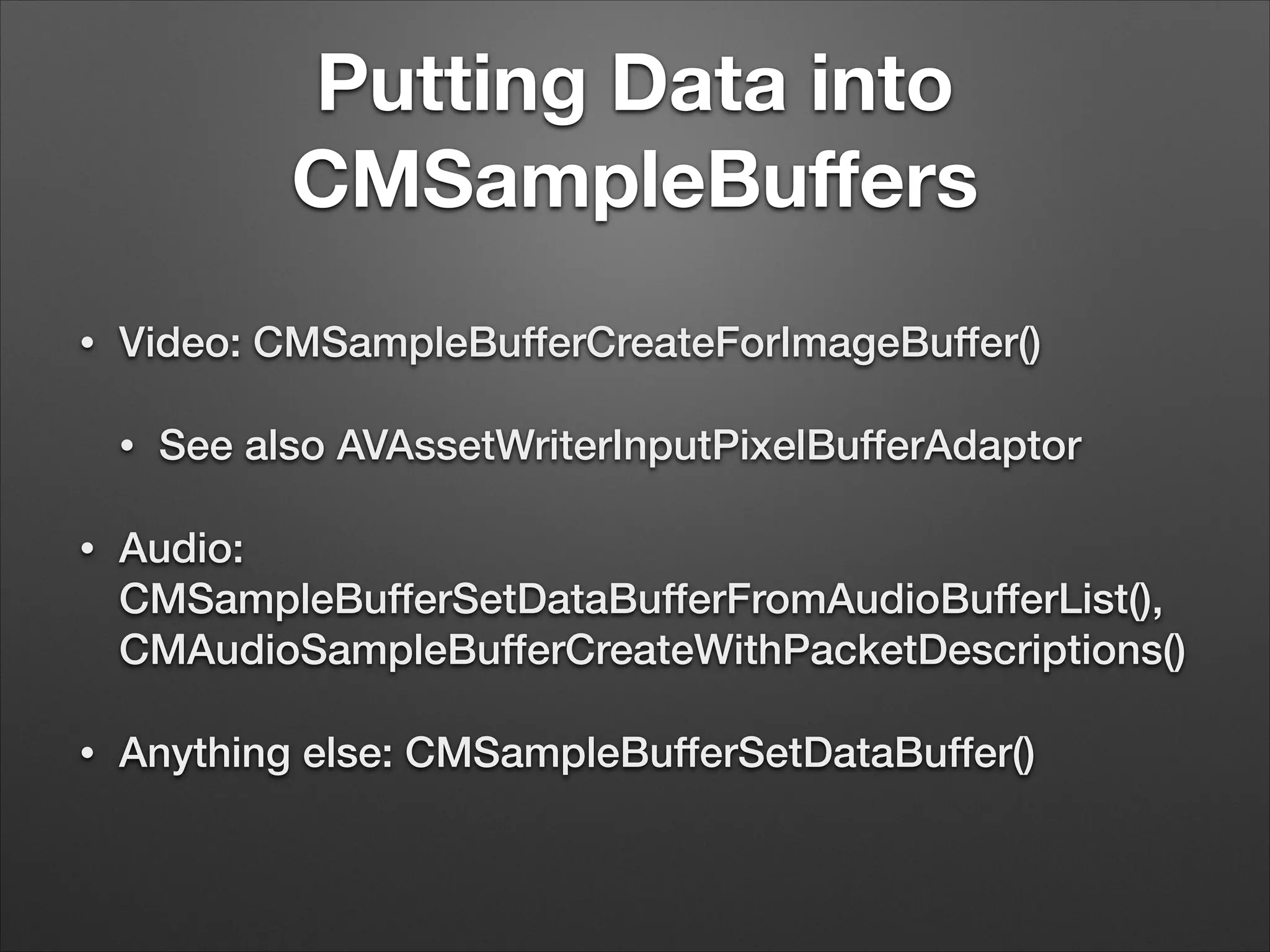

![Use & Abuse of Pixel Buffers

• Straightforward to call +[CIImage imageWithCVImageBuffer:] (OS X) or +[CIImage imageWithCVPixelBuffer:] (iOS)

• However, drawing it into a CIContext requires being

backed by a CAEAGLLayer

• So this part is going to be OS X-based for now…](https://image.slidesharecdn.com/stupid-video-tricks-140310154442-phpapp01/75/Stupid-Video-Tricks-33-2048.jpg)

![Core Image Filters

• Create by name with +[CIFilter filterWithName:]

• Several dozen built into OS X, iOS

• Set parameters with -[CIFilter setValue:forKey:]

• Keys are in Core Image Filter Reference. Input image is

kCIInputImageKey

• Make sure your filter is in category kCICategoryVideo

• Retrieve filtered image with -[filter valueForKey:

kCIOutputImageKey]](https://image.slidesharecdn.com/stupid-video-tricks-140310154442-phpapp01/75/Stupid-Video-Tricks-35-2048.jpg)

![Post-Filtering

• -[CIContext drawImage:inRect:fromRect:] with a CIContext backed by an NSBitmapImageRep

• Take these pixels and write them to a new

CVPixelBuffer (if you’re writing to disk)](https://image.slidesharecdn.com/stupid-video-tricks-140310154442-phpapp01/75/Stupid-Video-Tricks-39-2048.jpg)

![CVImageBufferRef outCVBuffer = NULL;

void* pixels = [self.filterGraphicsBitmap bitmapData];

NSDictionary *pixelBufferAttributes = @{

(id)kCVPixelBufferPixelFormatTypeKey: @(kCVPixelFormatType_32ARGB),

(id)kCVPixelBufferCGBitmapContextCompatibilityKey: @(YES),

(id)kCVPixelBufferCGImageCompatibilityKey: @(YES)

};


CVReturn err = CVPixelBufferCreateWithBytes(kCFAllocatorDefault,

self.outputSize.width,

self.outputSize.height,

kCVPixelFormatType_32ARGB,

pixels,

[self.filterGraphicsBitmap bytesPerRow],

NULL, // callback

NULL, // callback context

(__bridge CFDictionaryRef) pixelBufferAttributes,

&outCVBuffer);](https://image.slidesharecdn.com/stupid-video-tricks-140310154442-phpapp01/75/Stupid-Video-Tricks-40-2048.jpg)
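
The call above passes the bitmap's `bytesPerRow` because rows in a pixel buffer can be padded, so the row stride is not always `width * 4`. As a rough sketch in plain C (no Core Video calls; `pixel_offset`, `copy_rows`, and `BYTES_PER_PIXEL` are hypothetical names introduced for illustration), addressing and copying 32-bit ARGB pixels with an explicit stride could look like this:

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* 32-bit ARGB: one byte each for alpha, red, green, and blue. */
enum { BYTES_PER_PIXEL = 4 };

/* Byte offset of pixel (x, y) when consecutive rows start
   bytes_per_row bytes apart (the stride may include padding). */
static size_t pixel_offset(size_t x, size_t y, size_t bytes_per_row) {
    return y * bytes_per_row + x * BYTES_PER_PIXEL;
}

/* Copy a width x height image row by row between two buffers whose
   strides may differ. Only the visible pixels of each row are copied;
   padding bytes are left untouched. */
static void copy_rows(uint8_t *dst, size_t dst_bytes_per_row,
                      const uint8_t *src, size_t src_bytes_per_row,
                      size_t width, size_t height) {
    size_t row_bytes = width * BYTES_PER_PIXEL;
    for (size_t y = 0; y < height; y++) {
        memcpy(dst + y * dst_bytes_per_row,
               src + y * src_bytes_per_row,
               row_bytes);
    }
}
```

Copying row by row rather than with one large `memcpy` keeps the image intact when the source and destination use different amounts of row padding.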

![Further Thoughts

• First step to doing anything low level with AV

Foundation is to work with CMSampleBuffers

• -[AVAssetReaderOutput copyNextSampleBuffer], -[AVAssetWriterInput appendSampleBuffer:]

• -[AVCaptureVideoDataOutputSampleBufferDelegate captureOutput:didOutputSampleBuffer:fromConnection:]](https://image.slidesharecdn.com/stupid-video-tricks-140310154442-phpapp01/75/Stupid-Video-Tricks-41-2048.jpg)