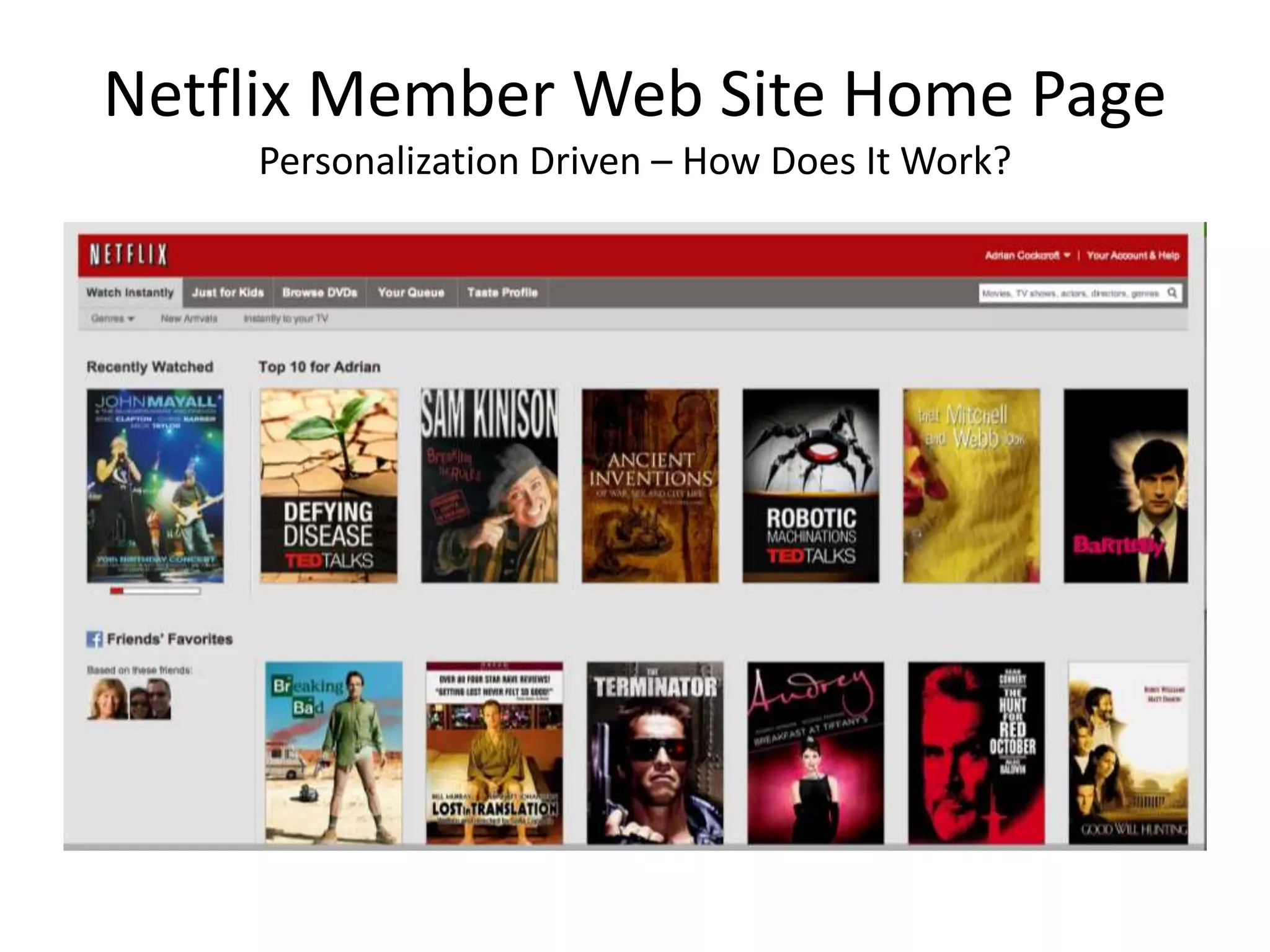

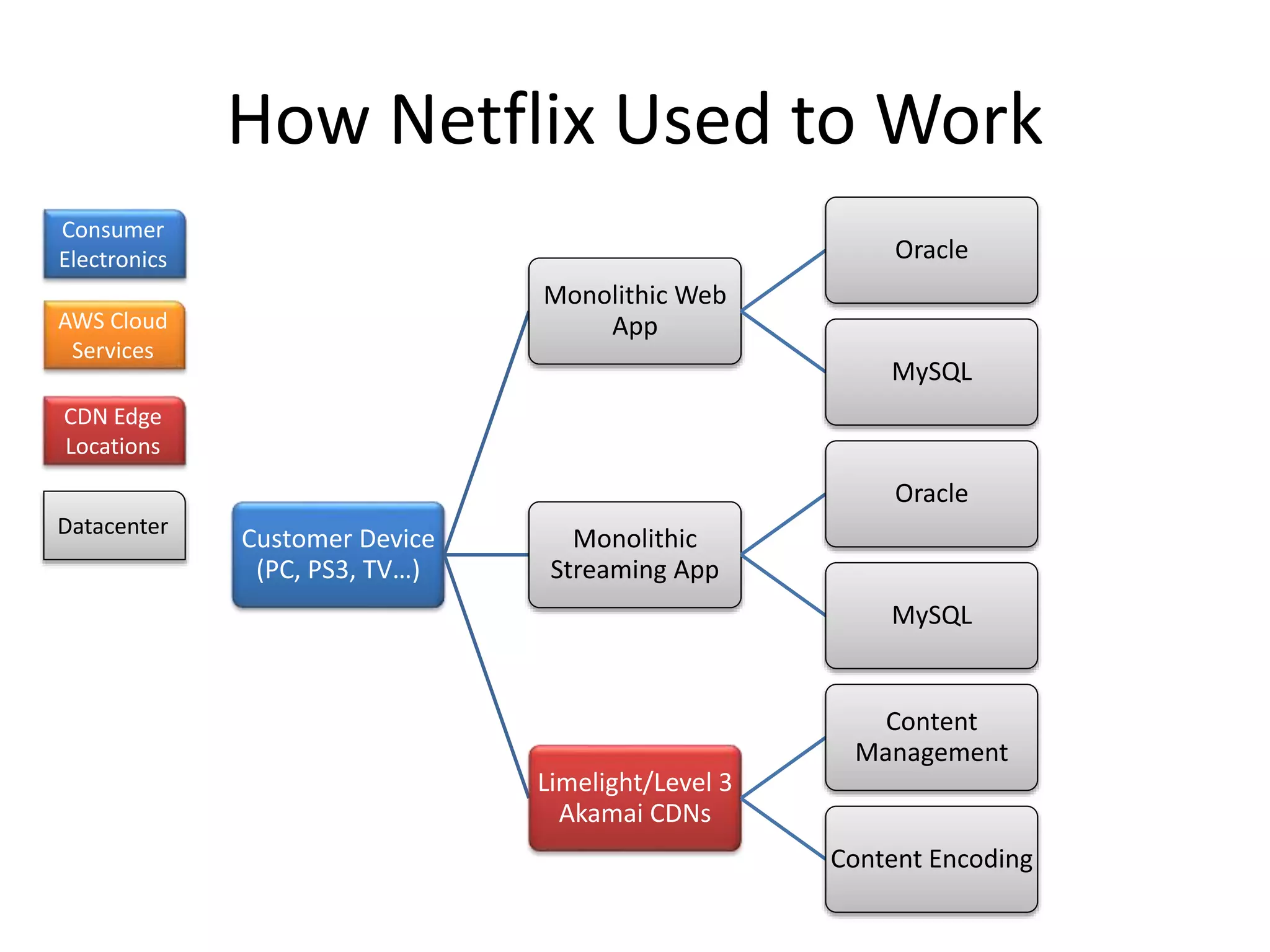

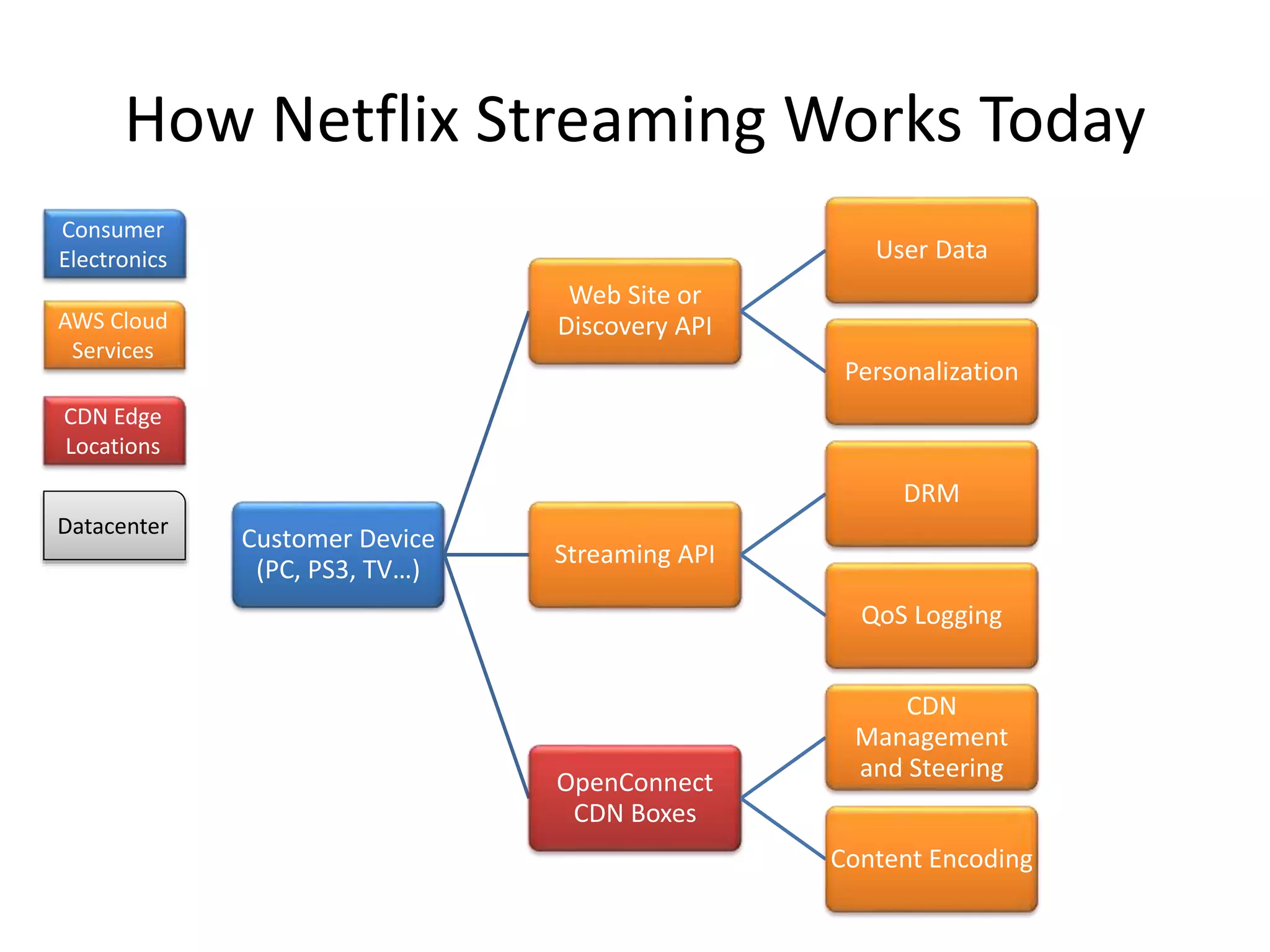

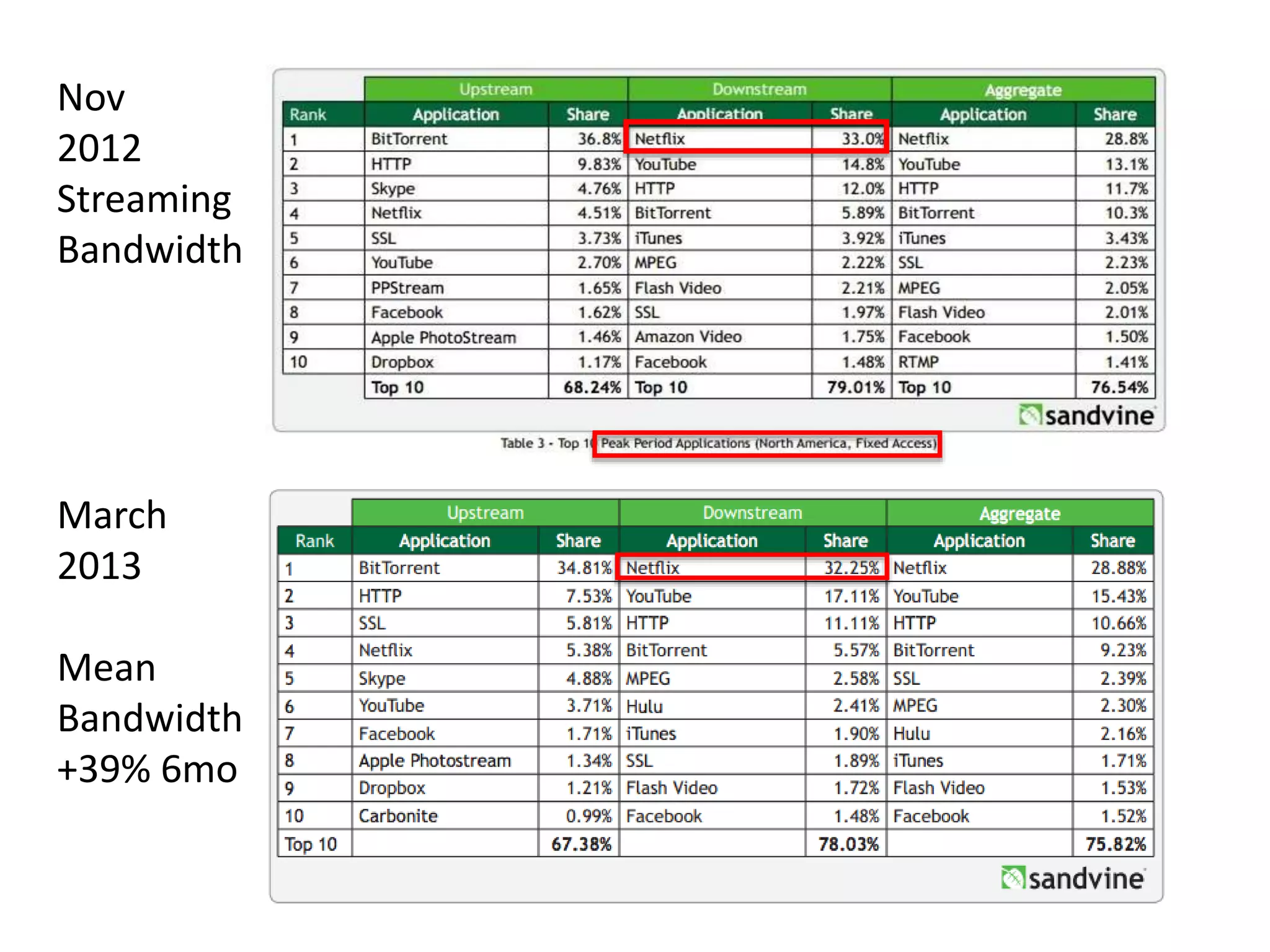

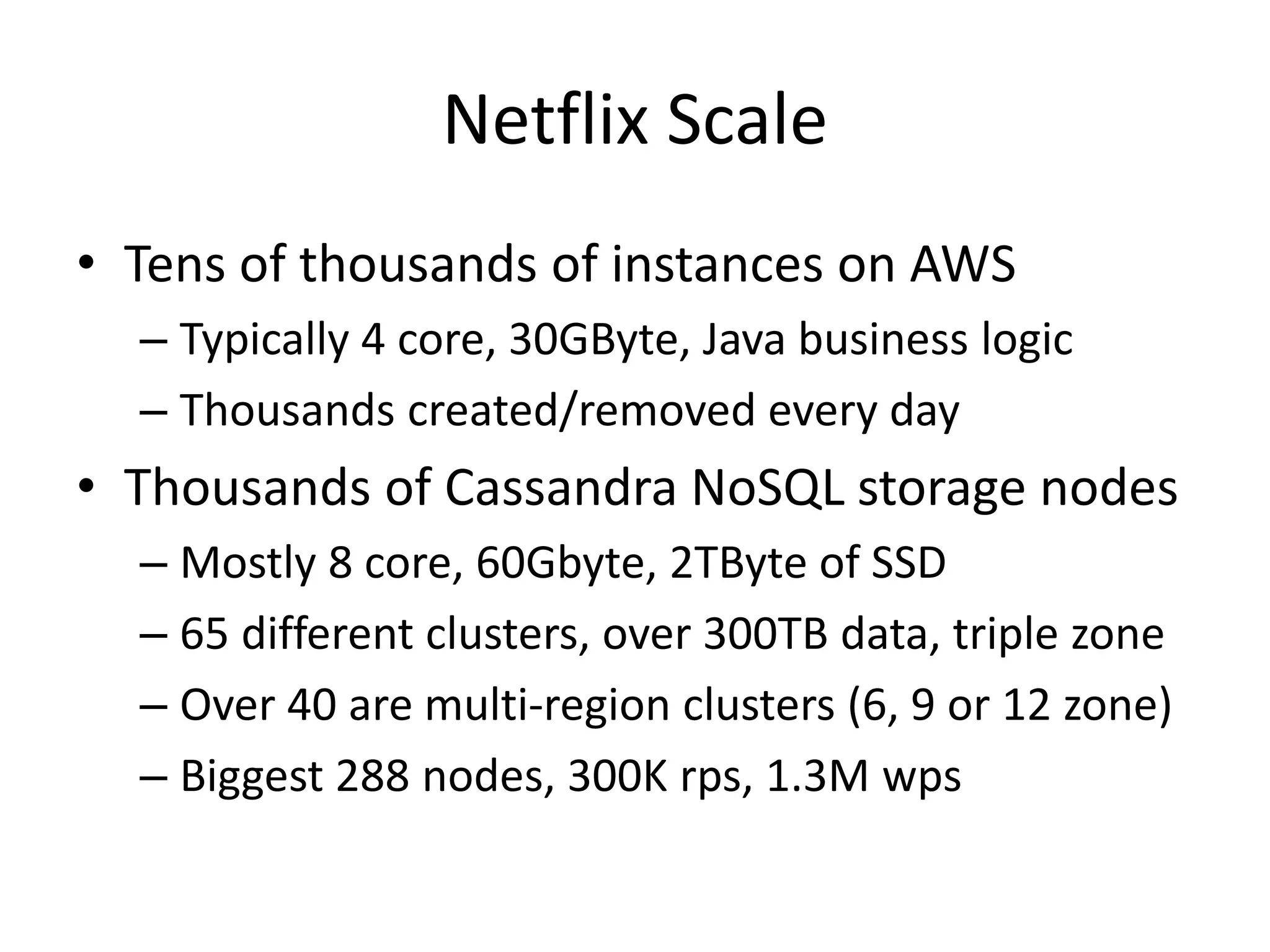

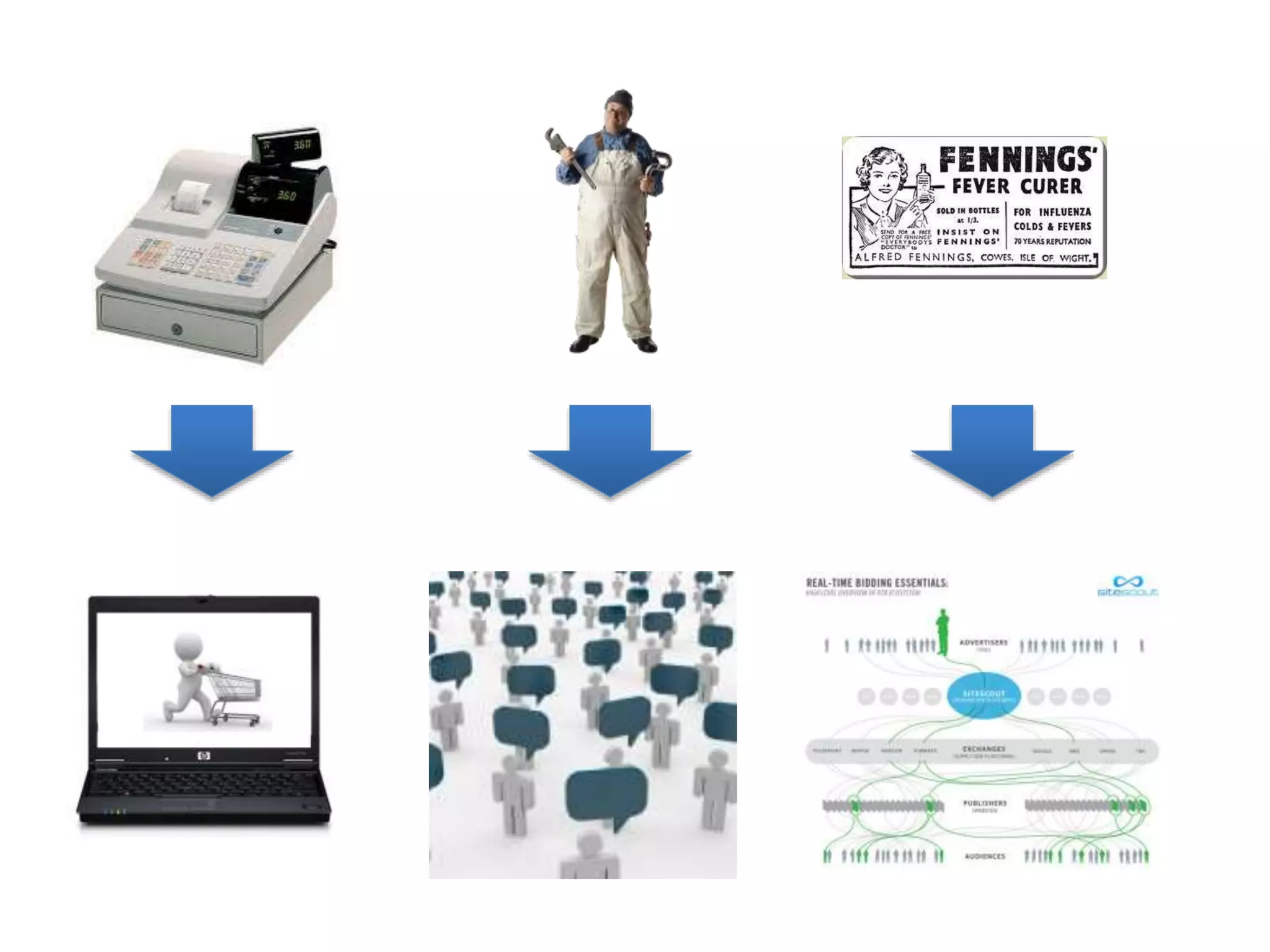

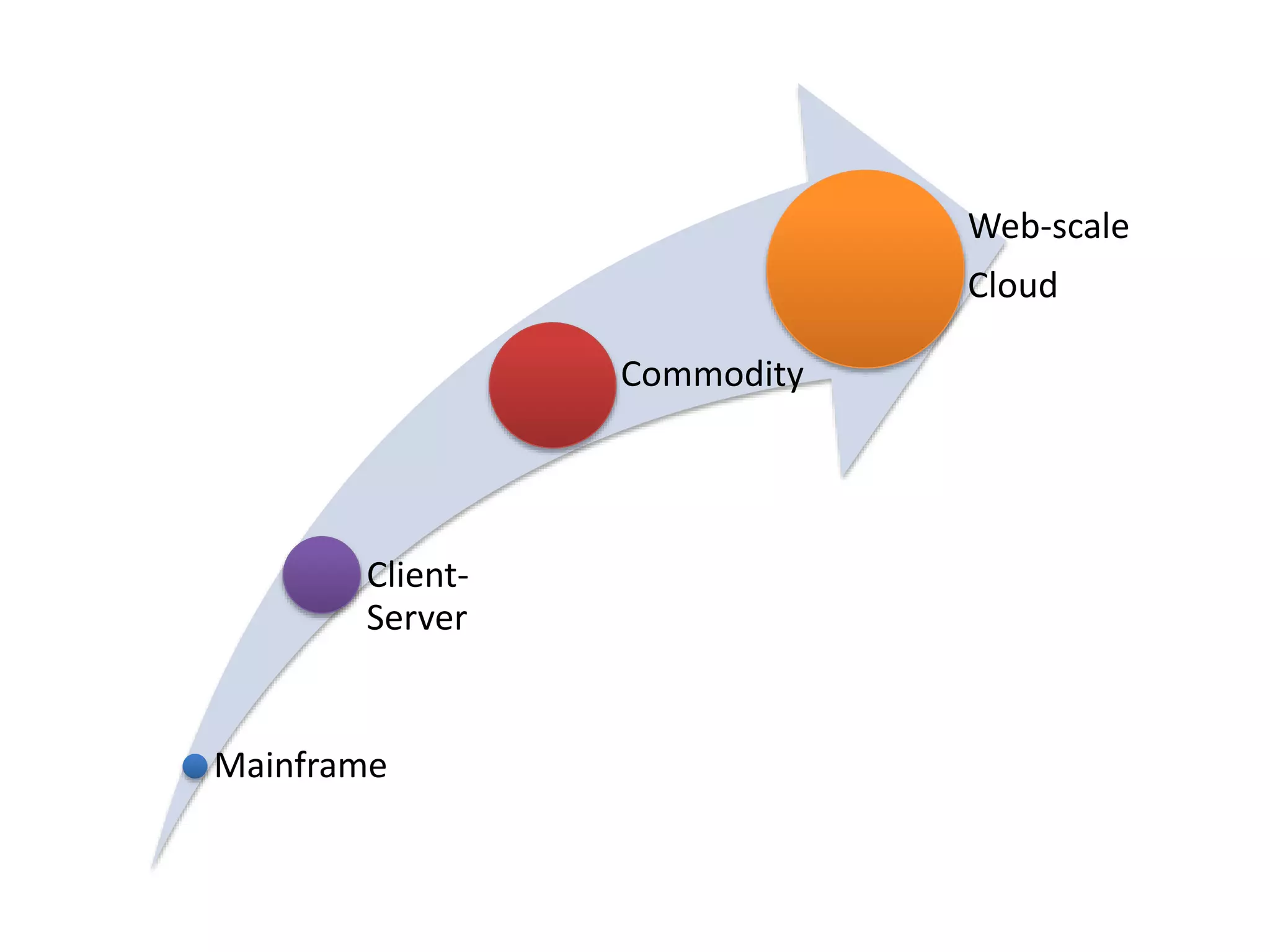

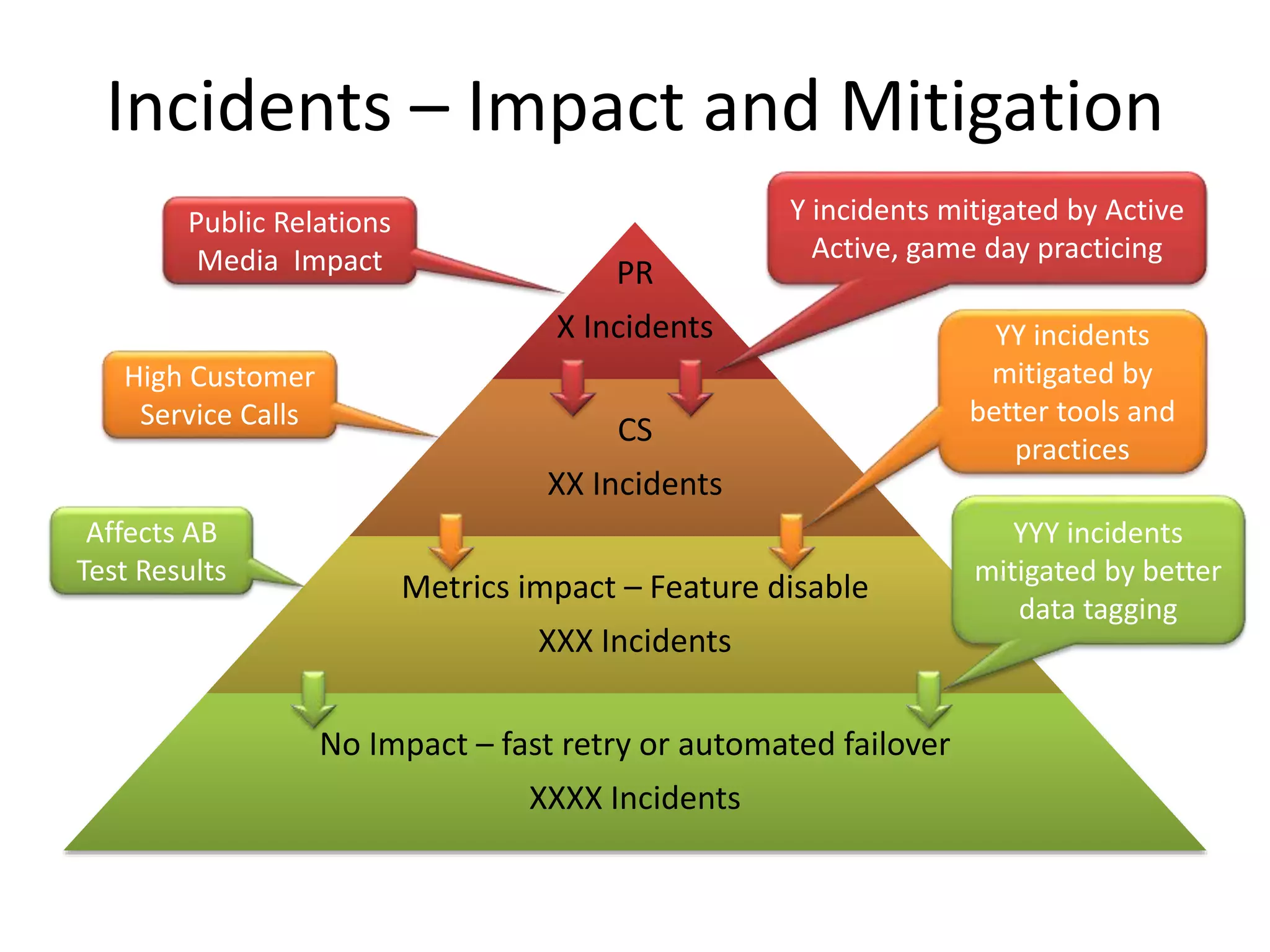

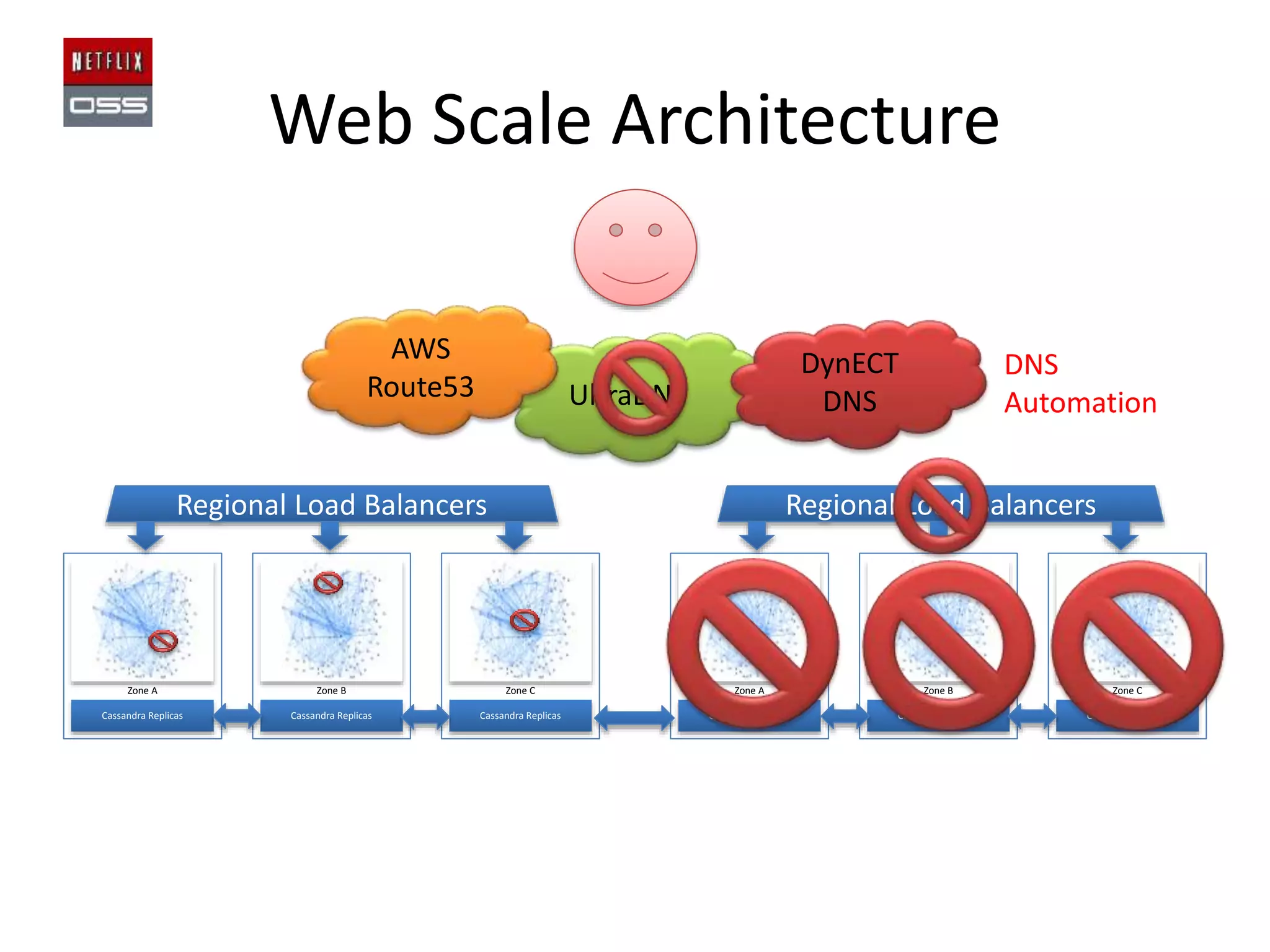

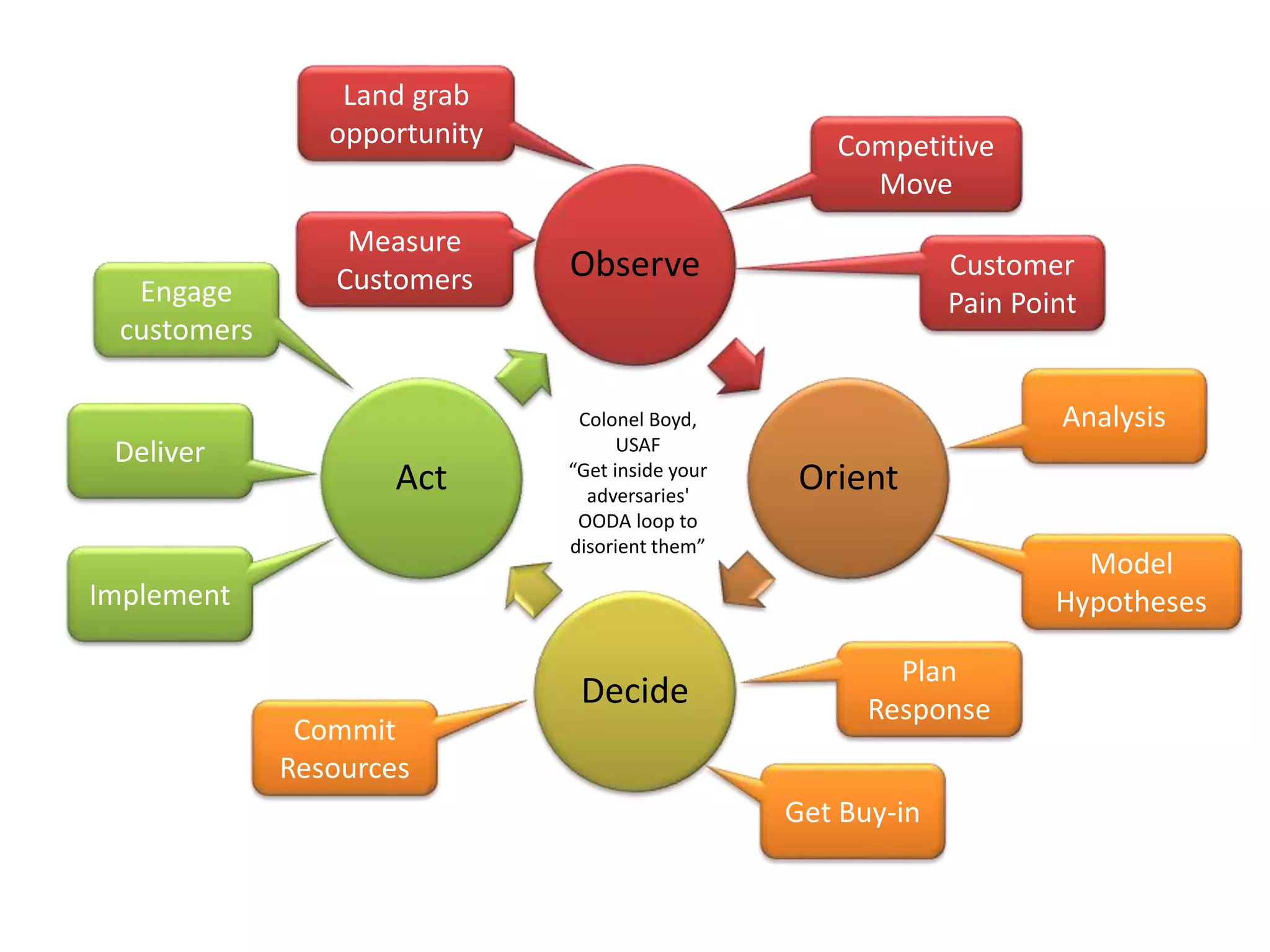

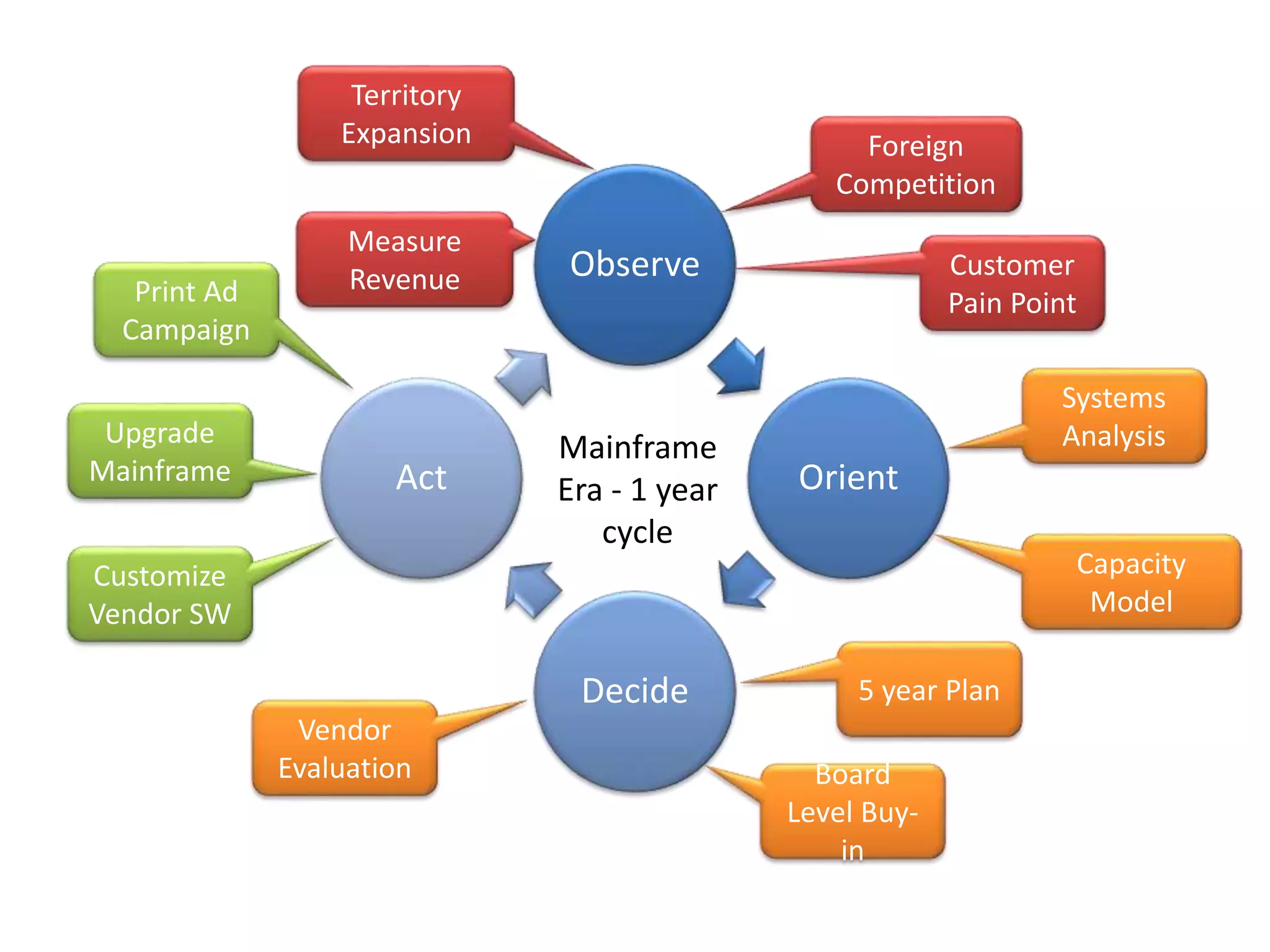

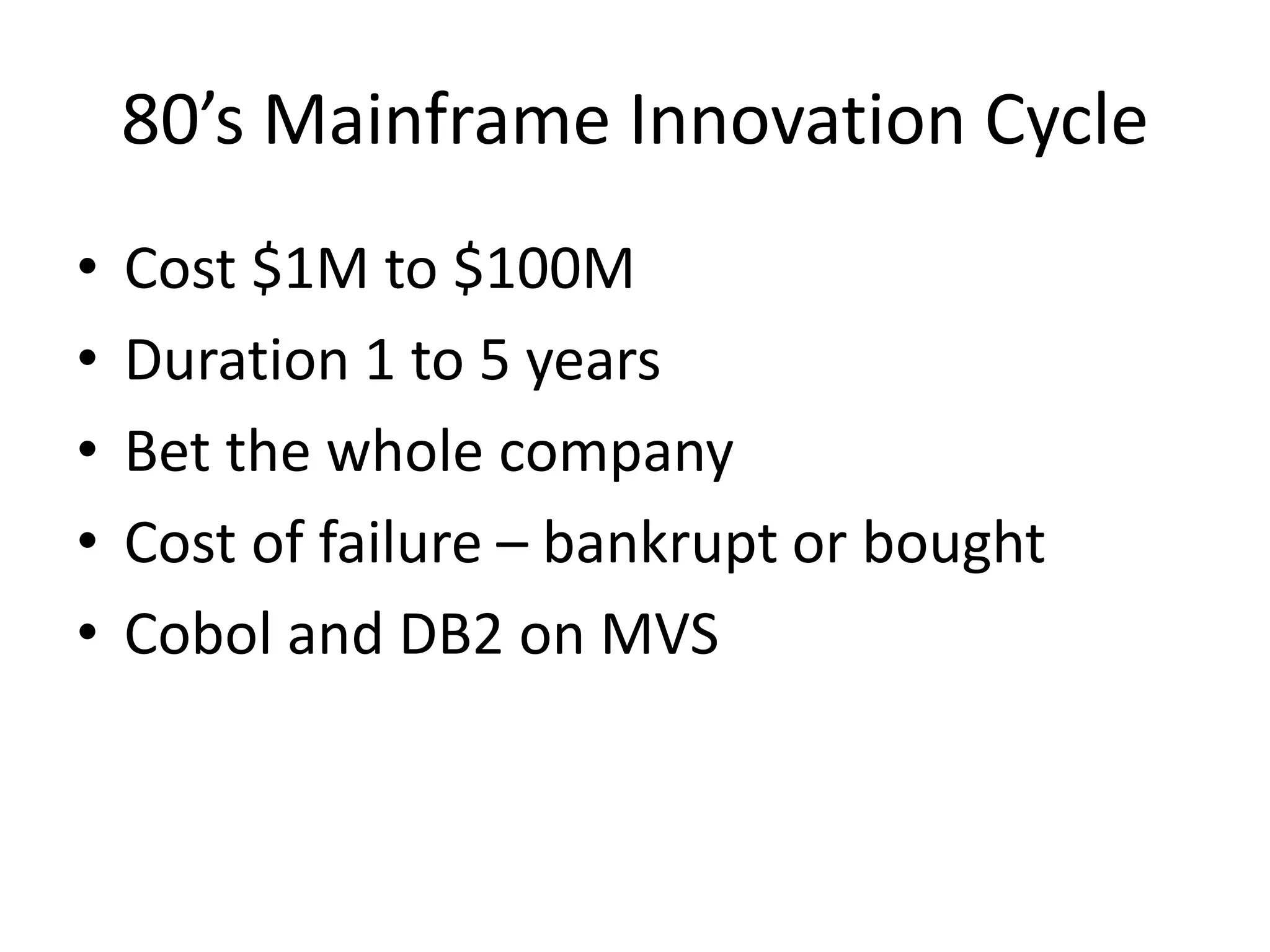

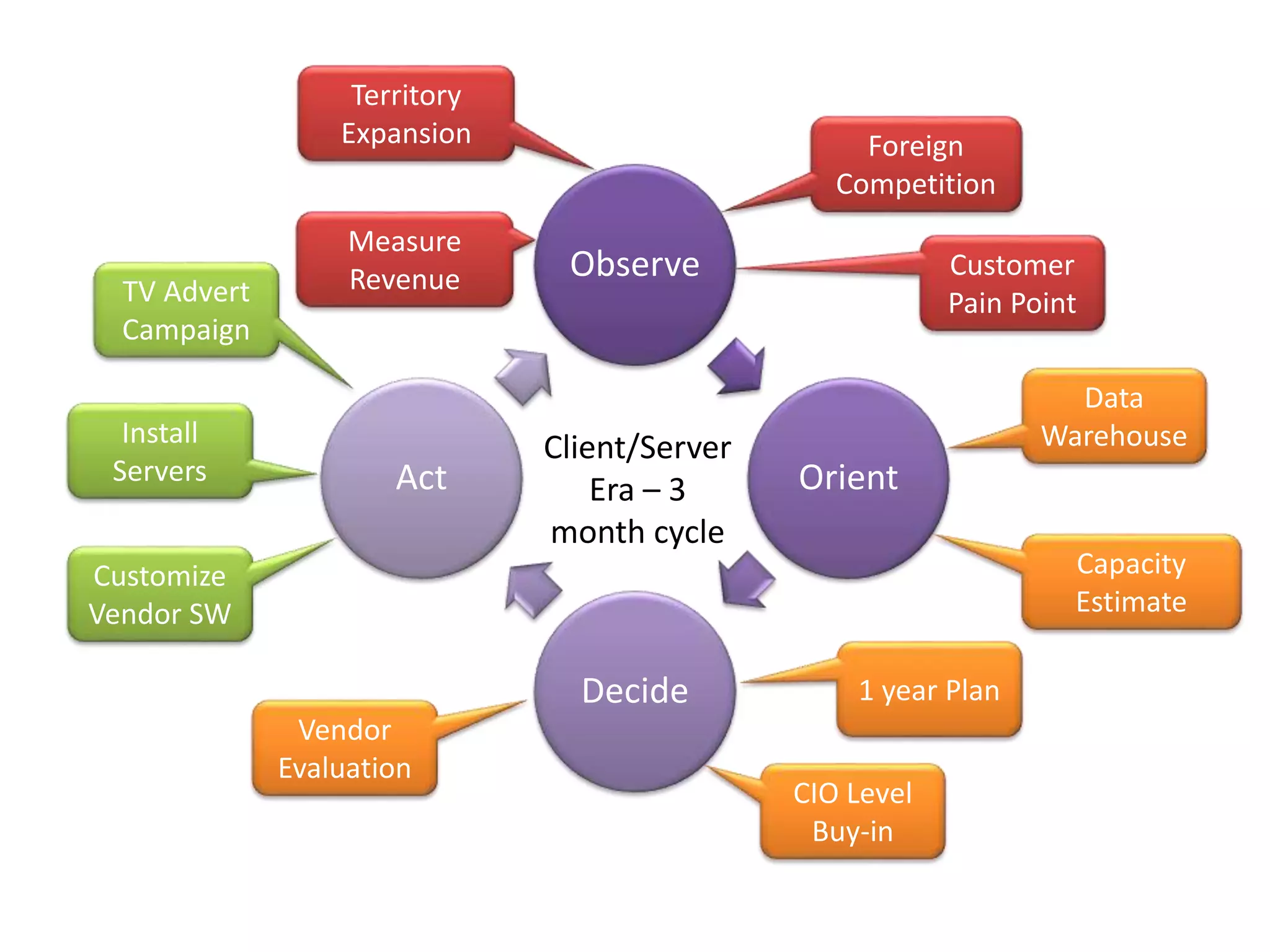

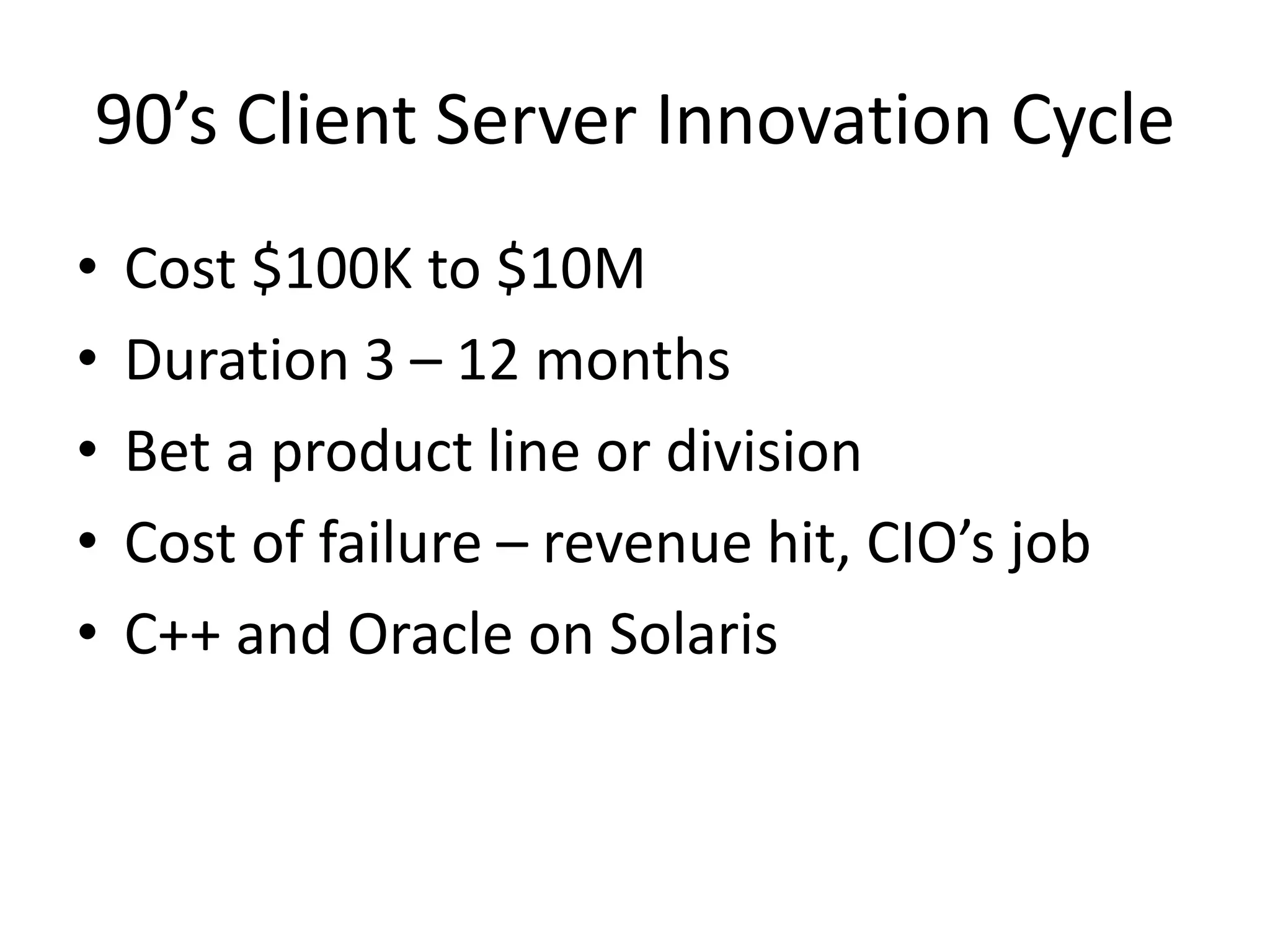

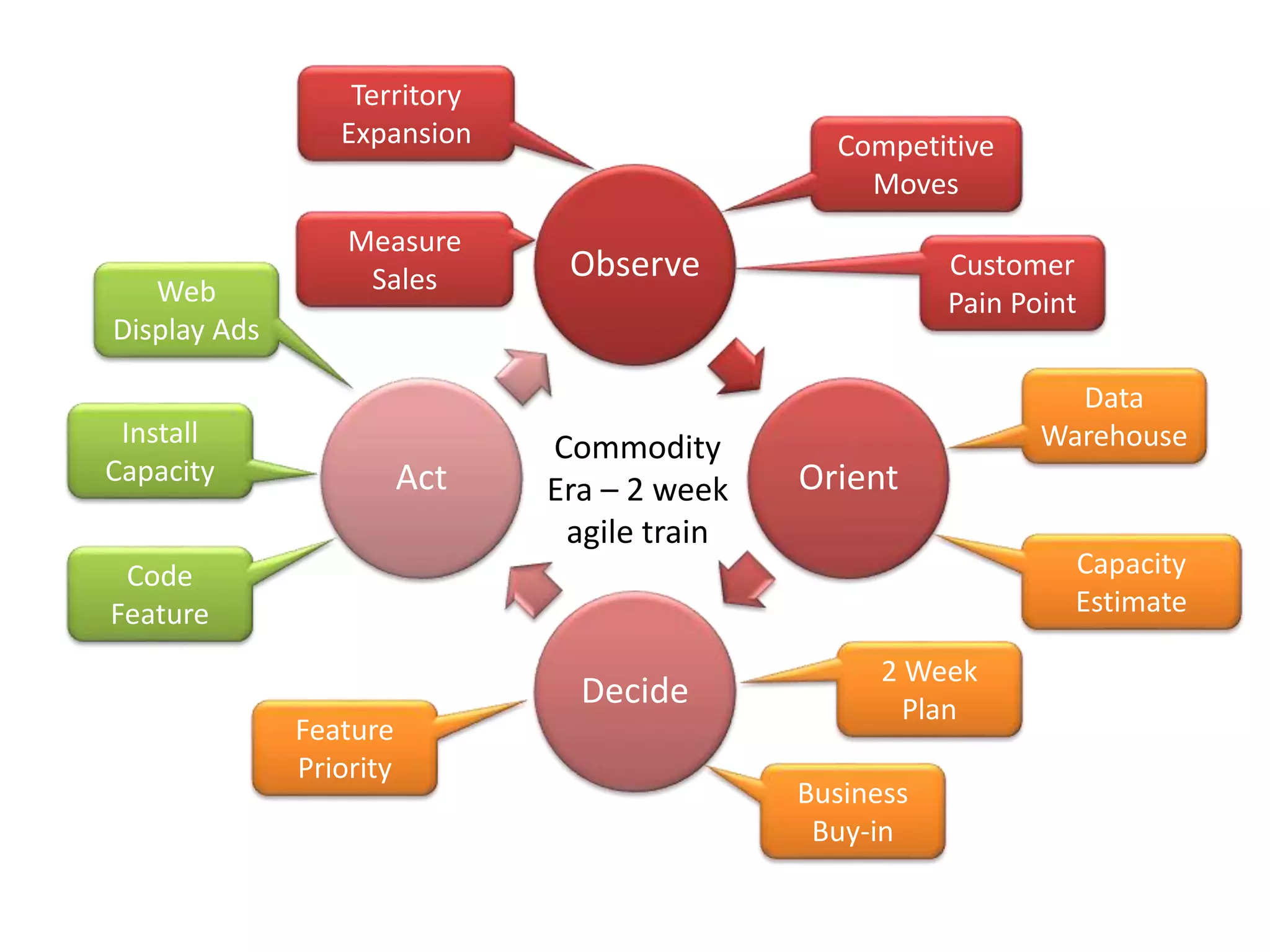

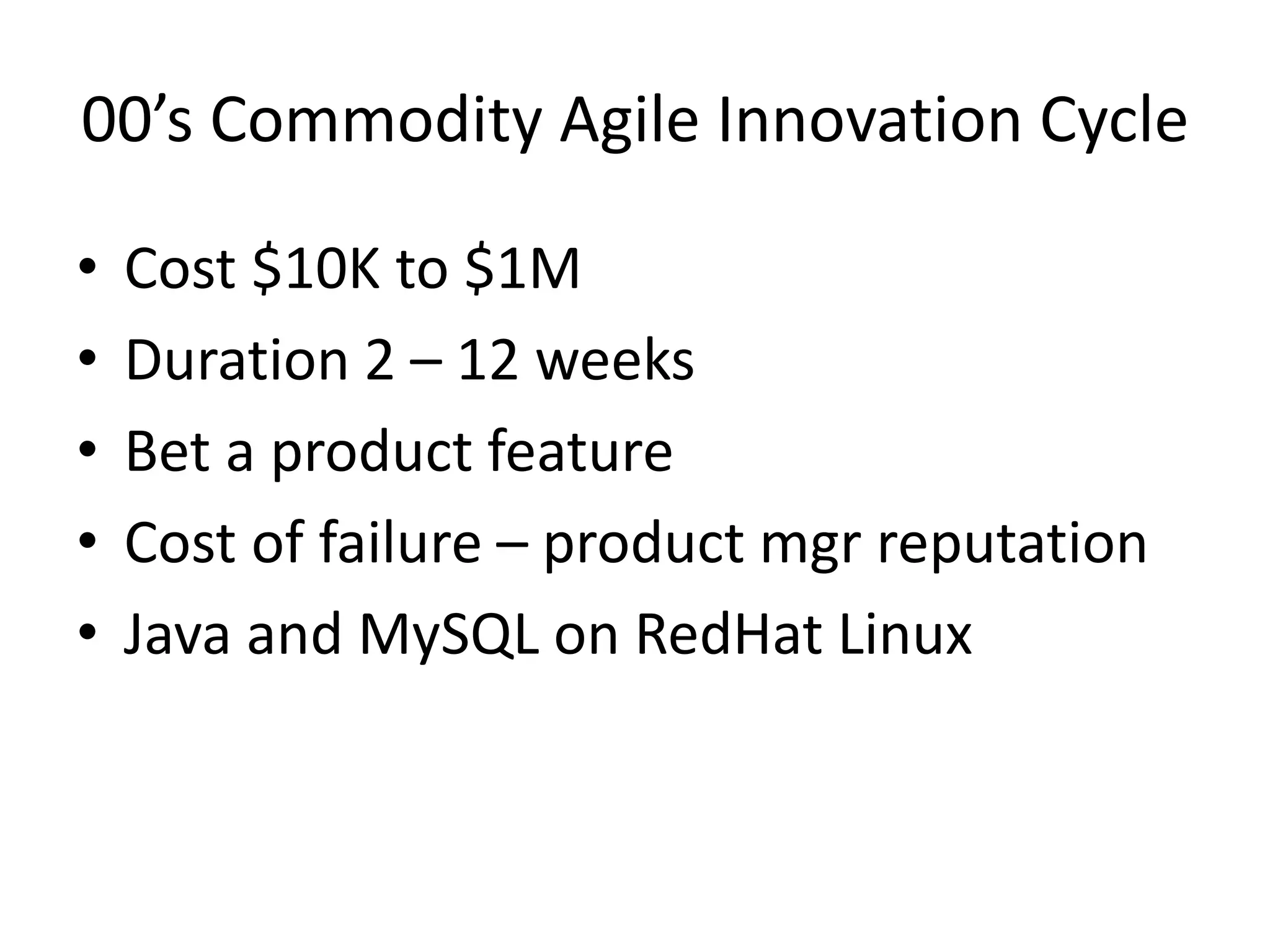

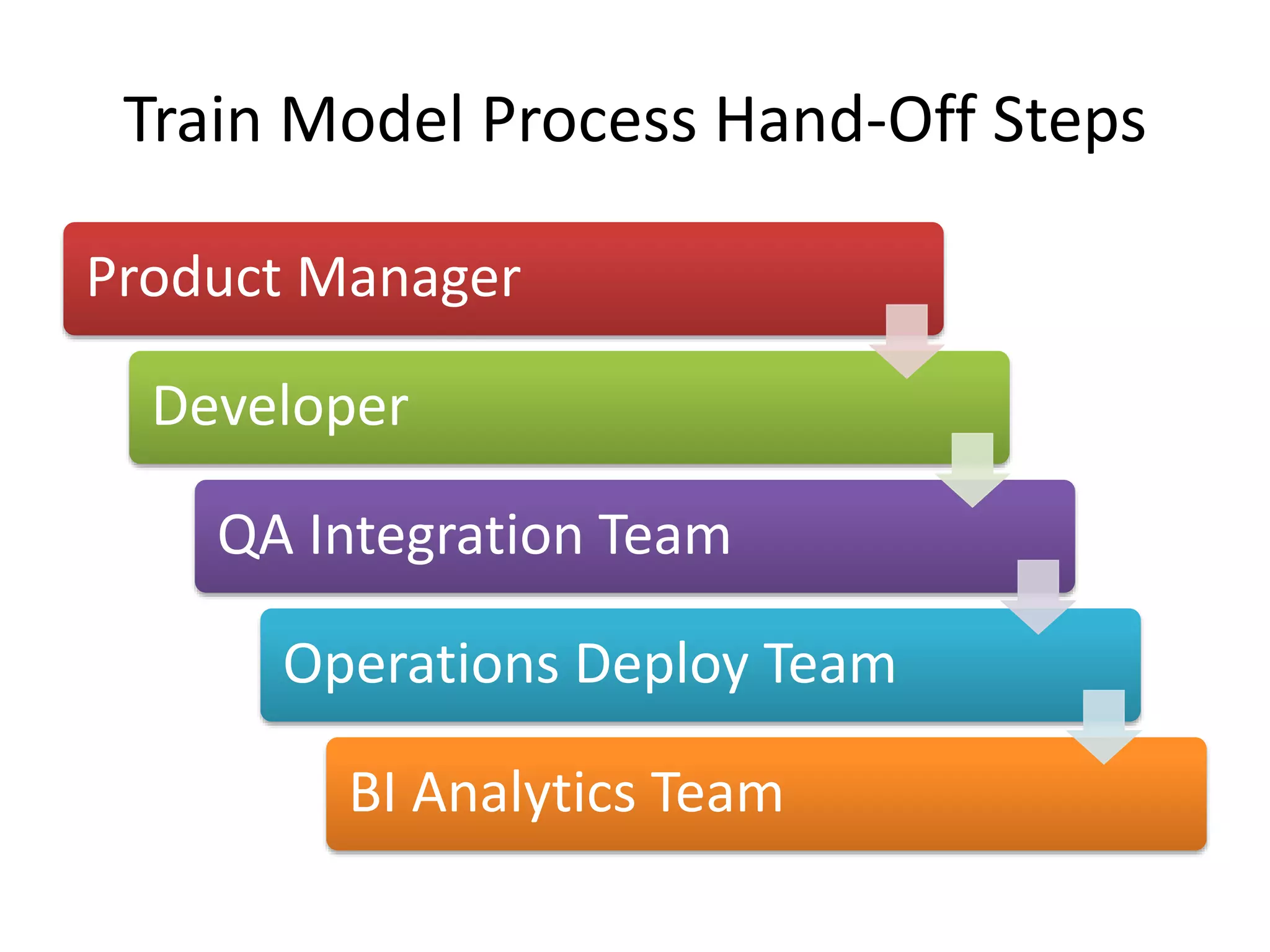

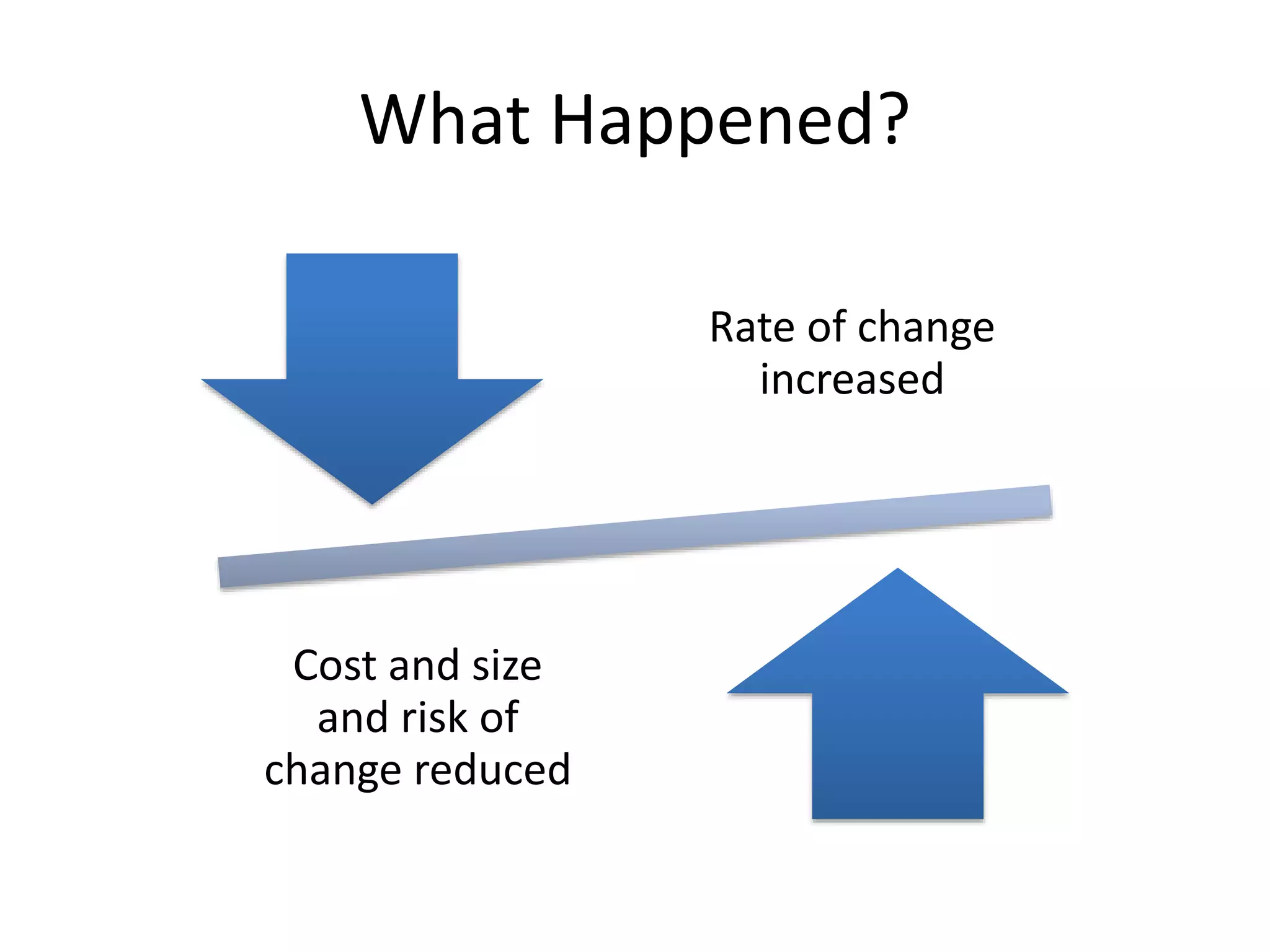

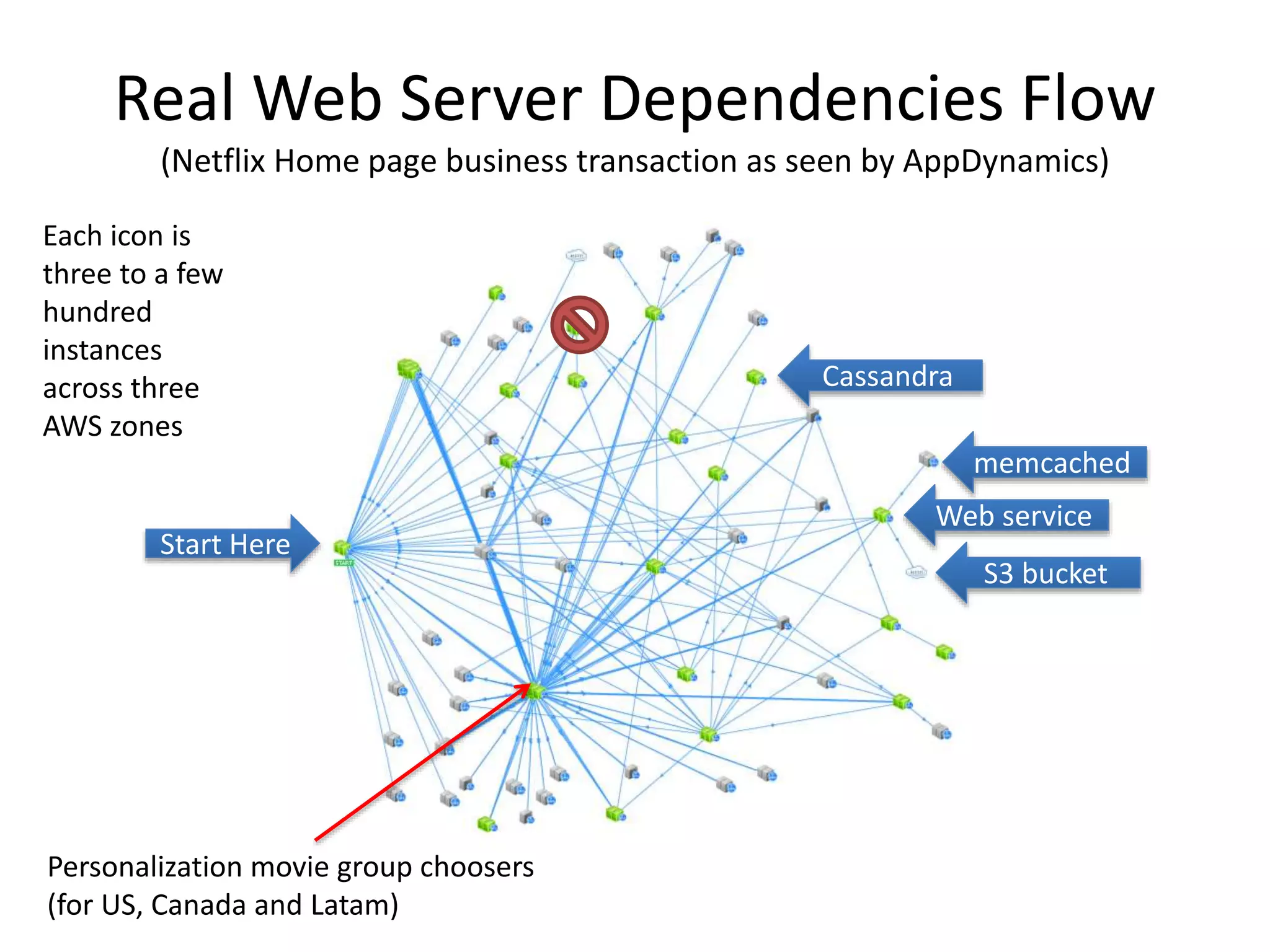

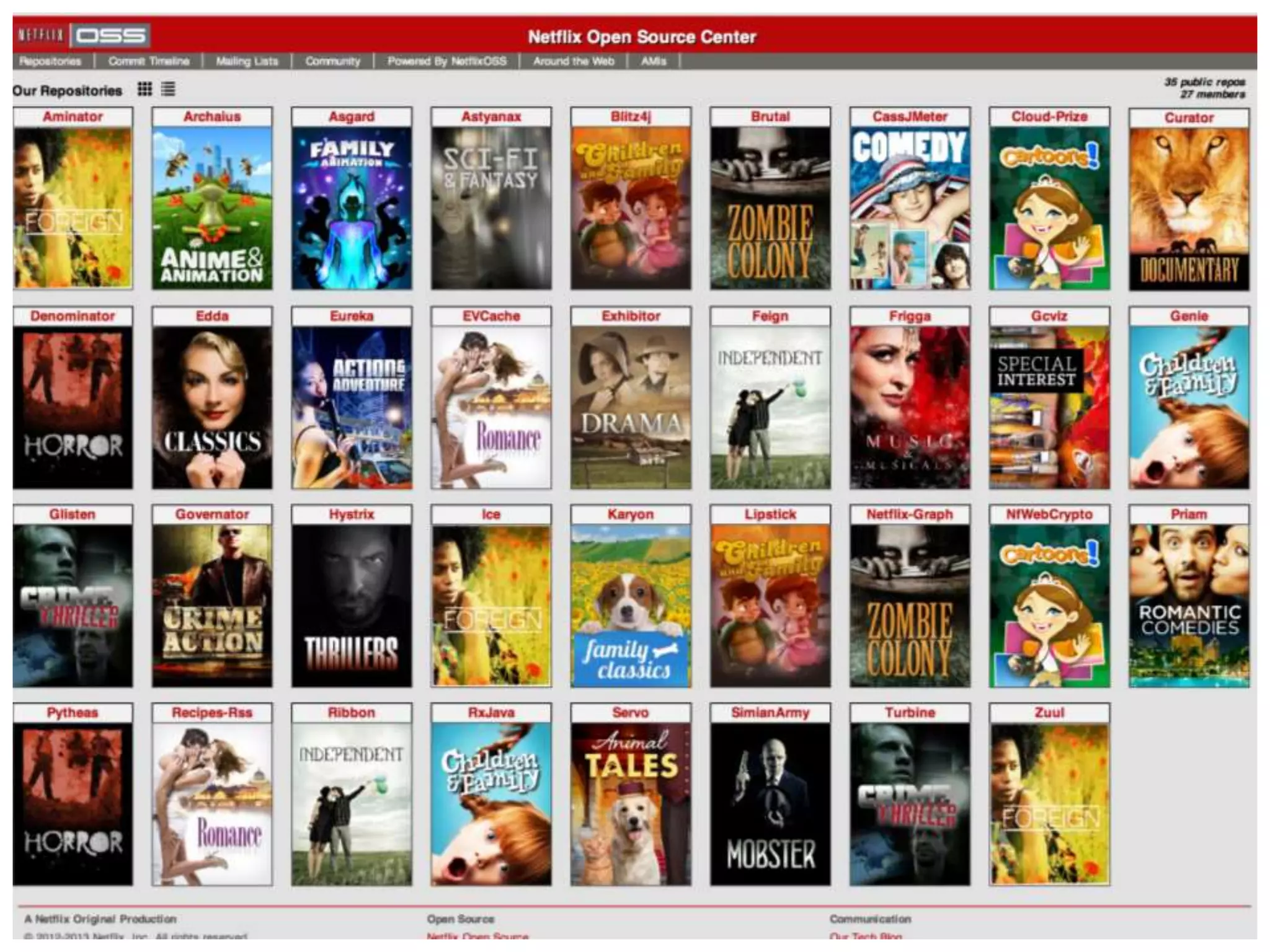

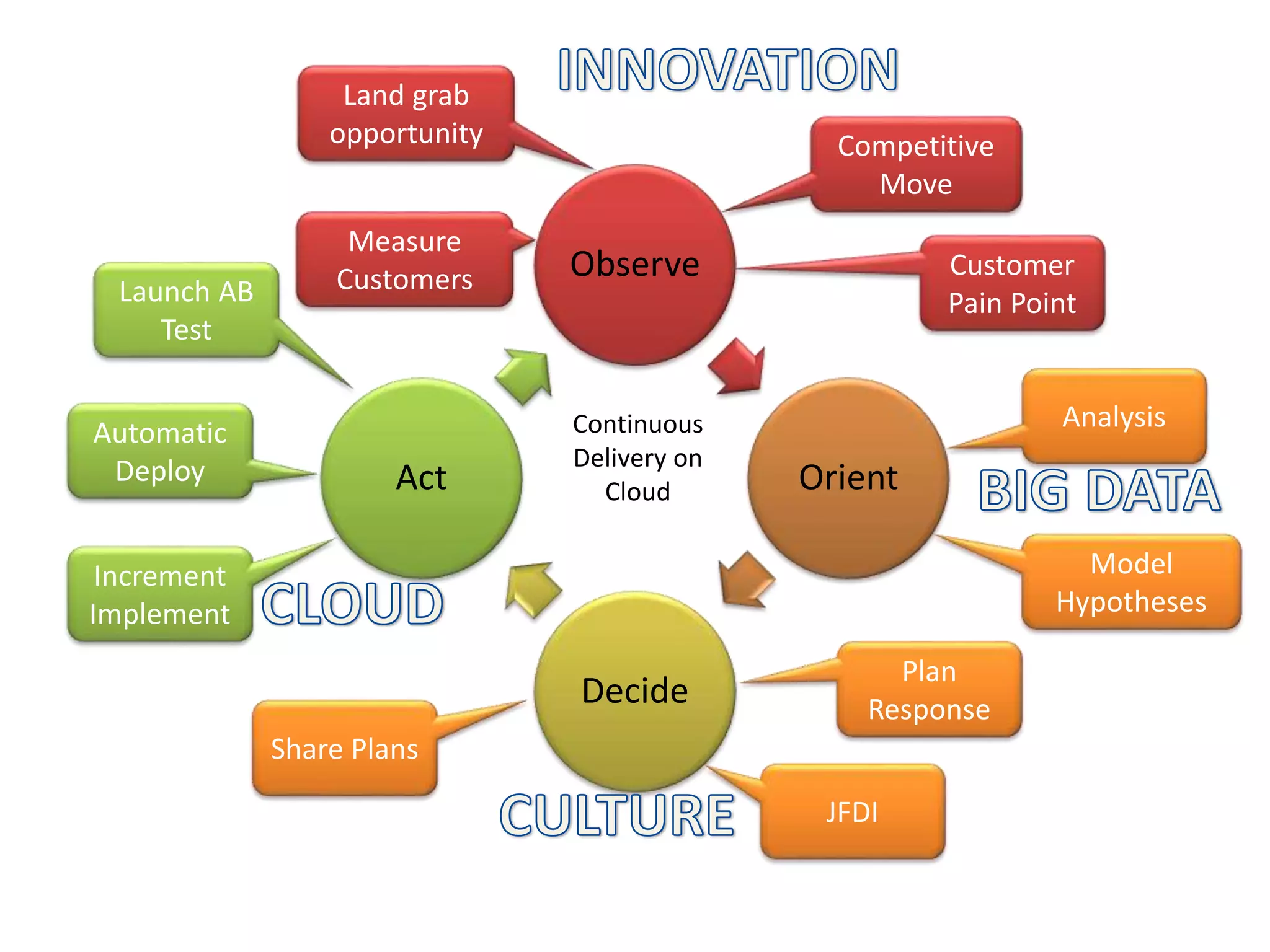

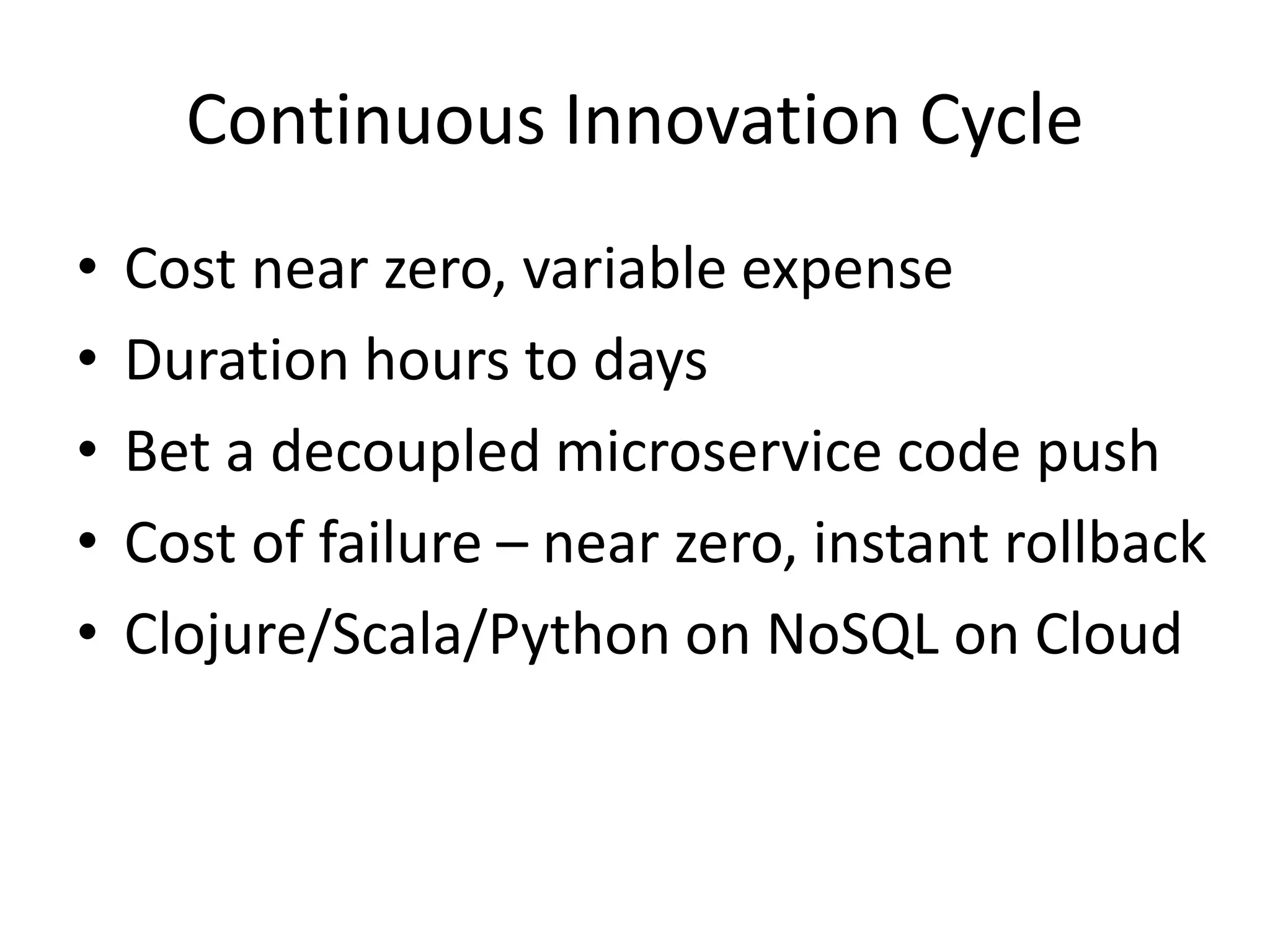

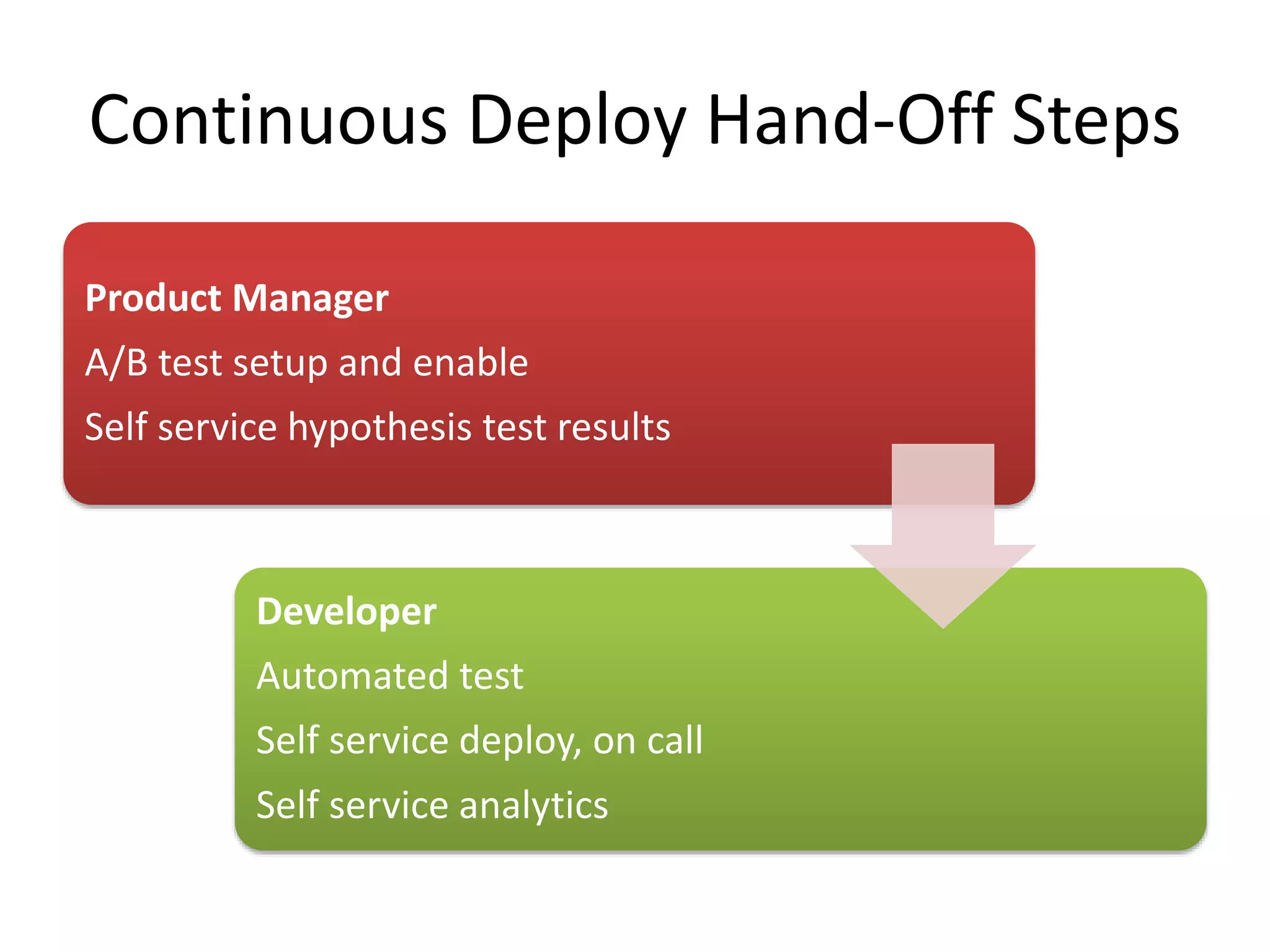

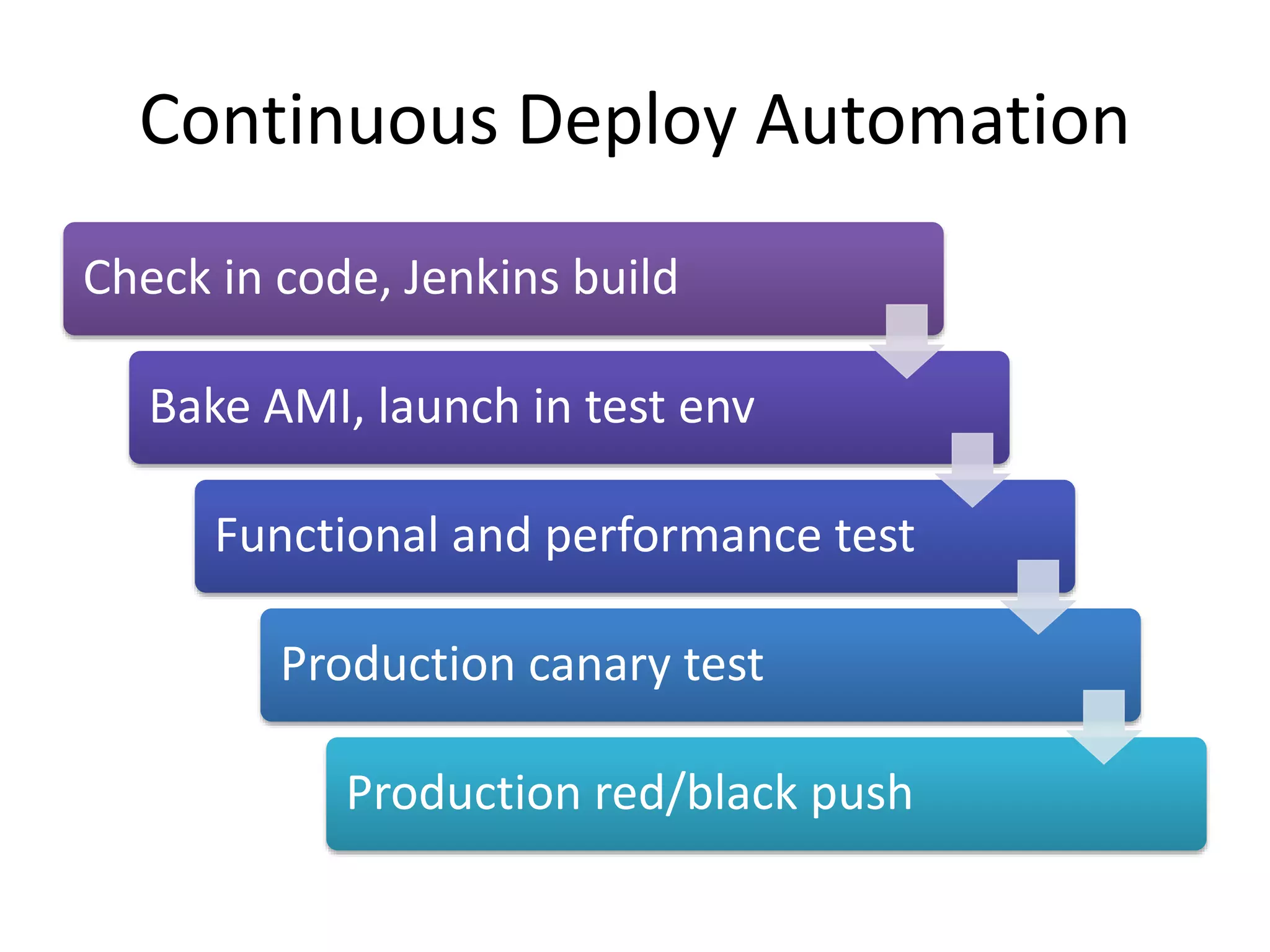

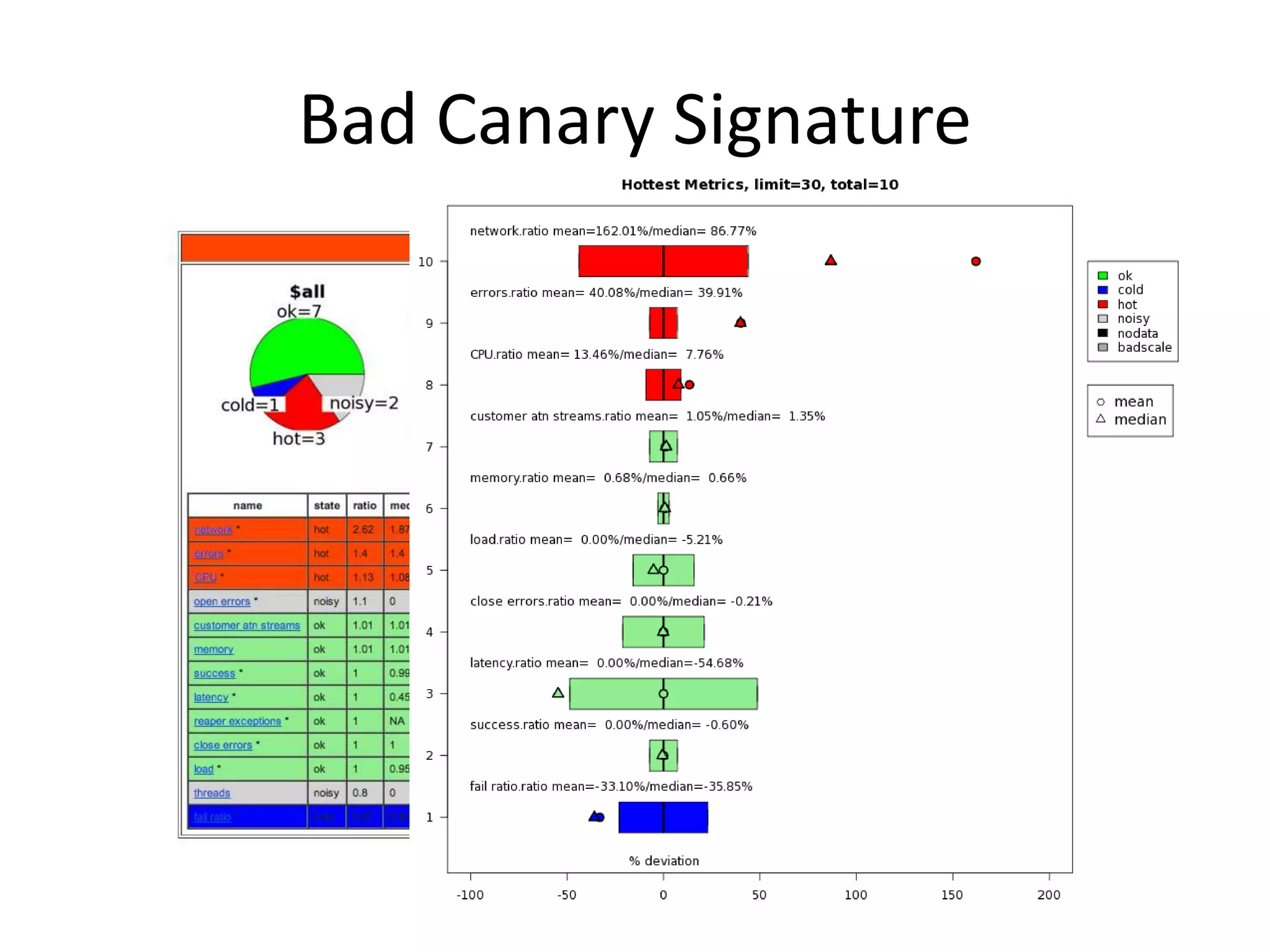

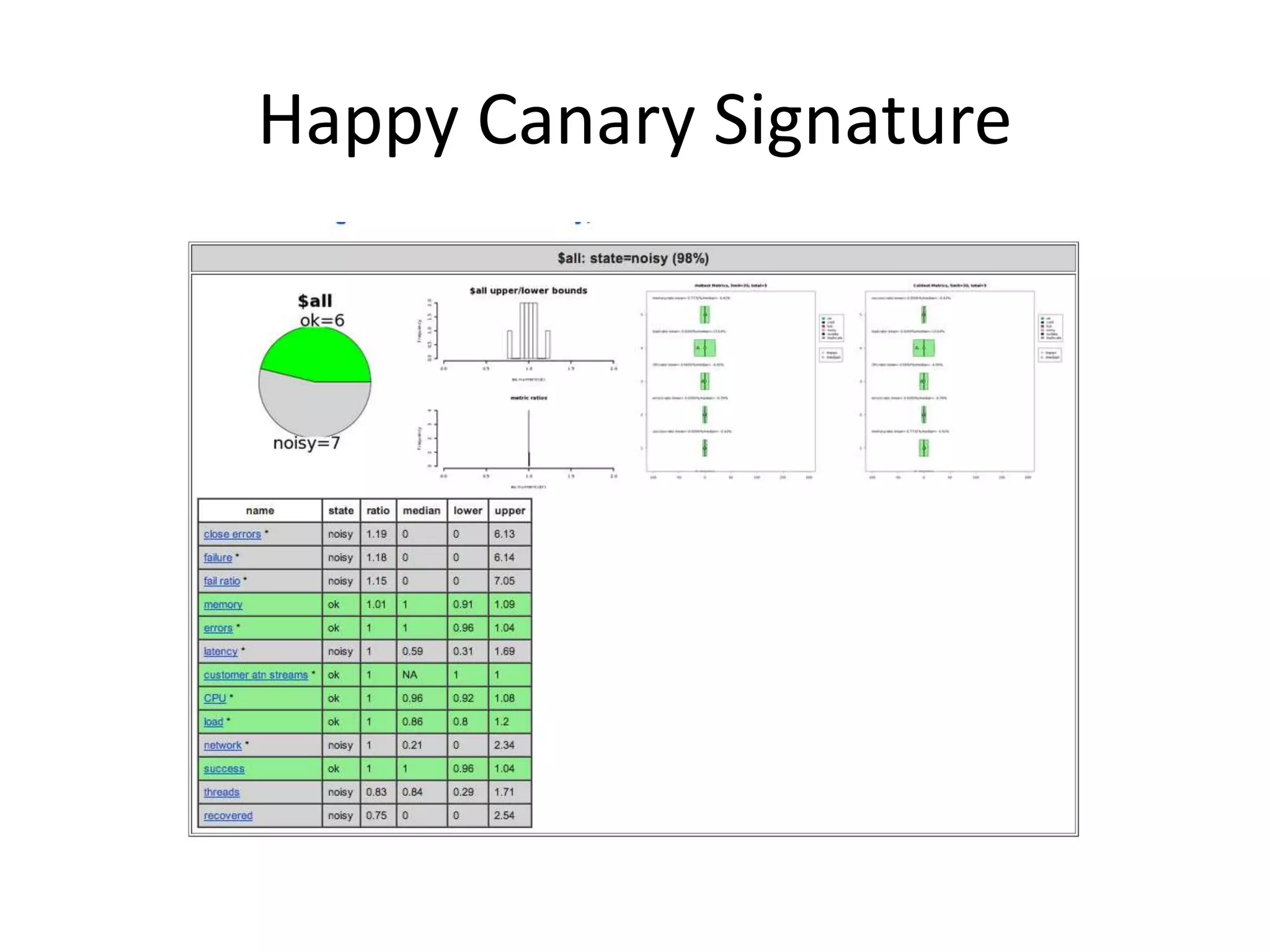

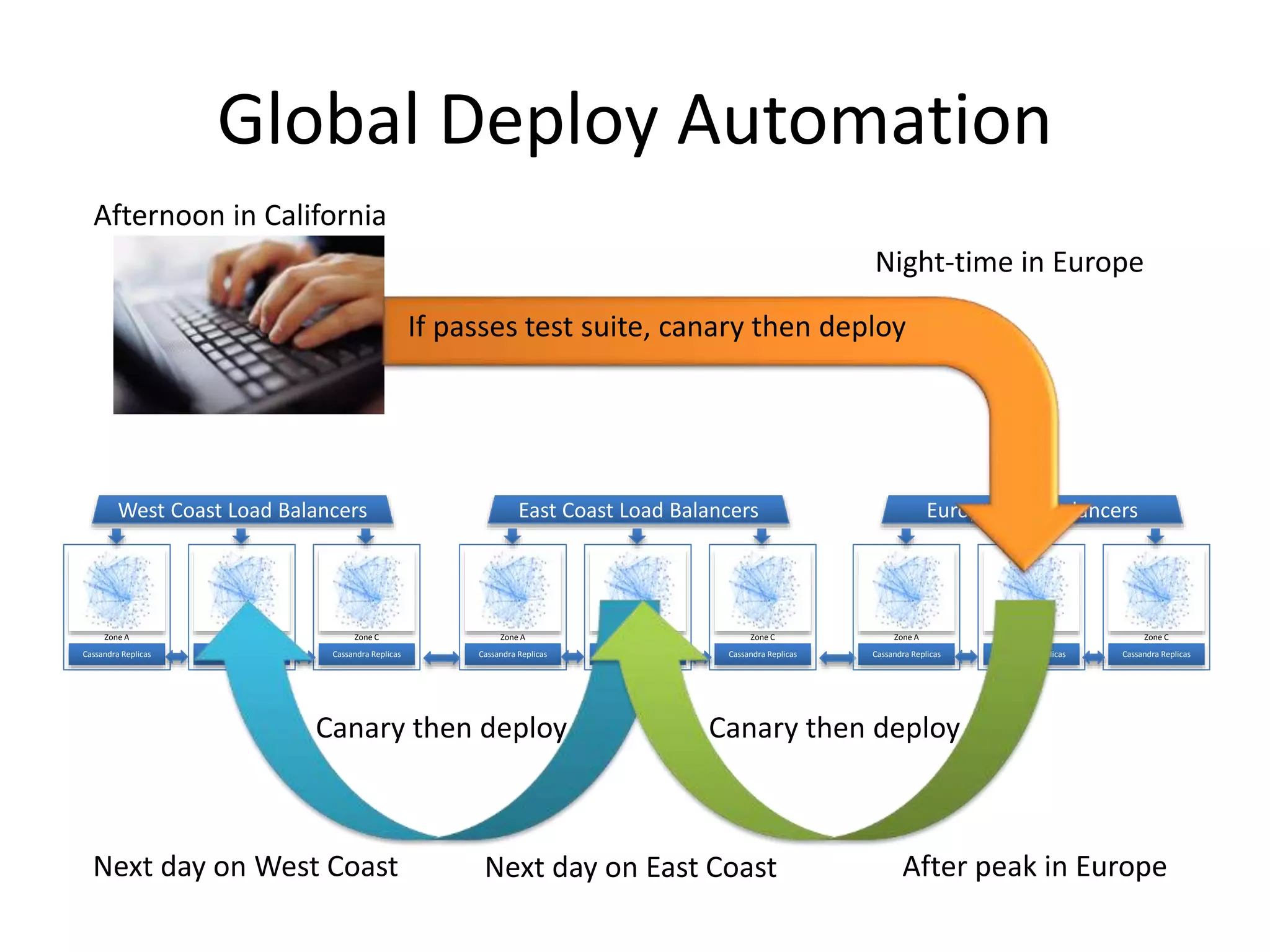

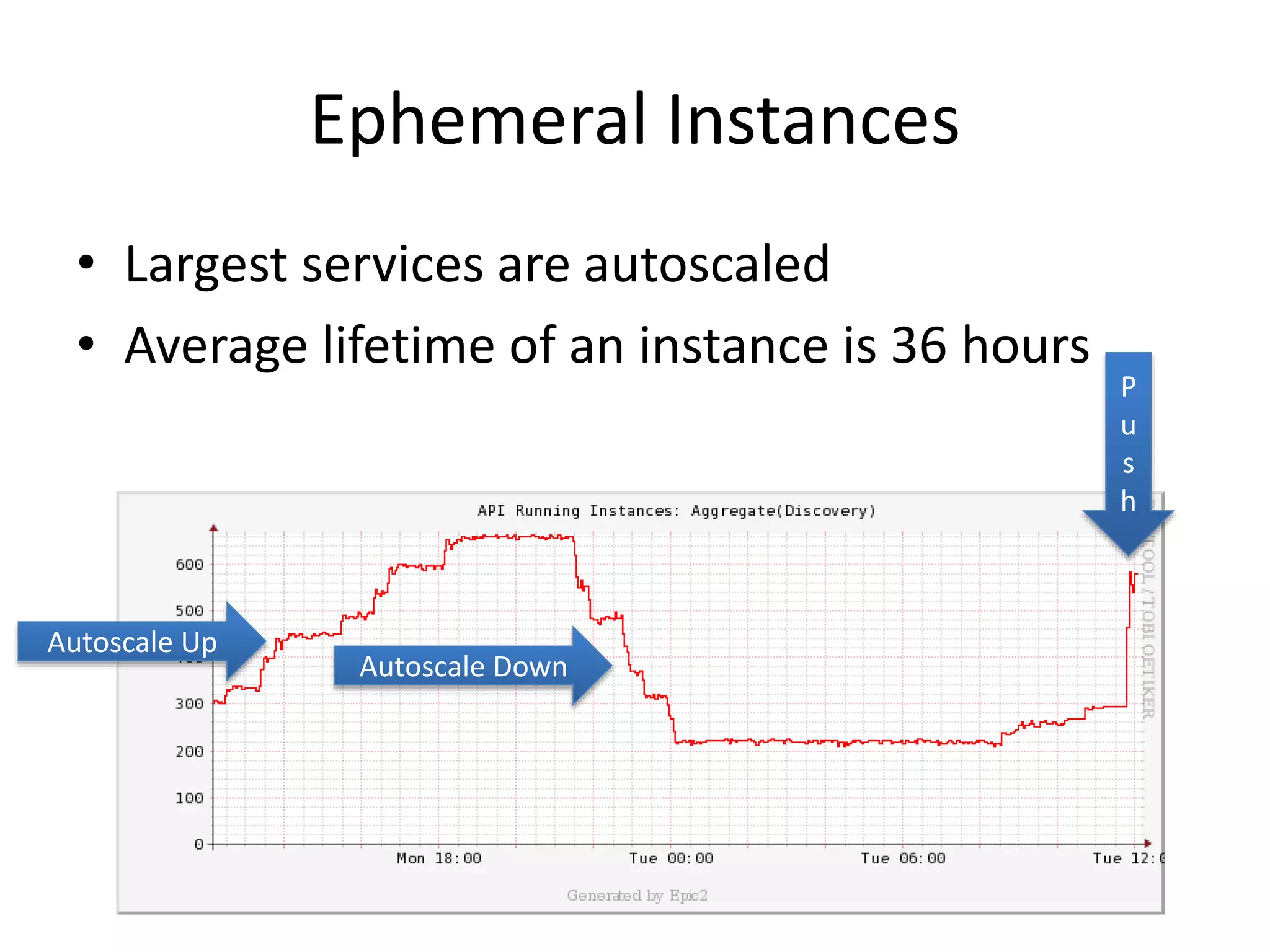

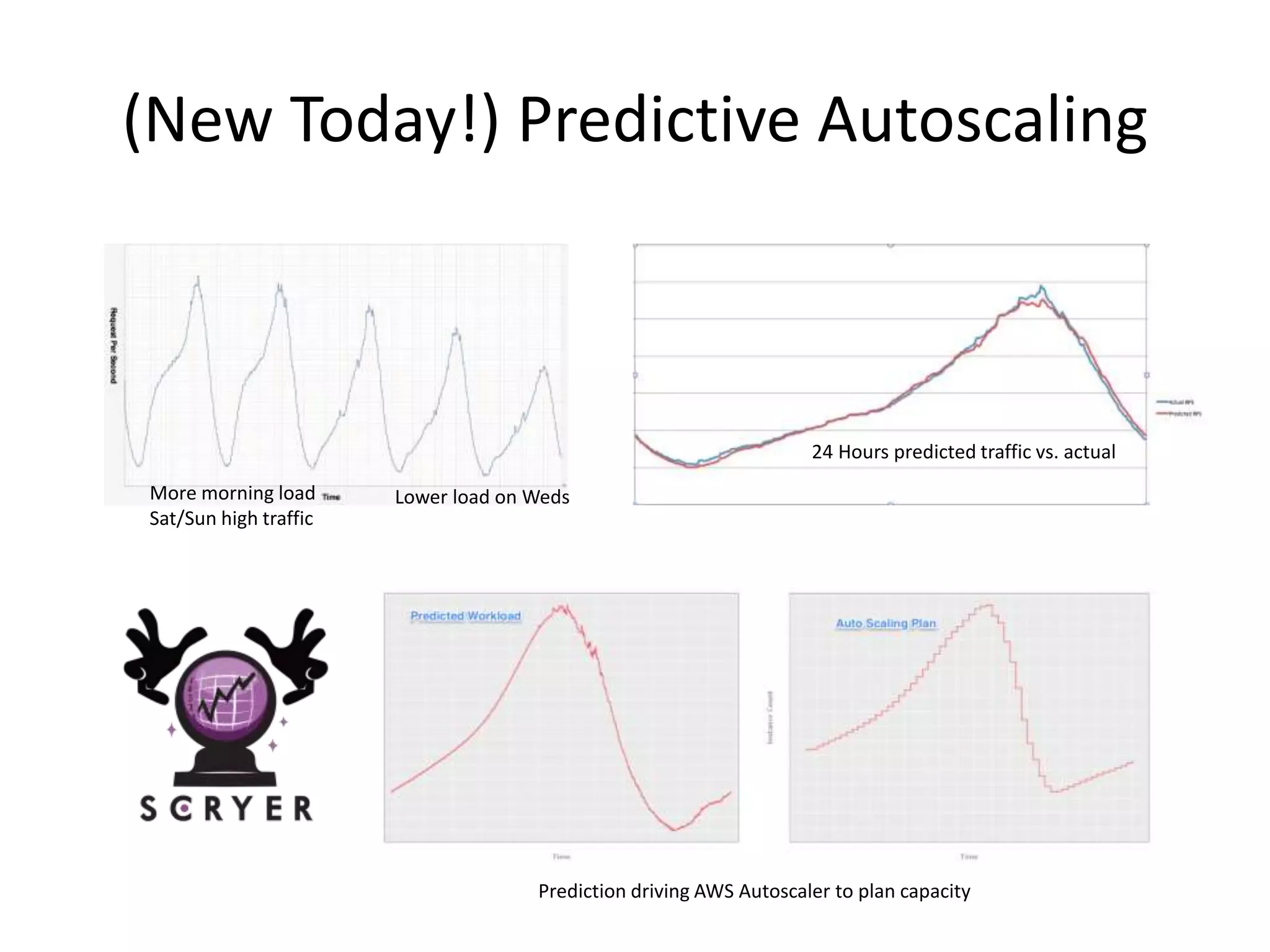

The document discusses Netflix's cloud architecture and operational strategies, emphasizing its transition to a microservices model built on AWS and DevOps practices. It traces the evolution of IT cycles from mainframe to cloud-native approaches and details the challenges Netflix faced while scaling, along with the solutions it adopted. Key elements include automation, continuous deployment, and customer-centric metrics for optimizing performance and service delivery.