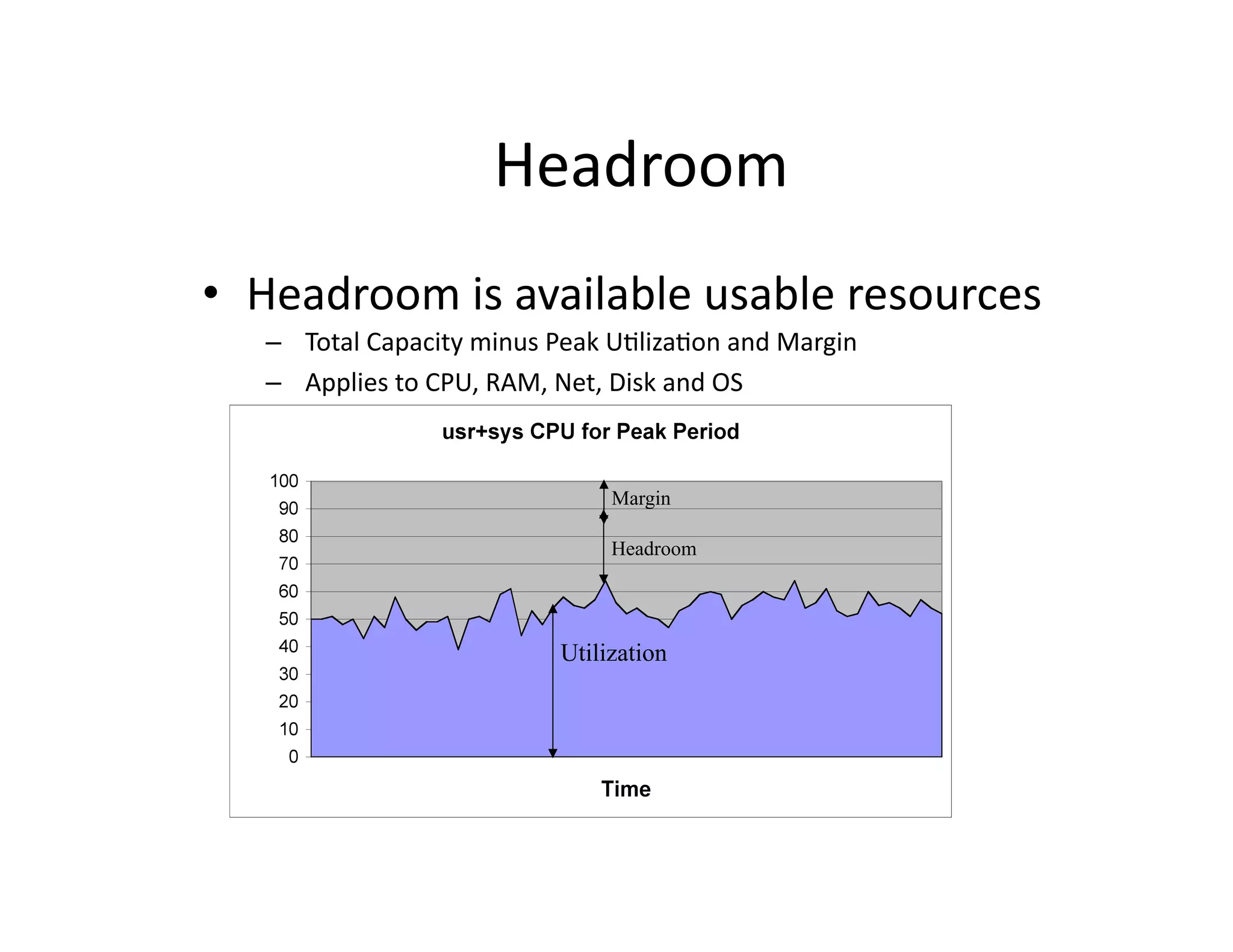

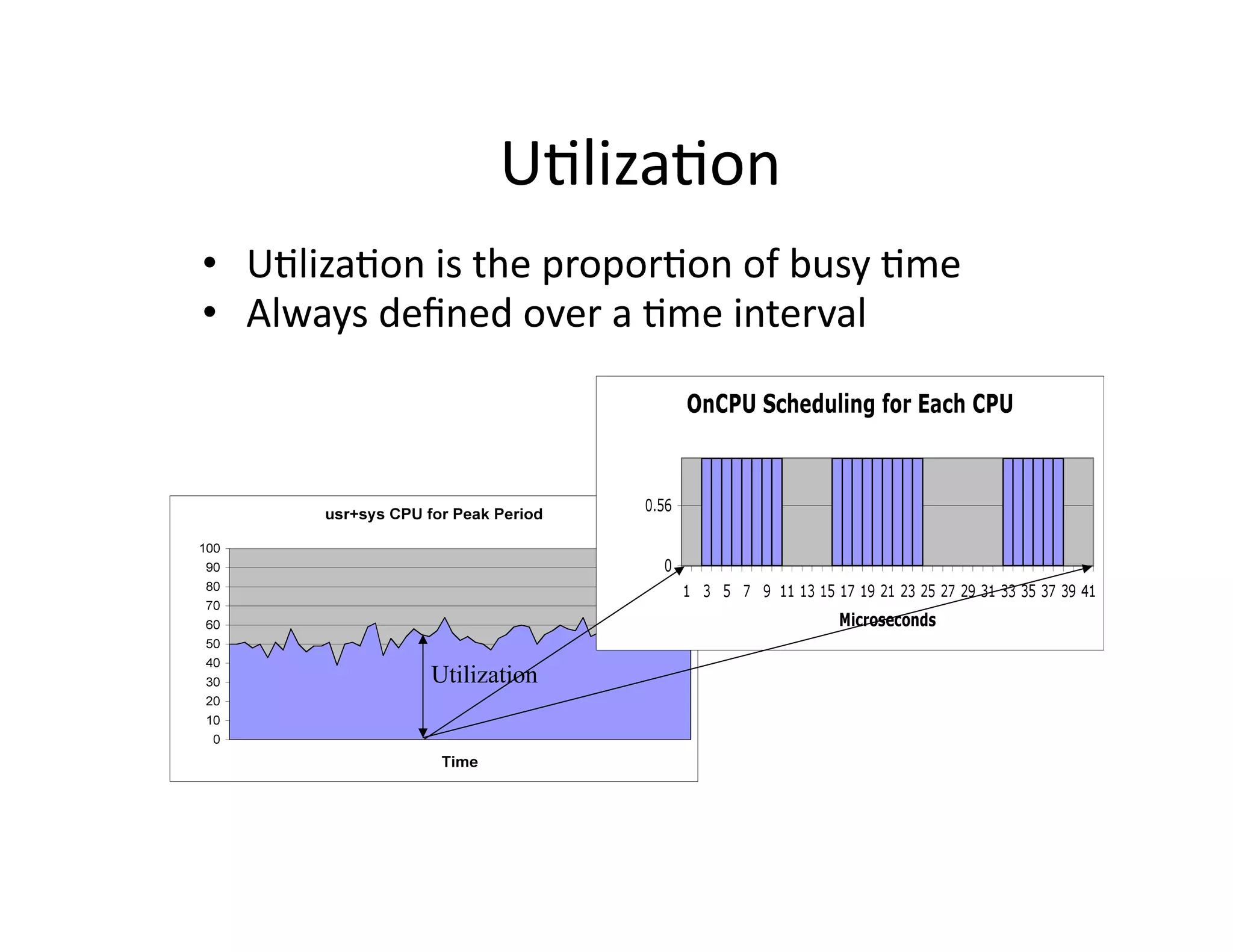

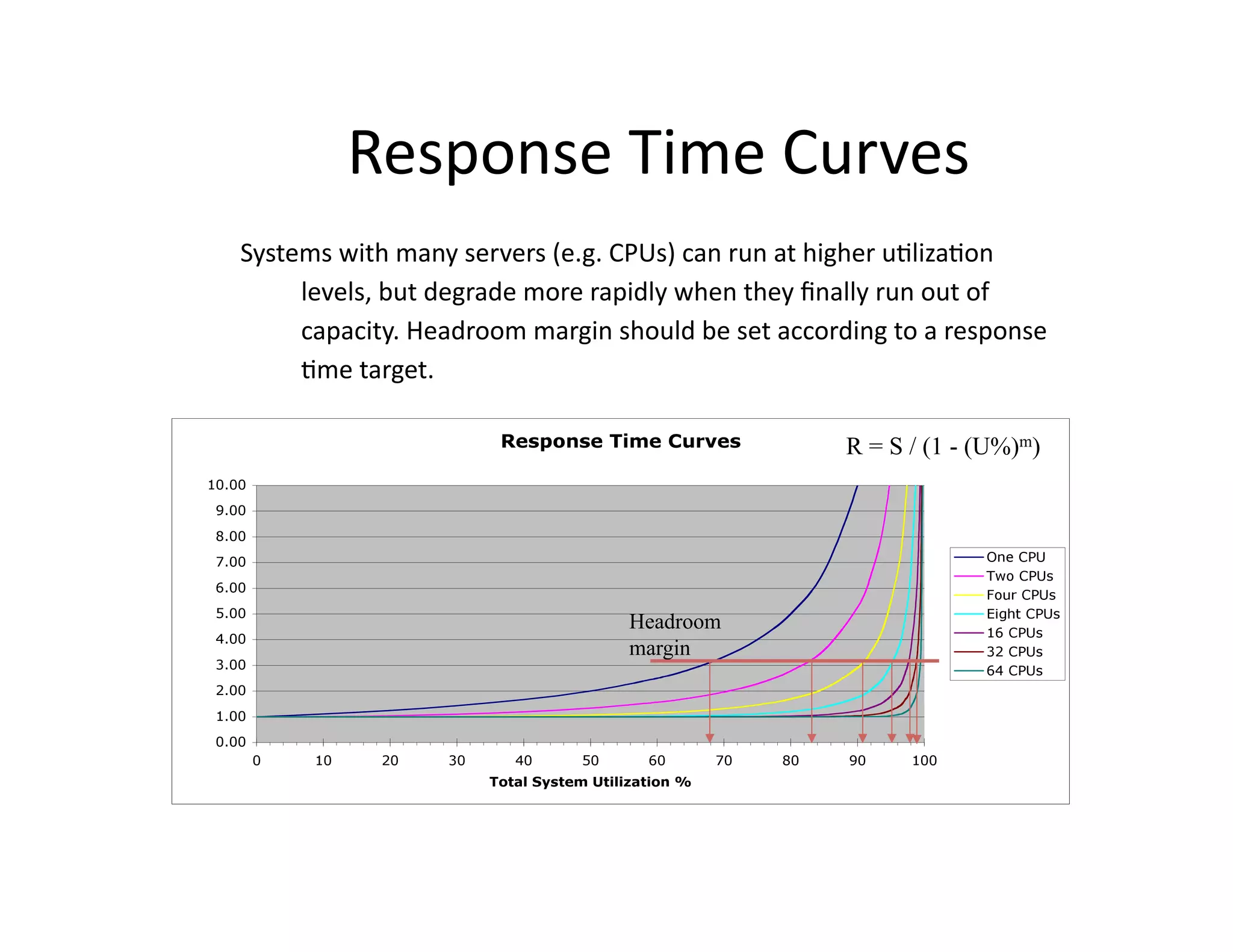

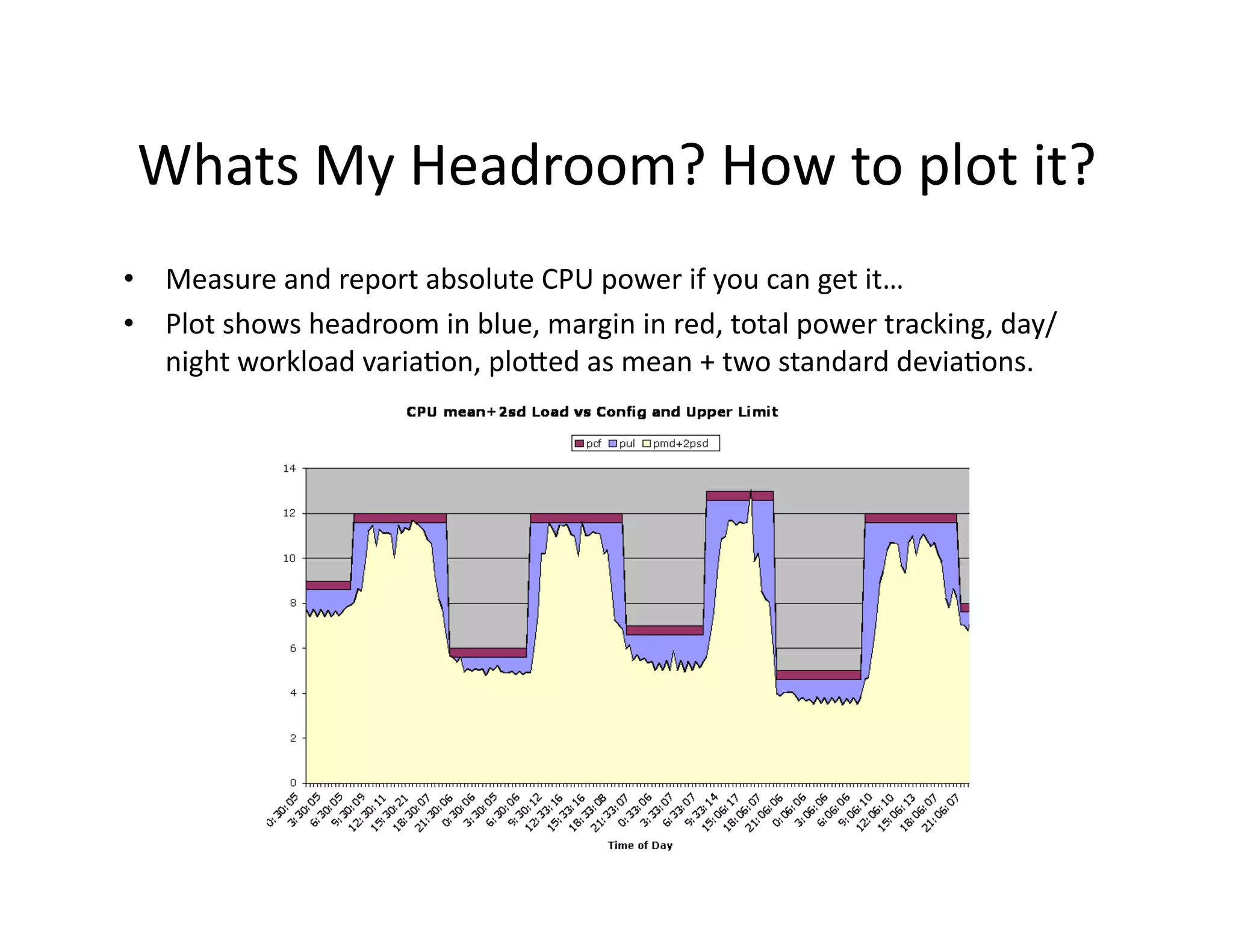

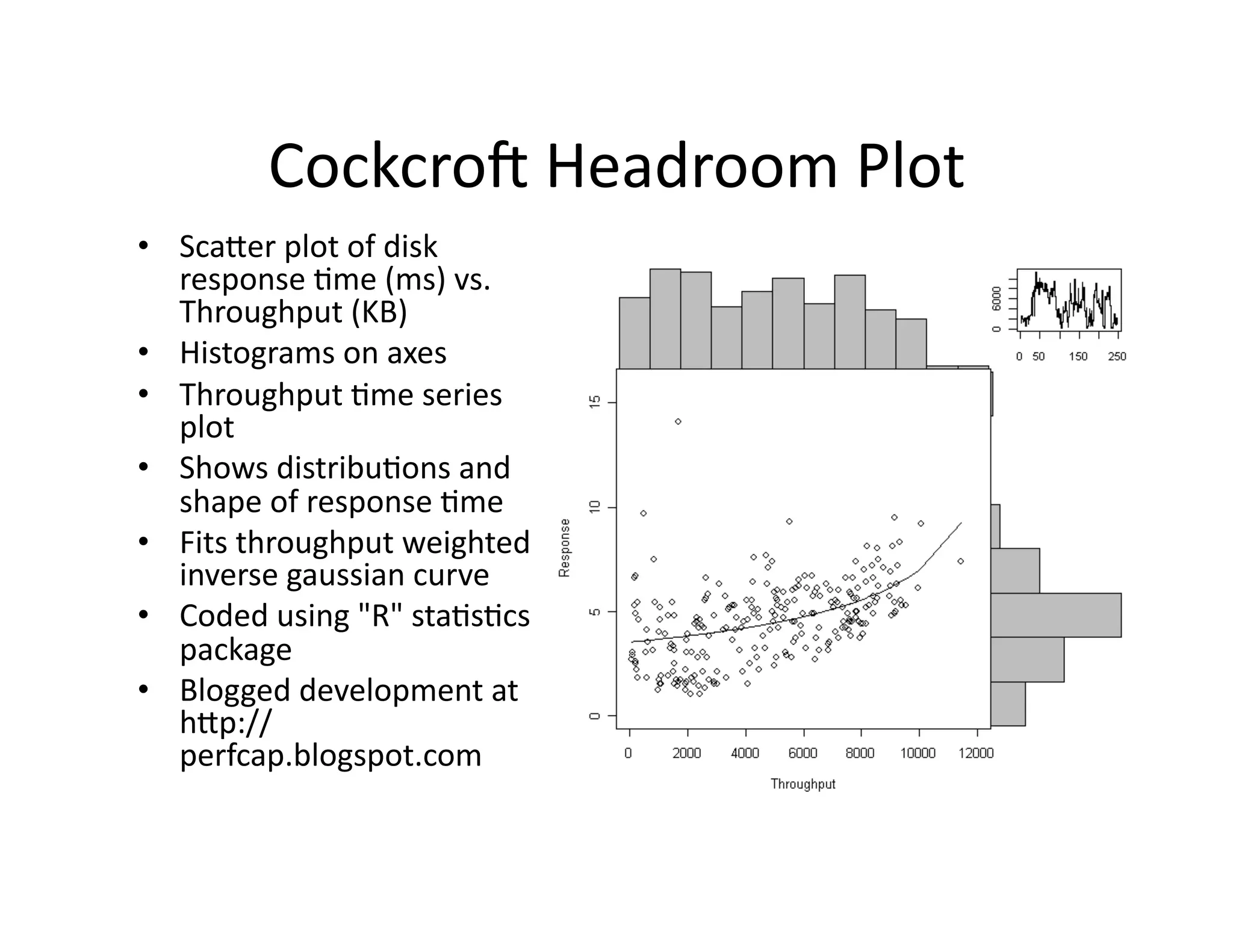

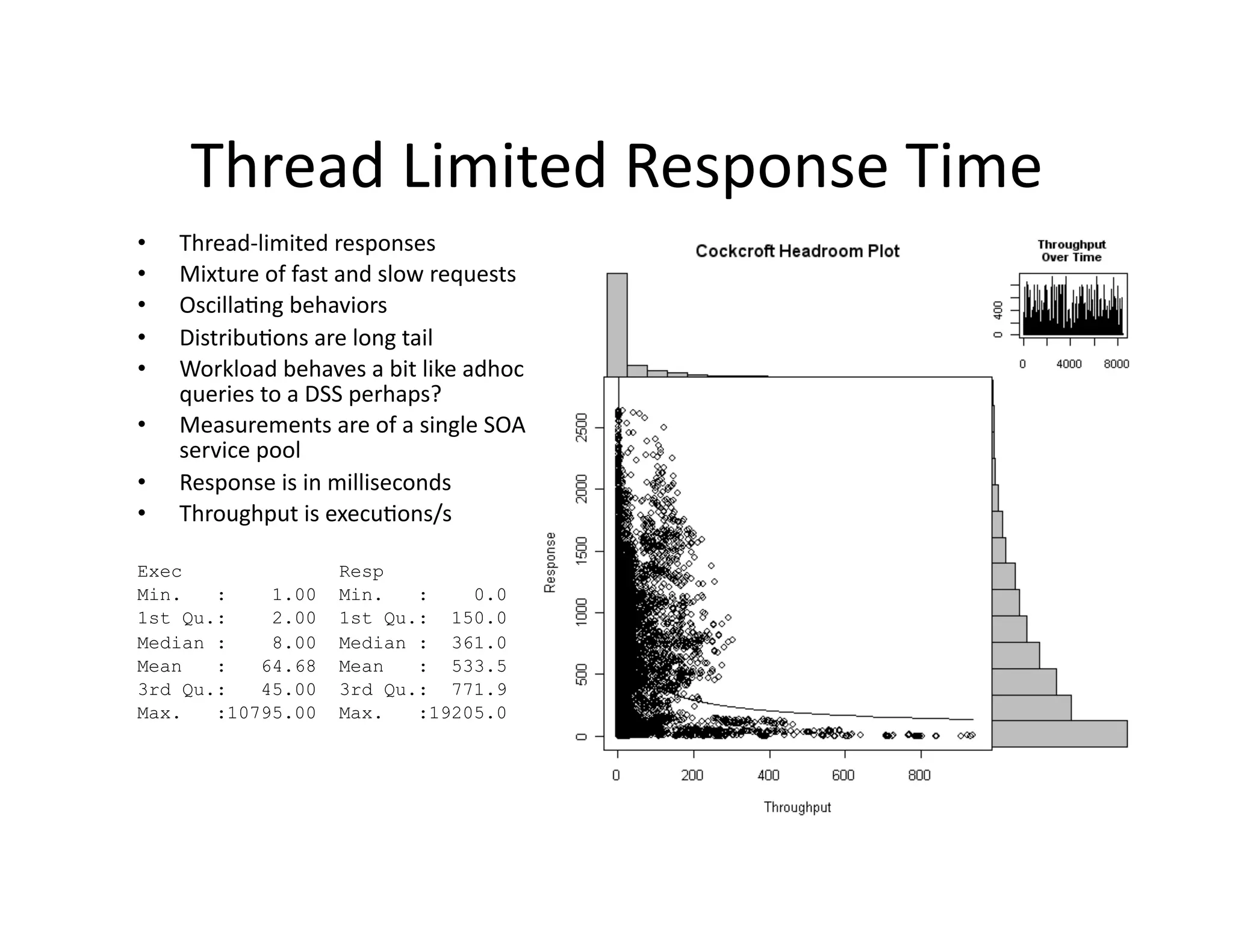

The document discusses several problems with using utilization as a metric for resource usage and performance in modern computing systems. It argues that utilization metrics are broken because they rest on unsafe assumptions about workload characteristics, ignore system architecture features such as multi-core CPUs, and are subject to measurement errors. It suggests alternative metrics that take these factors into account, such as response time and, for storage, capability utilization, to give more accurate performance insight.
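To make the point about multi-core systems concrete, here is a minimal sketch of why the same utilization figure can mean very different response times. It is not taken from the document: it assumes a textbook queueing model with random arrivals and service times, where response time is R = S / (1 - U^m) for service time S, utilization U, and m servers. That expression is exact for one or two servers under those assumptions and only an approximation for more. The function name `response_time` and the 10 ms service time are illustrative choices.

```python
def response_time(service_time, utilization, servers=1):
    """Approximate mean response time for a queue with `servers` servers.

    Assumes random (Poisson) arrivals and exponential service times.
    R = S / (1 - U**m) is exact for m = 1 and m = 2, approximate for m > 2.
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return service_time / (1.0 - utilization ** servers)


if __name__ == "__main__":
    service_time = 0.010  # hypothetical 10 ms of work per request
    for u in (0.50, 0.80, 0.90, 0.95):
        one = response_time(service_time, u)
        four = response_time(service_time, u, servers=4)
        print(f"U={u:.2f}  1 server: {one * 1000:6.1f} ms   "
              f"4 servers: {four * 1000:6.1f} ms")
```

Under these assumptions, a single server at 90% utilization is already close to saturation, while four servers at the same 90% still respond fairly quickly. This is one reason a bare utilization number says little about headroom unless the architecture and workload are also known.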