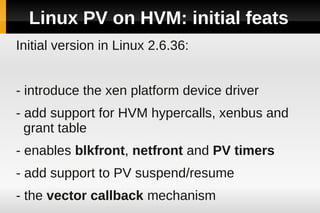

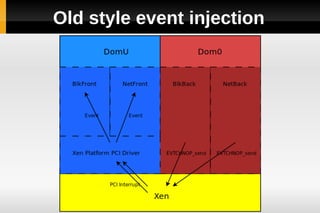

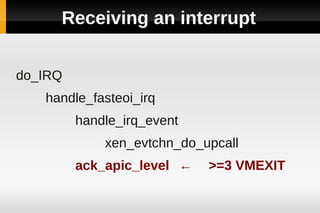

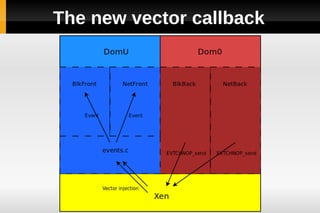

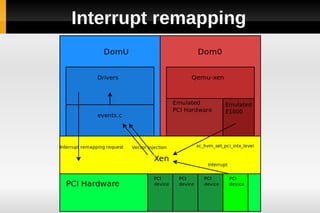

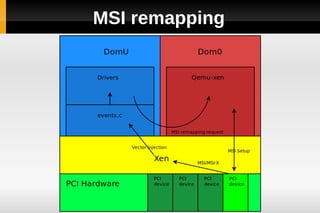

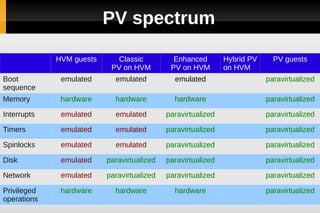

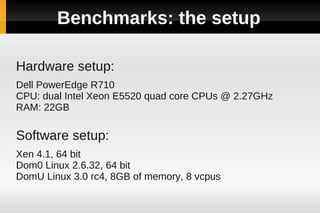

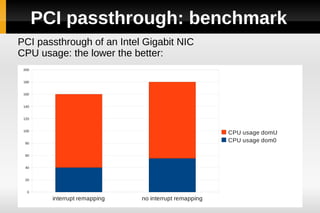

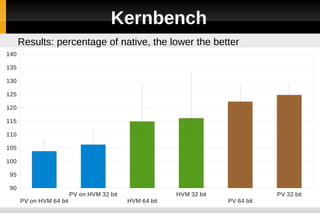

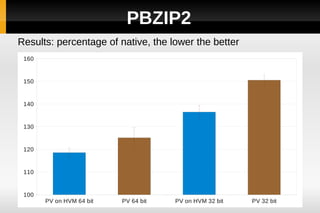

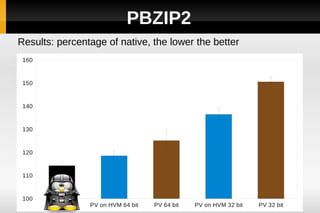

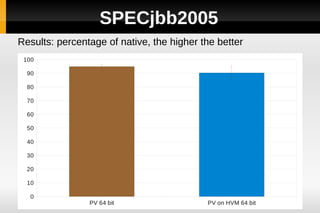

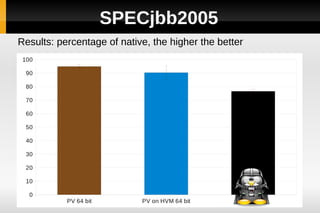

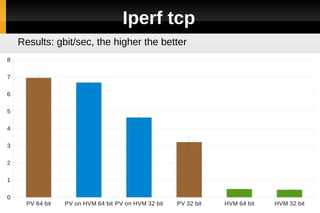

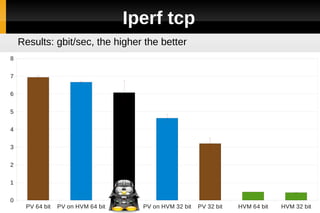

The document discusses the performance and installation issues of Linux PV (paravirtualized) on HVM (hardware virtual machine) guests, highlighting the challenges that pure PV and pure HVM guests each face. It details enhancements made in Linux kernel 2.6.36 and later, such as support for Xen platform device drivers and interrupt remapping, and presents benchmarks comparing guest performance. It concludes that PV on HVM guests perform comparably to traditional PV guests in certain benchmarks, particularly those that benefit from nested paging.