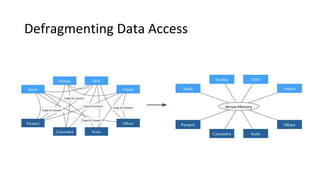

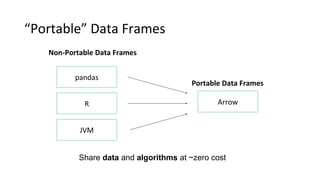

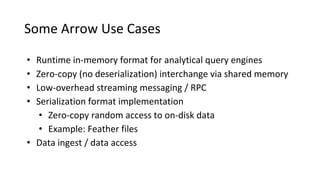

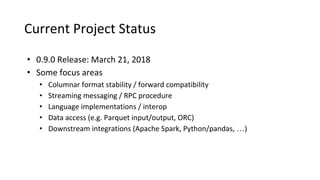

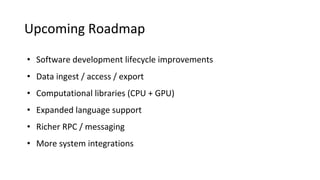

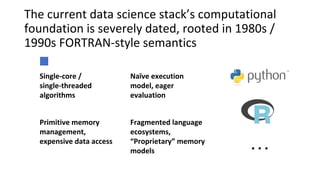

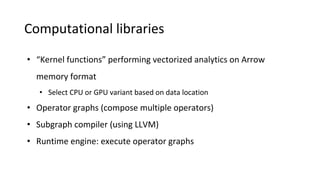

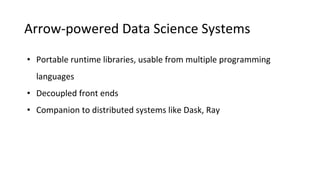

Wes McKinney is the creator of Python's pandas project and a primary developer of Apache Arrow, Apache Parquet, and other open-source projects. Apache Arrow is an open-source, cross-language development platform for in-memory analytics that aims to improve data science tools. Its columnar memory format and accompanying libraries give different languages a shared standard for exchanging and computing on in-memory data. Arrow is seeing growing adoption in data science systems, and the project is working to expand its language support and computational capabilities.
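
To make the idea of a columnar memory format more concrete, here is a minimal sketch using only the Python standard library. It is not Arrow's actual format or API; the field names (`id`, `price`) and sample values are invented for illustration. It only shows the general idea of storing each field in its own contiguous typed buffer and sharing that buffer without copying.

```python
from array import array

# Row-oriented layout: one Python dict per record (hypothetical sample data).
rows = [
    {"id": 1, "price": 9.5},
    {"id": 2, "price": 12.0},
    {"id": 3, "price": 7.25},
]

# Column-oriented layout: one contiguous, typed buffer per field.
columns = {
    "id": array("q", (r["id"] for r in rows)),        # 64-bit signed integers
    "price": array("d", (r["price"] for r in rows)),  # 64-bit floats
}

# An aggregate over one field scans a single contiguous buffer.
total_price = sum(columns["price"])

# A memoryview exposes the same bytes to another consumer without copying.
price_view = memoryview(columns["price"])

# Writing through the array is visible through the view: both share one buffer.
columns["price"][0] = 10.0
shared_first_price = price_view[0]
```

In this toy version the "shared standard" is just Python's buffer protocol; Arrow's contribution, as summarized above, is defining such a layout so that it can be shared across languages and libraries.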