This document provides an overview of learning analytics, including:

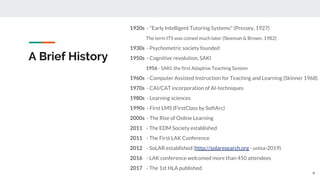

- A brief history of learning analytics and related fields from the 1920s to the present day.

- Drivers of the increased focus on learning analytics, such as big data, personalized learning, and demands that educational institutions demonstrate their performance.

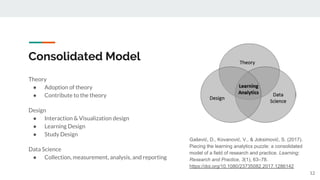

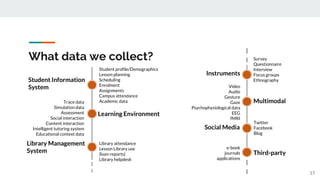

- A definition of learning analytics as "the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs."

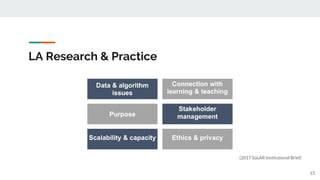

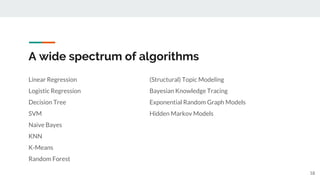

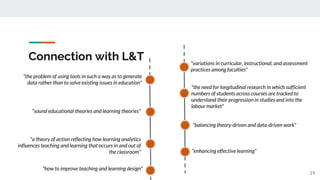

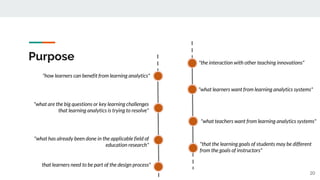

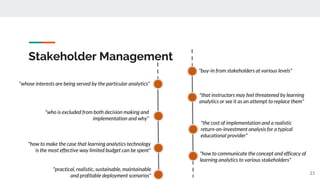

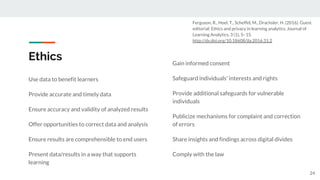

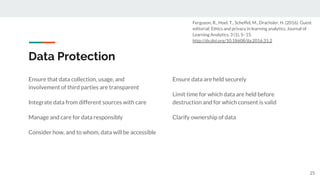

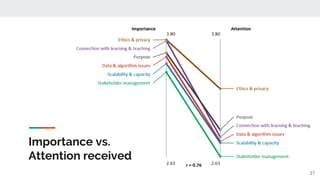

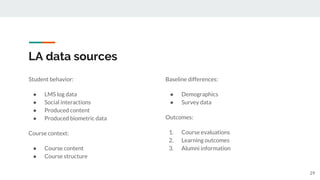

- Key dimensions on which learning analytics research and practice focus, such as data sources and methods, connection to learning and teaching, purpose, stakeholder management, and ethics.