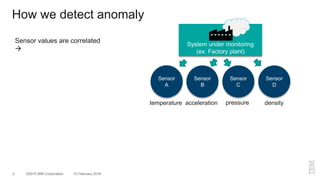

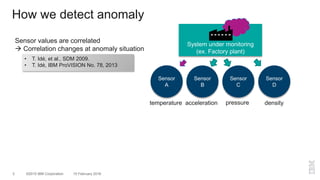

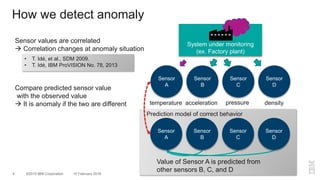

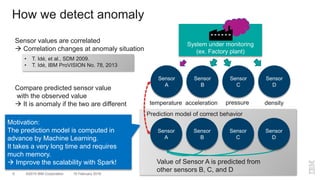

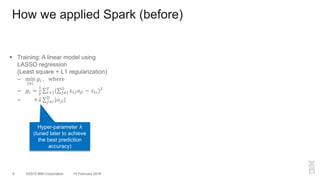

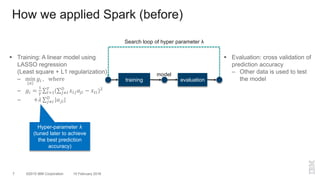

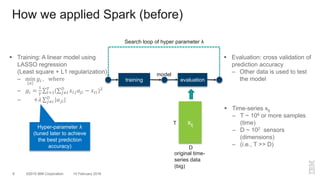

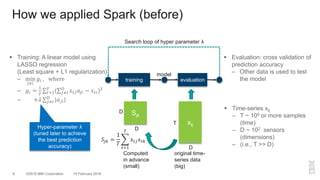

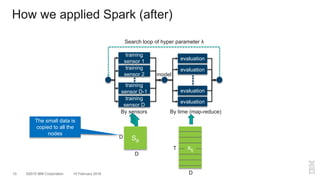

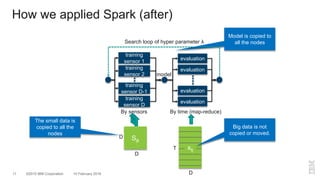

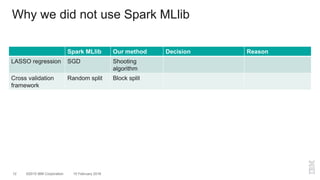

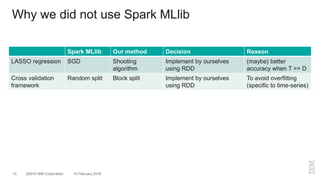

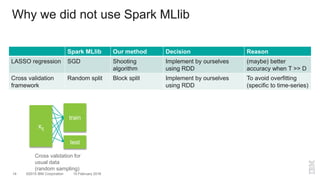

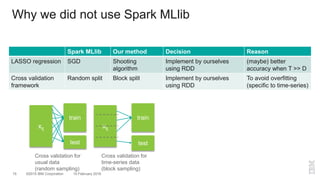

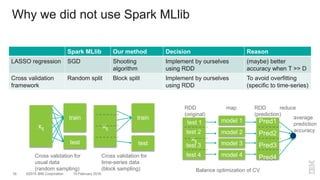

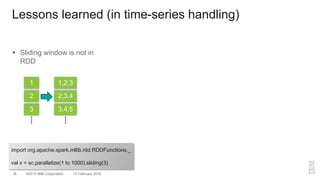

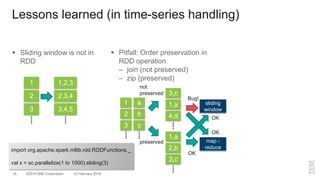

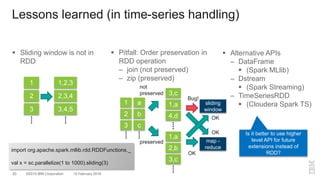

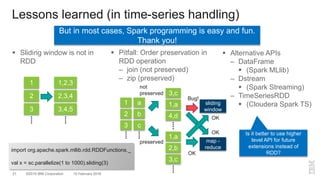

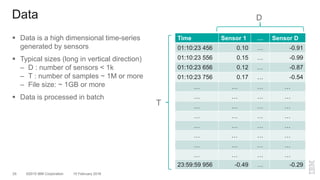

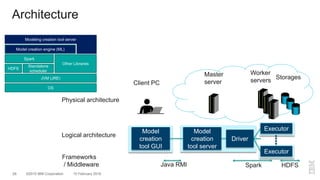

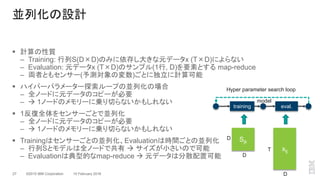

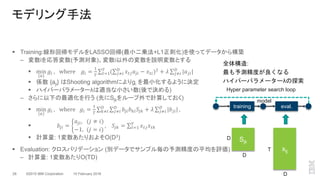

The document outlines an approach to building scalable anomaly detection for time-series data with Apache Spark. It describes a prediction model that compares observed sensor data with predicted values to identify anomalies, and details how the model is trained with lasso regression. It also highlights the performance improvements achieved and the challenges encountered along the way, such as preserving element order in RDD operations and the use of alternative APIs.
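The core idea above can be sketched locally without Spark: train a regression model, predict each sensor reading, and flag readings that stray too far from the prediction. This is a minimal single-machine illustration under stated assumptions, not the document's implementation. The lasso is fitted here by cyclic coordinate descent, and the fixed residual `threshold` and the helper names (`fit_lasso`, `predict`, `flag_anomalies`) are hypothetical. On a Spark cluster, a comparable model would more likely come from MLlib's `LinearRegression` with `elasticNetParam=1.0`, which gives a pure L1 (lasso) penalty.

```python
import statistics


def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam (the lasso proximal step)."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def fit_lasso(X, y, lam, n_iter=200):
    """Fit y ~ b + X.w by cyclic coordinate descent.

    Minimizes (1/(2n)) * sum((y - b - X.w)**2) + lam * sum(|w_j|);
    the intercept b is not penalized.
    """
    n, p = len(X), len(X[0])
    w = [0.0] * p
    b = statistics.fmean(y)
    # (1/n) * sum_i x_ij^2 for each feature column j.
    col_sq = [sum(row[j] ** 2 for row in X) / n for j in range(p)]
    for _ in range(n_iter):
        for j in range(p):
            if col_sq[j] == 0.0:
                continue  # all-zero column: leave its weight at 0
            # Correlation of feature j with the residual that excludes w_j.
            rho = sum(
                X[i][j] * (y[i] - b - sum(w[k] * X[i][k] for k in range(p) if k != j))
                for i in range(n)
            ) / n
            w[j] = soft_threshold(rho, lam) / col_sq[j]
        # Closed-form update for the unpenalized intercept.
        b = statistics.fmean(
            y[i] - sum(w[k] * X[i][k] for k in range(p)) for i in range(n)
        )
    return w, b


def predict(X, w, b):
    """Predicted value for each row of X."""
    return [b + sum(wj * xj for wj, xj in zip(w, row)) for row in X]


def flag_anomalies(observed, predicted, threshold):
    """Indices where |observed - predicted| exceeds a fixed threshold.

    The fixed absolute threshold is an assumption for illustration.
    """
    return [
        i
        for i, (obs, pred) in enumerate(zip(observed, predicted))
        if abs(obs - pred) > threshold
    ]


# Toy data: the target depends on the first feature only.
X = [[float(i), (-1.0) ** i] for i in range(10)]
y = [2.0 * row[0] + 1.0 for row in X]
w, b = fit_lasso(X, y, lam=0.01)

# Replay the same inputs with one injected spike at index 3.
observed = list(y)
observed[3] += 5.0
anomalies = flag_anomalies(observed, predict(X, w, b), threshold=1.0)
print(w, b, anomalies)
```

The L1 penalty drives the weight of the irrelevant second feature to zero while barely shrinking the first, which is why lasso is a natural fit when many sensor channels may be uninformative. Note that the sketch pairs observed and predicted values purely by position. In a distributed job, operations that shuffle data need not keep the original element order, so keying each reading explicitly (for example by timestamp or sensor ID) before comparing is the safer choice at scale.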